Part 1 First steps

Most data scientists spend more time working on the data than on the algorithms. Most books and courses on machine learning, however, focus on the algorithms. This book addresses this gap in material about the data side of machine learning.

The first part of this book introduces the building blocks for creating training and evaluation data: annotation, active learning, and the human–computer interaction concepts that help humans and machines combine their intelligence most effectively. By the end of chapter 2, you will have built a human-in-the-loop machine learning application for labeling news headlines, completing the cycle from annotating new data to retraining a model and then using the new model to decide which data should be annotated next.

In the remaining chapters, you will learn how you might extend your first application with more sophisticated techniques for data sampling, annotation, and combining human and machine intelligence. The book also covers how to apply the techniques you will learn to different types of machine learning tasks, including object detection, semantic segmentation, sequence labeling, and language generation.

1 Introduction to human-in-the-loop machine learning

This chapter covers

- Annotating unlabeled data to create training, validation, and evaluation data

- Sampling the most important unlabeled data items (active learning)

- Incorporating human–computer interaction principles into annotation

- Implementing transfer learning to take advantage of information in existing models

Unlike robots in the movies, most of today’s artificial intelligence (AI) cannot learn by itself; instead, it relies on intensive human feedback. Probably 90% of machine learning applications today are powered by supervised machine learning. This figure covers a wide range of use cases. An autonomous vehicle can drive you safely down the street because humans have spent thousands of hours telling it when its sensors are seeing a pedestrian, moving vehicle, lane marking, or other relevant object. Your in-home device knows what to do when you say “Turn up the volume” because humans have spent thousands of hours telling it how to interpret different commands. And your machine translation service can translate between languages because it has been trained on thousands (or maybe millions) of human-translated texts.

Compared with the past, our intelligent devices are learning less from programmers who are hardcoding rules and more from examples and feedback given by humans who do not need to code. These human-encoded examples, the training data, are used to train machine learning models and make them more accurate for their given tasks. But programmers still need to create the software that collects the feedback from nontechnical humans, which raises one of the most important questions in technology today: What are the right ways for humans and machine learning algorithms to interact to solve problems? After reading this book, you will be able to answer this question for many of the use cases that you might face in machine learning.

Annotation and active learning are the cornerstones of human-in-the-loop machine learning. They specify how you elicit training data from people and determine the right data to put in front of people when you don’t have the budget or time for human feedback on all your data. Transfer learning allows us to avoid a cold start, adapting existing machine learning models to our new task rather than starting at square one. We will introduce each of these concepts in this chapter.

1.1 The basic principles of human-in-the-loop machine learning

Human-in-the-loop machine learning is a set of strategies for combining human and machine intelligence in applications that use AI. The goal typically is to do one or more of the following:

- Increase the accuracy of a machine learning model.

- Reach the target accuracy for a machine learning model faster.

- Combine human and machine intelligence to maximize accuracy.

- Assist human tasks with machine learning to increase efficiency.

This book covers the most common active learning and annotation strategies and how to design the best interface for your data, task, and annotation workforce. The book gradually builds from simpler to more complicated examples and is written to be read in sequence. You are unlikely to apply all these techniques at the same time, however, so the book is also designed to be a reference for each specific technique.

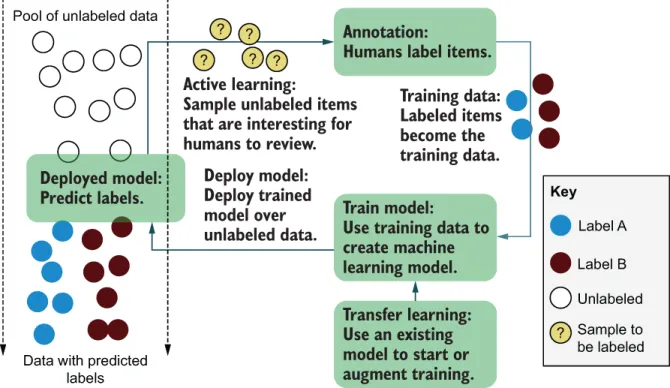

Figure 1.1 shows the human-in-the-loop machine learning process for adding labels to data. This process could be any labeling process: adding the topic to news stories, classifying sports photos according to the sport being played, identifying the sentiment of a social media comment, rating a video on how explicit the content is, and so on. In all cases, you could use machine learning to automate some of the process of labeling or to speed up the human process. In all cases, using best practices means implementing the cycle shown in figure 1.1: sampling the right data to label, using that data to train a model, and using that model to sample more data to annotate.

Figure 1.1 A mental model of the human-in-the-loop process for predicting labels on data
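
The cycle in figure 1.1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the application built in chapter 2: the word-count "model", the least-confidence sampling rule, and every function name here (`train`, `predict_proba`, `sample_least_confident`, `human_in_the_loop`) are hypothetical stand-ins chosen to keep the example self-contained.

```python
import math
from collections import Counter


def train(labeled):
    """Toy model: per-label word counts (a stand-in for a real classifier)."""
    model = {}
    for text, label in labeled:
        model.setdefault(label, Counter()).update(text.lower().split())
    return model


def predict_proba(model, text):
    """Return {label: probability} from add-one-smoothed word counts."""
    if not model:
        return {}
    words = text.lower().split()
    scores = {}
    for label, counts in model.items():
        total = sum(counts.values())
        vocab = len(counts) + 1
        scores[label] = sum(
            math.log((counts[w] + 1) / (total + vocab)) for w in words
        )
    # Normalize the log scores into probabilities that sum to 1.
    top = max(scores.values())
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    norm = sum(exps.values())
    return {label: e / norm for label, e in exps.items()}


def sample_least_confident(model, unlabeled, batch_size):
    """Pick the items whose most likely label has the lowest probability."""
    def confidence(text):
        probs = predict_proba(model, text)
        return max(probs.values()) if probs else 0.0
    return sorted(unlabeled, key=confidence)[:batch_size]


def human_in_the_loop(unlabeled, annotate, rounds=3, batch_size=2):
    """Sample items, ask a human to label them, retrain, and repeat."""
    labeled, model = [], {}
    pool = list(unlabeled)
    for _ in range(rounds):
        if not pool:
            break
        for text in sample_least_confident(model, pool, batch_size):
            labeled.append((text, annotate(text)))  # the human step
            pool.remove(text)
        model = train(labeled)  # retrain on everything labeled so far
    return model, labeled, pool
```

Here `annotate` is any callable that returns a label for a text, such as a function that prompts a person. Before any labels exist, every item has zero confidence, so the first batch is simply the first items in the pool; later batches depend on the retrained model.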

In some cases, you may want only some of the techniques. If you have a system that backs off to a human when the machine learning model is uncertain, for example, you would look at the relevant chapters and sections on uncertainty sampling, annotation quality, and interface design. Those topics still represent the majority of this book even if you aren’t completing the “loop.”
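
As a rough sketch of a system that backs off to a human, the function below routes a prediction to a person whenever the model's top probability falls under a threshold. The function name `route` and the default threshold of 0.8 are assumptions made for illustration; a suitable threshold depends on your data and on the cost of errors.

```python
def route(probabilities, threshold=0.8):
    """Decide whether a prediction can be trusted or needs human review.

    `probabilities` maps each label to the model's probability for it.
    Returns (predicted_label, "machine") when the top probability meets
    the threshold, and (predicted_label, "human") otherwise.
    """
    label = max(probabilities, key=probabilities.get)
    if probabilities[label] >= threshold:
        return label, "machine"
    return label, "human"
```

For example, `route({"positive": 0.55, "negative": 0.45})` sends the item to a human, with the model's best guess attached as a suggestion.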

This book assumes that you have some familiarity with machine learning. Some concepts are especially important for human-in-the-loop systems, including a deep understanding of softmax and its limitations. You also need to know how to calculate accuracy with metrics that take model confidence into consideration, calculate chance-adjusted accuracy, and measure the performance of machine learning from a human perspective. (The appendix contains a summary of this knowledge.)
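
As a quick refresher, the sketch below shows softmax and one common form of chance adjustment. The formula used for `chance_adjusted_accuracy`, (accuracy - chance) / (1 - chance), is an assumption here and may differ in detail from the definition in the appendix.

```python
import math


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def chance_adjusted_accuracy(accuracy, chance):
    """Rescale accuracy so chance-level performance is 0 and perfect is 1."""
    return (accuracy - chance) / (1.0 - chance)
```

One limitation is visible directly: `softmax([1, 2, 3])` and `softmax([101, 102, 103])` give the same probabilities, because softmax depends only on the differences between scores and discards their absolute size.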

1.2 Introducing annotation

Annotation is the process of labeling raw data so that it becomes training data for machine learning. Most data scientists will tell you that they spend much more time curating and annotating datasets than they spend building the machine learning models. Quality control for human annotation relies on more complicated statistics than most machine learning models do, so it is important to take the necessary time to learn how to create quality training data.

1.2.1 Simple and more complicated annotation strategies

An annotation process can be simple. If you want to label social media posts about a product as positive, negative, or neutral to analyze broad trends in sentiment about that product, for example, you could build and deploy an HTML form in a few hours. A simple HTML form could allow someone to rate each social media post according to the sentiment option, and each rating would become the label on the social media post for your training data.
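
To give a sense of how little code such a form needs, here is a minimal sketch that renders one with Python's standard library. The function names, the `/annotate` endpoint, and the field names are hypothetical; a real deployment would also need a web server to receive the submitted ratings.

```python
from html import escape

SENTIMENT_LABELS = ("positive", "negative", "neutral")


def render_annotation_form(post_id, post_text, action="/annotate"):
    """Build an HTML form with one radio button per sentiment option."""
    options = "\n".join(
        f'  <label><input type="radio" name="sentiment" '
        f'value="{label}" required> {label}</label>'
        for label in SENTIMENT_LABELS
    )
    return (
        f'<form method="post" action="{escape(action)}">\n'
        f"  <p>{escape(post_text)}</p>\n"  # escape user-generated text
        f'  <input type="hidden" name="post_id" value="{escape(str(post_id))}">\n'
        f"{options}\n"
        f'  <button type="submit">Save label</button>\n'
        f"</form>"
    )


def record_annotation(training_data, post_text, label):
    """Store a submitted rating as the label on the post."""
    if label not in SENTIMENT_LABELS:
        raise ValueError(f"unknown label: {label!r}")
    training_data.append((post_text, label))
```

Each submitted rating becomes a `(post_text, label)` pair in the training data.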

An annotation process can also be complicated. If you want to label every object in a video with a bounding box, for example, a simple HTML form is not enough; you need a graphical interface that allows annotators to draw those boxes, and a good user experience might take months of engineering hours to build.

1.2.2 Plugging the gap in data science knowledge

Your machine learning algorithm strategy and your data annotation strategy can be optimized at the same time. The two strategies are closely intertwined, and you often get better accuracy from your models faster if you have a combined approach. Algorithms and annotation are equally important components of good machine learning.

All computer science departments offer machine learning courses, but few offer courses on creating training data. At most, you might find one or two lectures about creating training data among hundreds of machine learning lectures across half a dozen courses. This situation is changing, but slowly. For historical reasons, academic machine learning researchers have tended to keep the datasets constant and to evaluate their research only in terms of different algorithms.

By contrast with academic machine learning, it is more common in industry to improve model performance by annotating more training data. Especially when the nature of the data is changing over time (which is also common), using a handful of new annotations can be far more effective than trying to adapt an existing model to a new domain of data. But far more academic papers focus on how to adapt algorithms to new domains without new training data than on how to annotate the right new training data efficiently.

Because of this imbalance in academia, I’ve often seen people in industry make the same mistake. They hire a dozen smart PhDs who know how to build state-of-the-art algorithms but don’t have experience creating training data or thinking about the right interfaces for annotation. I saw exactly this situation recently at one of the world’s largest auto manufacturers. The company had hired a large number of recent machine learning graduates, but it couldn’t operationalize its autonomous vehicle technology because the new employees couldn’t scale their data annotation strategy. The company ended up letting that entire team go. During the aftermath, I advised the company on how to rebuild its strategy by using algorithms and annotation as equally important, intertwined components of good machine learning.

1.2.3 Quality human annotation: Why is it hard?

To those who study it, annotation is a science that’s tied closely to machine learning. The most obvious example is that the humans who provide the labels can make errors, and overcoming these errors requires surprisingly sophisticated statistics.

Human errors in training data can be more or less important, depending on the use case. If a machine learning model is being used only to identify broad trends in consumer sentiment, it probably won’t matter whether errors propagate from 1% bad training data. But if an algorithm that powers an autonomous vehicle doesn’t see 1% of pedestrians due to errors propagated from bad training data, the result will be disastrous. Some algorithms can handle a little noise in the training data, and random noise even helps some algorithms become more accurate by avoiding overfitting. But human errors tend not to be random noise; therefore, they tend to introduce irreco...