PySpark Cookbook

Over 60 recipes for implementing big data processing and analytics using Apache Spark and Python

- 330 pages

- English

About this book

Combine the power of Apache Spark and Python to build effective big data applications

About This Book

- Perform effective data processing, machine learning, and analytics using PySpark
- Overcome challenges in developing and deploying Spark solutions using Python
- Explore recipes for efficiently combining Python and Apache Spark to process data

Who This Book Is For

The PySpark Cookbook is for you if you are a Python developer looking for hands-on recipes for using the Apache Spark 2.x ecosystem in the best possible way. A thorough understanding of Python (and some familiarity with Spark) will help you get the best out of the book.

What You Will Learn

- Configure a local instance of PySpark in a virtual environment
- Install and configure Jupyter in local and multi-node environments
- Create DataFrames from JSON and a dictionary using pyspark.sql
- Explore regression and clustering models available in the ML module
- Use DataFrames to transform data used for modeling
- Connect to PubNub and perform aggregations on streams

In Detail

Apache Spark is an open source framework for efficient cluster computing with a strong interface for data parallelism and fault tolerance. The PySpark Cookbook presents effective and time-saving recipes for leveraging the power of Python and putting it to use in the Spark ecosystem.

You'll start by learning the Apache Spark architecture and how to set up a Python environment for Spark. You'll then get familiar with the modules available in PySpark and start using them effortlessly. In addition to this, you'll discover how to abstract data with RDDs and DataFrames, and understand the streaming capabilities of PySpark. You'll then move on to using ML and MLlib in order to solve any problems related to the machine learning capabilities of PySpark and use GraphFrames to solve graph-processing problems. Finally, you will explore how to deploy your applications to the cloud using the spark-submit command.

By the end of this book, you will be able to use the Python API for Apache Spark to solve any problems associated with building data-intensive applications.

Style and approach

This book is a rich collection of recipes that will come in handy when you are working with PySpark. Addressing your common and not-so-common pain points, this is a book that you must have on the shelf.


Installing and Configuring Spark

- Installing Spark requirements

- Installing Spark from sources

- Installing Spark from binaries

- Configuring a local instance of Spark

- Configuring a multi-node instance of Spark

- Installing Jupyter

- Configuring a session in Jupyter

- Working with Cloudera Spark images

Introduction

- It is fast: It is estimated that Spark is 100 times faster than Hadoop when working purely in memory, and around 10 times faster when reading or writing data to a disk.

- It is flexible: You can leverage the power of Spark from a number of programming languages; Spark natively supports interfaces in Scala, Java, Python, and R.

- It is extensible: As Spark is an open source package, you can easily extend it by introducing your own classes or extending the existing ones.

- It is powerful: Many machine learning algorithms are already implemented in Spark so you do not need to add more tools to your stack—most of the data engineering and data science tasks can be accomplished while working in a single environment.

- It is familiar: Data scientists and data engineers who are accustomed to using Python's pandas, or R's data.frames or data.tables, should have a much gentler learning curve (although there are differences between these data types). Moreover, if you know SQL, you can also use it to wrangle data in Spark!

- It is scalable: Spark can run locally on your machine (with all the limitations such a solution entails). However, the same code that runs locally can be deployed to a cluster of thousands of machines with little-to-no changes.

Installing Spark requirements

Getting ready

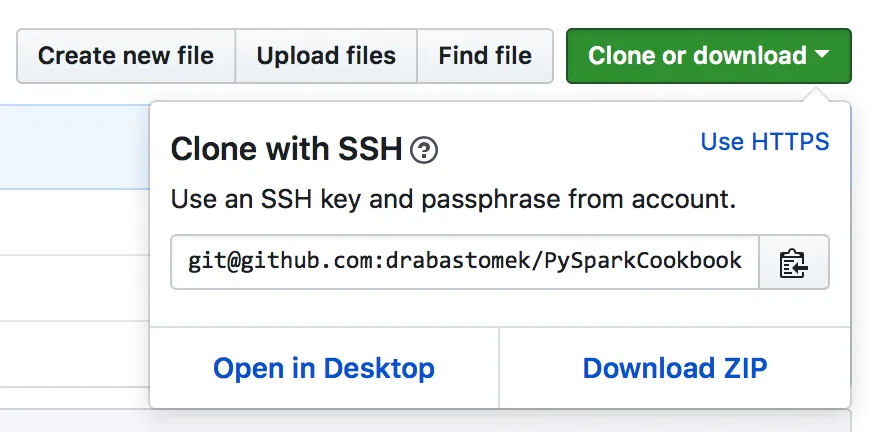

    git clone git@github.com:drabastomek/PySparkCookbook.git

How to do it...

    #!/bin/bash

    # Shell script for checking the dependencies
    #
    # PySpark Cookbook
    # Author: Tomasz Drabas, Denny Lee
    # Version: 0.1
    # Date: 12/2/2017

    _java_required=1.8
    _python_required=3.4
    _r_required=3.1
    _scala_required=2.11
    _mvn_required=3.3.9

    # parse command line arguments
    _args_len="$#"
    ...

    printHeader
    checkJava
    checkPython

    if [ "${_check_R_req}" = "true" ]; then
      checkR
    fi

    if [ "${_check_Scala_req}" = "true" ]; then
      checkScala
    fi

    if [ "${_check_Maven_req}" = "true" ]; then
      checkMaven
    fi
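The bodies of the check functions are elided in the listing above, so the following is only a minimal sketch of how a minimum-version check of this kind could be written. `version_ge` and `check_version` are hypothetical helpers, not functions from the book's script, and the "found" versions are made-up sample values; only the required minimums (3.4 for Python, 3.3.9 for Maven) come from the listing. The sketch assumes purely numeric, dot-separated version strings.

```bash
#!/bin/bash

# Hypothetical helper (not from the book's script): succeeds when the
# dotted version in $1 is greater than or equal to the one in $2.
# Assumes every component is a plain number (e.g. 3.3.9, not 1.8.0_151).
version_ge() {
    local IFS=.
    local -a have=($1) need=($2)
    local i h n
    for ((i = 0; i < ${#have[@]} || i < ${#need[@]}; i++)); do
        h=${have[i]:-0}   # missing components count as 0
        n=${need[i]:-0}
        # 10# forces base 10 so that a component such as "08" is not read as octal
        if ((10#$h > 10#$n)); then return 0; fi
        if ((10#$h < 10#$n)); then return 1; fi
    done
    return 0
}

# Hypothetical check in the spirit of checkJava or checkPython:
# report whether a detected version meets the required minimum.
check_version() {
    local name=$1 found=$2 required=$3
    if version_ge "$found" "$required"; then
        echo "$name $found: OK (required $required)"
    else
        echo "$name $found: too old (required $required)"
        return 1
    fi
}

# Sample values for illustration only.
check_version "Python" "3.6.3" "3.4"
check_version "Maven" "3.3.3" "3.3.9" || true
```

Comparing component by component as integers avoids the pitfall of plain string comparison, under which 2.9 would sort after 2.11.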

How it works...

You can check the requirements for building Spark here: https://spark.apache.org/docs/latest/building-spark.html.

    if [ "$_args_len" -ge 0 ]; then
      while [[ "$#" -gt 0 ]]
      do
        key="$1"
        case $key in
          -m|--Maven)
            _check_Maven_req="true"
            shift # past argument
            ;;
          -r|--R)
            _check_R_req="true"
            shift # past argument
            ;;
          -s|--Scala)
            _check_Scala_req="true"
            shift # past argument
            ;;
          *)
            shift # past argument
        esac
      done
    fi
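To see what the parsing loop does with a given command line, the same `case` pattern can be wrapped in a function and its result printed. This is a sketch only: `parse_flags` is a hypothetical wrapper that does not appear in the book's script, and initialising the three variables to "false" is an assumption, since the script's own defaults are in the elided part of the listing.

```bash
#!/bin/bash

# Hypothetical wrapper (not in the book's script) around the same
# case-based loop, so the effect of each flag can be inspected.
parse_flags() {
    # Assumed defaults; the original script's defaults are not shown.
    _check_Maven_req="false"
    _check_R_req="false"
    _check_Scala_req="false"

    while [[ "$#" -gt 0 ]]; do
        case "$1" in
            -m|--Maven) _check_Maven_req="true" ;;
            -r|--R)     _check_R_req="true" ;;
            -s|--Scala) _check_Scala_req="true" ;;
            *)          ;;  # unrecognised arguments are skipped
        esac
        shift  # move on to the next argument
    done

    echo "Maven=${_check_Maven_req} R=${_check_R_req} Scala=${_check_Scala_req}"
}

parse_flags -s -m          # prints: Maven=true R=false Scala=true
parse_flags --R unknown    # prints: Maven=false R=true Scala=false
```

Because every branch consumes exactly one argument, the flags can be given in any order, and anything the script does not recognise is ignored rather than treated as an error.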

./checkRequirements.sh -s -m -r

Table of contents

- Title Page

- Copyright and Credits

- Packt Upsell

- Contributors

- Preface

- Installing and Configuring Spark

- Abstracting Data with RDDs

- Abstracting Data with DataFrames

- Preparing Data for Modeling

- Machine Learning with MLlib

- Machine Learning with the ML Module

- Structured Streaming with PySpark

- GraphFrames – Graph Theory with PySpark
