Hands-On Data Science and Python Machine Learning

Frank Kane

- 420 páginas

- English

- ePUB (apto para móviles)

- Disponible en iOS y Android

Hands-On Data Science and Python Machine Learning

Frank Kane

Información del libro

This book covers the fundamentals of machine learning with Python in a concise and dynamic manner. It covers data mining and large-scale machine learning using Apache Spark.About This Book• Take your first steps in the world of data science by understanding the tools and techniques of data analysis• Train efficient Machine Learning models in Python using the supervised and unsupervised learning methods• Learn how to use Apache Spark for processing Big Data efficientlyWho This Book Is ForIf you are a budding data scientist or a data analyst who wants to analyze and gain actionable insights from data using Python, this book is for you. Programmers with some experience in Python who want to enter the lucrative world of Data Science will also find this book to be very useful, but you don't need to be an expert Python coder or mathematician to get the most from this book.What You Will Learn• Learn how to clean your data and ready it for analysis• Implement the popular clustering and regression methods in Python• Train efficient machine learning models using decision trees and random forests• Visualize the results of your analysis using Python's Matplotlib library• Use Apache Spark's MLlib package to perform machine learning on large datasetsIn DetailJoin Frank Kane, who worked on Amazon and IMDb's machine learning algorithms, as he guides you on your first steps into the world of data science. Hands-On Data Science and Python Machine Learning gives you the tools that you need to understand and explore the core topics in the field, and the confidence and practice to build and analyze your own machine learning models. With the help of interesting and easy-to-follow practical examples, Frank Kane explains potentially complex topics such as Bayesian methods and K-means clustering in a way that anybody can understand them.Based on Frank's successful data science course, Hands-On Data Science and Python Machine Learning empowers you to conduct data analysis and perform efficient machine learning using Python. Let Frank help you unearth the value in your data using the various data mining and data analysis techniques available in Python, and to develop efficient predictive models to predict future results. You will also learn how to perform large-scale machine learning on Big Data using Apache Spark. The book covers preparing your data for analysis, training machine learning models, and visualizing the final data analysis.Style and approachThis comprehensive book is a perfect blend of theory and hands-on code examples in Python which can be used for your reference at any time.

Preguntas frecuentes

Información

Apache Spark - Machine Learning on Big Data

- Installing and working with Spark

- Resilient Distributed Datasets (RDDs)

- The MLlib (Machine Learning Library)

- Decision Trees in Spark

- K-Means Clustering in Spark

Installing Spark

Installing Spark on Windows

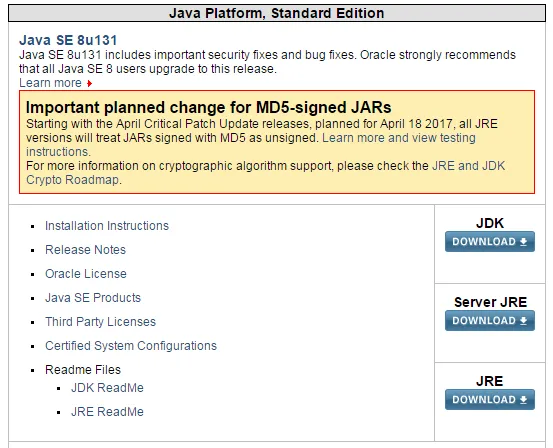

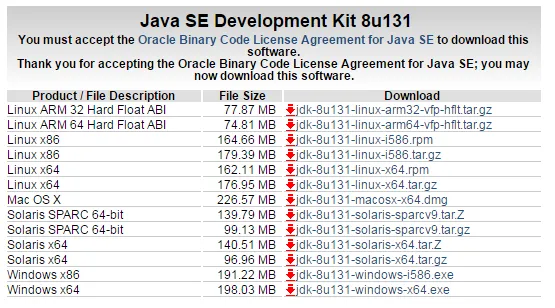

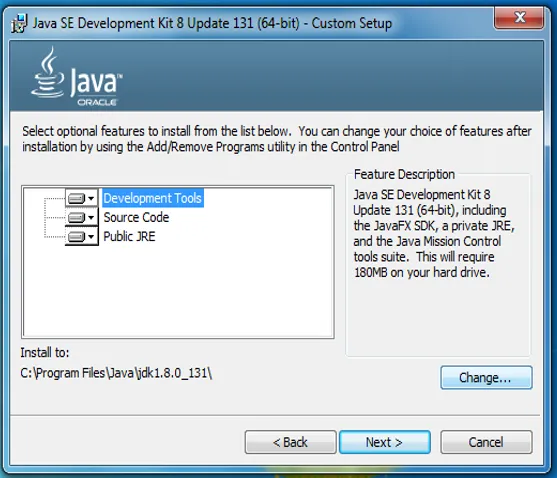

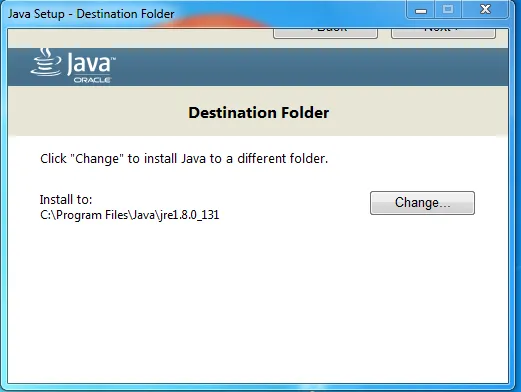

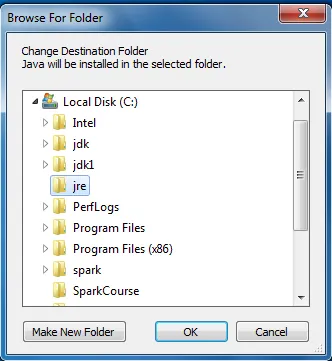

- Install a JDK: You need to first install a JDK, that's a Java Development Kit. You can just go to Sun's website and download that and install it if you need to. We need the JDK because, even though we're going to be developing in Python during this course, that gets translated under the hood to Scala code, which is what Spark is developed in natively. And, Scala, in turn, runs on top of the Java interpreter. So, in order to run Python code, you need a Scala system, which will be installed by default as part of Spark. Also, we need Java, or more specifically Java's interpreter, to actually run that Scala code. It's like a technology layer cake.

- Install Python: Obviously you're going to need Python, but if you've gotten to this point in the book, you should already have a Python environment set up, hopefully with Enthought Canopy. So, we can skip this step.

- Install a prebuilt version of Spark for Hadoop: Fortunately, the Apache website makes available prebuilt versions of Spark that will just run out of the box that are precompiled for the latest Hadoop version. You don't have to build anything, you can just download that to your computer and stick it in the right place and be good to go for the most part.

- Create a conf/log4j.properties file: We have a few configuration things to take care of. One thing we want to do is adjust our warning level so we don't get a bunch of warning spam when we run our jobs. We'll walk through how to do that. Basically, you need to rename one of the properties files, and then adjust the error setting within it.

- Add a SPARK_HOME environment variable: Next, we need to set up some environment variables to make sure that you can actually run Spark from any path that you might have. We're going to add a SPARK_HOME environment variable pointing to where you installed Spark, and then we will add %SPARK_HOME%\bin to your system path, so that when you run Spark Submit, or PySpark or whatever Spark command you need, Windows will know where to find it.

- Set a HADOOP_HOME variable: On Windows there's one more thing we need to do, we need to set a HADOOP_HOME variable as well because it's going to expect to find one little bit of Hadoop, even if you're not using Hadoop on your standalone system.

- Install winutils.exe: Finally, we need to install a file called winutils.exe. There's a link to winutils.exe within the resources for this book, so you can get that there.

Installing Spark on other operating systems

Installing the Java Development Kit

Installing Spark