Heterogeneous Computing Architectures

Challenges and Vision

Olivier Terzo, Karim Djemame, Alberto Scionti, Clara Pezuela

- 316 pages

- English

About the book

Heterogeneous Computing Architectures: Challenges and Vision provides an updated vision of the state of the art of heterogeneous computing systems, covering all aspects related to their design: from architecture and programming models, to hardware/software integration and orchestration, to real-time and security requirements. The transitions to multicore processors, GPU computing, and Cloud computing are not separate trends but aspects of a single trend: mainstream computers, from desktops to smartphones, are being permanently transformed into heterogeneous supercomputer clusters. The reader will get an organic perspective of modern heterogeneous systems and their future evolution.

1

Heterogeneous Data Center Architectures: Software and Hardware Integration and Orchestration Aspects

A. Scionti, F. Lubrano, and O. Terzo

LINKS Foundation — Leading Innovation & Knowledge for Society, Torino, Italy

S. Mazumdar

Simula Research Laboratory, Lysaker, Norway

CONTENTS

1.1 Heterogeneous Computing Architectures: Challenges and Vision

1.2 Backgrounds

1.2.1 Microprocessors and Accelerators Organization

1.3 Heterogeneous Devices in Modern Data Centers

1.3.1 Multi-/Many-Core Systems

1.3.2 Graphics Processing Units (GPUs)

1.3.3 Field-Programmable Gate Arrays (FPGAs)

1.3.4 Specialised Architectures

1.4 Orchestration in Heterogeneous Environments

1.4.1 Application Descriptor

1.4.2 Static Energy-Aware Resource Allocation

1.4.2.1 Modelling Accelerators

1.4.3 Dynamic Energy-Aware Workload Management

1.4.3.1 Evolving the Optimal Workload Schedule

1.5 Simulations

1.5.1 Experimental Setup

1.5.2 Experimental Results

1.6 Conclusions

Machine learning (ML) and deep learning (DL) algorithms are emerging as the new driving force behind the evolution of computer architecture. With the ever-wider adoption of ML/DL techniques in the Cloud and high-performance computing (HPC) domains, several new architectures (spanning from chips to entire systems) have been brought to market to better support applications based on ML/DL algorithms. While HPC and Cloud long remained distinct domains with their own challenges (i.e., HPC aims at maximising FLOPS, while Cloud aims at adopting COTS components), a growing number of new applications is pushing for their rapid convergence. In this context, many accelerators (GP-GPUs, FPGAs) and customised ASICs with dedicated functionalities (e.g., Google TPUs, Intel Neural Network Processor, NNP) have been proposed, further enlarging the data center heterogeneity landscape. Internet of Things (IoT) devices have also started integrating specific acceleration functions, while still aiming to preserve energy. Application acceleration is also common outside ML/DL: scientific applications popularised the use of GP-GPUs, as well as other architectures such as Accelerated Processing Units (APUs), Digital Signal Processors (DSPs), and many-cores (e.g., Intel Xeon Phi). On the one hand, training complex deep learning models requires powerful architectures capable of crunching a large number of operations per second while limiting power consumption; on the other hand, flexibility in supporting the execution of a broader range of applications is still mandatory (the HPC domain, for instance, requires support for double-precision floating-point arithmetic). From this viewpoint, heterogeneity is also pushed down to the chip level: chip architectures sport a mix of general-purpose cores and dedicated accelerating functions.

Supporting such large-scale heterogeneity demands an adequate software environment able to maximise productivity and to extract maximum performance from the underlying hardware. This challenge is addressed for single platforms (e.g., Nvidia provides the CUDA programming framework as a flexible programming environment, and OpenCL has been proposed as a vendor-independent solution targeting different devices, from GPUs to FPGAs); however, effectively exploiting heterogeneity at scale remains a challenge. Managing a large number of such varied heterogeneous resources poses new challenges of its own. Most of the tools and frameworks (e.g., OpenStack) for managing the allocation of resources to process jobs still provide limited support for heterogeneous hardware.

The contribution of this chapter is twofold: i) presenting a comprehensive vision of hardware and software heterogeneity, covering the whole spectrum of a modern Cloud/HPC system architecture; ii) presenting ECRAE, an orchestration solution devised to explicitly deal with heterogeneous devices.

1.1 Heterogeneous Computing Architectures: Challenges and Vision

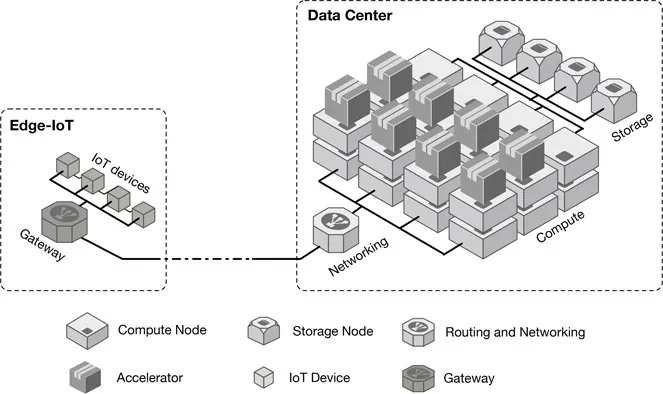

Cloud computing represents a well-established paradigm in the modern computing domain, showing ever-growing adoption over the years. The continuous improvement in processing, storage and interconnection technologies, along with the recent explosion of machine learning (ML) and, more specifically, deep learning (DL) technologies, has pushed Cloud infrastructures beyond their traditional role. Besides traditional services, we are witnessing the diffusion of (Cloud) platforms supporting the ingestion and processing of massive data sets coming from ever-larger sensor networks. Also, the availability of a huge amount of cheap computing resources has attracted the scientific community to use Cloud computing resources to run complex HPC-oriented applications without requiring access to expensive supercomputers [175,176]. Figure 1.1 shows the various levels of the computing continuum within which heterogeneity manifests itself.

In this context, data centers play a key role in providing the necessary IT resources to a broad range of end-users. Thus, unlike in past years, they have become more heterogeneous, starting to include processing technologies, as well as storage and interconnection systems, that were once the prerogative of high-performance systems (i.e., high-end clusters, supercomputers). Cloud providers have been pushed to embed several types of accelerators, ranging from well-known (GP-)GPUs to reconfigurable devices (mainly FPGAs) to more exotic hardware (e.g., Google TPU, Intel Neural Network Processor, NNP). CPUs have also become more varied in terms of micro-architectural features and ISAs. With ever-more complex chip-manufacturing processes, heterogeneity has reached IoT devices too: here, hardware specialisation minimises energy consumption. Such vast diversity in hardware systems, along with the demand for more energy efficiency at every level and the growing complexity of applications, makes it mandatory to rethink the approach used to manage Cloud resources.
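To make the resource-management problem concrete, the following is a minimal sketch, not the ECRAE solution presented in this chapter, of how a scheduler might choose among heterogeneous devices by estimated energy. The `Device` and `Task` classes, the `pick_device` function, and all power and runtime figures are hypothetical and chosen only for illustration; energy is approximated as average power multiplied by estimated runtime.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Device:
    """A processing element in the data center (hypothetical model)."""
    name: str
    kind: str            # e.g. "cpu", "gpu", "fpga"
    power_watts: float   # assumed average power draw while busy
    available: bool = True


@dataclass
class Task:
    """A job with assumed runtime estimates (seconds) per device kind."""
    name: str
    runtime_s: Dict[str, float] = field(default_factory=dict)


def energy_joules(task: Task, device: Device) -> Optional[float]:
    """Estimate energy as power * runtime; None if the kind is unsupported."""
    runtime = task.runtime_s.get(device.kind)
    if runtime is None:
        return None
    return runtime * device.power_watts


def pick_device(task: Task, devices: List[Device]) -> Optional[Device]:
    """Return the available device with the lowest estimated energy."""
    best: Optional[Device] = None
    best_energy = float("inf")
    for device in devices:
        if not device.available:
            continue
        energy = energy_joules(task, device)
        if energy is not None and energy < best_energy:
            best, best_energy = device, energy
    return best


# Illustrative figures only; they are not measurements from the chapter.
devices = [
    Device("cpu-0", "cpu", power_watts=100.0),
    Device("gpu-0", "gpu", power_watts=250.0),
    Device("fpga-0", "fpga", power_watts=40.0),
]
task = Task("inference", {"cpu": 60.0, "gpu": 10.0, "fpga": 30.0})

chosen = pick_device(task, devices)
print(chosen.name if chosen else "no suitable device")
```

With these made-up numbers the GPU finishes fastest but the FPGA uses the least energy, so the sketch selects the FPGA; marking it unavailable makes the GPU the next choice. A real orchestrator would also have to weigh deadlines, data movement and device sharing, which this sketch deliberately ignores.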

Among these, FPGAs have recently emerged as a valuable candidate, providing an adequate level of performance, energy efficiency and programmability for a large variety of applications. Thanks to their optimal trade-off between performance and flexibility (high-level synthesis, HLS, compilers ease the mapping from high-level code to circuit synthesis), most (scientific) applications nowadays benefit from FPGA acceleration. Energy (power) consumption is the main concern for Cloud providers, since the rapid growth in demand for computational power has led to the creation of large-scale data centers and, consequently, to the consumption of enormous amounts of electrical power, resulting in high operational costs and carbon dioxide emissions. On the other hand, in the IoT world, reducing energy consumption is mandatory to keep devices working for longer, even when disconnected from the power supply grid. Data centers need proper energy (power) management strategies to cope with growing energy consumption, which entails high economic costs and environmental issues. New challenges emerge in providing adequate management strategies. Specifically, resource allocation is made more effective by dynamically selecting the most appropriate resource for a given task to run, taking into account the availability of specialised processing elements. Furt...