
Chapter 1: What is Artificial Intelligence? Understanding What a Computer, an Algorithm, a Program, and, in Particular, an Artificial Intelligence Program Are

What is artificial intelligence? Before we start debating whether machines could enslave humans and raise them on farms like cattle, perhaps we should ask ourselves what AI is made of. Let’s be clear: artificial intelligence is not about making computers intelligent. Computers are still machines. They simply do what we ask of them, nothing more.

COMPUTER SCIENCE AND COMPUTERS

To understand what a computer is and isn’t capable of, one must first understand what computer science is. Let’s start there.

Computer science is the science of processing information.1 It’s about building, creating, and inventing machines that automatically process all kinds of information, from numbers to text, images, or video.

This started with the calculating machine. Here, the information consists of numbers and arithmetic operations. For example:

Then, as it was with prehistoric tools, there were advancements over time, and the information processed became more and more complex. First it was numbers, then words, then images, then sound. Today, we know how to make machines that listen to what we say to them (this is “the information”) and turn it into a concrete action. For example, when you ask your iPhone: “Siri, tell me what time my doctor’s appointment is,” the computer is the machine that processes this information.

COMPUTERS AND ALGORITHMS

To process the information, the computer applies a method called an algorithm. Let’s try to understand what this is about.

When you went to elementary school, you learned addition: you have to put the numbers in columns, with the digits correctly aligned. Then, you calculate the sum of the units. If there is a carried number, you make note of it and then you add the tens, and so on.

This method is an algorithm.

Algorithms are like cooking recipes for mathematicians: crack open the eggs, put them in the bowl, mix, pour into the frying pan, and so on. It’s the same thing. Just as you can write an omelet recipe for a cookbook, you can write an algorithm to describe how to process information. For example, to do addition, we can learn an addition algorithm and apply it.
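The school method for addition can be written down as a short program. What follows is only a sketch under simple assumptions: the numbers are non-negative and given as strings of decimal digits, and the function name `add_in_columns` is chosen here for illustration.

```python
def add_in_columns(first, second):
    """Add two non-negative integers written as digit strings, the school way."""
    # Align the digits in columns by padding the shorter number with zeros.
    width = max(len(first), len(second))
    first, second = first.zfill(width), second.zfill(width)

    digits = []  # digits of the result, units first
    carry = 0    # the carried number, if any
    # Start with the units column, then the tens, and so on.
    for a, b in zip(reversed(first), reversed(second)):
        column_sum = int(a) + int(b) + carry
        digits.append(str(column_sum % 10))  # digit written under the column
        carry = column_sum // 10             # number carried to the next column
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))


print(add_in_columns("478", "64"))  # prints 542
```

Each line of the loop corresponds to one gesture of the pupil: add the column, write a digit, note the carry.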

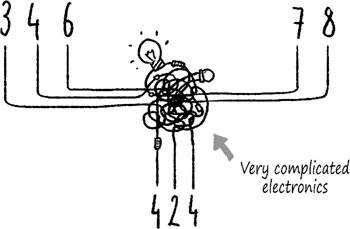

When building a calculator, engineers turn these algorithms into a set of electronic wires. We obtain a machine capable, when provided with two numbers, of calculating and displaying the resulting sum. These three notions (the cooking recipe, the algorithm, and the electronic machine applying the algorithm) vary in complexity, but they are well understood: a cook knows how to write and follow a recipe; a computer scientist knows how to write an algorithm; an electrical engineer knows how to build a calculator.

ALGORITHMS AND COMPUTER SCIENCE

The originality of computer science is to think of the algorithm, itself, as information. Imagine it’s possible to describe our addition recipe as numbers or some other symbols that a machine can interpret. And imagine that, instead of a calculator, we’re building a slightly more sophisticated machine. When given two numbers and our addition algorithm, this machine is able to “decode” the algorithm to perform the operations it describes. What will happen?

The machine is going to do an addition, which is not very surprising. But then, one could use the exact same machine with a different algorithm, let’s say a multiplication algorithm. And now we have a single machine that can do both additions and multiplications, depending on which algorithm you give it.

I can sense the excitement reaching its climax. Doing additions and multiplications may not seem like anything extraordinary to you. However, this brilliant idea, which we owe to Charles Babbage (1791–1871), is where computers originated. A computer is a machine that processes data provided on a physical medium (for example a perforated card, a magnetic tape, a compact disc) by following a set of instructions written on a physical medium (the same medium as the data, usually): it’s a machine that carries out algorithms.
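To make the idea of "the algorithm as information" concrete, here is a minimal sketch in which the algorithm is handed to the machine as plain data. The four-instruction set (`add`, `dec`, `jump_if_zero`, `jump`) and the names `run`, `ADDITION`, and `MULTIPLICATION` are invented for this illustration; they do not describe Babbage's design or any real computer.

```python
def run(program, a, b):
    """A tiny general-purpose machine: decode each instruction and carry it out."""
    registers = {"a": a, "b": b, "result": 0}
    position = 0  # index of the instruction being decoded
    while position < len(program):
        operation, *arguments = program[position]
        if operation == "add":             # add one register into another
            target, source = arguments
            registers[target] += registers[source]
        elif operation == "dec":           # subtract 1 from a register
            registers[arguments[0]] -= 1
        elif operation == "jump_if_zero":  # jump if the register holds 0
            register, destination = arguments
            if registers[register] == 0:
                position = destination
                continue
        elif operation == "jump":          # unconditional jump
            position = arguments[0]
            continue
        else:
            raise ValueError(f"unknown instruction: {operation}")
        position += 1
    return registers["result"]


# Two algorithms, written as data for the same machine.
ADDITION = [("add", "result", "a"), ("add", "result", "b")]
MULTIPLICATION = [          # works for b >= 0: add a to the result, b times
    ("jump_if_zero", "b", 4),
    ("add", "result", "a"),
    ("dec", "b"),
    ("jump", 0),
]

print(run(ADDITION, 3, 4))        # prints 7
print(run(MULTIPLICATION, 3, 4))  # prints 12
```

The function `run` never changes: only the list of instructions it is given decides whether it adds or multiplies.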

THE ALL-PURPOSE MACHINE

In 1936, Alan Turing proposed a mathematical model of computation: the famous Turing machines.

A Turing machine consists of a strip of tape on which symbols can be written. To give you a better idea, imagine a 35 mm reel of film with small cells into which you can put a photo. With a Turing machine, however, we don’t use photos. Instead, we use an alphabet – in other words, a list of symbols (for example 0 and 1, which are computer engineers’ favorite symbols). In each cell, we can write only one symbol.

For the Turing machine to work, you need to give it a set of numbered instructions, as shown below.

Instruction 1267:

Symbol 0 → Move tape one cell to the right, go to instruction 3146.

Symbol 1 → Write 0, move tape one cell to the left, resume instruction 1267.

The Turing machine analyzes the symbol in the current cell and carries out the instruction.

In a way, this principle resembles choose-your-own-adventure books: Make a note that you picked up a sword and go to page 37. The comparison ends here. In contrast to the reader of a choose-your-own-adventure book, the machine does not choose to open the chest or go into the dragon’s lair: it only does what the book’s author has written on the page, and it does not make any decision on its own.

It follows exactly what is written in the algorithm.
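A Turing machine is simple enough to simulate in a few lines. The sketch below rests on several assumptions that are not in Turing's description above: the reading head moves rather than the tape, `"_"` stands for an empty cell, instructions are named rather than numbered, and the example program `FLIP_BITS` (which swaps every 0 and 1) is made up for the occasion.

```python
def run_turing_machine(rules, tape, state, max_steps=1000):
    """Apply the rules until the machine reaches the "halt" instruction."""
    cells = dict(enumerate(tape))  # the tape: cell position -> symbol
    head = 0                       # position of the cell being read
    for _ in range(max_steps):     # safety limit: some programs never stop
        if state == "halt":
            break
        symbol = cells.get(head, "_")
        # Look up what to write, where to move, and which instruction is next.
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    if not cells:
        return ""
    first, last = min(cells), max(cells)
    return "".join(cells.get(i, "_") for i in range(first, last + 1)).strip("_")


# (instruction, symbol read) -> (symbol to write, move, next instruction)
FLIP_BITS = {
    ("flip", "0"): ("1", "R", "flip"),
    ("flip", "1"): ("0", "R", "flip"),
    ("flip", "_"): ("_", "R", "halt"),
}

print(run_turing_machine(FLIP_BITS, "0110", "flip"))  # prints 1001
```

Nothing in `run_turing_machine` decides anything: every write and every move is read from the table of rules.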

Alan Turing showed that his “machines” could reproduce any algorithm, no matter how complicated. And, indeed, a computer works exactly like a Turing machine: it has a memory (equivalent to the Turing machine’s “tape”), it reads symbols contained in memory cells, and it carries out specific instructions with the help of electronic wires. Thus, a computer, in theory, is capable of performing any algorithm.

PROGRAMS THAT MAKE PROGRAMS

Let’s recap. A computer is a machine equipped with a memory on which two things are recorded: data (or, more generally, information, hence the term “information technology”) and an algorithm, coded in a particular language, which specifies how the data is to be processed. An algorithm written in a language that can be interpreted by a machine is called a computer program, and when the machine carries out what is described in the algorithm, we say that the computer is running the program.

As we can see with Turing machines, writing a program is a little more complex than simply saying “Put the numbers in columns and add them up.” It’s more like this:

Take the last digit of the first number.

Take the last digit of the second number.

Calculate the sum.

Write the last digit in the “sum” cell.

Write the preceding digits in the “carry” cell.

Resume in the preceding column.

One must accurately describe, step by step, what the machine must do, using only the operations allowed by the little electronic wires. Writing algorithms in this manner is very limiting.

That’s why computer engineers have invented languages and programs to interpret these languages. For example, we can ask the machine to transform the + symbol into the series of operations described above.

This makes programming much easier, as one can reuse already-written programs to write other, more complex ones – just like with prehistoric tools! Once you have the wheel, you can make wheelbarrows, and with enough time and energy you can even make a machine to make wheels.
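Here is a rough sketch of both ideas: a program that interprets a tiny language, and a routine built by reusing another. Everything in it is hypothetical: the language accepts only "number symbol number", the names `add`, `multiply`, `ROUTINES`, and `evaluate` are chosen for illustration, and the numbers are assumed to be non-negative integers.

```python
def add(first, second):
    """Low-level routine: add two non-negative integers one column at a time."""
    total, place, carry = 0, 1, 0
    while first > 0 or second > 0 or carry > 0:
        # Take the last digit of each number and add them (plus the carry).
        column_sum = first % 10 + second % 10 + carry
        total += (column_sum % 10) * place  # digit written in the "sum" cell
        carry = column_sum // 10            # digits kept in the "carry" cell
        # Resume in the preceding column.
        first, second, place = first // 10, second // 10, place * 10
    return total


def multiply(first, second):
    """Built by reusing add: multiplication as repeated addition."""
    total = 0
    for _ in range(second):
        total = add(total, first)
    return total


# The interpreter's dictionary: each symbol stands for an already-written routine.
ROUTINES = {"+": add, "*": multiply}


def evaluate(source):
    """Interpret a line such as "478 + 64" by calling the matching routine."""
    left, symbol, right = source.split()
    return ROUTINES[symbol](int(left), int(right))


print(evaluate("478 + 64"))  # prints 542
print(evaluate("12 * 3"))    # prints 36
```

The person writing `478 + 64` no longer needs to think about columns and carries; the interpreter supplies those steps.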

AND WHERE DOES ARTIFICIAL INTELLIGENCE FIT IN ALL THIS?

Artificial intelligence consists of writing specific programs.

According to Minsky (1968), who helped found the discipline in the 1950s, AI is “the building of computer programs which perform tasks which are, for the moment, performed in a more satisfactory way by humans because they require high level mental processes such as: perception learning, memory organization and critical reasoning.”

In other words, it’s a matter of writing programs to perform information-processing tasks for which humans are, at first glance, more competent. Thus, we really ought to say “an AI program” and not “an AI.”

Nowadays, many AI programs can solve information-processing tasks such as playing chess, predicting tomorrow’s weather, and answering the question “Who was the fifth president of the United States?” All of these things that can be accomplished by machines rely on methods and algorithms that come from artificial intelligence. In this sense, there is nothing magical or intelligent about what an AI does: the machine applies the algorithm – an algorithm that was written by a human. If there is any intelligence, it comes from the programmer who gave the machine the right instructions.

A MACHINE THAT LEARNS?

Obviously, writing an AI program is no easy task: one must write instructions that produce a response that looks “intelligent,” no matter the data provided. Rather than writing detailed instructions by hand, computer scie...