- English


eBook - ePub

Safety Management for Software-based Equipment

About this book

A review of the principles of the safety of software-based equipment, this book begins by presenting the principles for defining safety objectives. It then shows how a safety architecture (including redundancy, diversification and error-detection techniques) can be defined on the basis of these safety objectives, and how objectives related to software programs can be identified. Starting from the software objectives, the authors present the different safety techniques (fault detection, redundancy and quality control). "Certifiable system" aspects are taken into account throughout the book.


Information

Edition: 1

Chapter 1: Safety Management

This chapter introduces the concept of system dependability (reliability, availability, maintainability and safety) and the associated definitions (fault, error, failure). One important attribute of dependability is safety. Safety management generally concerns people and everyday life. It is a difficult and costly activity.

1.1. Introduction

The aim of this book is to describe the general principles behind the design of a dependable software-based package. This first chapter concentrates on the basic concepts of system dependability and on some basic definitions.

1.2. Dependability

1.2.1. Introduction

First of all, let us define dependability.

DEFINITION 1.1 (DEPENDABILITY).– Dependability can be defined as the quality of the service provided by a system so that the users of that particular service may place a justified trust in the system providing it.

Definition 1.1 is used throughout this book. Note, however, that other, more technical approaches to dependability exist. For example, according to IEC/CEI 1069 [IEC 91], dependability measures whether the system in question performs exclusively and correctly the task(s) assigned to it.

For more information on dependability and its implementation, we refer readers to [VIL 88] and [LIS 95].

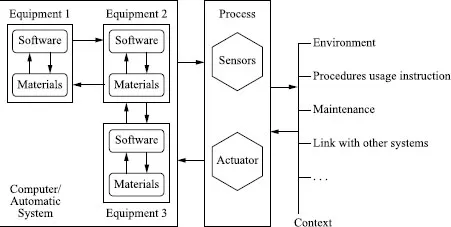

Figure 1.1. System and interactions

Figure 1.1 shows that a system is a structured set (of computer systems, processes and usage context) that must form an organized entity. Later on, we shall focus on the software implemented in the computer/automation part of the system.

Dependability is characterized by a number of attributes: reliability, availability, maintainability and safety, known collectively by the acronym RAMS.

New attributes, such as security, are starting to play a more important role, and we now refer to RAMSS (RAMS plus security).

1.2.2. Obstacles to dependability

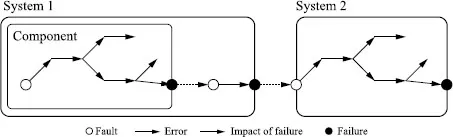

As indicated in [LAP 92], dependability in a complex system may be impacted through three different types of event (see Figure 1.2): failures, faults and errors.

The elements of the system are subject to failures, which can lead the system to situations of potential accidents.

Figure 1.2. Impact from one chain to another

DEFINITION 1.2 (FAILURE). – Failure (sometimes referred to as breakdown) is a disruption in a functioning entity’s ability to perform a required function. As the performance of a required function necessarily excludes certain behaviors, and as some functions may be specified in terms of behaviors to be avoided, the occurrence of a behavior to be avoided is a failure.

Definition 1.2 implies that the notions of normal (safe) behavior and abnormal (unsafe) behavior must both be defined, with a clear distinction between the two.
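Definition 1.2 suggests treating a failure as the observation of any behavior that the specification lists as one to be avoided. The Python sketch below illustrates that reading only; the `Specification` class, the behavior names and the numeric limits are hypothetical and are not taken from the book.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass
class Specification:
    """Hypothetical specification expressed as behaviors to be avoided."""
    forbidden: Dict[str, Callable[[float], bool]]

    def failures(self, trace: Iterable[float]) -> List[Tuple[int, str]]:
        """Return (step, behavior name) for every forbidden behavior observed."""
        found = []
        for step, value in enumerate(trace):
            for name, is_forbidden in self.forbidden.items():
                if is_forbidden(value):
                    found.append((step, name))
        return found


# Illustrative limits: any output outside [0, 100] is a behavior to be avoided.
spec = Specification(forbidden={
    "above_upper_limit": lambda v: v > 100.0,
    "below_lower_limit": lambda v: v < 0.0,
})
```

For example, `spec.failures([10.0, 120.0, -5.0])` reports a failure at step 1 (`above_upper_limit`) and at step 2 (`below_lower_limit`). Writing the forbidden behaviors down explicitly is one way of making the boundary between normal and abnormal behavior unambiguous.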

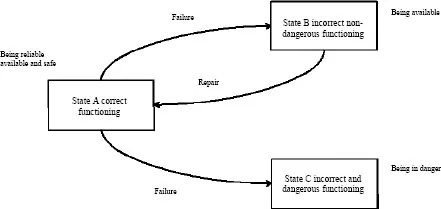

Figure 1.3 shows a representation of the possible states of a system (correct vs. incorrect) as well as all the possible transitions among those states. The states of the system can be classified into three families:

– correct states: there are no dangerous situations;

– safe incorrect states: a failure has been detected but the system is in a safe state;

– incorrect states: the situation is dangerous and out of control, and accidents may occur.

Figure 1.3. Evolution of a system’s state

When a system reaches a safe emergency shutdown state, the service may be completely or partially disrupted. From this state, the system may be able to return to the correct state after repair.
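The three families of states and the transitions described above can be sketched as a small state machine. This Python sketch assumes three event names (`detected_failure`, `undetected_failure`, `repair`) chosen for illustration; Figure 1.3 may contain transitions that are not reproduced here.

```python
from enum import Enum


class State(Enum):
    CORRECT = "correct"                # no dangerous situation
    SAFE_INCORRECT = "safe incorrect"  # failure detected, system in a safe state
    INCORRECT = "incorrect"            # dangerous and out of control


# Assumed transition table: (current state, event) -> next state.
TRANSITIONS = {
    (State.CORRECT, "detected_failure"): State.SAFE_INCORRECT,
    (State.CORRECT, "undetected_failure"): State.INCORRECT,
    (State.SAFE_INCORRECT, "repair"): State.CORRECT,
}


def step(state: State, event: str) -> State:
    """Return the next state; pairs missing from the table leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def is_safe(state: State) -> bool:
    """Both correct and safe incorrect states avoid dangerous situations."""
    return state is not State.INCORRECT
```

Starting from `State.CORRECT`, the events `detected_failure` then `repair` lead back to `State.CORRECT`, while `undetected_failure` leads to `State.INCORRECT`. A stricter variant could raise an exception on unlisted pairs, which makes unspecified transitions visible during testing.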

Failures can be random or systematic. Random failures are unpredictable and result from the degradation of the hardware aspects of the system. Because of their nature (wear-out, ageing, etc.), random failures can generally be quantified.

Systematic failures are deterministically linked to a cause. The cause of the failure can only be eliminated by reworking the implementation process (design, fabrication, documentation) or the procedures. Given their nature, systematic failures are not quantifiable.
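The practical difference between the two classes of failure can be recorded alongside each identified failure mode. In this hypothetical Python sketch, the `FailureMode` type and the example modes are illustrative assumptions; the two properties simply encode the statements above.

```python
from dataclasses import dataclass
from enum import Enum


class FailureKind(Enum):
    RANDOM = "random"          # hardware degradation: wear-out, ageing, ...
    SYSTEMATIC = "systematic"  # deterministically linked to a cause


@dataclass(frozen=True)
class FailureMode:
    """Hypothetical record of an identified failure mode."""
    name: str
    kind: FailureKind

    @property
    def quantifiable(self) -> bool:
        # Random failures can generally be quantified; systematic ones cannot.
        return self.kind is FailureKind.RANDOM

    @property
    def requires_process_rework(self) -> bool:
        # A systematic cause is removed only by reworking the implementation
        # process (design, fabrication, documentation) or the procedures.
        return self.kind is FailureKind.SYSTEMATIC


modes = [
    FailureMode("memory cell ageing", FailureKind.RANDOM),
    FailureMode("design flaw in a software module", FailureKind.SYSTEMATIC),
]
quantifiable = [m.name for m in modes if m.quantifiable]
```

Only the random mode ends up in `quantifiable`; the systematic one is flagged for process rework instead of being assigned a rate.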

A failure is the observable external manifestation of an error (the standard CEI/IEC 61508 [IEC 08] refers to it as an anomaly).

DEFINITION 1.3 (ERROR).– Error is an internal consequence of an anomaly in the implementation of the product (a variable or a state of the flawed program).

Despite all the precautions taken in the design of a component, it may be subject to flaws in its design, verification, use and maintenance under operational conditions.

DEFINITION 1.4 (ANOMALY).– An anomaly is a non-conformity introduced in the product (e.g. an error in the code).

The notion of an anomaly (see definition 1.4) leads to the notion of a fault. A fault is the cause of an error (e.g. a short circuit, an electromagnetic perturbation, or a design fault). The fault (see definition 1.5), which is the most widely used term, is the introduction of a flaw into the component.

DEFINITION 1.5 (FAULT).– A fault is an anomaly-generating process that can be due to human or non-human error.

Figure 1.4. Fundamental chain

To summarize, let us recall that trust in the dependability of a system may be compromised by the occurrence of obstacles to dependability, i.e. faults, errors and failures.

Figure 1.4 shows the fundamental chain that links these obstacles together. The occurrence of a failure may give rise to a fault, which in turn may produce one or more errors. These new errors may then lead to a new failure.
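The chain can be illustrated by propagating a fault through a series of components, where the failure of one component acts as a fault for the next. This Python sketch is a simplification: the `Component` type, its `contains_errors` flag (standing for a component whose errors never reach its boundary) and the component names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    """Hypothetical component used only to illustrate the fault/error/failure chain."""
    name: str
    contains_errors: bool = False  # True if an internal error never reaches the boundary


def propagate(chain: List[Component], fault: str) -> List[str]:
    """Follow fault -> error -> failure along the chain of components."""
    events = []
    for component in chain:
        # The fault activates an error internal to the component.
        events.append(f"error in {component.name} (caused by: {fault})")
        if component.contains_errors:
            break  # the error stays internal: no failure is observed
        # The error reaches the boundary and becomes an observable failure...
        failure = f"failure of {component.name}"
        events.append(failure)
        # ...which is a fault for the next component in the chain.
        fault = failure
    return events
```

For instance, `propagate([Component("A"), Component("B", contains_errors=True), Component("C")], "bug")` stops at `B`: the failure of `A` causes an error in `B`, but that error never becomes a failure, so `C` is unaffected.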

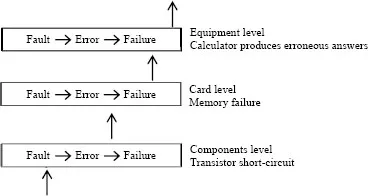

Figure 1.5. Propagation in a system

The relationships between the obstacles (faults, errors, failures) must be considered across the system as a whole, as the case study in Figure 1.5 shows.

The vocabulary of dependability has been precisely defined. We shall henceforth present only the concepts needed for our argument; [LAP 92] contains all the definitions necessary to fully grasp this notion.

1.2.3. Obstacles to dependability: case study

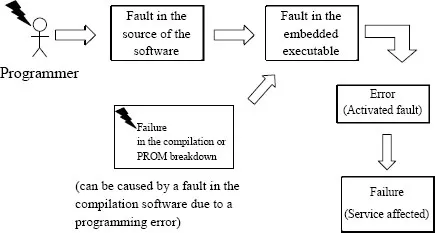

Figure 1.6 illustrates how failures can occur. As mentioned previously, a failure is detected when the behavior of the system diverges from what has been specified. This failure occurs at the boundary of the system as a result of a number of errors internal to the system, and it affects the results produced.

In our case study, the source of the errors is a fault in the embedded executable. Such faults may be introduced by the programmer (a bug) or by the tools (executable generation, download tools, etc.), or they may arise from hardware failures (memory failure, short circuit of a component, external perturbation such as electromagnetic compatibility (EMC) problems, etc.).

Figure 1.6. Example of failure
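One common way to make some faults in the embedded executable observable is to compare a checksum of the loaded image with a reference value computed when the image was generated. The Python sketch below uses the standard `zlib.crc32` function; the image contents and the single-bit corruption are made up for illustration, and this is not presented as the book's method. Such a check can reveal corruption introduced by download tools or memory failures, but not a programmer's bug, since the bug is already present in the reference image.

```python
import zlib


def image_crc(image: bytes) -> int:
    """Return the CRC-32 of an executable image as an unsigned 32-bit value."""
    return zlib.crc32(image) & 0xFFFFFFFF


def image_is_intact(image: bytes, reference_crc: int) -> bool:
    """True if the image still matches the CRC recorded when it was generated."""
    return image_crc(image) == reference_crc


# Hypothetical executable image and its reference CRC, recorded at build time.
reference_image = bytes(range(256))
reference_crc = image_crc(reference_image)

# Simulate a memory fault that flips a single bit in the loaded image.
loaded = bytearray(reference_image)
loaded[42] ^= 0x01
```

CRC-32 detects every single-bit change, so `image_is_intact(bytes(loaded), reference_crc)` returns `False` while the unmodified image passes the check.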

Note that faults may be introduced in the design (fault in the software, under-sizing of the system, etc.), in the production (when ge...

Table of contents

- Cover

- Contents

- Title

- Copyright

- Introduction

- Chapter 1: Safety Management

- Chapter 2: From System to Software

- Chapter 3: Certifiable Systems

- Chapter 4: Risk and Safety Levels

- Chapter 5: Principles of Hardware Safety

- Chapter 6: Principles of Software Safety

- Chapter 7: Certification

- Conclusion

- Index


Safety Management for Software-based Equipment by Jean-Louis Boulanger is available in PDF and/or ePUB format, alongside other books in Technology & Engineering and Electrical Engineering & Telecommunications.