
Chapter 1

Learning the First Language(s)

One of the most extraordinary features of the human brain is its ability to acquire spoken language quickly and accurately. We are born with an innate capacity to distinguish the sounds (phonemes) of all the languages on this planet, with no predisposition for one language over another. Eventually, we learn to associate those sounds with arbitrary written symbols so that we can express our thoughts and emotions to others.

Other animals have developed sophisticated ways to communicate with members of their species. Birds and apes bow and wave their appendages, honeybees dance to map out the location of food, and even single-celled organisms can signal their neighbors by emitting an array of chemicals. The communication systems of vervet monkeys, for instance, have been studied extensively. They are known to make up to ten different vocalizations. Remarkably, many of these are used to warn other members of the group about specific approaching predators: a “snake call” triggers a different defensive strategy than a “leopard call” or an “eagle call.” Apes in captivity show similar communicative abilities. They have been taught rudimentary sign language, the use of computer keyboards, and the use of lexigrams (symbols that do not graphically resemble the words they represent). Some apes, such as the famous Kanzi, have been able to learn and use hundreds of lexigrams (Savage-Rumbaugh & Lewin, 1994). However, although these apes can learn a basic syntactic and referential system, their communication certainly lacks the complexity of a full language.

By contrast, human beings have developed an elaborate and complex means of spoken communication that many say is largely responsible for our place as the dominant species on this planet. This required both the development of the anatomical apparatus for precise speech (i.e., the larynx and vocal cords) and the neurological changes in the brain needed to support language itself. The enlargement of the larynx probably occurred as our ancestors began to walk upright. Meanwhile, as the brain grew more complex, regions emerged that specialized in processing sound as well as musical and arithmetic notation (Vandervert, 2009). Somewhere along the way, too, a variant of the gene known as FOXP2 appeared. Geneticists believe it contributed significantly to our ability to produce precise speech. Evolutionary anthropologists are still debating whether language evolved slowly as these physical and cerebral capabilities were acquired, resulting in a period of semilanguage, or emerged suddenly once all of them were in place.

SPOKEN LANGUAGE COMES NATURALLY

Spoken language is truly a marvelous accomplishment for many reasons. At the very least, it gives form to our memories and words to express our thoughts. A single human voice can pronounce all the hundreds of vowel and consonant sounds that allow it to speak any of the estimated 6,500 languages that exist today. (Scholars believe there were once about 10,000 languages, but many have since died out.) With practice, the voice becomes so finely tuned that it makes only about one sound error per million sounds and one word error per million words (Pinker, 1994). Figure 1.1 presents a general timeline for spoken language development during the first three years of life. The chart is only a rough approximation; some children will progress faster or slower than it indicates. Nonetheless, it is a useful guide to the progression of skills acquired while learning any language.

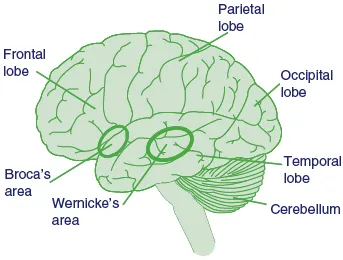

Before the advent of scanning technologies, we explained how the brain produced spoken language on the basis of evidence from injured brains. In 1861, French physician Paul Broca noticed that patients with brain damage to an area near the left temple understood language but had difficulty speaking, a condition known as aphasia. About the size of a quarter, this region of the brain is commonly referred to as Broca’s area (Figure 1.2).

Figure 1.1 An average timeline of spoken language development during the child’s first three years. There is considerable variation among individual children as visual and auditory processing develop at different rates.

In 1874, German neurologist Carl Wernicke described a different type of aphasia, one in which patients could not make sense of the words they spoke or heard. These patients had damage to a region of the left temporal lobe. Now called Wernicke’s area, this region lies above the left ear and is about the size of a silver dollar. Patients with damage to Wernicke’s area could speak fluently, but what they said was largely meaningless. Ever since Broca discovered that the left hemisphere of the brain is specialized for language, researchers have tried to understand how people normally acquire and process their native language.

Processing Spoken Language

Recent brain imaging studies reveal that spoken language production is a far more complex process than previously thought. When preparing to produce a spoken sentence, the brain uses not only Broca’s and Wernicke’s areas but also several other neural networks scattered throughout the left hemisphere. Nouns are processed by one set of neural networks; verbs are processed by another. The more complex the sentence structure, the more areas are activated, including some in the right hemisphere.

Figure 1.2 The language system in the left hemisphere consists mainly of Broca’s area and Wernicke’s area. The four lobes of the brain and the cerebellum are also identified.

In most people, the left hemisphere is home to the major components of the language processing system. Broca’s area is a region of the left frontal lobe that is believed to be responsible for processing vocabulary, syntax (how word order affects meaning), and rules of grammar. Wernicke’s area is part of the left temporal lobe and is thought to process the sense and meaning of language. The emotional content of language, however, is processed largely by areas in the right hemisphere. More recent imaging studies have unexpectedly found that the cerebellum, long thought to be involved mainly in the planning and control of movement, also seems to play a role in language processing (Booth, Wood, Lu, Houk, & Bitan, 2007; Ghosh, Tourville, & Guenther, 2008).

In 1971, researchers discovered that infants respond to speech patterns (Eimas, Siqueland, Jusczyk, & Vigorito, 1971). More recently, brain studies of infants as young as 4 months of age have confirmed that the brain possesses neural networks that specialize in responding to the auditory components of language. Dehaene-Lambertz (2000) used electroencephalography (EEG) to record the brain activity of 16 four-month-old infants as they listened to language syllables and acoustic tones. Over numerous trials, the data showed that syllables and tones were processed primarily in different areas of the left hemisphere, although there was also some right-hemisphere activity. For language input, separate neural networks encoded different features, such as the voice and the phonetic category of a syllable, into sensory memory.

Subsequent studies have supported these results (Bortfeld, Wruck, & Boas, 2007; Friederici, Friedrich, & Christophe, 2007). These remarkable findings suggest that even at this early age, the brain is already organized into functional networks that can distinguish between language fragments and other sounds. Studies of families with severe speech and language disorders have also identified a mutated form of FOXP2, the gene mentioned earlier, that is believed to be responsible for their deficits. This discovery lends further credence to the notion that the ability to acquire spoken language is encoded in our genes (Gibson & Gruen, 2008; Lai, Fisher, Hurst, Vargha-Khadem, & Monaco, 2001). This apparent genetic predisposition of the brain to the sounds of language helps explain why most young children respond to and acquire spoken language quickly. By the end of the first year in a language environment, the child becomes increasingly able to differentiate the sounds of the native language and begins to lose the ability to perceive other sounds (Conboy, Sommerville, & Kuhl, 2008).


Gestures appear to have a significant impact on a young child’s language development, particularly vocabulary. Studies show that a child’s gesturing is a significant predictor of vocabulary acquisition. In one study, the daily activities of children between the ages of 14 and 34 months were videotaped for 90 minutes every four months, and at 42 months the children were given a picture vocabulary test. The researchers found that children’s gesture use at 14 months significantly predicted their vocabulary size at 42 months, above and beyond the effects of parent and child word use at 14 months. These results held even when background factors such as socioeconomic status were controlled (Rowe, Özçaliskan, & Goldin-Meadow, 2008).

Brain Areas for Logographic and Tonal Languages

Functional magnetic resonance imaging (fMRI) and magnetoencephalography (MEG) studies have shown that native speakers of other languages, such as Chinese and Spanish, also use these same brain regions for language processing. Native Chinese speakers, however, showed additional activation in regions of the right temporal and parietal lobes. One possible explanation is that Chinese is a logographic language, and native speakers may recruit the right hemisphere’s visual processing areas to help interpret it (Pu et al., 2001; Valaki et al., 2004). Additional evidence for the involvement of visual areas comes from imaging studies of Japanese participants reading both the logographic characters called kanji and the simpler syllabic script called hiragana. Kanji produced more activation than hiragana in right-hemisphere visual processing areas, while hiragana produced more activation than kanji in left-hemisphere areas responsible for phonological processing (Buchweitz, Mason, Hasegawa, & Just, 2009). Chinese is also a tonal language, in which changes in pitch distinguish word meanings, and studies of native Chinese speakers found that the brain area processing vowel sounds was separate from the areas processing variations in tone (Green, Crinion, & Price, 2007; Liang & van Heuven, 2004).

Role of the Mirror Neuron System

To some degree, language acquisition depends on imitation. Babies and toddlers listen closely to the sounds in their environment as their brains record which sounds occur more frequently than others. Eventually, the toddler begins to repeat these sounds. The responses toddlers get from adult listeners influence whether they repeat, modify, or perhaps discard the sounds they just uttered. This ongoing process of trying out specific sounds and evaluating adult reactions is now believed to be orchestrated by the recently discovered mirror neuron system.

It seems the old saying “monkey see, monkey do” is truer than we ever would have believed. Scientists recording the activity of individual neurons in monkeys, and later using brain imaging technology in humans, discovered clusters of neurons in the premotor cortex (the area in front of the motor cortex that plans movements) that fire just before an individual carries out a planned movement. Curiously, these neurons also fire when the individual watches someone else perform the same movement. For example, the firing pattern of these neurons just before a subject grasps a pencil closely matches the pattern produced when the subject watches someone else do the same thing. Thus, similar brain areas process both the production and the perception of movement (Fadiga, Craighero, & Olivier, 2005; Iacoboni et al., 2005). Neuroscientists believe these mirror neurons help babies and toddlers imitate the movements, facial expressions, emotions, and sounds of their caregivers. Subsequent studies suggest that the mirror neuron system also helps infants develop the neural networks that link the words they hear to the actions of adults they see in their environment (Arbib, 2009).

Gender Differences in Language Processing

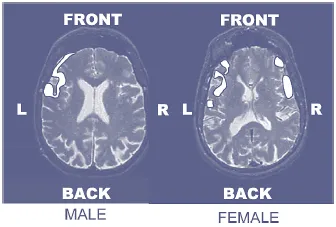

Figure 1.3 Composite representational fMRI images showing, in solid white, the areas of the male and female brains that were activated during language processing (Clements et al., 2006; Shaywitz et al., 1995).

One of the earliest and most interesting discove...