- English

- ePUB (mobile friendly)

Robust Statistics

About this book

A new edition of the classic, groundbreaking book on robust statistics

Over twenty-five years after the publication of its predecessor, Robust Statistics, Second Edition continues to provide an authoritative and systematic treatment of the topic. This new edition has been thoroughly updated and expanded to reflect the latest advances in the field while also outlining the established theory and applications for building a solid foundation in robust statistics for both the theoretical and the applied statistician.

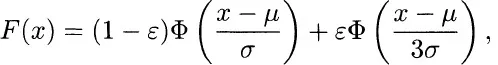

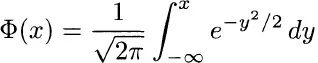

A comprehensive introduction to, and discussion of, the formal mathematical background behind qualitative and quantitative robustness is provided, and subsequent chapters delve into basic types of scale estimates, asymptotic minimax theory, regression, robust covariance, and robust design. In addition to an extended treatment of robust regression, the Second Edition features four new chapters covering:

- Robust Tests

- Small Sample Asymptotics

- Breakdown Point

- Bayesian Robustness

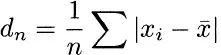

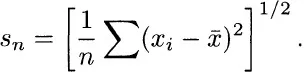

An expanded treatment of robust regression and pseudo-values is also featured, and concepts, rather than mathematical completeness, are stressed in every discussion. Selected numerical algorithms for computing robust estimates and convergence proofs are provided throughout the book, along with quantitative robustness information for a variety of estimates. A General Remarks section appears at the beginning of each chapter and provides readers with ample motivation for working with the presented methods and techniques.

Robust Statistics, Second Edition is an ideal book for graduate-level courses on the topic. It also serves as a valuable reference for researchers and practitioners who wish to study the statistical research associated with robust statistics.

Information

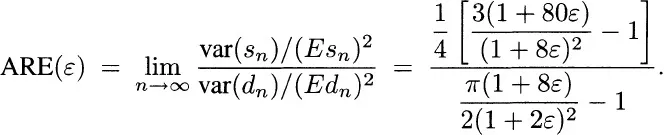

| ε | ARE(ε) |
| --- | --- |
| 0 | 0.876 |
| 0.001 | 0.948 |
| 0.002 | 1.016 |
| 0.005 | 1.198 |
| 0.01 | 1.439 |
| 0.02 | 1.752 |
| 0.05 | 2.035 |
| 0.10 | 1.903 |
| 0.15 | 1.689 |
| 0.25 | 1.371 |
| 0.5 | 1.017 |
| 1.0 | 0.876 |
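
The page gives no context for the table above. Its values are consistent with a classic example: the asymptotic relative efficiency (ARE) of the mean absolute deviation relative to the root-mean-square deviation, when a fraction ε of standard normal observations is replaced by observations with three times the standard deviation. The sketch below assumes that contamination model, F = (1 − ε)·N(0, 1) + ε·N(0, 9), which the page does not state. The function name `are` and the moment formulas are illustrative choices derived under that assumption, not taken from the page.

```python
import math


def are(eps: float) -> float:
    """ARE of the mean absolute deviation relative to the RMS deviation.

    Assumed model (not stated on the page):
        F = (1 - eps) * N(0, 1) + eps * N(0, 9)
    """
    second_moment = 1 + 8 * eps                          # E[X^2]
    fourth_moment = 3 * (1 + 80 * eps)                   # E[X^4]
    mean_abs = math.sqrt(2 / math.pi) * (1 + 2 * eps)    # E|X|

    # Asymptotic squared coefficient of variation (times n) of each estimate.
    rel_var_rms = (fourth_moment / second_moment**2 - 1) / 4
    rel_var_mad = second_moment / mean_abs**2 - 1

    return rel_var_rms / rel_var_mad


if __name__ == "__main__":
    for eps in (0, 0.001, 0.002, 0.005, 0.01, 0.02,
                0.05, 0.10, 0.15, 0.25, 0.5, 1.0):
        print(f"{eps:<6} {are(eps):.3f}")
```

Under this assumption the computed values agree closely with the tabulated ones. In particular, a contamination fraction of roughly 0.2% is already enough to push the ARE above 1, meaning the mean absolute deviation becomes the more efficient of the two scale estimates.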

Table of contents

- COVER

- TITLE

- COPYRIGHT

- PREFACE

- PREFACE TO FIRST EDITION

- CHAPTER 1: GENERALITIES

- CHAPTER 2: THE WEAK TOPOLOGY AND ITS METRIZATION

- CHAPTER 3: THE BASIC TYPES OF ESTIMATES

- CHAPTER 4: ASYMPTOTIC MINIMAX THEORY FOR ESTIMATING LOCATION

- CHAPTER 5: SCALE ESTIMATES

- CHAPTER 6: MULTIPARAMETER PROBLEMS—IN PARTICULAR JOINT ESTIMATION OF LOCATION AND SCALE

- CHAPTER 7: REGRESSION

- CHAPTER 8: ROBUST COVARIANCE AND CORRELATION MATRICES

- CHAPTER 9: ROBUSTNESS OF DESIGN

- CHAPTER 10: EXACT FINITE SAMPLE RESULTS

- CHAPTER 11: FINITE SAMPLE BREAKDOWN POINT

- CHAPTER 12: INFINITESIMAL ROBUSTNESS

- CHAPTER 13: ROBUST TESTS

- CHAPTER 14: SMALL SAMPLE ASYMPTOTICS

- CHAPTER 15: BAYESIAN ROBUSTNESS

- REFERENCES

- INDEX
