
1

HD Video Remote Collaboration Application

Beomjoo Seo

Xiaomin Liu

Roger Zimmermann

School of Computing, National University of Singapore, Singapore

1.1 Introduction

High-quality, interactive collaboration tools increasingly allow remote participants to engage in problem-solving scenarios, resulting in quicker and improved decision-making processes. With high-resolution displays becoming increasingly common and significant network bandwidth being available, high-quality video streaming has become feasible and innovative applications are possible. Initial work on systems to support high-definition (HD) quality streaming focused on off-line content. Such video-on-demand systems for IPTV (Internet protocol television) applications use elaborate buffering techniques that provide high robustness with commodity IP networks, but introduce long latencies. Recent work has focused on interactive, real-time applications that utilize HD video. A number of technical challenges have to be addressed to make such systems a reality. Ideally, a system would achieve low end-to-end latency, low transmission bandwidth requirements, and high visual quality all at the same time. However, the pixel stream from an HD camera can reach a raw data rate of 1.4 Gbps, so keeping the transmission bandwidth low requires extensive compression, and extensive compression adds processing delay. Low latency and low transmission bandwidth are therefore conflicting requirements that are challenging to satisfy simultaneously.

This chapter describes the design, architectural approach, and technical details of the remote collaboration system (RCS) prototype developed under the auspices of the Pratt & Whitney, UTC Institute for Collaborative Engineering (PWICE), at the University of Southern California (USC).

The focus of the RCS project was on the acquisition, transmission, and rendering of high-resolution media such as HD quality video for the purpose of building multisite, collaborative applications. The goal of the system is to facilitate and speed up collaborative maintenance procedures between an airline's technical help desk, its personnel working on the tarmac on an aircraft engine, and the engine manufacturer. RCS consists of multiple components to achieve its overall functionality and objectives through the following means:

1. Use high fidelity digital audio and high-definition video (HDV) technology (based on MPEG-2 or MPEG-4/AVC compressed video) to deliver a high-presence experience and allow several people in different physical locations to collaborate in a natural way to, for example, discuss a customer request.

2. Provide multipoint connectivity that allows participants to interact with each other from three or more physically distinct locations.

3. Design and investigate acquisition and rendering components in support of the above application to optimize bandwidth usage and provide high-quality service over the existing and future networking infrastructures.

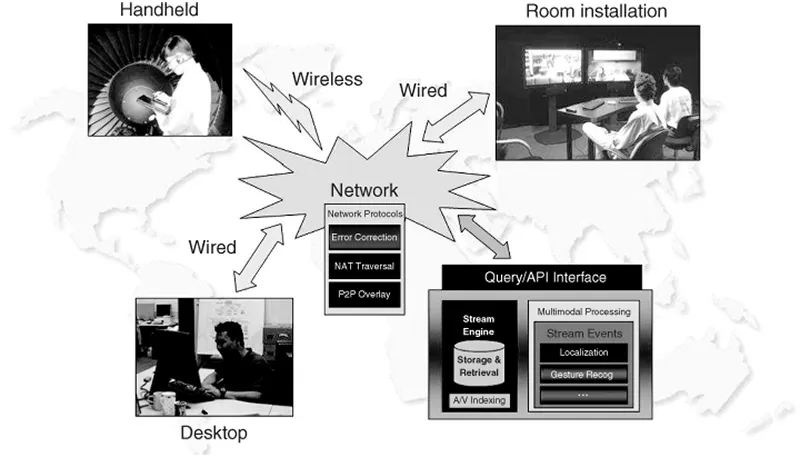

Figure 1.1 illustrates the overall architecture of RCS with different possible end-stations: room installations, desktop and mobile computers.

1.2 Design and Architecture

HD displays have become common in recent years and large network bandwidth is available in many places. As a result, high-quality interactive video streaming has become feasible as an innovative application. One of the challenges is the massive amount of data required for transmitting such streams; achieving low latency while also keeping the bandwidth low are often contradictory goals. The RCS project has focused on the design of a system that enables HD quality video and multiple channels of audio to be streamed across an IP-based network with commodity equipment. This has been made possible by technological advancements in capturing and encoding HD streams with modern, high-quality codecs such as MPEG-4/AVC and MPEG-2. In addition to wired network environments, RCS extends HD live streaming to wireless networks, where bandwidth is limited and the packet loss rate can be very high.

The system components for one-way streaming from a source (capture device) to a sink (media player) can be divided into four stages: media acquisition, media transmission, media reception, and media rendering. The media acquisition component specifies how to acquire media data from a capture device such as a camera. Media acquisition generally includes a video compression module (though there are systems that use uncompressed video), which reduces the massive amount of raw data into a more manageable quantity. After the acquisition, the media data is split into a number of small data packets that will then be efficiently transmitted to a receiver node over a network (media transmission). Once a data packet is received, it will be reassembled into the original media data stream (media reception). The reconstructed data is then decompressed and played back (media rendering). The client and server streaming architecture divides the above stages naturally into two parts: a server that performs media acquisition and transmission and a client that executes media reception and rendering.
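The transmission and reception stages described above can be sketched in a few lines of Python. This is only an illustration, not the RCS wire format: the 4-byte sequence-number header and the choice of seven 188-byte transport stream packets per datagram are assumptions made for the example, and the function names are hypothetical.

```python
import struct

TS_PACKET_SIZE = 188                 # size of one MPEG transport stream packet
PAYLOAD_SIZE = 7 * TS_PACKET_SIZE    # assumption: 7 TS packets (1316 bytes) per datagram
HEADER = struct.Struct("!I")         # assumption: header is a 32-bit sequence number


def packetize(stream: bytes, payload_size: int = PAYLOAD_SIZE) -> list[bytes]:
    """Media transmission: split a compressed stream into numbered packets."""
    packets = []
    for seq, offset in enumerate(range(0, len(stream), payload_size)):
        payload = stream[offset:offset + payload_size]
        packets.append(HEADER.pack(seq) + payload)
    return packets


def reassemble(packets: list[bytes]) -> bytes:
    """Media reception: order packets by sequence number and strip the headers."""
    ordered = sorted(packets, key=lambda p: HEADER.unpack_from(p)[0])
    return b"".join(p[HEADER.size:] for p in ordered)
```

Sorting by sequence number restores the original byte order even when packets arrive out of order. Handling of lost packets is deliberately left out of this sketch.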

A more general live streaming architecture that allows multipoint communications may be described as an extension of the one-way streaming architecture. Two-way live streaming between two nodes establishes two separate one-way streaming paths between the two entities. To connect more than two sites together, a number of different network topologies may be used. For example, the full-mesh topology for multiway live streaming applies two-way live streaming paths among each pair of nodes. Although full-mesh connectivity results in low end-to-end latencies, it is often not suitable for larger installations and systems where the bandwidth between different sites is heterogeneous.
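The scaling problem of the full-mesh topology can be made concrete with a short calculation. The sketch below assumes that every site sends a separate unicast copy of its stream to each peer; the function names are hypothetical, and the 25 Mbps figure in the usage note is the upper HDV stream rate.

```python
def full_mesh_paths(n_sites: int) -> dict[str, int]:
    """Count the one-way streaming paths in a full mesh of n_sites nodes."""
    if n_sites < 2:
        raise ValueError("a mesh needs at least two sites")
    per_site = n_sites - 1  # one outgoing and one incoming path per peer
    return {
        "one_way_paths": n_sites * per_site,
        "outgoing_per_site": per_site,
        "incoming_per_site": per_site,
    }


def site_uplink_mbps(n_sites: int, stream_mbps: float) -> float:
    """Uplink bandwidth one site needs to send its stream to every peer."""
    return (n_sites - 1) * stream_mbps
```

For three sites, `full_mesh_paths(3)` gives six one-way paths, and `site_uplink_mbps(3, 25)` gives 50 Mbps of uplink per site. The total number of paths grows quadratically with the number of sites, so the site with the weakest link limits the whole installation.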

For RCS, we present several design alternatives and we describe the choices made in the creation of a multiway live streaming application. Below are introductory outlines of the different components of RCS which will subsequently be described in turn.

Acquisition. In RCS, MPEG-2-compressed HD camera streams are acquired via a FireWire interface from HDV consumer cameras, which feature a built-in codec module. MPEG-4/AVC streams are obtained from cameras via an external Hauppauge HD-PVR (high-definition personal video recorder) encoder that provides its output through a USB connection. With MPEG-2, any camera that conforms to the HDV standard can be used as a video input device. We have tested multiple models from JVC, Sony, and Canon. As a benefit, cameras can easily be upgraded whenever better models become available. MPEG-2 camera streams are acquired at a data rate of 20–25 Mbps, whereas MPEG-4/AVC streams require a bandwidth of 6.5–13.5 Mbps.
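To put these data rates in perspective, the following sketch estimates the compression ratio relative to an uncompressed pixel stream. The raw-video parameters (1920 × 1080 pixels, 30 frames per second, 24 bits per pixel) are assumptions chosen for illustration; an actual camera may use a different resolution, frame rate, or chroma format.

```python
def raw_video_bps(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Data rate of an uncompressed pixel stream in bits per second."""
    return width * height * fps * bits_per_pixel


def compression_ratio(raw_bps: float, compressed_mbps: float) -> float:
    """Ratio between the raw data rate and a compressed stream rate given in Mbps."""
    return raw_bps / (compressed_mbps * 1e6)


# Assumed raw format: 1920x1080, 30 frames/s, 24 bits per pixel.
raw = raw_video_bps(1920, 1080, 30, 24)

# Compressed stream rates quoted in the text, in Mbps.
for codec, rates in {"MPEG-2": (20, 25), "MPEG-4/AVC": (6.5, 13.5)}.items():
    for rate in rates:
        print(f"{codec} at {rate} Mbps: about {compression_ratio(raw, rate):.0f}:1")
```

Under these assumptions the raw stream is roughly 1.5 Gbps, so the MPEG-2 streams correspond to compression ratios of about 60:1 to 75:1 and the MPEG-4/AVC streams to about 110:1 to 230:1.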

Multipoint Communication. The system is designed to accommodate the setup of many-to-many scenarios via a convenient configuration file. A graphical user interface is available to more easily define and manipulate the configuration file. Because the software is modular, it can naturally take advantage of multiple processors and multiple cores. Furthermore, the software runs on standard Windows PCs and can therefore take advantage of the latest (and fastest) computers.

Compressed Domain Transcoding. This functionality is achieved for our RCS implementation on Microsoft Windows via a commercial DirectShow filter module. It allows for an optional and custom reduction of the bandwidth for each acquired stream. This is especially useful when streaming across low bandwidth and wireless links.

Rendering. MPEG-2 and MPEG-4/AVC decoding is performed via modules that take advantage of the hardware acceleration for motion compensation and iDCT (inverse discrete cosine transform) available in modern graphics cards. The number of streams that can be rendered concurrently is limited only by the CPU processing power (and in practice by the size of the screens attached to the computer). We have demonstrated three-way HD communication on dual-core machines.

1.2.1 Media Processing Mechanism

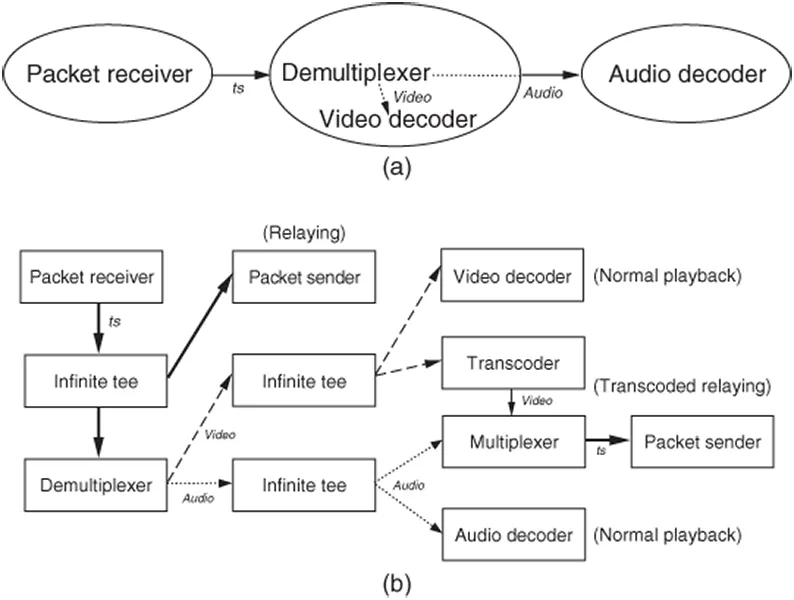

We implemented our RCS package in two different operating system environments, namely, Linux and Windows. Under Linux, every task is implemented as a process, and data delivery between two processes uses a pipe, a typical interprocess communication (IPC) method that transmits data via standard input and output. In the Linux environment, the pipe mechanism is integrated with the virtual memory management, and so it provides effective input/output (I/O) performance. Figure 1.2a illustrates how a prototypical pipe-based media processing chain handles the received media samples. A packet receiver process receives RTP-like (real-time transport protocol) packets from a network, reconstructs the original transport stream (TS) by stripping the packet headers, and delivers it to an unnamed standard output pipe. A demultiplexer, embedded in a video decoder process, waits on the unnamed pipe, parses incoming transport packets, consumes video elementary streams (ES) internally, and forwards audio ES to its unnamed pipe. Lastly, an audio decoder process at the end of the process chain consumes the incoming streams. Alternatively, the demultiplexer may be separated from the video decoder by delivering the video streams to a named pipe, on which the decoder is waiting.
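The demultiplexing step in this chain can be illustrated with a minimal Python sketch that parses 188-byte transport stream packets and routes their payloads by packet identifier (PID). The two PID values are hypothetical placeholders; a real demultiplexer would learn them from the program tables in the stream and would go on to parse the PES packets carried in the payloads, both of which are omitted here. The sketch operates on an in-memory byte string, whereas each stage of the pipe-based chain would read from standard input and write to standard output.

```python
TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47
VIDEO_PID = 0x0810  # hypothetical PID of the video stream
AUDIO_PID = 0x0814  # hypothetical PID of the audio stream


def ts_payload(packet: bytes) -> tuple[int, bytes]:
    """Return the PID and payload bytes of one transport stream packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not a valid transport stream packet")
    pid = ((packet[1] & 0x1F) << 8) | packet[2]
    adaptation_field_control = (packet[3] >> 4) & 0x3
    offset = 4                                # fixed 4-byte header
    if adaptation_field_control & 0x2:        # adaptation field present
        offset += 1 + packet[4]               # length byte plus the field itself
    if not adaptation_field_control & 0x1:    # packet carries no payload
        return pid, b""
    return pid, packet[offset:]


def demultiplex(ts: bytes) -> tuple[bytes, bytes]:
    """Split a transport stream into video and audio payload bytes."""
    video, audio = bytearray(), bytearray()
    for i in range(0, len(ts) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        pid, payload = ts_payload(ts[i:i + TS_PACKET_SIZE])
        if pid == VIDEO_PID:
            video += payload      # consumed internally by the video decoder
        elif pid == AUDIO_PID:
            audio += payload      # forwarded to the audio decoder
    return bytes(video), bytes(audio)
```

Packets with any other PID, such as null packets or program tables, are simply dropped in this sketch.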

On the Windows platform, our investigative experiments showed that a pipe-based interprocess data delivery mechanism would be very I/O-intensive, causing significant video glitches. As an alternative design to the pipe mechanism, we chose a DirectShow filter pipeline. DirectShow—previously known as ActiveMovie and a part of the DirectX software development kit (SDK)—is a component object model (COM)-based streaming framework for the Microsoft Windows platform. It allows application developers not only to rapidly prototype the control of audio/video data flows through high-level interfaces (APIs, application programming interfaces) but also to customize low-level media processing components (filters).

DirectShow filters are COM objects that implement custom behavior behind filter-specific standard interfaces and communicate with other filters through these interfaces. User-mode applications are built by connecting such filters. The collection of connected filters is called a filter graph, which is managed by a high-level object called the filter graph manager (FGM). Media data is moved sample by sample from the source filter to the sink filter (or renderer filter) along the connections defined in the filter graph under the orchestration of the FGM. An application invokes control methods (Play, Pause, Stop, Run, etc.) on an FGM and it may in fact use multiple FGMs. Figure 1.2b depicts one reception filter graph among various filter graphs implemented in our applications. It illustrates how media samples that are delivered from the network are processed along multiple branching paths, namely a relaying branch, a transcoded relaying branch, and normal playback. The infinite tee in the figure is a standard filter provided by the SDK that enables source samples to be transmitted to multiple filters simultaneously.
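The branching structure of such a reception graph can be mimicked with a toy model in Python. The sketch does not use the DirectShow or COM APIs. All class and function names are hypothetical, and the "transcoder" merely truncates each sample as a stand-in for a real bandwidth reduction.

```python
class Filter:
    """A processing stage that receives a sample and passes it downstream."""

    def __init__(self, name: str):
        self.name = name
        self.downstream = []

    def connect(self, other: "Filter") -> "Filter":
        """Connect this filter's output to another filter and return that filter."""
        self.downstream.append(other)
        return other

    def process(self, sample: bytes) -> bytes:
        return sample  # default behavior: pass the sample through unchanged

    def receive(self, sample: bytes) -> None:
        out = self.process(sample)
        for next_filter in self.downstream:  # a tee simply has several outputs
            next_filter.receive(out)


class Sink(Filter):
    """Terminal filter (network sender or renderer) that records what it receives."""

    def __init__(self, name: str):
        super().__init__(name)
        self.received = []

    def process(self, sample: bytes) -> bytes:
        self.received.append(sample)
        return sample


class Transcoder(Filter):
    """Stand-in for a transcoding filter: halves the sample size."""

    def process(self, sample: bytes) -> bytes:
        return sample[: len(sample) // 2]


def build_reception_graph():
    """Build a source -> tee -> {relay, transcoded relay, playback} graph."""
    source = Filter("network source")
    tee = source.connect(Filter("infinite tee"))
    relay = tee.connect(Sink("relay sender"))
    transcoded = tee.connect(Transcoder("transcoder")).connect(Sink("transcoded relay sender"))
    playback = tee.connect(Sink("renderer"))
    return source, relay, transcoded, playback
```

Calling `source.receive(sample)` on the returned source delivers the same sample object to the relay and playback branches without copying it, while the transcoded branch receives a reduced version.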

Compared with the pipe mechanism under Windows, a DirectShow filter chain has several advantages. First, communication between filters takes place in the same address space, meaning that all the filters (which are a set of methods and processing routines) communicate through simple function calls. Data is delivered by passing pointers to data buffers (i.e., a zero-copy mechanism), which incurs much less I/O overhead than IPC. Second, many codecs are available as DirectShow filters, which enables faster prototyping and deployment. During the implementation, however, we also observed two problems with the DirectShow filter chaining mechanism. First, the developer has no control over existing filters beyond the methods provided by their vendors, which leaves little room for further software optimizations to reduce the acquisition and playback latency. Second, as a rather minor issue, some filter components can cause synchronization problems. We elaborate on this in Section 1.6.1.

1.3 HD Video Acquisition

For HD video acquisition, we relied on solutions that included hardware-implemented MPEG compressors. Such solutions generally generate high-quality output video streams. While hardware-based MPEG encoders that are able to handle HD resolutions used to cost tens of thousands of dollars in the past, they are now affordable due to the proliferation of mass-market consumer products. If video data is desired in the MPEG-2 format, there exist many consumer cameras that can capture and stream HD video in real time. Specifically, the HDV standard commonly implemented in consumer camcorders includes real-time MPEG-2 encoded output via a FireWire (IEEE 1394) interface. Our system can acquire digital video from several types of camera models, which transmit MPEG-2 TS via FireWire interface in HDV format. The HDV compressed data rate is approximately 20–25 Mbps and a large number of manufacturers are supporting this consumer format. Our earliest experiments...