Learning OpenCV 4 Computer Vision with Python 3

Get to grips with tools, techniques, and algorithms for computer vision and machine learning, 3rd Edition

- 372 pages

- English

- ePUB (mobile friendly)


About this book

Updated for OpenCV 4 and Python 3, this book covers the latest on depth cameras, 3D tracking, augmented reality, and deep neural networks, helping you solve real-world computer vision problems with practical code.

Key Features

- Build powerful computer vision applications in concise code with OpenCV 4 and Python 3

- Learn the fundamental concepts of image processing, object classification, and 2D and 3D tracking

- Train, use, and understand machine learning models such as Support Vector Machines (SVMs) and neural networks

Book Description

Computer vision is a rapidly evolving science, encompassing diverse applications and techniques. This book will not only help those who are getting started with computer vision but also experts in the domain. You'll be able to put theory into practice by building apps with OpenCV 4 and Python 3.

You'll start by understanding OpenCV 4 and how to set it up with Python 3 on various platforms. Next, you'll learn how to perform basic operations such as reading, writing, manipulating, and displaying still images, videos, and camera feeds. The book then takes you through image processing, video analysis, and depth estimation and segmentation, with hands-on practice that includes building a GUI app. After that, you'll tackle two popular challenges: face detection and face recognition. You'll also learn about object classification and machine learning concepts, which will enable you to create and use object detectors and classifiers, and even track objects in movies or video camera feeds. Later, you'll develop your skills in 3D tracking and augmented reality. Finally, you'll cover ANNs and DNNs, learning how to develop apps for recognizing handwritten digits and classifying a person's gender and age.

By the end of this book, you'll have the skills you need to execute real-world computer vision projects.

What you will learn

- Install and familiarize yourself with OpenCV 4's Python 3 bindings

- Understand image processing and video analysis basics

- Use a depth camera to distinguish foreground and background regions

- Detect and identify objects, and track their motion in videos

- Train and use your own models to match images and classify objects

- Detect and recognize faces, and classify their gender and age

- Build an augmented reality application to track an image in 3D

- Work with machine learning models, including SVMs, artificial neural networks (ANNs), and deep neural networks (DNNs)

Who this book is for

If you are interested in learning computer vision, machine learning, and OpenCV in the context of practical real-world applications, then this book is for you. It will also be useful for anyone getting started with computer vision, as well as for experts who want to stay up to date with OpenCV 4 and Python 3. Although no prior knowledge of image processing, computer vision, or machine learning is required, familiarity with basic Python programming is a must.

Camera Models and Augmented Reality

- Modeling the parameters of a camera and lens

- Modeling a 3D object using 2D and 3D keypoints

- Detecting the object by matching keypoints

- Finding the object's 3D pose using the cv2.solvePnPRansac function

- Smoothing the 3D pose using a Kalman filter

- Drawing graphics atop the object

Technical requirements

Understanding 3D image tracking and augmented reality

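The object's pose has six degrees of freedom (6DOF), which are represented by the following variables:

- tx: This is the object's translation along the x axis.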

- ty: This is the object's translation along the y axis.

- tz: This is the object's translation along the z axis.

- rx: This is the first element of the object's Rodrigues rotation vector.

- ry: This is the second element of the object's Rodrigues rotation vector.

- rz: This is the third element of the object's Rodrigues rotation vector.
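To make the six pose variables more concrete, here is a minimal sketch that turns (rx, ry, rz) into a rotation matrix using Rodrigues' rotation formula and then applies the full pose to a 3D point. It uses only NumPy; in OpenCV, the `cv2.Rodrigues` function performs the same vector-to-matrix conversion. The helper names `rodrigues_to_matrix` and `apply_pose` are hypothetical and are not taken from the book's code.

```python
import numpy as np

def rodrigues_to_matrix(rvec):
    """Convert a Rodrigues rotation vector (rx, ry, rz) to a 3x3 matrix."""
    rvec = np.asarray(rvec, dtype=np.float64).reshape(3)
    theta = np.linalg.norm(rvec)  # rotation angle, in radians
    if theta < 1e-12:
        return np.eye(3)  # a zero vector means no rotation
    kx, ky, kz = rvec / theta  # unit-length rotation axis
    # Skew-symmetric (cross-product) matrix of the axis.
    K = np.array([[0.0, -kz, ky],
                  [kz, 0.0, -kx],
                  [-ky, kx, 0.0]])
    # Rodrigues' rotation formula.
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def apply_pose(point, tvec, rvec):
    """Rotate a 3D point by rvec, then translate it by tvec (tx, ty, tz)."""
    R = rodrigues_to_matrix(rvec)
    point = np.asarray(point, dtype=np.float64)
    return R @ point + np.asarray(tvec, dtype=np.float64)

# Illustrative pose: a quarter turn about the z axis,
# followed by a shift of 2 units along the z axis.
pose_t = (0.0, 0.0, 2.0)
pose_r = (0.0, 0.0, np.pi / 2.0)
moved = apply_pose((1.0, 0.0, 0.0), pose_t, pose_r)
```

The direction of the rotation vector is the axis of rotation and its length is the angle, which is why three numbers are enough to describe any 3D rotation.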

1. Define the parameters of the camera and lens. We will introduce this topic in this chapter.

2. Initialize a Kalman filter that we will use to stabilize the 6DOF tracking results. For more information about Kalman filtering, refer back to Chapter 8, Tracking Objects.

3. Choose a reference image that represents the surface of the object we want to track. For our demo, the object will be a plane, such as a piece of paper on which the image is printed.

4. Create a list of 3D points, representing the vertices of the object. The coordinates can be in any unit, such as meters, millimeters, or something arbitrary. For example, you could arbitrarily define 1 unit to be equal to the object's height.

5. Extract feature descriptors from the reference image. For 3D tracking applications, ORB is a popular choice of descriptor since it can be computed in real time, even on modest hardware such as smartphones. Our demo will use ORB. For more information about ORB, refer back to Chapter 6, Retrieving Images and Searching Using Image Descriptors.

6. Convert the features' keypoint locations from pixel coordinates to 3D coordinates, using the same mapping that we used in step 4.

7. Start capturing frames from the camera. For each frame, perform the following steps:

   - Extract feature descriptors, and attempt to find good matches between the reference image and the frame. Our demo will use FLANN-based matching with a ratio test. For more information about these approaches for matching descriptors, refer back to Chapter 6, Retrieving Images and Searching Using Image Descriptors.

   - If an insufficient number of good matches were found, continue to the next frame. Otherwise, proceed with the remaining steps.

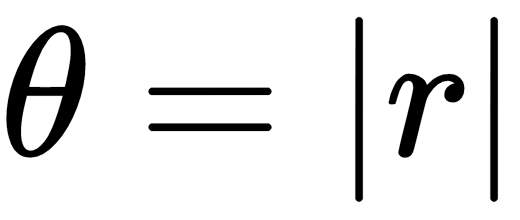

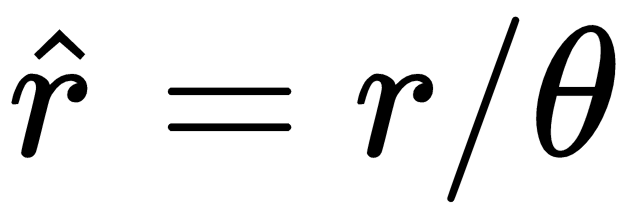

   - Attempt to find a good estimate of the tracked object's 6DOF pose based on the camera and lens parameters, the matches, and the 3D model of the reference object. For this, we will use the cv2.solvePnPRansac function.

   - Apply the Kalman filter to stabilize the 6DOF pose so that it does not jitter too much from frame to frame.

   - Based on the camera and lens parameters, and the 6DOF tracking results, draw a projection of some 3D grap...
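The mapping from reference-image pixels to 3D coordinates, mentioned in the steps above, can be sketched as follows. This is only an illustration under stated assumptions: the object is a plane at z = 0, the origin is at the center of the image, and 1 unit equals the object's height, as the text suggests. The function name `map_pixels_to_plane` and the 400 x 200 image size are hypothetical and are not taken from the book's code.

```python
import numpy as np

def map_pixels_to_plane(points_2d, image_size, object_height=1.0):
    """Map (x, y) pixel coordinates in the reference image to 3D points.

    Assumptions (for illustration only): the object is the plane z = 0,
    the origin is the image center, and the image's full height in
    pixels corresponds to object_height units.
    """
    w, h = image_size
    scale = object_height / h  # 3D units per pixel
    pts = np.asarray(points_2d, dtype=np.float64).reshape(-1, 2)
    xs = (pts[:, 0] - 0.5 * w) * scale
    ys = (pts[:, 1] - 0.5 * h) * scale
    zs = np.zeros_like(xs)  # every point lies on the plane
    return np.column_stack((xs, ys, zs))

# Hypothetical reference image, 400 pixels wide and 200 pixels high.
image_size = (400, 200)

# The object's vertices: the four corners of the image.
corners_2d = [(0, 0), (400, 0), (400, 200), (0, 200)]
vertices_3d = map_pixels_to_plane(corners_2d, image_size)

# The same mapping applies to any feature location, such as the center.
center_3d = map_pixels_to_plane([(200, 100)], image_size)
```

Because the vertices and the feature locations go through the same function, they end up in one consistent 3D coordinate system, which is what a pose solver such as cv2.solvePnPRansac needs when it pairs 3D model points with 2D points found in a camera frame.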

Table of contents

- Title Page

- Copyright and Credits

- Dedication

- About Packt

- Contributors

- Preface

- Setting Up OpenCV

- Handling Files, Cameras, and GUIs

- Processing Images with OpenCV

- Depth Estimation and Segmentation

- Detecting and Recognizing Faces

- Retrieving Images and Searching Using Image Descriptors

- Building Custom Object Detectors

- Tracking Objects

- Camera Models and Augmented Reality

- Introduction to Neural Networks with OpenCV

- Appendix A: Bending Color Space with the Curves Filter

- Other Books You May Enjoy
