eBook - ePub

Mind, Machine and Morality

Toward a Philosophy of Human-Technology Symbiosis

- 202 pages

- English

- ePUB (mobile friendly)



About this book

Technology is our conduit of power. In our modern world, technology is the gatekeeper deciding who shall have and who shall have not. Either technology works for you or you work for technology. It shapes the human race just as much as we shape it. But where is this symbiosis going? Who provides the directions, the intentions, the goals of this human-machine partnership? Such decisions do not derive from the creators of technology, who are enmeshed in their individual innovations. Nor do they come from our social leaders, who possess only enough technical understanding to react to innovations, not to anticipate or direct their progress. Nor is there evidence of some omnipotent 'invisible hand'; the simple fact is that no one is directing this enterprise. In Mind, Machine and Morality, Peter Hancock asks questions about this insensate progress and has the temerity to suggest some cognate answers. He argues for the unbreakable symbiosis of purpose and process, and examines the dangerous possibilities that emerge when science and purpose meet. Historically, this work is a modern-day child of Bacon's hope for the 'Great Instauration.' However, unlike its forebear, this work focuses on human-machine systems. Its emphasis rests on the conception that the active, extensive face of modern philosophy is technology. Whatever we are to become is bound up not only in our biology but also, critically, in our technology. And to achieve rational progress, we need to articulate manifest purpose. This book is one step along the purposive road. Drawing together his many seminal writings on human-machine interaction and adapting these works specifically for this collection, Peter Hancock provides real food for thought, delighting readers with his unique philosophical perspective and outstanding insights. This is theoretical work of the highest order and will open minds accordingly.

Mind, Machine and Morality by Peter A. Hancock is available in PDF and/or ePUB format and is catalogued under Computer Science & Human-Computer Interaction.


Chapter 1

The Science and Philosophy of Human-Machine Systems

Science above all things is for the uses of life.

(Francis Bacon)

Preamble

This first chapter develops a theoretical structure for the science of human-machine systems. This structure is based on the premise that technology is the principal method through which humans expand their ranges of perception and action in order to understand and control the world around them. The theory presents a broad rationale for the contemporary impetus in human-machine systems development and the historical motivations for its growth. Unlike any other interdisciplinary fusion of knowledge, the science of human-machine systems is more than a convenient collaboration among proximal areas of knowledge. Through the identification of opportunities and constraints that derive from the interplay of human, machine, task, and environment, I point to this area of study as the vital bridge between evolving biological and non-biological forms of intelligence. Without such a bridge, we will see the certain demise of one and the fundamental impoverishment, if not the extinction, of the other.

Introduction

THE SECRET OF MACHINES

We can pull and haul and push and lift and drive,

We can print and plough and weave and heat and light,

We can run and race and swim and fly and dive,

We can see and hear and count and read and write.

But remember please, the Law by which we live,

We are not built to comprehend a lie.

We can neither love nor pity nor forgive –

If you make a slip in handling us, you die.

(Rudyard Kipling)

Humans and Technology

Rudyard Kipling’s The Secret of Machines is as apt for the supervisor of modern-day complex systems as it was for the individual factory worker of Kipling’s own day. Slips and errors in handling machines can, and frequently do, lead to death. Yet we have built a global society whose dependence on technology grows daily. The way in which humans and machines integrate their actions lies at the very heart of this development. The emerging science of human-machine systems seeks to maximize the benefit derived from technology while exercising continual vigilance over its darker side and its dangerous potentialities. Its aim is to turn human-machine antagonism into human-machine synergy.

Traditionally, the study of humans and machines has been represented simply as a discipline that makes technology more appropriate or palatable for human consumption. It has also been represented as a means of forcing the human to adapt to the machine, as was foretold in the film Metropolis. However, this is a largely reactive interpretation, one usually invoked only after some spectacular technological disaster has rendered this perennial issue momentarily ‘newsworthy’. My purpose here is to take a proactive perspective and to represent this area of study as one that motivates all of science, engineering, and indeed the systematic empirical exploration of the human condition itself in the first place.

As the quotation from Francis Bacon at the start of this chapter implies, in order to understand the motivation for science and its material manifestation in technology, we must first have a clear vision of what Bacon’s ‘uses of life’ are. We need to understand how people use their capacities for perception, cognition, and action to decide on specific goals and then carry out meaningful and useful tasks in the pursuit of those goals. As well as a fundamental examination of human purpose, then, this effort demands a rational analysis of tasks themselves, a psychological analysis of human behaviour and capability, and an engineering analysis of how humans interact with the tools and systems they have created to accomplish these tasks.

Therefore, my first task is to address how humans use technology in the goal- and task-oriented exploration and manipulation of their environment. It was Powers (1974, 1978) who asserted that goal-directed behaviour is organized through a hierarchy of control systems (but see also Lashley, 1951). Higher-order systems receive input from, and subsequently control, an assemblage of lower-order systems, and it is these lower-order systems that interact directly with the external world. One of Powers’ central points is that the flow of control is bi-directional, with control flowing upward from lower-order systems as much as it flows downward from higher-order systems. Human activity has thus been characterized as an inner loop of skilled manual control and perceptual processing embedded within an outer loop of control that, among other capacities, features knowledge-based problem solving. Moray (1986), for instance, gives the example of a nested series of goals working from an extreme outer loop of very general goals (such as influencing society and raising children) to extreme inner-loop processes (such as controlling the momentary position of a vehicle’s steering wheel in order to negotiate a curve in the road). These different temporal and spatial scales of perception-action can serve to frame human exploration as a whole.

Unaided by any tools or instruments, human perception and action are necessarily limited. However, with the birth of technology, and its growth in each succeeding generation, the bounds of these respective capabilities have expanded and are in constant redefinition. To describe this historical line of progress, I start here with a description of the limits to unaided action and unaided perception. First, though, we must recognize that the limit of human perception has always exceeded that of human action. We have always been able to see further than we can control. Imagine you are standing on the top of a high hill. On a clear day you might well be able to see more than thirty miles into the distance, and yet, without technical assistance, you can exercise physical control over only the few square yards of that spreading vista that surround you. The ‘tension’ that results from this disparity between what can be perceived and what can be controlled provides the major motivational force for human exploration. It is a major theme in the theoretical position I develop in this book. Indeed, the presence of this tension between perception and action may well underlie the fact that astronomy was arguably our first science, although geometry for agriculture may have evolved in parallel. The long days anticipating the harvest and the long nights contemplating the vagaries of the wandering stars and the fiery messengers they contained might well have started human beings on the road to formalized observation (Koestler, 1959). Thus the link between perception and action may explain how we humans explore the environment, whereas it is the gap between the powers of perception and action that may explain why we humans explore the environment.

In regard to this exploration, technological innovations often generate a dual effect. That is, new technologies increase the range of our actions while simultaneously expanding the range of our perception. The further these respective bounds are extended from our everyday experience, the more complex the technical systems needed to support such exploration. At the point where even aided perception starts to become inadequate (and this occurs at the very edges of our understanding), increasingly greater reliance is placed on metaphorical representations of the spaces involved. The most obvious example lies at the limits of celestial mechanics and quantum mechanics, where it is indeed largely metaphor that we are dealing with. In elaborating this overall theme of expanding ranges of perception and action, I use a Minkowskian framework to describe the evolving vista of capabilities (see Moray and Hancock, 2009). For example, leaps of progress such as the genesis of tools (Oakley, 1949) and the more recent advent of ‘intelligent’ orthotics can be captured and expressed easily using this form of description.

There is, however, a dissonance when we contrast the progress of technological innovation with the advance not of perception and action, but of human nature itself. The latter appears to have changed very little across recorded history, while technology changes almost daily. The result is an ever-increasing potential for catastrophic disconnection or, more colourfully, for the moral dilemma of exercising ‘the power of gods with the minds of children’. There are strong constraints on human nature, but we are rapidly augmenting our basic perception-action abilities as we systematically explore and engineer our environment. Such environments serve to ‘create’ our future selves, and we have seen more radical changes across the last two or three generations than across the fifty generations before them. The future of human beings is now bound to the co-evolution of biological and non-biological (computational) forms of life. This in turn implies the need to regard the goal-oriented interaction between humans and the perceivable environment as the basic unit of analysis (see Flach and Dominguez, 1995).

Perception and Action in Space and Time

The personal and collective odyssey of humankind has been to find and establish our place and role in the universe. While this journey might be considered from one perspective as a spiritual endeavour, my focus here is on the goal-oriented use of technology as a process to provide mastery over the environment. While the environment is best measured by the physical metrics of space and time, the exploration of space and time is motivated by personal and collective goals that can be expressed as our desire for certain future states of our world. Our success or failure with respect to these goals is evaluated by the perceptual experiences that they engender. In this overall process, our behavioural strategies can be defined as the particular ways of achieving these desired goals. In contrast to strategies, tasks represent a finer-grained level of action, closer to the centre of the nested loops referred to earlier. Tasks are the steps by which strategies work toward goals (see also Shaw and Kinsella-Shaw, 1989). While a goal is a desired future state, strategies and tasks are supportive elements that provide the specific transitional steps to achieve that goal. In the case of a task, the transformation is explicitly an energetic one. That is, a task is achieved only through a formal change to the physical state of the world. Goal achievement relies on the success of strategies, which are themselves composed of the successful and integrated completion of more than one task. From a thermodynamic perspective, tasks typically result in a reduction of local entropy (Swenson and Turvey, 1991) and the expenditure of energy toward a more ordered state of any sub-system. The idea of ‘progress’ is implied by the fact that transformations take time to occur. Therefore, the simple perpetuation of a system without any goal-directed alteration cannot be regarded as a task within this definition.

The demands a task places on an operator, or the cost of performing the required transformation, can only be measured with respect to what is to be achieved. That is, a task is a relational concept. In human performance such costs are typically expressed as a function of the time taken to pass from an initial state to a following state and the accuracy with which that transition is achieved (that is, speed and accuracy). Transformation cost can also be expressed in terms of the cognitive effort or the muscular energy involved. Machines, as transformers of energy, act to increase the number of paths (or successful strategies) by which a goal can be achieved. Technology thus serves to broaden the horizon of achievable goal states (for example, an astronaut’s presence in outer space).

While technology serves to open up windows of opportunity, environmental circumstances often serve to constrain and limit the goals that can be achieved (for example, an astronaut’s presence on Mars, which remains out of reach at present). However, one hallmark of expertise is the ability to project what future environmental constraints might be and to seek ways to ‘navigate’ around them. The environment also presents unexpected and unanticipated constraints that interrupt ongoing tasks and strategies and can, under certain circumstances, remove the desired goal from the range of possible outcomes. With respect to goals, then, human-machine systems seek to expand ranges of possible achievement, while the environment can act to restrict them, although it can occasionally present surprising opportunities also. Unfortunately, it is the antagonistic aspect of the interaction between the human and the environment that has permeated much of the history of design. The idea of ‘conquering’ nature, although predominantly of Occidental origin, has grown into a global preoccupation (see McPhee, 1989). Thus technological systems are often shaped to ‘conquer’ and ‘control’ the environment, rather than to recognize and harmoniously incorporate relevant, intrinsic constraints. Ultimately, it is the ability to recognize and benefit from these mutual constraints and limitations to action that characterizes ‘intelligence’ on the part of human-machine systems. Although few such systems operate at present, there remains hope and promise for their fulfilment in the future.

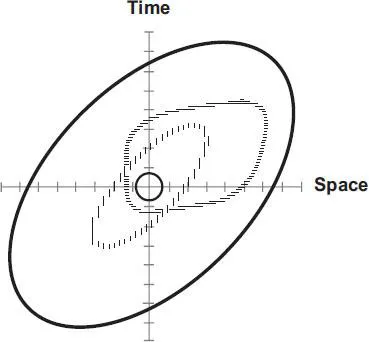

Goal-oriented behaviour is thus initiated in part by reacting to environmental constraints that limit the range and effectiveness of perception and action. The limitations of perception and action can be considered initially within a framework that views space and time as orthogonal axes. The environment may be scaled from the very small to the very large and from the very brief to the extremely prolonged. Within these continua there are ranges of space and time that relate most closely to our own physical size and our own perception of a lifetime’s duration (Hancock, 2002). One representation of this in terms of orders of magnitude is illustrated in Figure 1.1 (see Hoffman, 1990).

Human-range (the innermost circle) illustrates the limit of unaided human action (for example, throwing a javelin). Perceptual-range (the horizontal-lines envelope) illustrates the range of unaided human perception (for example, looking into the night sky). Orthotic-range (the vertical hashed-lines envelope) shows how the range of human action increases vastly with the addition of technology. Universal-range (the outermost envelope) illustrates the paradox that while technology expands the range of action, it is vastly more effective in increasing the range of perception (for example, the Hubble telescope). These envelopes are expressed as functions of space and time. These regions are illustrative approximations and are not drawn to represent definitive boundaries, which, given the dynamic nature of technology, would change on almost a daily basis anyway.

In identifying our own location in the universe, we humans almost always place ourselves at the centre. The history of the science of astronomy, for example, can be seen as an account of our progressive physical displacement from this notion of a central position. In particular, the step from an earth-centred t...

Table of contents

- Cover

- Half Title

- Title Page

- Copyright Page

- Table of Contents

- List of Figures

- List of Tables

- Preface

- Acknowledgements

- 1 The Science and Philosophy of Human-Machine Systems

- 2 Teleology for Technology

- 3 Convergent Technological Evolution

- 4 The Future of Function Allocation

- 5 The Sheepdog and the Japanese Garden

- 6 On the Future of Work

- 7 Men Without Machines

- 8 Life, Liberty, and the Design of Happiness

- 9 Mind, Machine and Morality

- Permissions

- References

- Index