- 196 pages

- English

- ePUB (mobile friendly)

Genetics and DNA Technology: Legal Aspects

About this book

First published in 2005. This second edition of Genetics and DNA Technology retains the original's focus on professional lawyers. It is not a law textbook but an adjunct for those who are studying law or who want to become forensic scientists.

By Wilson Wall

CHAPTER 1

THE HISTORICAL CONTEXT OF PERSONAL IDENTIFICATION

INTRODUCTION

This chapter will introduce the historical context of deoxyribonucleic acid (DNA) profiling and of its predecessors in personal identification: fingerprints and blood group analysis. The range of possible uses will be described, but not the techniques used to analyse body fluids; these will be described in later chapters. The different techniques of DNA analysis will be dealt with in their historical context, from the first systems, which are no longer used, to the next generation of analysis, which will become available in the next few years.

Without doubt the easiest way to identify an individual is to ask them who they are, or to ask someone who knows them, but in reality this is not always possible or reliable. Consequently, a great industry has arisen over the course of history devoted to finding methods that pinpoint unique characteristics in an individual, and therefore the individual themselves.

This developing need for reliable systems of identification seems to mirror the rise of urbanised societies. It results both from an increase in the size of social groups, as small villages disappeared, and from a reduction in social contact within the larger group. People may have more acquaintances, but fewer individuals who could be relied on to identify them unequivocally.

Early methods of recognition relied on passwords and signs, but this only gave recognition of membership of a society or club, not recognition of the individual per se. The holder of the sign could still claim to be anybody they wanted to.

An effective system of identification should therefore have four basic features:

- for any individual it should be fixed and unalterable;

- the measured feature should be present in every individual in some form;

- ideally it should be unique to an individual;

- it should be recordable in a manner that allows for comparison.

1.1 Bertillonage

An early attempt to identify an individual unambiguously was made by Alphonse Bertillon (1853–1914). Both his father and his brother were statisticians in France, which could well account for the direction his police career took. He was appointed chief of the Identification Bureau in Paris, and it was while there that he developed a system which came to be known as ‘Bertillonage’, or sometimes ‘anthropometry’, which he introduced in 1882. It was a useful attempt but, as we shall see, one flawed by the very intractability of measuring biological systems. His system required measurements of various parts of the body, together with notes of scars and other body marks, as well as personality characteristics. The measurements were:

- height standing;

- height sitting;

- reach from fingertip to fingertip;

- length and width of head;

- length and width of right ear;

- length of left foot;

- length of left middle finger;

- length of left little finger;

- length of left forearm.
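A Bertillon record can be pictured as a fixed set of numbers compared against a file of earlier records. The Python sketch below is not Bertillon's own procedure: the field names, the single 0.5 cm tolerance and the example values are all hypothetical. It shows the choice every such system must make: too tight a tolerance and two measurements of the same person fail to agree, too loose and different people agree.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class BertillonCard:
    """The eleven measurements from the list above, in centimetres (field names are hypothetical)."""
    height_standing: float
    height_sitting: float
    reach: float
    head_length: float
    head_width: float
    right_ear_length: float
    right_ear_width: float
    left_foot_length: float
    left_middle_finger_length: float
    left_little_finger_length: float
    left_forearm_length: float


def cards_agree(a: BertillonCard, b: BertillonCard, tolerance_cm: float = 0.5) -> bool:
    """True if every measurement on the two cards differs by no more than tolerance_cm.

    The 0.5 cm default is an illustrative assumption, not a historical value.
    """
    return all(
        abs(getattr(a, f.name) - getattr(b, f.name)) <= tolerance_cm
        for f in fields(BertillonCard)
    )


# Invented example values for one person, then a re-measurement with a small difference in reach.
recorded = BertillonCard(178.0, 92.0, 182.0, 19.5, 15.5, 6.2, 3.5, 26.5, 11.5, 8.8, 46.0)
remeasured = replace(recorded, reach=182.3)
print(cards_agree(recorded, remeasured))  # True
```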

Bertillon was also keen on the analysis of handwriting, and in 1894 he was asked to give his opinion on the origin of a handwritten document that was to become pivotal in the infamous Dreyfus case. Alfred Dreyfus (1859–1935) was a captain in the French army when, in 1894, a judicial error left him imprisoned for several years. The only significant evidence against him was a letter whose content directly implicated its writer, a French officer, in betraying his country. The letter was claimed to have been written by Dreyfus, an assertion that was accepted on the testimony of Alphonse Bertillon, then chief of the Identification Bureau in Paris, as an expert in handwriting. It was later shown that the incriminating document had been written by another officer. The ensuing attempts to cover up the error led to yet another officer being imprisoned on a trumped-up charge, and to Emile Zola being sentenced to a year’s imprisonment for his now famous open letter to the President of the Republic, which began: ‘J’accuse’. Zola avoided his sentence by fleeing to England. Dreyfus was eventually reinstated and was promoted to lieutenant colonel during the First World War.

Bertillonage was dogged by two related problems, both of which must be considered whenever any system of identification is used: accuracy and precision. The difference between the two is important. If repeated measurements are made on the same object, the degree to which the results are scattered about their own mean is the precision, while the closeness of that mean to the true value is the accuracy.
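The two definitions can be turned into two numbers. In this minimal Python sketch, accuracy is expressed as the bias (mean minus true value) and precision as the sample standard deviation about the mean; these are common choices rather than the only possible ones, and the ear measurements are invented for illustration.

```python
from statistics import mean, stdev


def accuracy_and_precision(measurements, true_value):
    """Return (bias, spread) for repeated measurements of one quantity.

    bias   -- mean minus true value; a small bias means high accuracy
    spread -- sample standard deviation about the mean; a small spread means high precision
    """
    return mean(measurements) - true_value, stdev(measurements)


# Hypothetical repeated measurements of an ear known to be 6.0 cm long.
tight_but_offset = [6.4, 6.5, 6.6, 6.5]  # tightly grouped, but consistently too long
wide_but_centred = [5.5, 6.5, 5.8, 6.2]  # widely scattered, but centred on the true value

for name, xs in [("tight_but_offset", tight_but_offset), ("wide_but_centred", wide_but_centred)]:
    bias, spread = accuracy_and_precision(xs, 6.0)
    print(f"{name}: bias {bias:+.2f} cm, spread {spread:.2f} cm")
```

The first set is precise but inaccurate; the second is accurate but imprecise, which is why a single figure such as "the measurements agreed" says little on its own.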

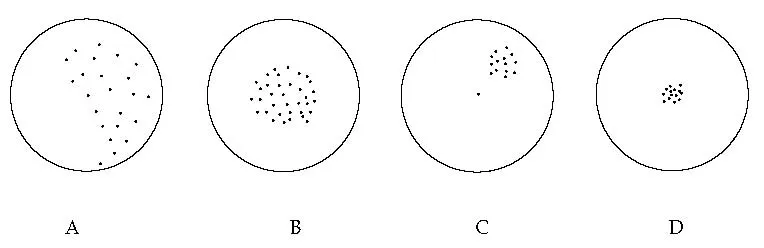

Put another way, using the analogy of a shooting target: if the shots are spread all over the target and their centre lies away from the bullseye, both accuracy and precision are low. If the shots are widely scattered but centred on the bullseye, the accuracy is high but the precision low; if they are grouped tightly to one side of the centre, the precision is high but the accuracy low. From this we can see that it is possible to obtain an accurate result from repeated measurements of low precision, as long as the measurements are not systematically distorted in one direction. This is shown diagrammatically in Figure 1.1.

These two kinds of error are also referred to as ‘systematic’ error, which reduces accuracy, and ‘statistical’ error, which reduces precision. Repeated measurements can go some way to alleviate statistical errors, but systematic errors are more difficult to control because they distort every result in the same direction. Systematic errors make comparing results from one instrument or observer with those from another very difficult, if not impossible. So, besides being expensive and requiring officers specially trained to take the measurements, Bertillonage had a further weakness: the probability of two individuals sharing the same measurements was unknown. For these reasons, and because the measurements were complex and difficult to repeat, fingerprints, being much easier to use, gradually replaced Bertillonage completely, and they have been the personal identification system of choice for over a century.

Key

A – Large statistical and systematic error so low precision and low accuracy.

B – Large statistical error but no systematic error so low precision but high accuracy.

C – Small statistical error but large systematic error so high precision but low accuracy.

D – Small statistical error and no systematic error so high precision and accuracy.

Figure 1.1
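The claim that repetition helps with statistical error but not with systematic error can be checked by simulation. This Python sketch models each panel of Figure 1.1 as a true value plus a fixed offset (systematic error) plus Gaussian noise (statistical error); the Gaussian model, the offsets and the noise levels are assumptions chosen only for illustration.

```python
import random
from statistics import mean, stdev

TRUE_VALUE = 100.0

# Panels A-D of Figure 1.1 as (statistical error SD, systematic offset); values are illustrative.
CASES = {
    "A": (2.0, 3.0),  # large statistical and systematic error
    "B": (2.0, 0.0),  # large statistical error, no systematic error
    "C": (0.2, 3.0),  # small statistical error, large systematic error
    "D": (0.2, 0.0),  # small statistical error, no systematic error
}


def simulate(true_value, random_sd, systematic_offset, n, seed=0):
    """Return n simulated measurements: true value + fixed offset + Gaussian noise."""
    rng = random.Random(seed)
    return [true_value + systematic_offset + rng.gauss(0.0, random_sd) for _ in range(n)]


for label, (sd, offset) in CASES.items():
    for n in (10, 10_000):
        xs = simulate(TRUE_VALUE, sd, offset, n)
        print(f"{label}, n={n:>6}: error of the mean {mean(xs) - TRUE_VALUE:+.3f}, spread {stdev(xs):.3f}")
```

With many repetitions the mean in panels B and D settles on the true value, while in panels A and C it settles on the wrong value, however many measurements are taken.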

1.2 Fingerprints

The mechanism by which fingerprints are inherited is unknown. Identical twins, who share all of their DNA, can be distinguished by their fingerprint patterns. This alone would suggest that genetic inheritance has little or nothing to do with fingerprints. Other evidence, however, suggests otherwise. Individuals with gross genetic abnormalities, that is, additional whole chromosomes as in Down’s syndrome, tend to have very distinctive ridge patterns on both the fingers and the palms of the hands. These are so distinctive that they alone can be used to distinguish between the few such conditions which are compatible with life. From this we can say that there is both a genetic component and an environmental one. How these influences interact is unknown; what is known is that, once formed, the pattern does not change with time or damage.

Fingerprints have a very ancient history as marks of individuality, but usually without formal measurement. The Chinese used thumbprints to verify bank notes. A thumbprint was made half on the note itself and half on the counterfoil, giving a reliable method of matching the two. The English engraver, Thomas Bewick, was sufficiently impressed by the delicate tracery of his fingerprints that he produced engravings of two of his fingertips and used them as signatures to his work.

The constancy of fingerprints was first described by Johannes Purkinje, a Czech scientist, in a paper he read at a scientific meeting in 1823. It was, however, much later that the foundation of modern fingerprinting was laid, with the publication in 1892 of Finger Prints (London: Macmillan) by Sir Francis Galton. Before this, in the late 1870s, one of the first reliable uses of fingerprinting to solve a crime was made. Henry Faulds, a Scottish physician, had amassed a large collection of fingerprints while working in Tokyo. Because of his known interest and expertise, he was asked to help solve a crime in which the perpetrator had left a sooty fingerprint. Faulds was able to demonstrate that the man originally arrested for the crime was innocent, and when another suspect was arrested, he showed that this man’s fingerprints matched those left at the scene. While the method used by Faulds was deemed adequate at the time, it was quickly realised that formalising both the methods of fingerprint analysis and the principles on which it was based was of great importance. In 1902, the London Metropolitan Police set up the Central Fingerprint Branch, and by the end of that year 1,722 identifications had been made.

The idea that every contact between two objects leaves a trace is sometimes called Locard’s Principle, after Edmond Locard (1877–1966), a French forensic scientist and renowned fingerprint expert who first formalised the idea. The principle can be extended beyond fingerprints to cover any material from an assailant recovered from the victim, whether as a result of resistance, as with blood or skin scratched from the attacker, or, in the case of rape, semen left as a direct result of the assault. This, however, is probably as far as such a principle should be taken, since contact between two non-shedding surfaces, such as some man-made materials, may well leave no trace. There is also the question of traces left in quantities too small to be detected with contemporary methods. Fingerprints represent a form of detectable transfer evidence.

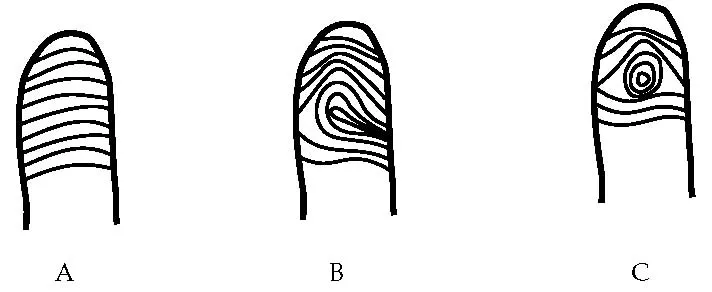

Key

A – Arch.

B – Loop.

C – Whorl.

Figure 1.2

1.2.1 The nature of fingerprints

Broadly speaking, the ridge patterns found on fingertips come in three forms, each with a recognised frequency in the population. It is worth noting that individuals with abnormal chromosome complements, such as those with Down’s syndrome, have very different frequencies of these patterns, which can be used as an aid to diagnosis of the condition. The different types and their normal frequencies are:

- loops (70%);

- whorls (25%);

- arches (5%).

These different pattern types are shown in Figure 1.2.
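One common way to express how well a feature separates people is its discrimination power: the probability that two people chosen at random differ in that feature, 1 minus the sum of the squared frequencies. The Python sketch below applies it to the three frequencies listed above, assuming the two people are unrelated and are independent draws from the population; it illustrates why pattern type alone cannot identify anyone.

```python
# Normal population frequencies of the three basic pattern types, from the list above.
PATTERN_FREQUENCIES = {"loop": 0.70, "whorl": 0.25, "arch": 0.05}


def discrimination_power(freqs):
    """Probability that two randomly chosen, unrelated people differ in the feature.

    Assumes independent draws from the population: 1 - sum(p_i ** 2).
    """
    return 1.0 - sum(p * p for p in freqs.values())


print(f"{discrimination_power(PATTERN_FREQUENCIES):.3f}")  # 0.445
```

On these figures, two people picked at random would have the same pattern type on a given finger more often than not, so the ridge detail discussed below has to do almost all of the work.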

The seemingly straightforward nature of fingerprints should be looked at with an open mind because, as we shall see, results are not always unambiguous.

Most countries maintain collections of fingerprints, which serve two purposes: the first is to identify an individual so that they can be associated with past convictions and to determine if they are required to answer further charges associated with other crimes; the second is to find out whether marks left at a crime scene can be associated with a known person and therefore reduce the number of suspects that need to be looked for.

When a fingerprint, or set of prints, from an individual is compared with the records in a database, there will normally be a good, clear set of prints in the collection from a previous encounter, as well as a clear set from the individual being checked. The difficulties arise when a scene-of-crime mark is compared with a database, because the mark will usually be partial or smudged, so that only certain features can be compared. The process is assisted by computers once a fingerprint expert has designated the characteristics of the fingerprint. It is the conventions used to characterise fingerprints, and the assumptions underlying them, that might give cause for concern.

The first thing we should remember about any method of personal identification is that unless it has adequate discriminatory power it will not do its job. Fingerprints are very good at separating individuals, of that there is no doubt, but two questions are asked about them which we should consider before looking at the systems commonly used to measure them. The first is ‘are fingerprints unique?’ and the second is ‘how many different fingerprints are there?’. These may seem contradictory, for if a number can be put to the range of different fingerprints, it might seem that a fingerprint cannot be unique. Similarly, if a fingerprint is unique, it would seem reasonable to assume that an infinite range of fingerprints is possible. However, if we take the two questions separately, it is possible to answer both without contradiction.

Is a fingerprint unique? There are two answers to this. At any given time it is probably true to say that no two people share the same fingerprint, so in a temporal sense they are unique; but in absolute terms they are most definitely not. There are also two reasons for this – one is that the possibility of two people sharing a fingerprint by chance alone cannot be absolutely ruled out, and the second reason bears on our other question: how many fingerprints are possible? For this we have to realise that, contrary to common belief, there is not an infinite variety of fingerprints available. To understand this we need to think a little more about the difference between a large number and infinity. Infinity is essentially a mathematical construct, and it really has no place in our physical world. We can say that a straight line is the circumference of a circle of infinite radius, but no such circle exists. On the other hand, even if all the atoms in the universe were rearranged in all the different ways possible, there would still not be an infinite number of permutations. The number would be very large, indeed incalculably large, but it would still be a number, a finite figure. So, in a finger of finite dimensions (however big or small it is) there is a finite number of atoms, and even if we could detect the difference of a single atom there would still be a finite number of different fingerprints possible. To bring this down to more manageable levels, it is only the ridges that are looked at on a fingerprint, with the result that there is very definitely a fixed number of possibilities available. Since fingerprint comparisons are made subjectively, only using certain features, the possibility of similar fingerprints being mistakenly thought to have come from the same person becomes a much more realistic one.
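The argument that a finite print allows only a finite number of patterns, and that chance sharing cannot be ruled out, can be made concrete with a toy model. In this Python sketch a print is divided into a hypothetical number of cells, each either empty or holding one of seven feature kinds (the variants named in Figure 1.3). The chance that at least two prints share a pattern uses the birthday-problem approximation, which assumes every pattern is equally likely and prints are independent; both assumptions are idealisations, and all the numbers are invented.

```python
import math


def possible_patterns(cells, feature_types):
    """Number of distinct prints in a toy model where each of `cells` regions
    is either empty or holds one of `feature_types` kinds of feature."""
    return (feature_types + 1) ** cells


def chance_of_shared_pattern(prints, patterns):
    """Approximate probability that at least two of `prints` share a pattern,
    if all `patterns` were equally likely (birthday-problem approximation)."""
    return -math.expm1(-prints * (prints - 1) / (2 * patterns))


prints = 8_000_000_000  # hypothetical: roughly one print per person alive
fine = possible_patterns(cells=100, feature_types=7)   # many features compared
coarse = possible_patterns(cells=20, feature_types=7)  # only a few features compared
for name, n in [("fine", fine), ("coarse", coarse)]:
    print(f"{name}: {n:.3e} patterns, chance of a shared pattern {chance_of_shared_pattern(prints, n):.3g}")
```

Both counts are finite. When many features are compared, a shared pattern is possible but vanishingly unlikely; when only a few features are compared, a chance correspondence somewhere in the population becomes almost certain.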

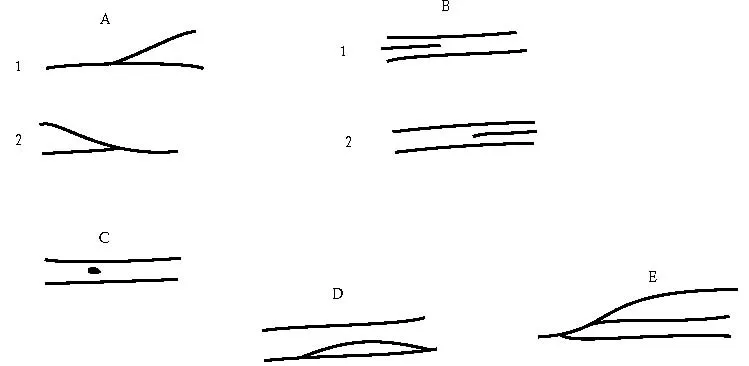

When fingerprints are used for identification, they are normally classified into the basic patterns shown in Figure 1.2, with the addition of compounds of these patterns and consideration of any scarring that may be present. After this, the ridge count between features can be used. It is the ridges, or the gaps between them, that are counted rather than distances measured, because the count is largely independent of pressure: the distance between features might change with the pressure exerted by the finger leaving the print, but the ridge count will not. Features, also called typica, may vary from system to system, but for any database of fingerprints to be useful it must at least be consistent in what it defines as features. Some of these are shown in Figure 1.3, although this should not be taken as a definitive list.

By measuring features relative to each other, a numerical picture can be created which can be compared from print to print. Different countries use different systems to evaluate fingerprints for comparison. For example, the Netherlands uses 12 points while the UK uses 16 points. By assuming that features arise effectively at random and independently of each other, it is possible to generate databases of tens of millions of fingerprints, all of which can be distinguished using these systems. There is no doubt that great weight is given to fingerprint evidence, and quite rightly so, but as we shall discuss next, there is still a need for caution when faced with this evidence because interpretation is not necessarily exact or entirely objective.

It is in practical analysis that problems may arise with fingerprints. Two impressions from the same finger may appear to differ quite markedly because of the pressure with which they were made, the amount of material already on the finger obscuring the ridges or, when a formal fingerprint is taken, the amount of ink used. This potential variation in the fingerprint, and therefore in its interpretation, can be seen most clearly in the typica termed a ‘dot’ and a ‘ridge ending’. Unless the print is of very high quality, not only could a dot disappear, but a dot could be artificially produced by a ridge ending having a stutter. To allow for this, the decision as to whether two features match is normally left to the fingerprinter. Relying on the skill of the fingerprinter to decide matches between features is, therefore, perhaps more subjective than is desirable.
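Why a ridge count survives changes in pressure while a distance does not can be shown with a one-dimensional toy model. In this Python sketch a line drawn between two features is sampled as 1 (ridge) or 0 (furrow), and extra pressure is modelled, crudely, as every sample being widened by the same factor; real distortion is uneven, and the profile itself is invented.

```python
def ridge_count(profile):
    """Count the ridges crossed along a sampled line: each run of 1s counts once."""
    count, previous = 0, 0
    for sample in profile:
        if sample == 1 and previous == 0:
            count += 1
        previous = sample
    return count


def stretch(profile, factor):
    """Crude model of a firmer impression: every sample is widened `factor` times."""
    return [s for s in profile for _ in range(factor)]


line = [0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0]   # hypothetical profile between two features
pressed = stretch(line, 2)
print(len(line), ridge_count(line))        # 11 3
print(len(pressed), ridge_count(pressed))  # 22 3
```

The distance between the two features doubles, but the number of ridges between them stays at three.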
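Point counting against a fixed standard can be sketched as follows. This Python example is a deliberately simplified, hypothetical scheme, not any bureau's actual method: the two prints are assumed to be already aligned, each feature is reduced to a kind and a position, two features "match" if they are of the same kind and lie within an assumed 0.5 mm of each other, and an identification is declared if the number of matches reaches a threshold such as 12 or 16. Treating features as independent and exactly located is precisely the assumption the text cautions against.

```python
import math
from typing import List, NamedTuple


class Minutia(NamedTuple):
    kind: str  # e.g. "ridge_ending", "fork", "dot", "island" (hypothetical labels)
    x: float   # position in millimetres; prints are assumed to be already aligned
    y: float


def matching_points(mark: List[Minutia], reference: List[Minutia], tolerance_mm: float = 0.5) -> int:
    """Greedily pair each feature in `mark` with an unused feature of the same kind
    in `reference` lying within `tolerance_mm`; return the number of pairs."""
    unused = list(reference)
    matched = 0
    for m in mark:
        for r in unused:
            if r.kind == m.kind and math.hypot(r.x - m.x, r.y - m.y) <= tolerance_mm:
                unused.remove(r)  # safe: we stop iterating immediately afterwards
                matched += 1
                break
    return matched


def declare_identification(mark, reference, threshold=16, tolerance_mm=0.5):
    """Apply a fixed numerical standard such as 12 or 16 matching points."""
    return matching_points(mark, reference, tolerance_mm) >= threshold


# Invented data: a 16-feature reference print and a partial, slightly shifted mark showing 13 of them.
reference = [Minutia("ridge_ending" if i % 2 else "fork", float(i), float(i % 4)) for i in range(16)]
mark = [Minutia(p.kind, p.x + 0.2, p.y) for p in reference[:13]]
print(matching_points(mark, reference))                       # 13
print(declare_identification(mark, reference, threshold=12))  # True
print(declare_identification(mark, reference, threshold=16))  # False
```

The same mark is an identification under a 12-point standard and not under a 16-point one, which is the tension the following paragraphs discuss.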

Key

A – Forks (1) right fork (2) left fork.

B – Ridges (1) ending left (2) ending right.

C – Dot.

D – Island.

E – Trifurcation.

Figure 1.3

Fingerprint evidence has acquired an aura of infallibility which is not wholly justified. While the number of matching points required is laid down, the decision as to whether a match between two points is valid rests with the fingerprint specialist. The fingerprint technician does not need to understand the theoretical basis of his work to produce a result that can be taken into court and, as we shall see when we deal in detail with DNA evidence, this is also true of far more complex systems. It has been pointed out that there is no absolute value for the number of matching points needed to declare that two fingerprints match, so the declaration remains a matter of the fingerprinter’s opinion. There is also a scientific debate about fingerprints which may seem academic, but which does raise suspicions in scientific circles. Without a calculated error rate, that is, the rates of incorrectly declared matches and of incorrectly declared exclusions, it is virtually impossible to regard a set of results as reliable. Not stating an error rate is not the same as having a zero error rate, and a claimed zero error rate would itself be highly suspicious.

This has two potentially conflicting consequences. The first is that it may be possible to challenge the opinion of one expert with that of another; the second is that a print without enough matching points may deprive the court of probative information. Fingerprint evidence is rarely considered in isolation, so it could be said that a partial print, not necessarily conforming to an arbitrarily set standard, may give the court additional information in assessing a case. However, even if the fingerprint expert can demonstrate a clear understanding of the theory of point matching and the construction of probabilities, the very fact that such standards are set means that not adhering to them could be seen as an invitation to contest the result by calling an alternative fingerprint expert.
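An error rate of the kind described above can only be calculated from comparisons where the true answer is known, for example a validation study using pairs of prints of known origin. This Python sketch computes the two rates from such a study; the study figures are invented and are not results from any real study. It also applies the statistical "rule of three", an approximation giving a 95% upper bound of about 3/n on the true rate when no errors are seen in n independent trials, to show why observing no false matches does not establish a zero error rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Comparison:
    same_source: bool     # ground truth, known from the design of the validation study
    declared_match: bool  # the examiner's conclusion


def error_rates(results):
    """Return (false_match_rate, false_exclusion_rate).

    Assumes the study contains at least one same-source and one different-source pair.
    """
    different = [r for r in results if not r.same_source]
    same = [r for r in results if r.same_source]
    false_matches = sum(r.declared_match for r in different)
    false_exclusions = sum(not r.declared_match for r in same)
    return false_matches / len(different), false_exclusions / len(same)


def upper_bound_if_no_errors(trials):
    """'Rule of three': approximate 95% upper bound on a rate when 0 errors occur in `trials`."""
    return 3 / trials


# Invented study: 200 different-source pairs (no false matches), 100 same-source pairs (4 missed).
study = (
    [Comparison(same_source=False, declared_match=False)] * 200
    + [Comparison(same_source=True, declared_match=True)] * 96
    + [Comparison(same_source=True, declared_match=False)] * 4
)
fmr, fer = error_rates(study)
print(f"false match rate {fmr:.3f}, false exclusion rate {fer:.3f}")
print(f"true false match rate could still be up to about {upper_bound_if_no_errors(200):.3f}")
```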

All of these arguments lead us to consider the possibility that point matching is a form of numerical tyranny, giving an objective veneer to what is essentially a matter of opinion. There is nothing wrong with relying upon opinion, so long as every opinion is a result of the same training, which is based on the same clear theoretical basis. Unfortunately, people differ and so do their opinions. So although the number of points of match is regarded as the lynchpin of reliability in fingerprints, opinions may well differ as to the number of points that need to match.

It may be desirable to automate the process of fingerprint identification. This has been tried before, but with limited success, the failure lying not with the performance of the available computers but with the way in which the software dealt with the problem. Similar dynamic problems of identification have been tackled in both speech recognition and handwriting interpretation. The advent of software based on neural networks, which are very much more complicated than conventional software algorithms, could solve the problem of consistency and finally bring a level of objectivity to the use of fingerprints which has not previously been possible....

Table of contents

- Cover Page

- Title Page

- Copyright Page

- Preface

- Glossary

- Introduction

- Chapter 1 The Historical Context of Personal Identification

- Chapter 2 An Ideal Sample

- Chapter 3 Blood Groups and Other Cellular Markers

- Chapter 4 DNA Analysis

- Chapter 5 Paternity Tests and Criminal Cases

- Chapter 6 DNA Databases

- Chapter 7 Ethical Considerations of DNA and DNA Profiling

- Chapter 8 DNA Profiling and the Law: A Broader Aspect to DNA Analysis

- Appendix

- Further Reading