
Technological Innovations in Large-Scale Assessment

April L. Zenisky and Stephen G. Sireci

Center for Educational Assessment, School of Education, University of Massachusetts Amherst

Computers have had a tremendous impact on assessment practices over the past half century. Advances in computer technology have substantially influenced the ways in which tests are made, administered, scored, and reported to examinees. These changes are particularly evident in computer-based testing, where the use of computers has allowed test developers to re-envision what test items look like and how they are scored. By integrating technology into assessments, it is increasingly possible to create test items that can sample as broad or as narrow a range of behaviors as needed while preserving a great deal of fidelity to the construct of interest. In this article we review and illustrate some of the current technological developments in computer-based testing, focusing on novel item formats and automated scoring methodologies. Our review indicates that a number of technological innovations in performance assessment are increasingly being researched and implemented by testing programs. In some cases, complex psychometric and operational issues have successfully been dealt with, but a variety of substantial measurement concerns associated with novel item types and other technological aspects impede more widespread use. Given emerging research, however, there appears to be vast potential for expanding the use of more computerized constructed-response type items in a variety of testing contexts.

The rapid evolution of computers and computing technology has played a critical role in defining current measurement practices. Many tests are now administered on a computer, and a number of psychometric software programs are widely used to facilitate all aspects of test development and analysis. Such technological advances provide testing programs with many new tools with which to build tests and understand assessment data from a variety of perspectives and traditions. The potential for computers to influence testing is not yet exhausted, however, as the needs and interests of testing programs continue to evolve.

Test users increasingly express interest in assessing skills that can be difficult to fully tap using traditional paper-and-pencil tests. As the potential for integrating technology into task presentation and response collection has become more of a practical reality, a variety of innovative computerized constructed-response item types have emerged. Many of these new types call for reconceptualization of what examinee responses look like, how they are entered into the computer, and how they are scored (Bennett, 1998). This is good news for test users in all testing contexts, as a greater selection of item types may allow test developers to increase the extent to which tasks on a test approximate the knowledge, skills, and abilities of interest.

The purpose of this article is to review the current advances in computer-based assessment, including innovative item types, response technologies, and scoring methodologies. Each of these topics defines an area where applications of technology are rapidly evolving. As research and practical implementation continue, these emerging assessment methods are likely to significantly alter measurement practices. In this article we provide an overview of the recent developments in task presentation and response scoring algorithms that are currently used or have the potential for use in large-scale testing.

Innovations in Task Presentation

Response Actions

In developing test items for computerized performance assessment, one critical component for test developers to think about is the format of the response an examinee is to provide. It may at first seem backward to think about examinee response formats before item stems, but how answers to test questions are structured has an obvious bearing on the nature of the information being collected. Thus, as the process of designing assessment tasks gets underway, some reflection on the inferences to be made on the basis of test scores and how best to collect that data is essential.

To this end, an understanding of what Parshall, Davey, and Pashley (2000) termed response action and what Bennett, Morley, and Quardt (2000) described as response type may be helpful. Prior to actually constructing test items, some consideration of the type of responses desired from examinees and the method by which the responses could be entered can help a test developer to discern the kinds of item types that might provide the most useful and construct-relevant information about an examinee (Mislevy, Steinberg, Breyer, Almond, & Johnson, 1999). In a computer-based testing (CBT) environment, the precise nature of the information that test developers would like examinees to provide might be best expressed in one of several ways. For example, examinees could be required to type text-based responses, enter numerical answers via a keyboard or by clicking onscreen buttons, or manipulate or synthesize information on a computer screen in some way (e.g., use a mouse to direct an onscreen cursor to click on text boxes, pull-down menus, or audio or video prompts). The mouse can also be used to draw onscreen images as well as to "drag-and-drop" objects.

The keyboard and mouse are the input devices most familiar to examinees and are the ones overwhelmingly implemented in current computerized testing applications, but response actions in a computerized context are not exclusively limited to the keyboard and mouse. Pending additional research, other input devices by which examinees' constructed responses could one day be collected include touch screens, light pens, joysticks, trackballs, speech recognition software and microphones, and pressure-feedback (haptic) devices (Parshall, Davey, & Pashley, 2000). Each of these emerging methods represents an inventive way by which test developers and users can gather different pieces of information about examinee skills. However, at this time these alternate data collection mechanisms are largely in experimental stages and are not yet implemented as part of many (if any) novel item types. For this reason, we focus on emerging item types that use the keyboard, mouse, or both for collecting responses from examinees.

Novel Item Types

For many testing programs, the familiar item types currently in use such as multiple-choice and essay items provide sufficient measurement information for the kinds of decisions being made on the basis of test scores. However, a substantial number of increasingly interactive item types that may increase measurement information are now available, and some are being used operationally. This proliferation of item types has largely come about in response to requests from test consumers for assessments aligned more closely with the constructs or skills being assessed. Although many of these newer item types were developed for specific testing applications such as licensure, certification, or graduate admissions testing, it is possible to envision each of these item types being adapted in countless ways to access different constructs as needed by a particular testing program.

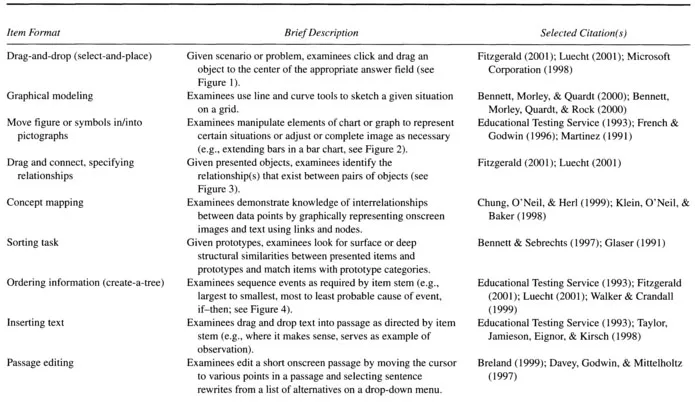

Numerous computerized item types have emerged over the past decade, so it is virtually impossible to illustrate and describe them all in a single article. Nevertheless, we conducted a review of the psychometric literature and of test publishers' Web sites and selected several promising item types for presentation and discussion. A nonexhaustive list of 21 of these item types is presented in Table 1, along with a brief description of each type and some relevant references. Some of the item types listed in Table 1 are being used operationally, whereas others have been only proposed for use.

TABLE 1

Computerized Performance Assessment Item Types

Our discussion of novel item types begins with those items requiring use of the mouse for different onscreen actions as methods for data collection. Some of these item types bear greater resemblance to traditional selected response item types and are easier to score mechanically, whereas others integrate technology in more inventive ways. After introducing these item types, we turn to those item types involving text-based responses. Last, we focus on items with more complex examinee responses that expand the concept of what responses to test items look like in fundamental ways and pose more difficult challenges for automated scoring.

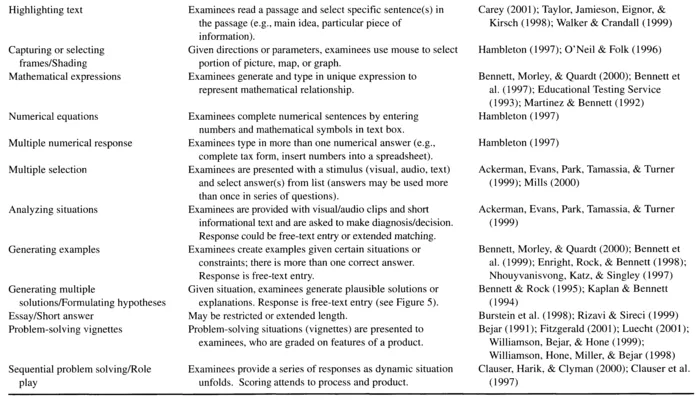

FIGURE 1 Example of drag-and-drop item type.

Item types requiring use of a mouse. Many of the emerging computer-based item types take advantage of the way in which people interact with a computer, specifically via a keyboard and mouse. The mouse and onscreen cursor provide a flexible mechanism by which items can be manipulated. Using a mouse, pull-down menus, and arrow keys, examinees can highlight text, drag-and-drop text and other stimuli, create drawings or graphics, or point to critical features of an item. An example of a drag-and-drop item (also called a select-and-place item) is presented in Figure 1. This item type is used on a number of the Microsoft certification examinations (Fitzgerald, 2001; Microsoft Corporation, 1998). These items can be scored dichotomously (right/wrong) or with partial credit.
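To make the two scoring options concrete, the following is a minimal sketch, not the scoring rule of any operational testing program. It assumes a response can be recorded as a mapping from each draggable object to the target on which it was placed. The function name `score_drag_and_drop`, the object and target labels, and the proportion-correct partial-credit rule are all hypothetical choices made for illustration.

```python
from typing import Dict


def score_drag_and_drop(
    response: Dict[str, str],
    key: Dict[str, str],
    partial_credit: bool = False,
) -> float:
    """Score one drag-and-drop (select-and-place) item.

    `key` maps each draggable object to its correct target; `response`
    maps each object the examinee moved to the target it was dropped on.
    Both structures are assumptions made for this sketch.
    """
    if not key:
        raise ValueError("key must contain at least one placement")

    # Count objects placed on their keyed target; unplaced objects count as wrong.
    correct = sum(1 for obj, target in key.items() if response.get(obj) == target)

    if partial_credit:
        # Hypothetical partial-credit rule: proportion of correct placements.
        return correct / len(key)

    # Dichotomous rule: credit only when every placement matches the key.
    return 1.0 if correct == len(key) else 0.0


# Hypothetical item with four objects; the examinee places three correctly.
key = {"router": "slot_a", "switch": "slot_b", "server": "slot_c", "printer": "slot_d"}
response = {"router": "slot_a", "switch": "slot_b", "server": "slot_d", "printer": "slot_d"}

dichotomous_score = score_drag_and_drop(response, key)
partial_score = score_drag_and_drop(response, key, partial_credit=True)
```

Under these assumptions the same response earns no credit when scored right/wrong but three quarters of the available credit under the proportion-correct rule, which illustrates why the choice of scoring rule matters for the measurement information an item yields.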

The graphical modeling item type also uses the drag-and-drop capability of a computer. This item type requires examinees to sketch out situations graphically using onsc...