- 268 pages

- English

- ePUB (mobile friendly)

eBook - ePub

Compressed Sensing for Engineers

About this book

In theory, Compressed Sensing (CS) deals with the problem of recovering a sparse signal from an under-determined system of linear equations. The topic is of immense practical significance because most naturally occurring signals can be sparsely represented in some domain. In recent years, CS has helped reduce scan time in Magnetic Resonance Imaging (MRI), making scans more feasible for pediatric and geriatric subjects, and has also helped reduce the health hazard in X-ray Computed Tomography (CT). This book is a valuable resource for engineering students in signal processing; it requires a basic understanding of signal processing and linear algebra.

- Covers fundamental concepts of compressed sensing

- Makes subject matter accessible for engineers of various levels

- Focuses on algorithms including group-sparsity and row-sparsity, as well as applications to computational imaging, medical imaging, biomedical signal processing, and machine learning

- Includes MATLAB examples for further development

1. Introduction

There is a difference between data and information. We hear about big data. In most cases, the humongous amount of data contains only concise information. For example, when we read a news report, we get the gist from the title of the article. The actual text has just the details and does not add much to the information content. Loosely speaking, the text is the data and the title is its information content.

The fact that data is compressible has been known for ages; it is the reason the field of statistics, the art and science of summarizing and modeling data, was developed. That we can capture the essence of millions of samples with only a few moments, or represent them as a distribution with very few parameters, points to the compressibility of data.

Broadly speaking, compressed sensing deals with this duality: an abundance of data and its relatively sparse information content. Strictly speaking, compressed sensing is concerned with an important sub-class of such problems, those in which the sparse information content has a linear relationship with the data. Many such problems arise in real life. We will discuss a few of them here to motivate you to read through the rest of the book.

Machine Learning

Consider a health analyst trying to figure out what causes infant mortality in a developing country. Usually, when an expectant mother comes to a hospital, she needs to fill out a form. The form asks many questions: for example, the dates of birth of the mother and father (and thereby their ages), their education levels, their income level, their type of diet (vegetarian or otherwise), the number of times the mother has visited the doctor, and the mother’s occupation (housewife or otherwise). Once the mother delivers the baby, the hospital keeps a record of the baby’s condition. Therefore, there is a one-to-one correspondence between each filled-out form and the condition of the baby. From this data, the analyst tries to find out which factors lead to the outcome (infant mortality).

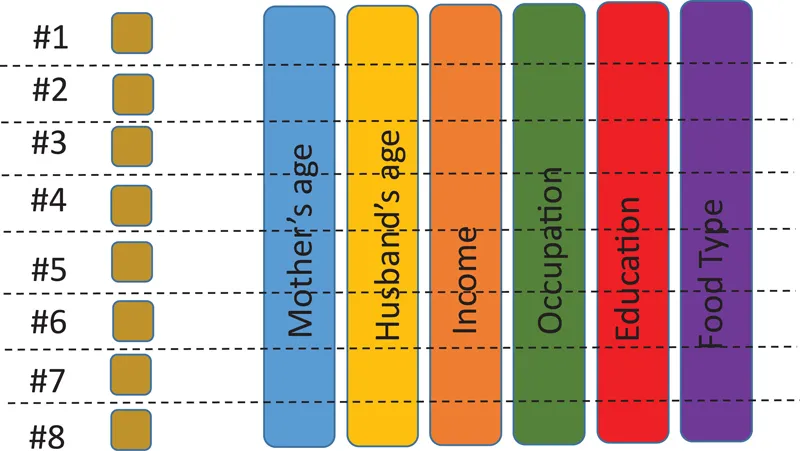

FIGURE 1.1

Health record and outcome.

For simplicity, the analyst can assume that the relationship between the factors (age, health, income, etc.) and the outcome (mortality) is linear, so that it can be expressed as shown in Figure 1.1.

Formally, this can be expressed as follows:

$$b = Hx + n \tag{1.1}$$

where b is the outcome, H is the health record (factors along columns and patients along rows), and x is the (unknown) variable that tells us the relative importance of the different factors. The model allows for some inconsistencies in the form of noise n.

In the simplest case, we assume that the noise is normally distributed and therefore minimize the l2-norm of the residual:

$$\min_{x} \|b - Hx\|_2^2 \tag{1.2}$$

However, the l2-norm will not give us the desired solution. It yields an x that is dense, that is, one with non-zero values in all positions. This would mean that all the factors are somewhat responsible for the outcome. If the analyst reported that, the finding would not be very practical: it is not possible to control every aspect of the mother’s (and her husband’s) life. Typically, we can control only a few factors, and the solution of (1.2) does not tell us which ones.
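The density claim is easy to check numerically. The book’s own examples are in MATLAB; the minimal sketch below uses Python with NumPy instead, on hypothetical synthetic data (the 100 × 20 size, the random seed, and the three “true” factors are assumptions for illustration, not data from the book). Only three factors drive the outcome, yet the least-squares solution of (1.2) assigns a non-zero weight to every factor.

```python
import numpy as np

# Hypothetical synthetic data: 100 patients (rows) and 20 factors (columns).
rng = np.random.default_rng(0)
n_patients, n_factors = 100, 20
H = rng.standard_normal((n_patients, n_factors))

# Assumed ground truth: only factors 2, 7 and 15 influence the outcome.
x_true = np.zeros(n_factors)
x_true[[2, 7, 15]] = [1.5, -2.0, 1.0]

# Outcome generated by the linear model (1.1) with small Gaussian noise.
b = H @ x_true + 0.1 * rng.standard_normal(n_patients)

# Least-squares solution of (1.2): minimizes ||b - Hx||_2^2.
x_ls, *_ = np.linalg.lstsq(H, b, rcond=None)

print("non-zero coefficients:", np.count_nonzero(x_ls), "of", n_factors)
```

The estimate is close to the true weights, but the 17 irrelevant factors receive small non-zero values rather than exact zeros, so the answer cannot single out the factors that matter.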

Such problems fall under the category of regression. The simplest regularized form of regression, ridge regression (Tikhonov regularization) (1.3), does not solve the problem either:

$$\min_{x} \|b - Hx\|_2^2 + \lambda \|x\|_2^2 \tag{1.3}$$

This too yields a dense solution, which is ineffective in our example. Such problems have long been studied in statistics. Early work in this area proposed greedy solutions, in which factors were selected one at a time according to some heuristic criterion. In statistics, such techniques were called sequential forward selection; in signal processing, they were called matching pursuit.
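Both claims in this paragraph can be illustrated with a short sketch, again on hypothetical synthetic data. Ridge regression (1.3) has the closed form x = (HᵀH + λI)⁻¹Hᵀb and stays dense. The greedy routine is one possible form of sequential forward selection, written in the style of orthogonal matching pursuit (a refinement of matching pursuit that refits least squares after every pick); the function name `forward_selection`, the value λ = 1, and the stopping rule “pick exactly k factors” are assumptions for illustration, not the book’s formulation.

```python
import numpy as np

# Hypothetical synthetic data: 100 patients, 20 factors, 3 true factors.
rng = np.random.default_rng(0)
H = rng.standard_normal((100, 20))
x_true = np.zeros(20)
x_true[[2, 7, 15]] = [1.5, -2.0, 1.0]
b = H @ x_true + 0.1 * rng.standard_normal(100)

# Ridge regression (1.3) in closed form: x = (H^T H + lam I)^{-1} H^T b.
lam = 1.0
x_ridge = np.linalg.solve(H.T @ H + lam * np.eye(20), H.T @ b)


def forward_selection(H, b, k):
    """Greedily pick k columns of H, refitting least squares after each pick.

    At every step the column most correlated with the current residual
    enters the model (orthogonal-matching-pursuit style selection).
    """
    norms = np.linalg.norm(H, axis=0)
    selected = []
    x = np.zeros(H.shape[1])
    residual = b.copy()
    for _ in range(k):
        # Correlation of each (normalized) column with the residual.
        scores = np.abs(H.T @ residual) / norms
        if selected:
            scores[selected] = -np.inf  # never pick the same factor twice
        selected.append(int(np.argmax(scores)))
        # Refit least squares on the selected columns only.
        coef, *_ = np.linalg.lstsq(H[:, selected], b, rcond=None)
        x = np.zeros(H.shape[1])
        x[selected] = coef
        residual = b - H @ x
    return x, sorted(selected)


x_greedy, support = forward_selection(H, b, k=3)
print("ridge non-zeros:", np.count_nonzero(x_ridge))
print("greedy support:", support)
```

The greedy routine needs the number of factors k up front. Here it is set to the true value 3, which an analyst would not normally know; this is one of the heuristic choices such methods depend on.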

The most comprehensive solution to this problem came from Tibshirani, who introduced the Least Absolute Shrinkage and Selection Operator (LASSO). He proposed solving

$$\min_{x} \|b - Hx\|_2^2 + \lambda \|x\|_1 \tag{1.4}$$

The l1-norm penalty promotes a sparse solution (we will learn the reason later). That is, x will have non-zero values only for certain factors, and the rest will be zero. The non-zero entries mark the important factors, and the zeros mark the unimportant ones. Once we identify a few important factors, we can concentrate on controlling them and reducing infant mortality.
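One simple way to solve (1.4) is iterative soft thresholding (ISTA): take a gradient step on the quadratic term, then shrink every entry toward zero, setting small entries exactly to zero. The sketch below is an assumption for illustration; ISTA is only one of several solvers for the LASSO and not necessarily the one the book develops, and the data, the names `soft_threshold` and `lasso_ista`, and λ = 20 are hypothetical.

```python
import numpy as np

# Hypothetical synthetic data: 100 patients, 20 factors, 3 true factors.
rng = np.random.default_rng(0)
H = rng.standard_normal((100, 20))
x_true = np.zeros(20)
x_true[[2, 7, 15]] = [1.5, -2.0, 1.0]
b = H @ x_true + 0.1 * rng.standard_normal(100)


def soft_threshold(v, t):
    # Proximal operator of t * ||.||_1: shrink each entry toward zero by t.
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def lasso_ista(H, b, lam, n_iter=500):
    """Minimize ||b - Hx||_2^2 + lam * ||x||_1 by iterative soft thresholding."""
    # Step size 1/L, where L = 2 * sigma_max(H)^2 is the Lipschitz constant
    # of the gradient of the quadratic term.
    L = 2 * np.linalg.norm(H, 2) ** 2
    x = np.zeros(H.shape[1])
    for _ in range(n_iter):
        grad = 2 * H.T @ (H @ x - b)  # gradient of ||b - Hx||_2^2
        x = soft_threshold(x - grad / L, lam / L)
    return x


x_lasso = lasso_ista(H, b, lam=20.0)
print("important factors:", np.flatnonzero(x_lasso))
```

The value λ = 20 is arbitrary: larger values zero out more factors and shrink the surviving weights further, while smaller values let more factors through.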

However, the LASSO solution has a drawback: from a set of related factors, it tends to select only the most important one. For example, it is medically well known that the parents’ age is an important aspect of a child’s health. Therefore, we consider the age of both parents. If LASSO is given a free run (without any medical expe...

Table of contents

- Cover

- Half Title

- Title Page

- Copyright Page

- Table of Contents

- Foreword

- Preface

- Acknowledgments

- Author

- 1. Introduction

- 2. Greedy Algorithms

- 3. Sparse Recovery

- 4. Co-sparse Recovery

- 5. Group Sparsity

- 6. Joint Sparsity

- 7. Low-Rank Matrix Recovery

- 8. Combined Sparse and Low-Rank Recovery

- 9. Dictionary Learning

- 10. Medical Imaging

- 11. Biomedical Signal Reconstruction

- 12. Regression

- 13. Classification

- 14. Computational Imaging

- 15. Denoising

- Index

Compressed Sensing for Engineers by Angshul Majumdar is available in PDF and ePUB formats and is listed under Technology & Engineering, Electrical Engineering & Telecommunications.