
Chapter 1

Perception of Spatial Sound

Elizabeth M. Wenzel, Durand R. Begault, and Martine Godfroy-Cooper

Acoustically, immersion refers to sound arriving from all directions around a listener, which is normally an inevitable consequence of natural human listening in an air medium. Audible sound sources are everywhere in real environments, where sound waves propagate and reflect from surfaces around a listener. Even in the quietest of environments, such as an anechoic chamber, the sounds of one’s own body will be audible. However, the common meaning of immersion in audio and acoustics refers to the psychological sensation of being surrounded by specific sound sources as well as ambient sound. Although acoustically a sound can reach a listener from multiple surrounding directions, its spatial characteristics may be judged as unrealistic, static or constrained. For example, good-quality concert hall acoustics have traditionally been correlated with a listener’s sensation of being immersed in the sound of the orchestra, as opposed to the sound seeming distant and removed. Spatial audio techniques, particularly 3D audio, can provide an immersive experience because virtual sound sources and sound reflections can be made to appear from anywhere in space around a listener. This chapter introduces the reader to the physiological, psychoacoustic and acoustic bases of these sensations.

Auditory Physiology

Auditory perception is a complex phenomenon determined by the physiology of the auditory system and affected by cognitive processes. The auditory system transforms the fundamental independent aspects of sound stimuli, such as their spectral content, temporal properties and location in space, into distinct patterns of neural activity. These patterns give rise to the qualitative experience of pitch, loudness, timbre and location. They are ultimately integrated with information from the other sensory systems to form a unified perceptual representation and to guide behavior, including orienting to acoustical stimuli and engaging in intra-species communication.

Auditory Function: Peripheral Processing

The functional auditory system extends from the ears to the brain’s frontal lobes with successively more complex functions occurring as one ascends the hierarchy of the nervous system (Figure 1.1). The different functions performed by the auditory system are classically categorized as peripheral auditory processing and central auditory processing.

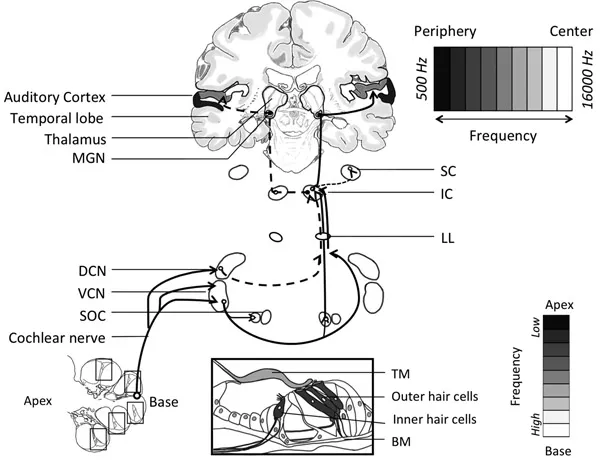

Figure 1.1 Diagram of the major auditory pathways. Note the auditory system entails several parallel pathways and that information from each ear reaches both sides of the system (dashed line: ipsilateral, continuous line: contralateral). Top Right: Tonotopic representation in the auditory cortex. High frequencies are represented at the center of the cortical map while low frequencies are represented at its periphery. Bottom Left: Cochlea. Bottom Center: Organ of Corti. Bottom Right: Cochleotopic coding along the basilar membrane (BM).

The peripheral auditory system includes processing stages from the outer ear to the cochlear nerve. A crucial transformation is performed within these early stages, which is often compared to a Fourier analysis of the incoming sound waves that defines how the sounds are processed at the later stages of the auditory hierarchy. Sound enters the ear as pressure waves. At the periphery of the system, the external and the middle ear respectively collect sound waves and selectively amplify their pressure, so that they can be successfully transmitted to the fluid-filled cochlea in the inner ear.
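The comparison with Fourier analysis can be illustrated with a minimal sketch. It is not a model of the ear: it only shows how a compound waveform can be decomposed into its component frequencies. The test signal, sample rate and function names below are assumptions chosen for illustration.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Return the magnitude of each DFT bin (0 .. N/2) for real samples."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        mags.append(abs(acc) / n)
    return mags


def dominant_frequencies(samples, sample_rate, count):
    """Return the `count` strongest bin frequencies in Hz, in ascending order."""
    mags = dft_magnitudes(samples)
    n = len(samples)
    strongest = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:count]
    return sorted(k * sample_rate / n for k in strongest)


# Hypothetical test signal: a 500 Hz tone plus a weaker 3000 Hz tone.
SAMPLE_RATE = 8000  # Hz (assumed)
N = 160             # 20 ms of signal, so DFT bins are 50 Hz apart
signal = [
    math.sin(2 * math.pi * 500 * t / SAMPLE_RATE)
    + 0.5 * math.sin(2 * math.pi * 3000 * t / SAMPLE_RATE)
    for t in range(N)
]
components = dominant_frequencies(signal, SAMPLE_RATE, 2)
```

With this signal, the two strongest bins fall at the two tone frequencies, which is the sense in which the compound waveform is separated into its components.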

The external ear, which consists of the pinna (plural, pinnae) and the auditory meatus (or canal), gathers the pressure waves and focuses them on the eardrum (tympanic membrane) at the end of the canal. One consequence of the configuration of the human auditory canal is that it selectively boosts the sound pressure 30- to 100-fold for frequencies around 3 kHz via a passive resonance effect due to the length of the ear canal. This amplification makes humans especially sensitive to frequencies in the range of 2–5 kHz, which appears to be directly related to speech perception. A second important function of the pinnae is to selectively filter sound frequencies in order to provide cues about the elevation of a sound source: up/down and front/back angles (Shaw, 1974). The vertically asymmetrical convolutions of the pinna are shaped so that the external ear transmits more high-frequency components from an elevated source than from the same source at ear level. Similarly, high frequencies tend to be more attenuated for sources in the rear than for sources in the front, as a consequence of the orientation and structure of the pinna (Blauert, 1997).
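The dependence of the resonance on canal length can be sketched with a simple physical idealization that is not taken from the chapter: the ear canal is treated as a uniform tube, open at the pinna and closed at the eardrum, which resonates at a quarter wavelength. The canal length of 2.5 cm and the speed of sound are assumed, typical values.

```python
SPEED_OF_SOUND_M_S = 343.0  # air at about 20 degrees C (assumed)
EAR_CANAL_LENGTH_M = 0.025  # typical adult ear canal length (assumed)


def quarter_wave_resonance_hz(tube_length_m, speed_of_sound=SPEED_OF_SOUND_M_S):
    """First resonance of a tube open at one end and closed at the other."""
    if tube_length_m <= 0:
        raise ValueError("tube length must be positive")
    return speed_of_sound / (4.0 * tube_length_m)


canal_resonance_hz = quarter_wave_resonance_hz(EAR_CANAL_LENGTH_M)
```

Under these assumptions the first resonance lies at roughly 3.4 kHz, which is consistent with the boost around 3 kHz described above. A real ear canal is neither uniform nor rigidly terminated, so this is only an order-of-magnitude check.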

The middle ear is a small cavity that separates the outer and the inner ear. The cavity contains the three smallest bones in the body, the ossicles (hammer, anvil and stirrup), which are connected more or less flexibly to each other. Its major function is to match the relatively low impedance of airborne sound to the higher impedance of the fluid in the inner ear (impedance in this context refers to a medium’s resistance to movement). Without this action, there would be a loss of transmission of 1000:1, corresponding to a loss of sensitivity of 30 dB. The middle ear also has two small muscles, the tensor tympani and the stapedius, which have a protective function. When sound pressure reaches a threshold loudness level (at approximately 85 dB HL1 in humans with normal hearing), a sensory-driven afferent signal is sent to the brainstem via the cochlear nerve, which initiates an efferent reflexive contraction of the stapedius muscle within the middle ear, referred to as the stapedius reflex or acoustic middle ear reflex. The excitation of the muscle results in a stimulus level-dependent attenuation of low-frequency (< 1 kHz) ossicular chain vibration reaching the cochlea (Wilson & Margolis, 1999).
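The correspondence between a 1000:1 transmission loss and 30 dB can be checked with a short calculation. The sketch below assumes the ratio refers to transmitted power (energy); for a ratio of sound pressures the factor would be 20 instead of 10. The function names are illustrative.

```python
import math


def power_ratio_to_db(power_ratio):
    """Convert a power (energy) ratio to decibels."""
    return 10.0 * math.log10(power_ratio)


def pressure_ratio_to_db(pressure_ratio):
    """Convert a sound pressure (amplitude) ratio to decibels."""
    return 20.0 * math.log10(pressure_ratio)


# The 1000:1 transmission loss quoted in the text, read as a power ratio.
loss_db = power_ratio_to_db(1000.0)
```

Read as a power ratio, 1000:1 gives the 30 dB quoted in the text.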

Transduction Mechanisms in the Cochlea

The cochlea in the inner ear is the most critical structure in the peripheral auditory pathway. The cochlea is a small, coiled, snail-like structure that responds to sound-induced vibrations and converts them into electrical impulses, a process known as mechanoelectrical transduction. Cochlear signal transduction involves amplification and decomposition of complex acoustical waveforms into their component frequencies.

Two membranes, the basilar membrane (BM) and the vestibular membrane (VM), divide the cochlea into three fluid-filled chambers. The organ of Corti sits on the BM and contains an array of sensory hair cells in contact with the tectorial membrane (TM), a structure that plays multiple critical roles in hearing, including coupling elements along the length of the cochlea, supporting a traveling wave and ensuring that the gain and timing of cochlear feedback are optimal (Richardson, Lukashkin, & Russell, 2008). The sensory hair cells are responsible for mechanoelectrical transduction, i.e., the transformation of a mechanical stimulus into electrochemical activity. The acoustical stimulus initiates a traveling wave that displaces the hair cells, thus enabling the encoding of the frequency, amplitude and phase of the original sound stimulus by the electrical activity of the auditory nerve fibers. As the BM displaces, it deflects the hair bundles (stereocilia, tiny processes that protrude from the apical ends of the hair cells) of the location-matched inner hair cells, which results in a current flow and ultimately an action potential. Because the stiffness of the BM changes throughout the cochlea, the displacement of the membrane induced by an incoming sound depends on the frequency of that sound. Specifically, the BM is stiffer near its “base” than near the middle of the spiral (“the apex”). Consequently, high-frequency sounds (20 kHz) produce displacement near the base, while low-frequency sounds (20 Hz) disturb the membrane near the apex. As a result, each locus on the BM is identified by its characteristic frequency (CF) and the whole BM can be described as a bank of overlapping filters (Patterson et al., 1987; Meddis & Lopez-Poveda, 2010). Because the BM displaces in a frequency-dependent manner, the corresponding hair cells are “tuned” to sound frequency. The resulting spatial proximity of contiguous preferred sound frequencies (“place theory of hearing”, von Békésy, 1960; “place code” model, Jeffress, 1948) is referred to as tonotopy or, better, cochleotopy. This tonotopic organization is carried up through the auditory hierarchy to the cortex (Moerel et al., 2013; Saenz & Langers, 2014) and defines the functional topography in each of the intermediate relays.
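The mapping from place on the BM to characteristic frequency can be sketched with the Greenwood place-frequency function. This function and its commonly quoted human parameters are not part of this chapter; they are an assumption brought in here for illustration only.

```python
def greenwood_cf_hz(relative_position, A=165.4, a=2.1, k=0.88):
    """Characteristic frequency (Hz) at a relative position along the BM.

    relative_position runs from 0.0 (apex) to 1.0 (base). A, a and k are
    commonly quoted human parameters of the Greenwood function (assumed here).
    """
    if not 0.0 <= relative_position <= 1.0:
        raise ValueError("relative_position must lie between 0 and 1")
    return A * (10 ** (a * relative_position) - k)


# Characteristic frequencies at five equally spaced places, apex to base.
cf_map = [greenwood_cf_hz(i / 4) for i in range(5)]
```

With these parameters the apex maps to about 20 Hz and the base to about 20 kHz, matching the range given above, and the spacing is roughly logarithmic, so equal distances along the BM span roughly equal frequency ratios over most of its length.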

Auditory Function: Central Processing

The central auditory system is composed of a number of nuclei and complex pathways that ascend within the brainstem. The earliest stage of central processing occurs at the cochlear nuclei (dorsal, DCN, and ventral, VCN), where the tonotopic organization of the cochlea is maintained. The output of the CN has several targets.

One is the superior olivary complex (SOC), the first point at which information from the two ears interacts. The best understood function of the SOC is sound localization. Humans use at least two different strategies, and two different pathways, to localize the horizontal position of sound sources, depending on the frequencies of the stimulus. For frequencies below 3 kHz, which the auditory nerve can follow in a phase-locked manner, interaural time differences (ITDs) are used to localize the source; above these frequencies, interaural intensity differences (IIDs) are used as cues (King & Middlebrooks, 2011; Yin, 2002). ITDs are processed in the medial superior olive (MSO), while IIDs are processed in the lateral superior olive (LSO). These two pathways eventually merge in the midbrain auditory centers. The elevation of sound sources is determined by spectral filtering mediated by the external pinnae. Experimental evidence suggests that the spectral notches created by the shape of the pinnae are detected by neurons in the DCN. See the section on human sound localization for additional discussion of these cues.
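The size of the ITD cue can be approximated with Woodworth's spherical-head formula, a classic simplification that is not taken from this chapter. The head radius and speed of sound below are assumed typical values. The cue selection function simply encodes the 3 kHz boundary stated above as a hard threshold, which is a simplification of a gradual transition.

```python
import math

SPEED_OF_SOUND_M_S = 343.0       # air at about 20 degrees C (assumed)
HEAD_RADIUS_M = 0.0875           # commonly used average head radius (assumed)
PHASE_LOCKING_LIMIT_HZ = 3000.0  # ITD/IID boundary stated in the text


def woodworth_itd_s(azimuth_deg, head_radius=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds for a distant source.

    azimuth_deg runs from 0 (straight ahead) to 90 (directly to one side).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


def dominant_horizontal_cue(frequency_hz):
    """Return which interaural cue the text associates with a frequency."""
    return "ITD" if frequency_hz < PHASE_LOCKING_LIMIT_HZ else "IID"


max_itd_us = woodworth_itd_s(90.0) * 1e6  # microseconds, source at the side
```

Under these assumptions the largest ITD, for a source directly to one side, is on the order of 650 microseconds, and it falls to zero for a source straight ahead.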

The binaural pathways for sound localization are only part of the output of the CN. A second major set of pathways from the CN bypasses the SOC and terminates in the nuclei of the lateral lemniscus (LL) on the contralateral side of the brainstem. These particular pathways respond to sound arriving at one ear only and are thus referred to as monaural. Some cells in the nuclei of the LL signal the onset of sound, regardless of its intensity or frequency. Other cells process other temporal aspects of sound such as duration.

As with the outputs of the SOC, the pathways from the LL project to the midbrain auditory center, also known as the inferior colliculus (IC). This structure is a major integrative center where the convergence of binaural inputs produces a computed topographical representation of the auditory space. At this level, neurons are typically sensitive to multiple localization cues (Chase & Young, 2006) and respond best to sounds originating in a specific region of space, with a preferred elevation and a preferred azimuthal location. As a consequence, it is the first point at which auditory information can interact with the motor system. Another important property of the IC is its ability to process sounds with complex temporal patterns. Many neurons in the IC respond only to frequency-modulated sounds, while others respond only to sounds of specific durations. Such sounds are typical components of biologically relevant signals, such as those made by predators and, in humans, speech.

The IC relays auditory information to the medial geniculate nucleus (MGN) of the thalamus, which is an obligatory relay for all ascending information destined for the cortex. It is the first station in the auditory pathway where pronounced selectivity for combinations of frequencies is found. Cells in the MGN are also selective for specific time intervals between frequencies. The detection of harmonic and temporal combinations of sounds is an important feature of the processing of speech.

In addition to the cortical projection, a pathway to the superior colliculus (SC) gives rise to an organized representation of ITDs and IIDs in a point-to-point map of the auditory space (King & Palmer, 1983). Topographic representations of multiple sensory modalities (visual, auditory and somatosensory) are integrated to control the orientation of movements toward specific spatial locations (King, 2005).

Auditory Function: The Auditory Cortex

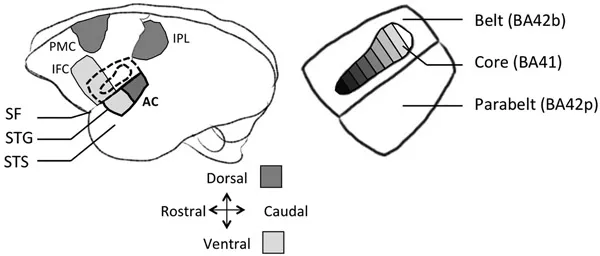

The auditory cortex (AC, Figure 1.2) is the major target of the ascending fibers from the MGN and plays an essential role in our conscious perception of sound, including speech comprehension, which is arguably the most significant social stimulus for humans.

Although the AC has a number of subdivisions, a broad distinction can be made between a primary area and a secondary area. The primary auditory cortex (BA41, core area), located on the superior temporal gyrus of the temporal lobe, receives point-to-point input from the MGN and comprises three distinct tonotopic fields (Saenz & Langers, 2014). Neurons in BA41 have narrow tuning functions and respond best to tone stimuli, supporting basic auditory functions such as frequency discrimination and sound localization. BA41 also plays a role in the processing of within-species communication sounds.

Figure 1.2 Left: Auditory cortex (AC) location in the human brain. The superior temporal gyrus (STG) is bordered superiorly by the Sylvian fissure (SF). The STG is bordered on the inferior side by the superior temporal sulcus (STS). Dorsal stream: intraparietal lobe (IPL), premotor cortex (PMC). Ventral stream: inferior frontal cortex (IFC). Right...