eBook - ePub

The Multisensory Driver

Implications for Ergonomic Car Interface Design

- 158 pages

- English

About this book

Driver inattention has been identified as one of the leading causes of car accidents. The problem of distraction while driving is likely to worsen, partly due to increasingly complex in-car technologies. However, intelligent transport systems are being developed to assist drivers and to ensure a safe road environment. One approach to the design of ergonomic automobile systems is to integrate our understanding of the human information processing systems into the design process. This book aims to further the design of ergonomic multisensory interfaces using research from the fast-growing field of cognitive neuroscience. It focuses on two aspects of driver information-processing in particular: multisensory interactions and the spatial distribution of attention in driving. The Multisensory Driver provides interface design guidelines together with a detailed review of current cognitive neuroscience and behavioural research in multisensory human perception, which will help the development of ergonomic interfaces. The discussion on spatial attention is particularly relevant for car interface designers, but it will also appeal to cognitive psychologists interested in spatial attention and the applications of these theoretical research findings. Giving a detailed description of a cohesive series of psychophysical experiments on multisensory warning signals, conducted in both laboratory and simulator settings, this book provides an approach for those in the engineering discipline who wish to test their systems with human observers.

Chapter 1

Introduction

The attentional limitations associated with driving

Humans are inherently limited capacity creatures; that is, they are able to process only a small amount of the sensory information that is typically available at any given time (see Broadbent, 1958; Driver, 2001; Simons and Chabris, 1999; Spence and Driver, 1997a). Although it is unclear what the exact nature of this limited capacity is (see Posner and Boies, 1971; Schumacher et al., 2001), the inability of humans to simultaneously process multiple sources of sensory information places a number of important constraints on their attentional processing of stimuli both in the laboratory and in a number of real-life settings (e.g., see McCarley et al., 2004; O’Regan, Rensink, and Clark, 1999; Spence and Read, 2003; Velichkovsky et al., 2002).

Over the years, attention has been defined in a number of different ways. It has, for example, been defined as the ‘ability to concentrate perceptual experience on a selected portion of the available sensory information to achieve a clear and vivid impression of the environment’ (Motter, 2001, p. 41). One very important element of any definition of attention, however, is its selectivity (see Driver, 2001, for a review). Among all of the various different dimensions along which the selective processing of information may take place, the spatial distribution of attention represents an area that is of great interest to many researchers, from both a theoretical and an applied standpoint (see Posner, 1978; Spence, 2001; Spence and Driver, 1994; Spence and Read, 2003).

The limited capacity of spatial attention to process sensory information in humans raises important constraints on the design and utilization of, for instance, vehicular information systems (e.g., Brown, Tickner, and Simmonds, 1969; Burke, Gilson, and Jagacinski, 1980; Chan and Chan, 2006; Mather, 2004; Spence and Driver, 1997a). The act of driving represents a highly complex skill requiring the sustained monitoring of integrated perceptual and cognitive inputs (Hills, 1980). The ability of drivers to attend selectively and their limited ability to divide their attention amongst all of the competing sensory inputs have a number of important consequences for driver performance. This, in turn, links inevitably to the topic of vehicular accidents. For instance, a driver may fail to detect the sudden braking of the vehicle in front if distracted by a passenger’s conversation (or by the conversation with someone at the other end of a mobile phone; e.g., Horrey and Wickens, 2006; Spence and Read, 2003; see Chapter 2), resulting in a collision with the vehicle in front (see Sagberg, 2001; Strayer and Drews, 2004). In fact, one recent research report has shown that the presence of two or more car passengers is associated with a two-fold increase in the likelihood of a driver having an accident as compared to people who drive unaccompanied (see McEvoy, Stevenson, and Woodward, 2007b). 
In addition, these attentional limitations on driver performance are currently being exacerbated by the ever-increasing availability of complex in-vehicle technologies (Ashley, 2001; Lee, Hoffman, and Hayes, 2004; Wang, Knipling, and Goodman, 1996; though see also Cnossen, Meijman, and Rothengatter, 2004), such as satellite navigation systems (e.g., Burnett and Joyner, 1997; Dingus, Hulse, Mollenhauer, Fleischman, McGehee, and Manakkal, 1997; Fairclough, Ashby, and Parks, 1993), mobile phones (e.g., Jamson, Westerman, Hockey, and Carstens, 2004; Patten, Kircher, Ostlund, and Nilsson, 2004; Spence and Read, 2003; Strayer, Drews, and Johnston, 2003), email (e.g., Harbluk and Lalande, 2005; Lee, Caven, Haake, and Brown, 2001) and ever more elaborate sound systems (e.g., Jordan and Johnson, 1993). Somewhat surprisingly, this proliferation of in-vehicle interfaces has taken place in the face of extensive research highlighting the visual informational overload suffered by many drivers (e.g., Bruce, Boehm-Davis, and Mahach, 2000; Dewar, 1988; Dukic, Hanson, and Falkmer, 2006; Hills, 1980) and the widely reported claim in the literature that at least 90 per cent of the information used by drivers is visual (e.g., Booher, 1978; Bryan, 1957; Spence and Ho, forthcoming; though see also Sivak, 1996).

Given the many competing demands on a driver’s limited cognitive resources, it should come as little surprise that driver inattention, including drowsiness, distraction and ‘improper outlook’, has been identified as one of the leading causes of vehicular accidents, estimated to account for anywhere between 26 per cent (Wang et al., 1996) and 56 per cent (Treat et al., 1977) of all road traffic accidents (see also Ashley, 2001; Gibson and Crooks, 1938; Klauer, Dingus, Neale, Sudweeks, and Ramsey, 2006; McEvoy et al., 2007; Sussman, Bishop, Madnick, and Walter, 1985). Fortunately, however, the last few years have also seen the development of a variety of new technologies, such as sophisticated advanced collision avoidance systems, designed to monitor the traffic environment automatically, and to provide additional information to drivers in situations with a safety implication. In fact, the development of these new technologies, known collectively as intelligent transport systems (ITS; Noy, 1997), means that more information than ever before can now potentially be delivered to drivers in a bid to enhance their situation awareness and ultimately improve road safety.

One of the most common types of car accident, estimated to account for around a quarter of all collisions, is the front-to-rear-end (FTRE) collision (McGehee, Brown, Lee, and Wilson, 2002; see also Evans, 1991). The research that has been published to date suggests that driver distraction represents a particularly common cause of this kind of accident (no matter whether the lead vehicle is stationary or moving; Wang et al., 1996; see also Rumar, 1990). Research by Strayer and Drews (2004) has also shown that mobile phone use tends to be one of the factors leading to front-to-rear-end collisions (see also Alm and Nilsson, 2001; Sagberg, 2001).

The potential benefits associated with improving the situation awareness of drivers to road dangers such as impending collisions are huge. To put this into some kind of perspective, it has been estimated that the introduction of a system that provided even a modest decrease in the latency of overall driver responses of, say, around 500 ms, would reduce front-to-rear-end collisions by as much as 60 per cent (Suetomi and Kido, 1997; see also ‘The mobile phone report’, 2002). Given that a number of different forward collision warning systems now exist, it has become increasingly important to determine the optimal means of assisting drivers to avoid such collisions (e.g., Graham, 1999). Current strategies differ in terms of their degree of intervention, varying from pro-active collision avoidance systems that can initiate automatic emergency braking responses, to collision warning systems that simply present warning signals to drivers in an attempt to get them to adjust their speed voluntarily instead (Hunter, Bundy, and Daniel, 1976; Janssen and Nilsson, 1993).
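To give a sense of scale for the 500 ms figure, the following minimal sketch computes how far a vehicle travels during a given response latency at constant speed. The function name and the example speeds are illustrative assumptions and are not taken from the cited studies.

```python
def distance_travelled_m(speed_kmh: float, latency_s: float) -> float:
    """Distance in metres covered at constant speed during a response latency."""
    if speed_kmh < 0 or latency_s < 0:
        raise ValueError("speed and latency must be non-negative")
    speed_ms = speed_kmh * 1000.0 / 3600.0  # convert km/h to m/s
    return speed_ms * latency_s


if __name__ == "__main__":
    # Illustrative speeds in km/h (assumed, not from the source).
    for speed in (50, 100):
        covered = distance_travelled_m(speed, 0.5)
        print(f"{speed} km/h: {covered:.1f} m covered in 500 ms")
```

At 100 km/h, for example, a vehicle covers roughly 14 m in 500 ms, which is the extra stopping margin a driver would gain from responding that much sooner.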

In fact, the technology now exists to enable ‘intelligent’ cars to detect dangerous situations on the road ahead (e.g., adaptive radar cruise control systems and computerized safety systems that enable cars to communicate with each other prior to potential collisions), which in theory means that the cars of the future could soon take control away from the driver and become autonomous (Knight, 2006). However, at present, car manufacturers and governmental organizations appear to prefer that the control of the car remains in the hands of the drivers (though see Smith, 2008). The primary reason for this is related to the legal implications and issues arising should an accident occur during automatic cruising, such as those relating to liability for the accident (see Hutton and Smith, 2005; Knight, 2006). Therefore, investigations into the design of optimal warning signals that can alert drivers to potential dangers are essential given the increasing availability of advanced driver assistance systems (see Yomiuri Shimbun, 2008).

A great deal of empirical effort has gone into studying how best to alert and warn inattentive drivers of impending road dangers. For example, a number of researchers have suggested that non-visual (e.g., auditory and/or tactile) warning signals could be used in interface design (e.g., Deatherage, 1972; Hirst and Graham, 1997; Horowitz and Dingus, 1992; Lee et al., 2004; Sorkin, 1987; Stokes, Wickens, and Kite, 1990; see Table 1.1).

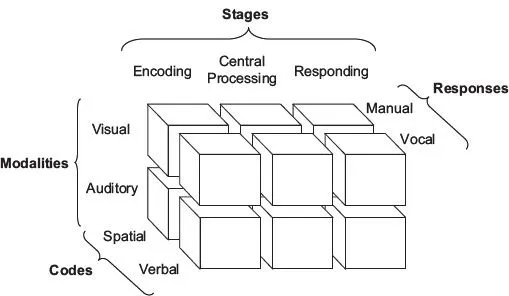

Multisensory integration

It is our belief that one increasingly important way in which to design effective in-car interfaces is by understanding the limitations of the human brain’s information processing system (see Spence and Ho, forthcoming). This area of research is known as ‘neuroergonomics’ (e.g., see Fafrowicz and Marek, 2007; Sarter and Sarter, 2003). Traditional theories of human information processing have typically considered individual sensory modalities, such as audition, vision and touch, as being independent. In particular, the influential multiple resource theory (MRT) of human workload and performance, originally proposed by Christopher Wickens more than a quarter of a century ago (see, e.g., Wickens, 1980, 1984, 1992, 2002), specifically postulated the existence of independent pools of attentional resources for the processing of visual and auditory information (Hancock, Oron-Gilad, and Szalma, 2007; see Figure 1.1). According to the multiple resources account, when people are engaged in concurrent tasks that consume their visual and auditory resources simultaneously, such as talking on the mobile phone while driving, there should be no dual-task cost due to the putative independence of the attentional resources concerned. That is, according to Wickens’ theory, the conversation is processed by the auditory-verbal-vocal pathway, while the act of driving is processed by the visual-spatial-manual pathway. Therefore, these two processes should not, in theory, conflict with each other.
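The prediction described above can be sketched as a toy model in which each task is represented as a set of resource pools, and a dual-task cost is predicted only when two tasks share a pool. This is a deliberately simplified illustration of the independence assumption, not Wickens' full model; the pool labels follow the pathway names used in the text, while the function and constant names are hypothetical.

```python
from typing import FrozenSet

# Resource pools named after the two pathways described in the text.
CONVERSATION: FrozenSet[str] = frozenset({"auditory", "verbal", "vocal"})
DRIVING: FrozenSet[str] = frozenset({"visual", "spatial", "manual"})


def shared_pools(task_a: FrozenSet[str], task_b: FrozenSet[str]) -> FrozenSet[str]:
    """Return the resource pools that both tasks draw on."""
    return task_a & task_b


def predicts_dual_task_cost(task_a: FrozenSet[str], task_b: FrozenSet[str]) -> bool:
    """Under the independence assumption, a cost arises only if pools overlap."""
    return bool(shared_pools(task_a, task_b))


if __name__ == "__main__":
    print(predicts_dual_task_cost(CONVERSATION, DRIVING))
```

Under this simplification the conversation and driving sets are disjoint, so no dual-task cost is predicted, which is exactly the claim that the multisensory evidence calls into question.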

Table 1.1 Advantages of non-visual (auditory and/or tactile) warning signals for interface design

| Purported advantages of non-visual over visual warning signals | Study |
| --- | --- |
| Responses to auditory and tactile signals typically more rapid than to visual signals. | Jordan (1972); Nelson, McCandlish, and Douglas (1990); Todd (1912) |
| Non-visual warning signals should not overload the driver’s ‘visual’ system (cf. Sivak, 1996). | McKeown and Isherwood (2007); though see Spence and Driver (1997b); Spence and Read (2003) |
| Inherently more alerting. | Campbell et al. (1996); Gilmer (1961); Posner, Nissen, and Klein (1976) |
| Non-visual warnings do not depend for their effectiveness on the current direction of a driver’s gaze. They are perceptible even when the drivers’ eyes are closed, such as during blinking; or when the visual system is effectively ‘turned off’, such as during saccades that typically occur several times a second. | Bristow, Haynes, Sylvester, Frith, and Rees (2005); Hirst and Graham (1997); Stanton and Edworthy (1999) |
| Non-visual warning signals tend to be judged as less annoying than many other kinds of warning signal. | Lee et al. (2004); McGehee and Raby (2003) |

The evidence that has emerged from both behavioural and neuroimaging studies over the last decade or so has, however, argued against this traditional view (see Navon, 1984). Instead, the recently developing multisensory approach to human information processing has put forward the view that people typically integrate the multiple streams of sensory information coming from each of their senses (e.g., vision, audition, touch, olfaction and taste) in order to generate coherent multisensory perceptual representations of the external world (e.g., see the chapters in Calvert, Spence, and Stein, 2004; Spence and Driver, 2004). In fact, the multisensory integration of inputs from different sensory modalities appears to be the norm, not the exception. What is more, the research published to date has shown that multisensory integration takes place automatically under the majority of experimental conditions (see Navarra, Alsius, Soto-Faraco, and Spence, forthcoming, for a review). Linking back to the limited capacity of human information processing, interference or bottlenecks in attention could therefore occur at no fewer than two different stages, specifically at the modality-specific level and/or at the crossmodal level that is shared between different sensory modalities (as well as at the perceptual stage, see Lavie, 2005, and at the response selection stage, see Levy, Pashler, and Boer, 2006; Pashler, 1991; Schumacher et al., 2001).

Figure 1.1 Schematic diagram of the Multiple Resource Theory of human workload and performance proposed by Wickens (e.g., 1980, 2002)

The extensive body of evidence that has emerged from recent laboratory-based research has provided support for the existence of robust crossmodal links in spatial attention between different sensory modalities (see Spence and Driver, 2004; Spence and Gallace, 2007). In particular, the available research now suggests that the efficiency of human multisensory information processing can be enhanced if the relevant information provided to the different senses is presented from approximately the same spatial location (see Driver and Spence, 2004) at approximately the same time (Spence and Squire, 2003). The research has also shown that it is normally harder to selectively attend to one sensory signal if a concurrent (but irrelevant) signal coming from another sensory modality is presented from approximately the same location (see Spence and Driver, 1997b; Spence, Ranson, and Driver, 2000b). This also implies that it may be harder to ignore a sensory signal in one sensory modality if it is presented at (or near) the current focus of a person’s spatial attention in another sensory modality (see Spence et al., 2000b).

Crossmodal links in spatial attention have now been observed between all possible combinations of auditory, visual and tactile stimuli (Spence and Driver, 1997a, 2004). These links in spatial attention have been shown to influence both exogenous and endogenous attentional orienting (Klein, 2004; Klein and Shore, 2000; Posner, 1980; see Driver and Spence, 2004; Spence, McDonald, and Driver, 2004, for reviews). Exogenous orienting refers to the stimulus-driven (or bottom-up) shifting of a person’s attention whereby the reflexive orienting of attention occurs as a result of external stimulation. By contrast, endogenous orienting refers to the voluntary shifting of a person’s attention that is driven internally by top-down control. A variety of laboratory-based research has suggested that independent mechanisms may control these two kinds of attentional orienting (e.g., Berger, Henik, and Rafal, 2005; Hopfinger and West, 2006; Klein and Shore, 2000; Santangelo and Spence, forthcoming; Spence and Driver, 2004).

Current approaches to the design of auditory warning signals

Over the years, a number of different approaches to the design of effective auditory warning signals have been proposed. These include the use of spatially-localized auditory warning signals (e.g., Begault, 1993, 1994; Bliss and Acton, 2003; Bronkhorst, Veltman, and van Breda, 1996; Campbell et al., 1996; Humphrey, 1952; Lee, Gore, and Campbell, 1999), the use of multisensory warning signals (e.g., Hirst and Graham, 1997; Kenny, Anthony, Charissis, Darawish, and Keir, 2004; Mariani, 2001; Mowbray and Gebhard, 1961; Selcon, Taylor, and McKenna, 1995; Spence and Driver, 1999; see also Haas, 1995; Spence and Ho, forthcoming) and the use of synthetic warning signals (such as auditory earcons; e.g., Lucas, 1995; McKeown and Isherwood, 2007) that have been artificially engineered to deliver a certain degree of perceived urgency.

To date, these various different approaches have met with mixed success (see Lee et al., 1999; cf. Rodway, 2005). For example, researchers have found that people often find it difficult to localize auditory warning signals, especially when they are presented in enclosed spaces such as inside a car, hence often negating any benefit associated with the spatial attributes of the warning sound (see Bliss and Acton, 2003; Fitch, Kiefer, Hankey, and Kleiner, 2007). Meanwhile, other researchers have reported favourably on the potential use of directional sounds in confined spaces (e.g., Cabrera, Ferguson, and Laing, 2005; Catchpole, McKeown, and Withington, 2004).

Moreover, it often takes time for interface operators to learn the arbitrary association between a particular auditory earcon and the appropriate response, as the perceived urgency is transmitted by the physical characteristics of the warning signal itself (such as the rate of presentation, the fundamental frequency of the sound and/or its loudness, etc.; e.g., Edworthy, Loxley, and Dennis, 1991; Graham, 1999; Haas and Casali, 1995; Haas and Edworthy, 1996). It would appear, therefore, that unless auditory earcons can somehow be associated with intuitive responses on the part of the driver (Graham, 1999; Lucas, 1995), they should not be used in dangerous situations to which an interface operator (and, in particular, the driver of a road vehicle) may only rarely be exposed, since they may fail to produce the appropriate actions immediately (see also Guillaume, Pellieux, Chastres, and Drake, 2003).

Given these limitations in the use of traditional warning signals, a number of researchers have attempted to investigate whether auditory icons (i.e., sounds that imitate real-world events; Gaver, 1986) might provide more effective warning signals, the idea being that they should inherently convey the meaning of the events that they are meant to signify (e.g., Blattner, Sumikawa, and Greenberg, 1989; Gaver, 1989; Gaver, Smith, and O’Shea, 1991; Lazarus and Höge, 1986; McKeown, 2005). Over the years, the effectiveness of a variety of different ‘urgent’ auditory icons has been evaluated in terms of their ability to capture a person’s attention, and perhaps more importantly, to elicit the appropriate behavioural responses from them (e.g., see Deatherage, 1972; Oyer ...

Table of contents

- Cover

- Half Title

- Title Page

- Copyright Page

- Table of Contents

- List of Figures

- List of Tables

- Acknowledgements

- Chapter 1 Introduction

- Chapter 2 Driven to Distraction

- Chapter 3 Driven to Listen

- Chapter 4 The Auditory Spatial Cuing of Driver Attention

- Chapter 5 The Vibrotactile Spatial Cuing of Driver Attention

- Chapter 6 The Multisensory Perceptual versus Decisional Facilitation of Driving

- Chapter 7 The Multisensory Spatial Cuing of Driver Attention

- Chapter 8 Conclusions

- References

- Index

Frequently asked questions

Yes, you can access The Multisensory Driver by Cristy Ho and Charles Spence in PDF and/or ePUB format, as well as other popular books in Computer Science & Human-Computer Interaction.