1 Neuromorphic Engineering

“The human brain performs computations inaccessible to the most powerful of today’s computers—all while consuming no more power than a light bulb. Understanding how the brain computes reliably with unreliable elements, and how different elements of the brain communicate, can provide the key to a completely new category of hardware (Neuromorphic Computing Systems) and to a paradigm shift for computing as a whole. The economic and industrial impact is potentially enormous.”

Human Brain Project (2014)

Complexity manifests in our world in countless ways [1, 2]. Social interactions between large populations of individuals [3], physical processes such as protein folding [4], and biological systems such as the function of human brain areas [5] are examples of complex systems, each of which has an enormous number of interacting parts that lead to emergent global behavior. Emergence is a type of behavior that arises only when many elements in a system interact strongly and with variation [6], which makes it very difficult to capture with reductionist models. Understanding, extracting knowledge about, and creating predictive models of emergent phenomena in networked groups of dynamical units represent some of the most challenging questions facing society and scientific investigation. Emergent phenomena play an important role in gene expression, brain disease, homeland security, and condensed matter physics. Analyzing complex and emergent phenomena requires data-driven approaches in which reams of data are synthesized by computational tools into probable models and predictive knowledge. Most current approaches to complex system and big-data analysis are software solutions that run on traditional von Neumann machines; however, the interconnected structure that leads to emergent behavior is precisely the reason why complex systems are difficult to reproduce in conventional computing frameworks. Memory and data interaction bandwidths greatly constrain the types of informatic systems that are feasible to simulate.

The human brain is believed to be the most complex system in the universe. It has approximately 10^11 neurons, and each neuron is connected to up to 10,000 other neurons, communicating with each other via as many as 10^15 synaptic connections. The brain is also indubitably a natural standard for information processing, one that has been compared to artificial processing systems since their earliest inception. It is estimated to perform between 10^13 and 10^16 operations per second while consuming only 25 W of power [7]. Such exceptional performance is in part due to the neuron biochemistry, its underlying architecture, and the biophysics of neuronal computation algorithms. The brain as a processor differs radically from computers today, both at the physical level and at the architectural level.

Brain-inspired computing systems could potentially offer paradigm-defining degrees of data interconnection throughput (a key correlate of emergent behavior), which could enable the study of new regimes in signal processing. At least some of these regimes (e.g., real-time complex system assurance and big data awareness) answer a pronounced societal need for new signal processing approaches. Unconventional computing platforms inspired by the human brain could simultaneously break performance limitations inherent in traditional von Neumann architectures when solving particular classes of problems.

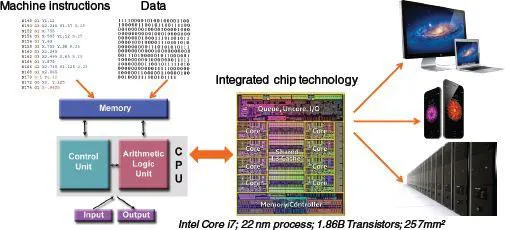

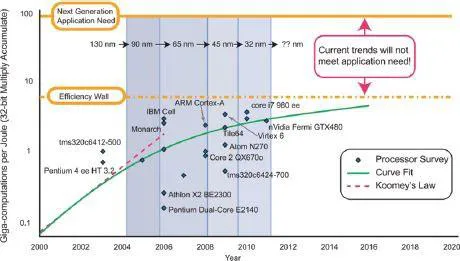

Conventional digital computers are based on the von Neumann architecture [8] (also called the Princeton architecture). As shown in Fig. 1.1, it consists of a memory that stores both data and instructions, a central processing unit (CPU), and inputs and outputs. Instructions and data stored in the memory unit lie behind a shared multiplexed bus, which means that both cannot be accessed simultaneously. This leads to the well-known von Neumann bottleneck [9], which fundamentally limits the performance of the system, a problem that is aggravated as CPUs become faster and memory units larger. Nonetheless, this computing paradigm has dominated for over 60 years, driven in part by the continual progress described by Moore’s law [10] for CPU scaling and Koomey’s law [11] for energy efficiency (multiply-accumulate (MAC) operations per joule), which compensated for the bottleneck. Over the last several years, though, such scaling has not kept pace and is approaching an asymptote (see Fig. 1.2). The computation efficiency levels off below 10 MMAC/mW (equivalently, 10 GMAC/W or 100 pJ per MAC) [12]. The reasons behind this trend can be traced to both the representation of information at the physical level and the interaction of processing with memory at the architectural level [13].
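The three ways of quoting the efficiency wall above (10 MMAC/mW, 10 GMAC/W, 100 pJ per MAC) can be checked against one another with a short calculation. The Python sketch below is only a unit check; it assumes that "MMAC/mW" means millions of MAC operations per second per milliwatt, and the variable names are illustrative rather than taken from the text.

```python
# Unit check for the efficiency wall quoted in the text.
# Assumption: "10 MMAC/mW" means 10 million MAC operations per second per milliwatt.
MAC_PER_SECOND_PER_MILLIWATT = 10e6

# 1 mW = 1e-3 J/s, so dividing by 1e-3 converts to MAC operations per joule.
mac_per_joule = MAC_PER_SECOND_PER_MILLIWATT / 1e-3

# MAC per joule equals (MAC per second) per watt; rescale to giga-MAC.
gmac_per_watt = mac_per_joule / 1e9

# Energy per operation is the reciprocal, expressed here in picojoules.
picojoule_per_mac = 1e12 / mac_per_joule

print(f"{gmac_per_watt:.0f} GMAC/W")
print(f"{picojoule_per_mac:.0f} pJ per MAC")
```

Under that reading of the units, the three figures describe the same limit.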

At the device level, digital CMOS is reaching physical limits [14, 15]. As CMOS feature sizes scale down below 90 nm to 65 nm, the voltage, capacitance, and delay no longer scale at the well-defined rate given by Dennard’s law [16]. This leads to a tradeoff between performance (when the transistor is on) and subthreshold leakage (when it is off). For example, as the gate oxide (which serves as an insulator between the gate and channel) is made as thin as possible (1.2 nm, around five silicon atoms thick) to increase the channel conductivity, quantum mechanical electron tunneling [17, 18] occurs between the gate and channel, leading to increased power consumption. The computational power efficiency of biological systems, on the other hand, is around 1 aJ per MAC operation, which is eight orders of magnitude better than the power efficiency wall for digital computation (100 pJ/MAC) [12, 13]. There is a widening gap (see Fig. 1.2) between the efficiency wall (supply) and the needs of next-generation applications (demand) with the incipient rise of big data and complex systems.
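The size of the gap between digital and biological efficiency follows directly from the two energy figures quoted above. As a minimal sketch, the Python snippet below takes those figures from the text (100 pJ per MAC for the digital wall, roughly 1 aJ per MAC for biological systems) and computes their ratio in orders of magnitude; the function and constant names are hypothetical.

```python
import math

# Energy per MAC operation, in joules, as quoted in the text.
DIGITAL_WALL_J_PER_MAC = 100e-12   # 100 pJ per MAC
BIOLOGICAL_J_PER_MAC = 1e-18       # about 1 aJ per MAC

def orders_of_magnitude(larger: float, smaller: float) -> float:
    """Return log10 of the ratio larger / smaller."""
    return math.log10(larger / smaller)

gap = orders_of_magnitude(DIGITAL_WALL_J_PER_MAC, BIOLOGICAL_J_PER_MAC)
print(f"Efficiency gap: about {gap:.0f} orders of magnitude")
```

The ratio is 10^-10 J divided by 10^-18 J, or 10^8, which matches the eight orders of magnitude stated in the text.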

At the architectural level, brain-inspired platforms approach information processing by focusing on substrates that exhibit interconnected causal structures and dynamics analogous to the complex systems present in our world, which can only be virtualized in conventional computing frameworks. Computational tools have been revolutionary in hypothesis testing and simulation and have led to the discovery of innumerable theories in science, and they will be an indispensable aspect of a holistic approach to problems in big data and many-body physics. However, huge gaps between the information structures in observed systems and standard computing architectures motivate a need for alternative paradigms if computational abilities are to be brought to the growing class of problems associated with complex systems.

Over the last several years, there has been a deeply committed exploration of unconventional computing techniques (neuromorphic engineering) to alleviate the device-level and system/architectural-level challenges faced by conventional computing platforms [12, 19–32]. Neuromorphic engineering aims to build machines that employ basic nervous-system operations by bridging the physics of biology with engineering platforms (see Fig. 1.3), enhancing performance for applications that interact with natural environments, such as vision and speech [12]. As such, neuromorphic engineering is going through a very exciting period, as it promises processors that use little energy while integrating massive amounts of information. These neural-inspired systems are typified by a set of computational principles, including hybrid analog-digital signal representations, co-location of memory and processing, unsupervised statistical learning, and distributed representations of information.

Information representation can have a profound effect on information processing. In what is considered the third generation of neuromorphic electronics, approaches are typified by their use of spiking signals. Spiking is a sparse coding scheme recognized by the neuroscience community as a neural encoding strategy for information processing [33–38], and has firm code-theoretic justifications [39–41]. Digital in amplitude but temporally analog, spike codes exhibit the expressiveness and efficiency of analog processing with the robustness of digital communication. This distri...