- 496 pages

- English

- ePUB

Distributed System Design

About this book

Future requirements for computing speed, system reliability, and cost-effectiveness entail the development of alternative computers to replace the traditional von Neumann organization. As computing networks come into being, one of the latest dreams is now possible: distributed computing.

Distributed computing brings transparent access to as much computing power and data as the user needs to accomplish any given task, while simultaneously achieving high performance and reliability.

The subject of distributed computing is diverse, and many researchers are investigating various issues concerning the structure of hardware and the design of distributed software. Distributed System Design defines a distributed system as one that looks to its users like an ordinary system, but runs on a set of autonomous processing elements (PEs) where each PE has a separate physical memory space and the message transmission delay is not negligible. With close cooperation among these PEs, the system supports an arbitrary number of processes and dynamic extensions.

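Distributed System Design outlines the main motivations for building a distributed system, including inherently distributed applications, performance/cost, resource sharing, flexibility and extensibility, availability and fault tolerance, and scalability.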

Presenting basic concepts, problems, and possible solutions, this reference serves graduate students in distributed system design as well as computer professionals analyzing and designing distributed/open/parallel systems.

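Chapters discuss distributed programming languages, formal approaches to distributed system design, mutual exclusion and election algorithms, the prevention, avoidance, and detection of deadlock, distributed routing (including adaptive, deadlock-free, and fault-tolerant routing), reliability, static and dynamic load distribution, distributed data management, and distributed system applications.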

Chapter 1

Introduction

1.1 Motivation

- Inherently distributed applications. Distributed systems have come into existence in some very natural ways; for example, in our society people are distributed, so information should be distributed as well. In a distributed database system, information is generated at different branch offices (sub-databases) so that local access can be done quickly, while the system also provides a global view to support various global operations.

- Performance/cost. The parallelism of distributed systems reduces processing bottlenecks and provides improved all-around performance, i.e., distributed systems offer a better price/performance ratio.

- Resource sharing. Distributed systems can efficiently support information and resource (hardware and software) sharing for users at different locations.

- Flexibility and extensibility. Distributed systems are capable of incremental growth and have the added advantage of facilitating modification or extension of a system to adapt to a changing environment without disrupting its operations.

- Availability and fault tolerance. With the multiplicity of storage units and processing elements, distributed systems have the potential ability to continue operation in the presence of failures in the system.

- Scalability. Distributed systems can be easily scaled to include additional resources (both hardware and software).

1.2 Basic Computer Organizations

Figure 1.1: Two basic computer structures: (a) physically shared memory structure and (b) physically distributed memory structure.

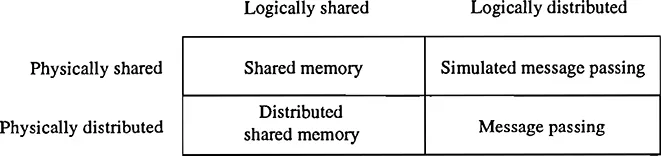

Physically versus logically shared/distributed memory.

- A physically shared memory structure (Figure 1.1(a)) has a single memory address space shared by all the CPUs. Such a system is also called a tightly coupled system. In a physically shared memory system, communication between CPUs takes place through the shared memory using read and write operations.

- A physically distributed memory structure (Figure 1.1(b)) has no shared memory; each CPU has its own attached local memory. The CPU and local memory pair is called a processing element (PE) or simply a processor. Such a system is sometimes referred to as a loosely coupled system. In a physically distributed memory system, communication between processors is done by passing messages across the interconnection network, using a send command at the sending processor and a receive command at the receiving processor.
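To make the contrast between the two communication styles concrete, here is a minimal Python sketch (an illustration, not code from the book). The function names `shared_memory_sum` and `message_passing_sum` are hypothetical. In the first, workers communicate by reading and writing one shared variable guarded by a lock. In the second, each worker keeps its data local and sends its result as a message, with a `queue.Queue` standing in for the interconnection network. Python threads always share one address space, so the second function only simulates a distributed-memory system by convention.

```python
import queue
import threading


def shared_memory_sum(values, n_workers=4):
    """Tightly coupled style: workers communicate through shared memory."""
    total = [0]                # the single "shared memory" location
    lock = threading.Lock()    # serializes concurrent writes

    def worker(chunk):
        partial = sum(chunk)
        with lock:             # write operation on shared memory
            total[0] += partial

    chunks = [values[i::n_workers] for i in range(n_workers)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]            # read operation on shared memory


def message_passing_sum(values, n_workers=4):
    """Loosely coupled style (simulated): each worker acts as a PE with
    only local data and reports its result by message passing."""
    channel = queue.Queue()    # stands in for the interconnection network

    def worker(pe_id, local_data):
        channel.put((pe_id, sum(local_data)))    # send

    chunks = [values[i::n_workers] for i in range(n_workers)]
    threads = [
        threading.Thread(target=worker, args=(i, c))
        for i, c in enumerate(chunks)
    ]
    for t in threads:
        t.start()
    total = 0
    for _ in range(n_workers):
        _pe_id, partial = channel.get()          # receive (blocks until a message arrives)
        total += partial
    for t in threads:
        t.join()
    return total
```

Both `shared_memory_sum(list(range(100)))` and `message_passing_sum(list(range(100)))` return 4950. The difference lies in how partial results travel: through a shared location in the first case, and through explicit send and receive operations in the second.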

Table of contents

- Cover Page

- Title Page

- Copyright Page

- Dedication

- Contents

- Preface

- Biography

- Chapter 1 INTRODUCTION

- Chapter 2 DISTRIBUTED PROGRAMMING LANGUAGES

- Chapter 3 FORMAL APPROACHES TO DISTRIBUTED SYSTEMS DESIGN

- Chapter 4 MUTUAL EXCLUSION AND ELECTION ALGORITHMS

- Chapter 5 PREVENTION, AVOIDANCE, AND DETECTION OF DEADLOCK

- Chapter 6 DISTRIBUTED ROUTING ALGORITHMS

- Chapter 7 ADAPTIVE, DEADLOCK-FREE, AND FAULT-TOLERANT ROUTING

- Chapter 8 RELIABILITY IN DISTRIBUTED SYSTEMS

- Chapter 9 STATIC LOAD DISTRIBUTION

- Chapter 10 DYNAMIC LOAD DISTRIBUTION

- Chapter 11 DISTRIBUTED DATA MANAGEMENT

- Chapter 12 DISTRIBUTED SYSTEM APPLICATIONS

- INDEX
