- 226 pages

- English


Nonlinear Psychophysical Dynamics

About this book

Nonlinear Psychophysical Dynamics utilizes new results in systems theory as a foundation for representing sensory channels as a form of recursive loop processes. It demonstrates that a range of phenomena, previously treated as diverse or anomalous, are more readily seen as related and as the natural consequence of self-regulation and nonlinearity. Some cases with appropriate data analysis are reviewed.


1 General Qualitative Dynamics of some Nonlinear Systems

Nonlinear systems have become a focus of intense and extensive intellectual activity, not only in physics but, by derivation, in the biological sciences (Schuster, 1984; Hao, 1984; Holden, 1986). An approach to modelling complex and traditionally intractable processes of such generality obviously can be the subject of an overview from a very wide perspective; this has already been done very competently by the authors just cited, and is not our objective here. Rather, within the limited framework of a nonlinear dynamical systems theory approach, we concentrate upon only one topic: the generation of sensory intensity as a response to a physically varying environment.

This approach is a sort of theoretical psychophysics, in which a transfer function representation is written without regard to the specific mechanisms of sensory transduction in a given modality such as vision, hearing, touch, smell, or whatever. It is in contradistinction to the approach taken, for example, by Laming (1986), which focusses on stochastic models of the initial stage in the perceptual process, and so we are here closer to what he has called Sensory Judgment as opposed to Sensory Analysis. Whether the two can be so readily demarcated is not a settled matter, but this author prefers to think of the two approaches as complementary. Most detailed models in psychophysics are very properly concerned with what may be called¹ (a) the microfunctional representation of the signal conversion from environmental to neural events, (b) the series-parallel transmission of signal sequences in neural pathways from receptors to higher brain centres, and (c) the selection of output representations (responses) of some of the properties of the neural activity stored in the higher centres.

¹ I am indebted to Prof. Dr. U. Mortensen for fruitful discussions on this topic.

Our focus here is heavily on (c), and we choose to telescope (a) and (b) into a single one⇒one, or a related noisy, mapping of input onto a central state variable. Thus the interest is in the mass action of sensory systems that exhibit some input-output invariances under restricted but identifiable stimulus conditions. These invariances are not necessarily simple, and may be represented only in the structure of nonlinear difference or recursion equations, whereas the parameters reflect in their variability the current condition of the observer as a system.

It is perhaps easiest to begin with a relatively familiar result and work away from it. Traditionally the zero-order (i.e. time-independent) relationship between physical stimulus magnitudes and psychological response intensity is represented as a psychometric function which is:

(a) continuous and everywhere single-valued

(b) bounded both above and below

(c) monotone increasing between the bounds

(d) approximated by a cumulative normal ogive (CNO)
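As a minimal sketch of properties (a) to (d), the following Python function evaluates a cumulative normal ogive rescaled between two bounds. The function name `cno` and the parameters `mu`, `sigma`, `lower` and `upper` are illustrative assumptions, not notation taken from the text.

```python
import math

def cno(stimulus, mu=0.0, sigma=1.0, lower=0.0, upper=1.0):
    """Cumulative normal ogive rescaled to lie between `lower` and `upper`.

    `mu` and `sigma` are hypothetical location and spread parameters.
    """
    z = (stimulus - mu) / (sigma * math.sqrt(2.0))
    phi = 0.5 * (1.0 + math.erf(z))  # standard normal CDF via the error function
    return lower + (upper - lower) * phi

# Evaluate on a small grid of stimulus magnitudes (arbitrary units).
levels = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
probs = [cno(s) for s in levels]
```

The values in `probs` are single-valued, stay between the two bounds, and increase with the stimulus level, which is all that the traditional picture requires.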

This picture is found in the older textbooks, well-established by the first decade of the 20th century, with some second-order modifications (see Guilford, 1954, for an extensive review of such early curve-fitting). The ubiquitous normal curve assumption of course pervades the models of Thurstone (1927) and again surfaces in the more usual, but not necessary (Egan, 1975), assumptions of signal detection theory.

Notwithstanding the very good fits that psychophysical data can yield when plotted as a straight line on log-probability paper, given some meticulousness in controlling the conditions of data collection, repeated attempts do surface from time to time to derive the psychometric function from other process assumptions (e.g. Freeman, 1975, and a symposium in the British Journal of Mathematical and Statistical Psychology, 1984). A possible rationale for accepting the CNO form stems from the idea that sensation is mediated by a vast number of independent elements or channels, and a progressive temporary recruitment from the population operates as the level of input to the system is increased, so that more and more elements are incorporated in the process or channel to carry stronger and stronger signals. The weakness of this conceptualisation quite simply lies in the notion that neural substrate mass action is appropriately modelled by a vast aggregate of independent elements; in fact the channels are in a network and the minimal connectivity of such a network can be shown to support stable dynamic properties which a random collection could not display (Peretto and Niez, 1986a,b).
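The recruitment rationale (not the network objection to it) can be sketched numerically. The simulation below assumes, purely for illustration, that each independent element has its own threshold drawn from a normal distribution and is recruited whenever the input level reaches that threshold; the names `recruited_fraction` and `normal_cdf` are hypothetical.

```python
import math
import random

def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def recruited_fraction(level, thresholds):
    """Proportion of independent elements whose threshold is at or below `level`."""
    return sum(t <= level for t in thresholds) / len(thresholds)

# Assumption: thresholds are independent draws from a unit normal distribution.
rng = random.Random(0)
thresholds = [rng.gauss(0.0, 1.0) for _ in range(20000)]

levels = [-2.0, -1.0, 0.0, 1.0, 2.0]
max_gap = max(abs(recruited_fraction(u, thresholds) - normal_cdf(u)) for u in levels)
```

Under these assumptions the recruited fraction tracks the CNO closely, so `max_gap` is small. The sketch says nothing about connected networks, which is precisely where the independence assumption is criticised.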

The interest in locally connected networks, where each element has some interaction with its neighbours, and interaction is not necessarily inversely proportional to the functional (not literal physical) distances between elements, lies in various properties. For example:

(1) The dynamics of large aggregates in connected networks are independent of the number of elements involved; they do not increase in complexity with the size of the network.

(2) The number of patterns of nonlinear stability and instability, such as catastrophes, limit cycles, and hysteresis, is limited, and in many cases they can consequently be identified and modelled.

(3) Large aggregates may function effectively as single recursive processes, and exhibit characteristics of a single feedback loop, though probably with a complicated (high order) transfer function.

All this means that large collections of neurons, which are the substrate of any sensory pathway to consciousness, have their own level of dynamics which are not derivable just by adding up the activity of their components. The behaviour of a collection of idealized cells, each with inhibitory and excitatory inputs and outputs, is not something like a complicated telephone exchange. A network or aggregate of interconnected cells has emergent properties which can be at one moment very simple, at another very complicated, depending upon the level of input and the subsequent rate of energy dissipation. Fortuitously, when such behaviour is simple it almost looks like traditional psychophysics.
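The way a single recursive process can be simple at one parameter setting and complicated at another can be illustrated with the logistic map, a standard textbook recursion that is swapped in here for illustration and is not the equation developed in this book. The parameter `r` loosely stands in for the level of drive; the function name `iterate_logistic` is hypothetical.

```python
def iterate_logistic(r, x0=0.2, steps=300):
    """Iterate x[n+1] = r * x[n] * (1 - x[n]) and return the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

# Low drive: the trajectory settles onto the single fixed point 1 - 1/r.
calm = iterate_logistic(2.5)

# Higher drive: the trajectory keeps wandering over many distinct values.
erratic = iterate_logistic(3.9)
distinct_late_values = len(set(round(x, 6) for x in erratic[-50:]))
```

With `r = 2.5` the late values are indistinguishable from the fixed point 0.6, whereas with `r = 3.9` the last fifty iterates do not settle onto a small repeating set.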

Previous examples suggest that there are two main ways to go in exploring a nonlinear psychophysics which can potentially relate back into the modelling of the related neurophysiological substrates at the mass action level; either a recursive complex difference equation of order 3 or above, or three or more linear difference equations (in 3 variables) coupled to each other. The second approach includes the Lorenz (1963) equations which have been the starting-point for many analyses and simulations of physical systems. However, in psychophysics, as distinct from neurophysiology, there is rarely sufficient justification for using systems of coupled equations; we do not know enough about a sensory system to justify such strong hypotheses. It is thus expedient to sketch out the difference between psychophysics and neurophysiology a bit further.

Although psychophysical models, which are typically very simple linear input-output equations, are written without specifying that they depend on the choice of units and the sensory dimension involved, obviously their solution involves fixing parameter values which are characteristic of the dimension within the modality which is being studied. Even if the representation of sensory intensity is supposed to have some general common basis extending across modalities, which makes it possible to write of and conduct experiments on cross-modal matching of apparently qualitatively diverse dimensions of sensation, such as loudness and stickiness (!!!), as soon as we move to the very detailed study of a specific modality the dominance of questions relating neurophysiological activity to experienced sensation quality and intensity over any very general formalization is inevitable.

The extensive and detailed models of psychophysics are almost all specific to vision or to hearing, and it is consequently mainly in those two modalities that there exist data bases, derived with separate experimental methodologies, that are sufficiently firmly based in detailed parameter estimation for it to be possible to derive theoretical psychophysical input-output topologies from neurophysiological assumptions and data. Proceeding in the reverse direction is not possible because psychophysical relations are overall input-output gain functions and so cannot logically entail tight constraints on the neurophysical substrate’s functional equations. There are many ways internally of achieving the external properties of a black box. One may express this redundancy by saying that there is a many⇒one mapping from X onto Y, where

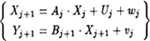

| [1.1] |

in state space notation; the $U_j$ are the stimuli on trial $j$, the $X_j$, $X_{j+1}$ are the central processes serving as state variables and the $Y_{j+1}$ are the observable psychophysical outputs or quantified responses. The $w_j$ and $v_j$ are noise; no constraining assumptions are made about var($w$), var($v$), covar($w$, $v$), or their autoregressions. Putting suffixes on $A$ and $B$ indicates that these operators are themselves potentially time-dependent. Obviously [1.1] is too loose to serve as more than a heuristic, but starting with a generalised nonlinear equation of Volterra form is a comparable exercise which has been pursued (an der Heiden, 1980). In psychophysics a system theory approach which treats some formal representation of the (implicit, unobservable) dynamics of sensation as state variables, to be estimated given some constraints on model structure, is viable.
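The redundancy of internal descriptions can be sketched with a deliberately simple scalar state-space recursion. The specific form used here, $X_{j+1} = aX_j + bU_j + w_j$ with output $Y_{j+1} = cX_{j+1} + v_j$, is an assumption made only for illustration and is not claimed to be the form of [1.1]; the constants `a`, `b`, `c` and the function `simulate` are hypothetical.

```python
import random

def simulate(stimuli, a, b, c, noise_sd=0.0, seed=0):
    """Run an assumed scalar state-space recursion and return the outputs Y.

    State:  x_next = a * x + b * u + w
    Output: y_next = c * x_next + v
    """
    rng = random.Random(seed)
    x = 0.0
    outputs = []
    for u in stimuli:
        w = rng.gauss(0.0, noise_sd)  # state noise
        v = rng.gauss(0.0, noise_sd)  # output noise
        x = a * x + b * u + w
        outputs.append(c * x + v)
    return outputs

stimuli = [1.0, 0.5, 2.0, 0.0, 1.5]

# Two different internal parameterisations, noise switched off.
y1 = simulate(stimuli, a=0.5, b=2.0, c=1.0)
y2 = simulate(stimuli, a=0.5, b=1.0, c=2.0)
```

The two runs give the same outputs although their internal state sequences differ by a factor of two, so the observed input-output relation alone cannot decide between them.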

The properties which neural networks have, as a consequence of their interconnectedness, are exceedingly complex, and suggest that we should expect (as we do indeed get) multistability, and sometimes apparent instability, in the time series representations of output. A network has to be regarded as either transmitting signals cleanly (as though an almost noiseless path has been cut through it), or diffusing the input into an excitation pattern in space and time with high entropy, and perhaps going into reverberations. The most interesting developments in nonlinear systems theory suggest gross analogies between energy transmission in large aggregates of molecules (e.g. the Belousov-Zhabotinsky reaction) and signal transmission in neural assemblies. In both cases the number of “single elements” is $10^8$ or more and the behaviour of single elements is not observable.

The axiomatic structures of complex models of sensory systems commonly presuppose that the distributions of events are Gaussian, and/or that they are perturbed by Gaussian noise. That is, any transmission process may show moment-to-moment variability in the magnitude of its outputs, and this may be treated as the consequence of the output being the result of a large number of partially uncoupled system elements, each probabilistic in time in its transduction properties.

This assumption of Gaussian noise and/or intrinsic variability breaks down if advanced as an unqualified generalisation, because output distributions show statistical forms markedly deviant from the normal distribution. When this happens special additional assumptions about behaviour under limiting cases, such as responding to very weak stimuli, have to be made if we are to retain the assumptions; observed output distributions might then be treated as mixtures of simpler probability functions.

There is yet another problem, which arises from the restriction which may be imposed on the human observer to report only “yes/no” or binary analogues such as “lesser/greater”. If the organism is only capable of detection/non-detection or discrimination/non-discrimination, then the continuous variability of processes within the system has to be degraded in some way when an output is selected by the observer. Commonly some gating mechanism is assumed to operate: if the internal variable $X$ is above a threshold $X_c$, then the output $Y$ is observed. $X_c$ is not itself observable, but is a construct which can be given a stochastic variability in time, with whatever autoregressive structure seems plausible. Of itself such a structure may be insufficient to capture observed output distribution properties, and so some convolution or integration of the $X$ variable to give

| [1.2] |

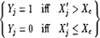

and then use Xj′ as the variable for the stochastic inequality Xj′ ≠ Xc, with

| [1.3] |

as the output decision rule, is advanced.
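A gating scheme of this general kind can be sketched as follows. The moving average used for the integration step and the rule "respond 1 when the smoothed value exceeds the criterion" are assumptions chosen for illustration; they are not the book's [1.2] or [1.3], and the names `smooth`, `decide`, `criterion` and `criterion_sd` are hypothetical.

```python
import random

def smooth(xs, window=3):
    """Assumed integration step: trailing moving average of the internal variable."""
    out = []
    for j in range(len(xs)):
        recent = xs[max(0, j - window + 1): j + 1]
        out.append(sum(recent) / len(recent))
    return out

def decide(xs, criterion=0.5, criterion_sd=0.0, window=3, seed=0):
    """Binary responses: 1 when the smoothed state exceeds a (possibly noisy) criterion."""
    rng = random.Random(seed)
    responses = []
    for x_smoothed in smooth(xs, window):
        x_c = rng.gauss(criterion, criterion_sd)  # stochastic threshold X_c
        responses.append(1 if x_smoothed > x_c else 0)
    return responses

# A short sequence of internal states with the criterion held fixed.
states = [0.0, 0.2, 0.9, 1.0, 0.1, 0.0]
responses = decide(states)
```

Even with a fixed criterion, the binary output lags the internal state because of the integration window; setting `criterion_sd` above zero makes the threshold itself vary from trial to trial.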

The consequence of introducing threshold mechanisms like [1.3] is of course to create nonlinearity, which increases the a priori probability that the model would fit behaviour, because psychological systems are typically nonlinear. Introduct...

Table of contents

- Cover

- Full Title

- Copyright

- Preface

- Frontmatter

- Contents

- 1 General Qualitative Dynamics of some Nonlinear Systems

- 2 Choice of a Recursive Core Equation

- 3 A Recursive Loop System Using Γ

- 4 A Subjective Weber Function and Output Uncertainty

- 5 A Generic Dynamic Mapping of Environment onto Γ

- 6 Further Variants on Mappings of Inputs

- 7 Cascading of the Loop

- 8 Elementary Identification of Variants and Parameters

- 9 Matching Data Patterns and Theory Patterns

- 10 Metric or Nonmetric Scaling: Properties of Outputs

- 11 Analogues of SDT and Isocriterion Plots

- 12 Range and Transposition Effects

- 13 Mixing and Attenuation

- 14 Résumé

- Subject Index


Nonlinear Psychophysical Dynamics by Robert A.M. Gregson is available in PDF and/or ePUB format, and is catalogued under Psychology & Experimental Psychology.