eBook - ePub

The Cerebral Computer

An Introduction To the Computational Structure of the Human Brain

- 552 pages

- English

- ePUB (mobile friendly)

About this book

Viewing the human brain as "the most complex and powerful computer known," with a memory capacity and computational power exceeding the largest mainframe systems, Professor Baron sets the groundwork for understanding the computational structure and organization of the human brain. He provides the introductory framework necessary for this new and growing field of investigation and he discusses human vision, mental imagery, sensory-motor functions, audition, affect and behavior.

The Cerebral Computer by Robert J. Baron is available in PDF and/or ePUB format and is catalogued under Psychology and Cognitive Psychology & Cognition.

1

NEURONS: THE COMPUTATIONAL CELLS OF BRAINS

INTRODUCTION

The fundamental assumption that underlies this entire presentation is that the brain is a computer. It is composed of some hundred billion computational cells, called nerve cells or neurons, which interact in a variety of ways. This chapter will focus on neurons and how they interact with one another.

The brain is highly structured, with its major anatomical connections specified genetically. Some connections appear to be regulated by sensory stimulation early in life, but the extent to which the brain’s architecture is determined by sensory stimulation is only beginning to be understood. In any event, once the physical connections between neurons have been established, they appear to remain fixed for the life of the system.

NEURAL NETWORKS AND THEIR ANALYSIS

Neurons are organized into well-defined and highly structured computational networks called neural networks. Neural networks are the principal computational systems of the brain and there are many types, including receptor networks, encoding and decoding networks, storage networks, and control networks. Many of them have been studied anatomically and have a well-known structure or architecture, and many also have a well-understood computational role. More often, however, the brain’s computational networks, which anatomists sometimes call “nuclei,” “centers,” or “bodies,” have been studied anatomically but their specific computational roles are not yet understood. In other cases, certain computational networks are predicted but their anatomical location and structure are not known.

Each neural network receives inputs through specific input pathways, processes them in a well-defined way, and responds to them through specific output pathways in a manner that depends on current and past inputs. The ultimate goal of this research is to understand all computational activity of the brain in terms of these constituent networks, how they interact with one another, and, for receptor networks, how they react to the environment. We are a long way from achieving this goal.
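The idea of a network whose response depends on both current and past inputs can be illustrated with a small sketch. Everything here is hypothetical and chosen only for illustration: the class name `BlackBoxNetwork`, the `memory` parameter, and the simple rule that each input pathway keeps a decaying trace of its earlier inputs. It is not a model taken from the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BlackBoxNetwork:
    """Hypothetical black box: its output depends on current and past inputs."""
    n_inputs: int
    memory: float = 0.5  # assumed fraction of the previous state carried forward
    state: List[float] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Start with no trace of past inputs on any input pathway.
        if not self.state:
            self.state = [0.0] * self.n_inputs

    def step(self, inputs: List[float]) -> float:
        """Accept one value per input pathway and return one output value."""
        if len(inputs) != self.n_inputs:
            raise ValueError("expected one value per input pathway")
        # Each pathway's state blends its decayed history with the new input.
        self.state = [self.memory * s + x for s, x in zip(self.state, inputs)]
        return sum(self.state)


net = BlackBoxNetwork(n_inputs=2)
first = net.step([1.0, 0.0])   # no history yet
second = net.step([1.0, 0.0])  # same input, but the earlier input still counts
```

Presenting the same input twice gives a larger response the second time, because the earlier input is still represented in the network's state.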

There are many facets to understanding the human brain and its computations. At the most elemental level we seek to understand the mechanisms of the nerve cell. In time, neural interactions will be understood in terms of cell structure, metabolic activity, transport mechanisms, and so forth, and this level of understanding should lead to descriptions of the networks in which both the statistical properties of the nerve cells (spike train statistics) and the electrical activity (presynaptic and postsynaptic potentials, refractory periods, synaptic delays, axonal conduction, etc.) will be quantitatively related to the activities of other neurons and nonneural elements (notably glial cells) that make inputs to the networks. This level of understanding will not be discussed at any depth here.

At a somewhat less elemental level we seek to understand the logical interconnections of small groups of cells. We seek to understand their input-output or computational behavior in terms of the activities of their cells. This is the black box level of modeling, and there are two different methodologies for studying neural networks at this level. One is to select a particular biological network to study, model the structure and interactions of its neurons, and determine by suitable mathematical analysis or computer simulation how the model network behaves. The validity of the model is then checked by comparing its computed properties with the corresponding properties of the biological system as determined experimentally. This methodology is generally adopted by the theoretical biologist when modeling networks such as the nervous system of the crayfish, the eye of the cat, the retina of the frog, the olfactory bulb, and the cerebellum. A second methodology is to define the desired input–output properties of a network and determine what types of neural interactions and structures are required to realize the desired behavior. Within this methodology, experimental and anatomical considerations are generally used to constrain the models. This is the approach I have adopted for studying information processing in the brain, including learning, visual and auditory recognition, information storage and memory, natural language processing, the control of movement, and the affect systems.

The most general level at which one can study brain function is in terms of its component information-processing networks and interactions between them. Thus one may consider a system that contains a receptor network (retina, cochlea, etc.), information-transforming networks, memory stores, adaptive control networks, and so on, without considering in detail the underlying neural activity. This is the system level of modeling. At this level of analysis one looks primarily at the psychological (behavioral) properties of the entire system in terms of its architecture—its functional components and their organization.

NEURONS

Since the primary concern throughout this book will be with the computational nature of the human brain, I will begin with an overview of the neuron and neural interactions. Two facts should temper this discussion. First, there is an enormous variety of neurons in the brain, with fundamental differences in morphology (structure), patterns of connections, and the way that neurons send and receive information. (See for example my discussions on the retina, the superior olive, and the cerebellum later in this book.) This discussion attempts to convey only those features that are common to most types of neurons—the average neuron, so to speak. Second, our current knowledge of neural interactions is limited in many respects, and although there is a large body of knowledge about certain aspects of neural interactions, other aspects are virtually unknown. This discussion describes only those aspects which are best understood.

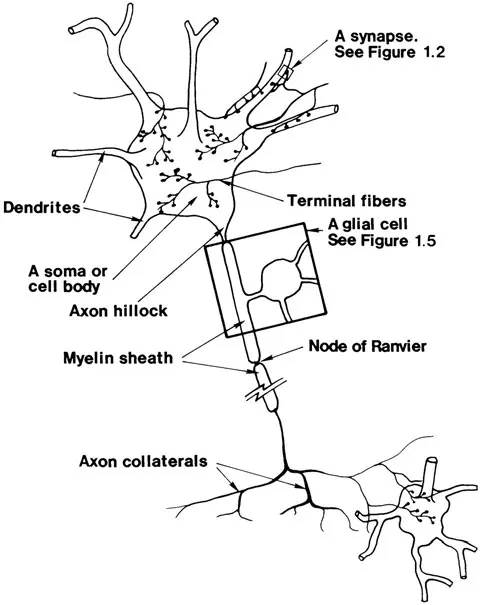

A neuron consists of four parts essential to our understanding: the cell body or soma, dendrites, an axon, and axon branches, collaterals, or terminal fibers. See Figure 1.1. Dendrites are filamentous extensions of the soma which branch many times in the region surrounding the soma, forming the dendrite tree. The region in space occupied by the dendrite tree of a neuron is its dendrite field. The soma and dendrite tree are the receptors of signals from other neurons. A single axon originates at the soma, extends some distance, and divides often many times into a set of axon collaterals. The place on the soma where the axon originates is the axon hillock. Some axon collaterals progress to other parts of the brain where they divide even further before contacting other neurons. The regions in space occupied by the axon collaterals of a neuron are its axon fields. Each terminal fiber ends in a synaptic button, which almost contacts a dendrite branch or soma of another or the same neuron. Each such place of near-contact is called a synapse, and the space separating the two cells is called the synaptic cleft. See Figure 1.2. Synapses are essential for the information processing done by the neurons and will shortly be discussed in more detail.

A neuron normally maintains an ionic concentration gradient across its cell membrane, which produces an electric potential. When the membrane potential at the axon hillock is sufficiently disturbed, a self-sustaining depolarization pulse (sometimes called an impulse, pulse, or spike) propagates along the axon and spreads throughout all axon collaterals. When the potential fluctuation caused by a depolarization pulse reaches a synapse, chemical transmitters, which are ordinarily held in synaptic vesicles, are released. They diffuse across the synaptic cleft to the cell membrane of the postsynaptic neuron, where they bind to receptor sites and may excite (tend to depolarize) or inhibit (tend to hyperpolarize) the postsynaptic cell. Within a short time after the chemical transmitters are released from the nerve endings, they are inactivated enzymatically, reabsorbed by the nerve terminals, or removed from the synaptic area by diffusion. The postsynaptic cell soon returns to its normal resting potential.
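As a rough illustration of excitation, inhibition, and the return to the resting potential, the sketch below treats the postsynaptic fluctuation as an exponentially decaying offset from rest. The exponential form, the function name `membrane_potential`, and the numerical values (a resting potential of -70 mV and a 10 ms time constant) are assumptions made for this example; the text does not specify them.

```python
import math

REST_MV = -70.0  # assumed resting potential, for illustration only
TAU_MS = 10.0    # assumed decay time constant, for illustration only


def membrane_potential(t_ms: float, psp_mv: float,
                       rest_mv: float = REST_MV,
                       tau_ms: float = TAU_MS) -> float:
    """Potential t_ms after transmitter release (hypothetical exponential model).

    A positive psp_mv stands for an excitatory (depolarizing) effect,
    a negative psp_mv for an inhibitory (hyperpolarizing) effect.
    """
    if t_ms < 0:
        return rest_mv  # before release the cell sits at rest
    return rest_mv + psp_mv * math.exp(-t_ms / tau_ms)


excited = membrane_potential(0.0, psp_mv=+5.0)     # pushed above rest
inhibited = membrane_potential(0.0, psp_mv=-5.0)   # pushed below rest
recovered = membrane_potential(100.0, psp_mv=+5.0) # long after release
```

In this sketch the excited and inhibited values start on opposite sides of the resting potential, and after many time constants the potential is again essentially at rest.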

Figure 1.1. The structure of a typical neuron. Only a few of its synaptic contacts are shown.

There are many different types of synapses, as illustrated in Figure 1.3. Synapses of the type just described are axosomatic or axodendritic, depending on whether the contact is to a soma or a dendrite. Axodendritic synapses may also terminate on specialized dendritic structures called dendritic spines, as illustrated at the top of the figure. Synapses between two axons are axoaxonic and those between two dendrites are dendrodendritic. Axosynaptic synapses, illustrated in the figure, are specialized structures often found in receptor networks; their intended computations are not yet understood. Finally, some synapses release chemical transmitters which control capillary constriction, muscle contraction (at structures called motor end plates), or, in some unknown way, the extracellular medium. Synapses of this last class are axoextracellular or free endings.

The influence that the presynaptic neuron has on the firing of a postsynaptic neuron is not exerted at one synapse alone but through many synaptic contacts that are distributed over parts or all of the soma or dendrite tree of the latter. The morphology of a neuron and the geometry and distribution of the contacts between itself and other neurons depend on the particular neuron and vary greatly between neurons in different parts of the brain. Figure 1.4 illustrates a few of the hundreds of varieties of neurons that can be found. Nonetheless, when a presynaptic neuron fires, the depolarization pulse causes the release of chemical transmitters at each of the synaptic contacts that it reaches. In this way one presynaptic cell may simultaneously influence thousands and perhaps tens of thousands of different postsynaptic cells, or in some cases only a single cell. The neurotransmitters that are released at a single synapse cause a slight fluctuation in the membrane potential of the postsynaptic cell in the region immediately surrounding the synapse, and these individual fluctuations spread from each focus of excitation. When a fluctuation arrives at the soma of the postsynaptic cell, its influence combines with all other influences that arrive at the same time from other focuses of excitation and may be of sufficient strength to initiate a pulse in the axon of the cell.
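The combination of influences arriving at the soma at the same time can be sketched as a sum compared against a firing threshold. This is a deliberately simplified illustration: the function names, the use of a plain sum, the 1 ms coincidence window, and the threshold value are all assumptions, not details given in the text.

```python
from typing import Iterable, Tuple

Arrival = Tuple[float, float]  # (arrival time in ms, signed influence)


def summed_influence(arrivals: Iterable[Arrival], t_ms: float,
                     window_ms: float = 1.0) -> float:
    """Add up influences that reach the soma within window_ms of t_ms."""
    return sum(a for (t_arrive, a) in arrivals
               if abs(t_arrive - t_ms) <= window_ms)


def initiates_pulse(arrivals: Iterable[Arrival], t_ms: float,
                    threshold: float = 1.0, window_ms: float = 1.0) -> bool:
    """True if the combined influence at t_ms reaches the assumed threshold."""
    return summed_influence(arrivals, t_ms, window_ms) >= threshold


# Hypothetical fluctuations: positive values excite, negative values inhibit.
arrivals = [(10.0, 0.4), (10.2, 0.5), (10.5, 0.3), (10.1, -0.1), (25.0, 0.6)]

coincident = initiates_pulse(arrivals, t_ms=10.0)  # several inputs arrive together
isolated = initiates_pulse(arrivals, t_ms=25.0)    # a single input arrives alone
```

In this example no single fluctuation reaches the threshold on its own; a pulse is initiated only when several of them arrive together.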

Although some neurons are contacted by a single presynaptic cell, most are contacted by many and often thousands of different presynaptic cells at thousands and perhaps tens of thousands of synapses. The fluctuations in the membrane potential at the soma, therefore, depend on the combined influence of all of the presynaptic cells. Furthermore, at a given synapse and at a particular moment of time, the effect of the neurotransmitters depends on both the quantity and the type of neurotransmitter released. The magnitude of the resultant potential fluctuation in the postsynaptic cell also depends on the conductive properti...

Table of contents

- Cover Page

- Title Page

- Copyright Page

- Dedication

- Contents

- Preface

- Acknowledgments

- 1 Neurons: The Computational Cells of Brains

- 2 Information: Its Movement and Transformation

- 3 Information Storage

- 4 The Control of Associative Storage Systems

- 5 Information Encoding and Modality

- 6 Information Storage and Human Memory

- 7 The Visual System

- 8 Visual Experiences and Mental Imagery

- 9 Accessing Visual Memories and Visual Recognition

- 10 The Auditory System

- 11 Cognition, Understanding, and Language

- 12 The Anatomy and Physiology of the Sensory-Motor System

- 13 The Body in Space

- 14 The Control of Configuration and Simple Movements

- 15 The High-Level Control of Movements

- 16 Sensations, Affects, and Behavior

- 17 The Three Cognitive Systems and Learning

- Postscript

- Author Index

- Subject Index