- 152 pages

- English

- ePUB (mobile friendly)

Deep Learning for Remote Sensing Images with Open Source Software

About this book

In today's world, deep learning source code and a plethora of open access geospatial images are readily available and easily accessible. However, most people lack the educational tools to make use of this resource. Deep Learning for Remote Sensing Images with Open Source Software is the first practical book to introduce deep learning techniques using free, open source tools for processing real-world remote sensing images. The approaches detailed in this book are generic and can be adapted to many different remote sensing image processing applications, including landcover mapping, forestry, urban studies, disaster mapping, and image restoration. Written with practitioners and students in mind, this book links the theory with the practical use of existing tools and data to apply deep learning techniques to remote sensing images and data.

Specific Features of this Book:

- The first book that explains how to apply deep learning techniques to public, freely available data (SPOT-7 and Sentinel-2 images, OpenStreetMap vector data) using open source software (QGIS, Orfeo ToolBox, TensorFlow)

- Presents approaches suited to real-world images and data, targeting large-scale processing and GIS applications

- Introduces state-of-the-art deep learning architecture families that can be applied in remote sensing, mainly for landcover mapping but also for generic approaches (e.g. image restoration)

- Suited for deep learning beginners and readers with some GIS knowledge. No coding knowledge is required to learn practical skills.

- Teaches deep learning techniques through many step-by-step remote sensing data processing exercises.

$$
y = f\left(\sum_{i} a_i x_i + b\right) \tag{1.1}
$$

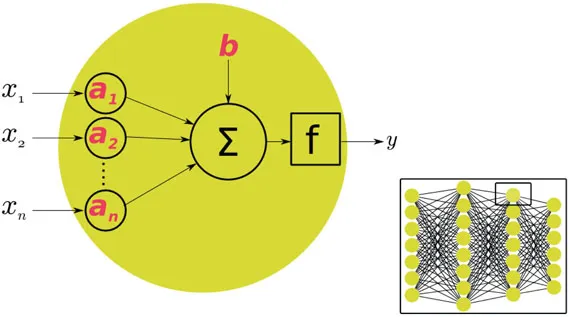

Example of a network of artificial neurons aggregated into layers. In the artificial neuron, $x_i$ are the inputs, $y$ is the neuron output, $a_i$ are the gain values, $b$ is the offset value, and $f$ is the activation function.
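The neuron described in the caption computes a weighted sum of its inputs $x_i$ with gains $a_i$, adds the offset $b$, and passes the result through the activation function $f$. A layer applies several such neurons to the same input, and a network chains layers. The following is a minimal pure-Python sketch of that idea, not the book's code (the book relies on TensorFlow). The function names `neuron`, `layer`, and `network`, and the choice of ReLU and sigmoid as example activation functions, are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical helper names, for illustration only.
Activation = Callable[[float], float]


def neuron(x: Sequence[float], a: Sequence[float], b: float, f: Activation) -> float:
    """One artificial neuron: y = f(sum_i a_i * x_i + b)."""
    if len(x) != len(a):
        raise ValueError("inputs and gains must have the same length")
    z = sum(ai * xi for ai, xi in zip(a, x)) + b  # weighted sum plus offset
    return f(z)


def relu(z: float) -> float:
    """Example activation (assumption): rectified linear unit."""
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Example activation (assumption): logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(x: Sequence[float], gains: Sequence[Sequence[float]],
          offsets: Sequence[float], f: Activation) -> List[float]:
    """A layer: one neuron per (gains, offset) pair, all fed the same input x."""
    return [neuron(x, a, b, f) for a, b in zip(gains, offsets)]


def network(x: Sequence[float],
            layers: Sequence[Tuple[Sequence[Sequence[float]], Sequence[float], Activation]]) -> List[float]:
    """Feed the output of each layer into the next one."""
    out = list(x)
    for gains, offsets, f in layers:
        out = layer(out, gains, offsets, f)
    return out


if __name__ == "__main__":
    x = [0.5, -1.0, 2.0]
    # Two hidden ReLU neurons, then one sigmoid output neuron.
    hidden = ([[1.0, 0.0, 0.5], [0.0, 1.0, 1.0]], [0.0, 0.5], relu)
    output = ([[1.0, -1.0]], [0.0], sigmoid)
    print(network(x, [hidden, output]))
```

In this toy example both hidden neurons output 1.5, so the output neuron receives a weighted sum of 0 and returns sigmoid(0) = 0.5. Real networks learn the gains and offsets from training data instead of fixing them by hand.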


Table of contents

- Cover

- Half Title

- Series Page

- Title Page

- Copyright Page

- Contents

- Preface

- Author

- Part I: Backgrounds

- Part II: Patch-based classification

- Part III: Semantic segmentation

- Part IV: Image restoration

- Bibliography

- Index