Exploring the Limits in Personnel Selection and Classification

- 637 pages

- English



About this book

Beginning in the early 1980s and continuing through the mid-1990s, the U.S. Army Research Institute for the Behavioral and Social Sciences (ARI) sponsored a comprehensive research and development program to evaluate and enhance the Army's personnel selection and classification procedures. This set of interrelated efforts was collectively known as Project A. Project A had a number of basic and applied research objectives pertaining to selection and classification decision making. It focused on the entire selection and classification system for Army enlisted personnel and addressed research questions that can be generalized to other personnel systems. It involved the development and evaluation of a comprehensive array of predictor and criterion measures using samples of tens of thousands of individuals in a broad range of jobs. The research included a longitudinal sample from which data were collected at four points: at organizational entry, following training, after 1-2 years on the job, and after 3-4 years on the job.

This book provides a concise and readable description of the entire Project A research program. The editors share the problems, strategies, experiences, findings, lessons learned, and some of the excitement that resulted from conducting the type of project that comes along once in a lifetime for an industrial/organizational psychologist. This book is of interest to industrial/organizational psychologists, including experienced researchers, consultants, graduate students, and anyone interested in personnel selection and classification research.



V

Selection Validation, Differential Prediction, Validity Generalization, and Classification Efficiency

13

The Prediction of Multiple Components of Entry-Level Performance

THE “BASIC” VALIDATION

Procedures

Sample

Number of soldiers:

| MOS | | CVI | LVI (Listwise Deletion Sample) |
|---|---|---|---|
| 11B | Infantryman | 491 | 235 |
| 13B | Cannon Crewmember | 464 | 553 |
| 19Ea | M60 Armor Crewmember | 394 | 73 |
| 19K | M1 Armor Crewmember | — | 446 |
| 31C | Single Channel Radio Operator | 289 | 172 |
| 63B | Light-Wheel Vehicle Mechanic | 478 | 406 |
| 71L | Administrative Specialist | 427 | 252 |
| 88M | Motor Transport Operator | 507 | 221 |
| 91A | Medical Specialist | 392 | 535 |
| 95B | Military Police | 597 | 270 |
| Total | | 4,039 | 3,163 |

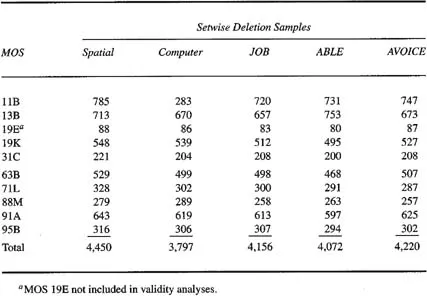

Soldiers in LVI Setwise Deletion Samples for Validation of Spatial, Computer, JOB, ABLE, and AVOICE Experimental Battery Composites by MOS
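The excerpt names two sample definitions, listwise deletion and setwise deletion, without showing how they differ. The sketch below illustrates the distinction under an assumption that is not stated in this excerpt: that a listwise sample keeps only cases complete on every predictor set, while a setwise sample is built separately for each predictor set and keeps every case complete on that set alone. The records, field names, and scores are hypothetical toy values, not Project A data.

```python
# Hypothetical toy records; None marks a missing score.
# Field names and values are illustrative only, not Project A data.
records = [
    {"id": 1, "spatial": 52.0, "computer": 48.0, "able": 50.0},
    {"id": 2, "spatial": 47.0, "computer": None, "able": 55.0},
    {"id": 3, "spatial": None, "computer": 51.0, "able": 49.0},
    {"id": 4, "spatial": 60.0, "computer": 58.0, "able": None},
    {"id": 5, "spatial": 45.0, "computer": 44.0, "able": 46.0},
]

# Assumed grouping of fields into predictor sets (one field per set here).
PREDICTOR_SETS = {
    "spatial": ["spatial"],
    "computer": ["computer"],
    "able": ["able"],
}


def complete_on(record, fields):
    """Return True if the record has a non-missing value for every field."""
    return all(record[f] is not None for f in fields)


def listwise_sample(rows, predictor_sets):
    """Keep only rows that are complete on all fields of all predictor sets."""
    all_fields = [f for fields in predictor_sets.values() for f in fields]
    return [r for r in rows if complete_on(r, all_fields)]


def setwise_samples(rows, predictor_sets):
    """Build one sample per predictor set: rows complete on that set alone."""
    return {
        name: [r for r in rows if complete_on(r, fields)]
        for name, fields in predictor_sets.items()
    }


listwise = listwise_sample(records, PREDICTOR_SETS)
setwise = setwise_samples(records, PREDICTOR_SETS)

listwise_n = len(listwise)
setwise_n = {name: len(rows) for name, rows in setwise.items()}

print("listwise n:", listwise_n)
print("setwise n:", setwise_n)
```

Under this assumed definition, each setwise sample is at least as large as the listwise sample, because a case missing one predictor set is still usable for the others.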

Predictors

Table of contents

- Cover

- Half Title

- Full Title

- Copyright

- Contents

- List of Figures

- List of Tables

- Preface

- Foreword

- About the Editors and Contributors

- I INTRODUCTION AND MAJOR ISSUES

- II SPECIFICATION AND MEASUREMENT OF INDIVIDUAL DIFFERENCES FOR PREDICTING PERFORMANCE

- III SPECIFICATION AND MEASUREMENT OF INDIVIDUAL DIFFERENCES IN JOB PERFORMANCE

- IV DEVELOPING THE DATABASE AND MODELING PREDICTOR AND CRITERION SCORES

- V SELECTION VALIDATION, DIFFERENTIAL PREDICTION, VALIDITY GENERALIZATION, AND CLASSIFICATION EFFICIENCY

- VI APPLICATION OF FINDINGS: THE ORGANIZATIONAL CONTEXT OF IMPLEMENTATION

- VII EPILOGUE

- References

- Author Index

- Subject Index
