Overview of Heuristic Evaluation

When Should You Use Heuristic Evaluation?

Strengths

Weaknesses

What Resources Do You Need to Conduct a Heuristic Evaluation?

Personnel, Participants, and Training

Hardware and Software

Documents and Materials

Procedures and Practical Advice on the Heuristic Evaluation Method

Planning a Heuristic Evaluation Session

Conducting a Heuristic Evaluation

After the Heuristic Evaluations by Individuals

Variations and Extensions of the Heuristic Evaluation Method

The Group Heuristic Evaluation with Minimal Preparation

Crowdsourced Heuristic Evaluation

Participatory Heuristic Evaluation

Cooperative Evaluation

Heuristic Walkthrough

HE-Plus Method

Major Issues in the Use of the Heuristic Evaluation Method

How Does the UX Team Generate Heuristics When the Basic Set Is Not Sufficient?

Do Heuristic Evaluations Find “Real” Problems?

Does Heuristic Evaluation Lead to Better Products?

How Much Does Expertise Matter?

Should Inspections and Walkthroughs Highlight Positive Aspects of a Product’s UI?

Individual Reliability and Group Thoroughness

Data, Analysis, and Reporting

Conclusions

Overview of Heuristic Evaluation

A heuristic evaluation is a type of user interface (UI) or usability inspection where an individual, or a team of individuals, evaluates a specification, prototype, or product against a brief list of succinct usability or user experience (UX) principles or areas of concern (Nielsen, 1993; Nielsen & Molich, 1990). The heuristic evaluation method is one of the most common methods in user-centered design (UCD) for identifying usability problems (Rosenbaum, Rohn, & Humburg, 2000), although in some cases, what people refer to as a heuristic evaluation might be better categorized as an expert review (Chapter 2) because heuristics were mixed with additional principles and personal beliefs and knowledge about usability.

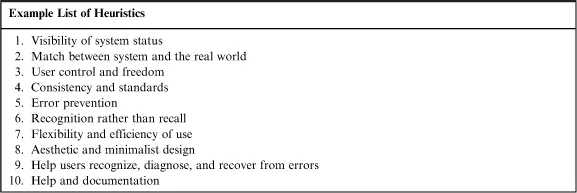

A heuristic is a commonsense rule or a simplified principle. A list of heuristics is meant as an aid or mnemonic device for the evaluators. Table 1.1 is a list of heuristics from Nielsen (1994a) that you might give to your team of evaluators to remind them about potential problem areas.

Table 1.1. A Set of Heuristics from Nielsen (1994a)

There are several general approaches for conducting a heuristic evaluation:

• Object-based. In an object-based heuristic evaluation, evaluators are asked to examine particular UI objects for problems related to the heuristics. These objects can include mobile screens, hardware control panels, web pages, windows, dialog boxes, menus, controls (e.g., radio buttons, push buttons, and text fields), error messages, and keyboard assignments.

• Task-based. In the task-based approach, evaluators are given heuristics and a set of tasks to work through and are asked to report on problems related to heuristics that occur as they perform or simulate the tasks.

• Object–task hybrid. A hybrid approach combines the object-based and task-based approaches. Evaluators first work through a set of tasks looking for issues related to heuristics and then evaluate designated UI objects against the same heuristics. The hybrid approach is similar to the heuristic walkthrough (Sears, 1997), which is described later in this book.

In task-based or hybrid approaches, the choice of tasks for the team of evaluators is critical. Questions to consider when choosing tasks include the following:

• Is the task realistic? Simplistic tasks might not reveal serious problems.

• What is the frequency of the task? The frequency of the task might determine whether something is a problem or not. Consider a complex program that you use once a year (e.g., US tax programs). A program intended to be used once a year might require high initial learning support, much feedback, and repeated success messages, all features intended to support the infrequent user. These same features might be considered problems by a daily user of the same program (e.g., a tax accountant or financial advisor) who is interested in efficiency and does not want constant, irritating feedback messages.

• What are the consequences of the task? Will an error during a task result in a minor or major loss of data? Will someone die if there is task failure? If you are working on medical monitoring systems, the consequences of missed problems could be disastrous.

• Are the data used in the task realistic? We often use simple samples of data for usability evaluations because they are convenient, but you might reveal more problems with “dirty data” (e.g., records with missing, inconsistent, or malformed values).

• Are you using data at the right scale? Some tasks are easy with limited data sets (e.g., 100 or 1000 items) but very hard when tens of thousands or millions of items are involved. It is convenient to use small samples for task-based evaluations, but those small samples of test data may hide significant problems.
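The last two questions, about realistic data and data at the right scale, can be prepared for with a small test-data generator. The following is a minimal sketch, not a prescribed procedure: the function name `make_test_records`, the record fields, and the three "dirty" transformations are all hypothetical and should be replaced with data that reflects your own product and domain.

```python
import random


# Hypothetical ways a record can become "dirty"; adapt these to your domain.
def _blank_email(rec):
    rec["email"] = ""


def _pad_name(rec):
    rec["name"] = "  " + rec["name"].upper() + " "


def _strip_phone_dashes(rec):
    rec["phone"] = rec["phone"].replace("-", "")


DIRTY_TRANSFORMS = [_blank_email, _pad_name, _strip_phone_dashes]


def make_test_records(n, dirty_rate=0.0, seed=0):
    """Build n sample records; roughly dirty_rate of them are made dirty.

    A fixed seed gives every evaluator the same data set.
    """
    rng = random.Random(seed)
    records = []
    for i in range(n):
        rec = {
            "name": f"Customer {i}",
            "email": f"customer{i}@example.com",
            "phone": f"555-{i % 10000:04d}",
            "dirty": False,
        }
        if rng.random() < dirty_rate:
            rng.choice(DIRTY_TRANSFORMS)(rec)  # apply one random defect
            rec["dirty"] = True
        records.append(rec)
    return records


# A convenient small, clean sample versus a larger, messier one.
small_clean = make_test_records(100)
large_dirty = make_test_records(50_000, dirty_rate=0.1)
```

Giving evaluators both data sets for the same task is one way to check whether a design that looks fine with 100 clean items still works with tens of thousands of imperfect ones.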

Multiple evaluators are recommended for heuristic evaluations, because different people who evaluate the same UI often identify quite different problems (Hertzum, Jacobsen, & Molich, 2002; Molich & Dumas, 2008; Nielsen, 1993) and also vary considerably in their ratings of the severity of identical problems (Molich, 2011).

The Evaluator Effect in Usability Evaluation

The common finding that people who evaluate the usability of the same product report different sets of problems is called the “evaluator effect” (Hertzum & Jacobsen, 2001; Jacobsen, Hertzum, & John, 1998a,b). The effect can be seen in both testing and inspection studies. There are many potential causes for this effect, including different backgrounds, different levels of expertise, the quality of the instructions for conducting an evaluation, knowledge of heuristics, knowledge of the tasks and environment, knowledge of the user, and the sheer number of problems that a complex system with many ways to use features and complete tasks (e.g., creation-oriented applications like Photoshop and AutoCAD) can present to users (Akers, Jeffries, Simpson, & Winograd, 2012). From a practical point of view, you can deal with the fact that evaluators will find different problems by:

• Using multiple evaluators with both UX and domain knowledge.

• Training evaluators on the method and materials used (checklists, heuristics, tasks, etc.). Providing examples of violations of heuristics and training on severity scales can improve the quality of inspections and walkthroughs.

• Providing realistic scenarios, background on the users, and their work or play environments.

• Providing a common form for reporting results and training people on how to report problems.

• Providing evaluators with the UX dimensions (e.g., learnability, memorability, efficiency, error prevention, and aesthetics) that are most critical to users.

• Considering how something might be a problem to a novice and a delighter to an expert.
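A common reporting form of the kind recommended above can be as simple as a fixed record that every evaluator fills in the same way. The sketch below is illustrative only: the `ProblemReport` fields, the 1 to 4 severity scale, and the `aggregate` function are assumptions, not a format prescribed by the sources cited here. It also assumes that two reports describe the same problem when they share a location and a heuristic; in practice, deciding which reports are duplicates usually takes human judgment.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProblemReport:
    """One problem as recorded by one evaluator (hypothetical form)."""
    evaluator: str
    location: str      # UI object or task step where the problem was seen
    heuristic: str     # heuristic the evaluator believes is violated
    description: str
    severity: int      # assumed scale: 1 (minor) to 4 (critical)


def aggregate(reports):
    """Merge individual reports into a composite list of problems.

    Assumption: reports with the same location and heuristic describe
    the same underlying problem.
    """
    grouped = defaultdict(list)
    for r in reports:
        grouped[(r.location, r.heuristic)].append(r)

    composite = []
    for (location, heuristic), group in grouped.items():
        severities = [r.severity for r in group]
        composite.append({
            "location": location,
            "heuristic": heuristic,
            "evaluators": sorted({r.evaluator for r in group}),
            "severity_range": (min(severities), max(severities)),
            "descriptions": [r.description for r in group],
        })
    # List problems found by more evaluators first, then by highest severity.
    composite.sort(key=lambda p: (-len(p["evaluators"]), -p["severity_range"][1]))
    return composite


reports = [
    ProblemReport("A", "Login dialog", "Error prevention",
                  "No warning before password reset", 3),
    ProblemReport("B", "Login dialog", "Error prevention",
                  "Reset link is easy to hit by accident", 2),
    ProblemReport("B", "Search results", "Visibility of system status",
                  "No progress indicator during search", 2),
]
composite = aggregate(reports)
```

Keeping the list of evaluators and the range of severity ratings for each merged problem makes the evaluator effect visible: it shows at a glance which problems only one person found and where evaluators disagreed about severity.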

This book discusses the strengths and weaknesses of each approach and provides tips from academic and practical perspectives on how to make inspections and walkthroughs more effective.

During the heuristic evaluation, evaluators can write down problems as they work independently, or they can think aloud while a colleague takes notes about the problems encountered during the evaluation. The results of all the evaluations can then be aggregated into a composite list of usability problems o...