eBook - ePub

Handbook of Fiber Optic Data Communication

A Practical Guide to Optical Networking

- 468 pages

- English

- ePUB (mobile friendly)

About this book

The 4th edition of this popular Handbook continues to provide an easy-to-use guide to the many exciting new developments in the field of optical fiber data communications. With 90% new content, this edition contains all new material describing the transformation of the modern data communications network, both within the data center and over extended distances between data centers, along with best practices for the design of highly virtualized, converged, energy efficient, secure, and flattened network infrastructures.

Key topics include networks for cloud computing, software defined networking, integrated and embedded networking appliances, and low latency networks for financial trading or other time-sensitive applications. Network architectures from the leading vendors are outlined (including Smart Analytic Solutions, QFabric, FabricPath, and Exadata), as well as the latest revisions to industry standards for interoperable networks, including lossless Ethernet, 16G Fibre Channel, RoCE, FCoE, TRILL, IEEE 802.1Qbg, and more.

- Written by experts from IBM, HP, Dell, Cisco, Ciena, and Sun/Oracle

- Case studies and 'How to...' demonstrations on a wide range of topics, including Optical Ethernet, next generation Internet, RDMA and Fibre Channel over Ethernet

- Quick reference tables of all the key optical network parameters for protocols like ESCON, FICON, and SONET/ATM and a glossary of technical terms and acronyms

Handbook of Fiber Optic Data Communication by Casimer DeCusatis is available in PDF and ePUB formats and is catalogued under Technology & Engineering & Data Transmission Systems.

Part I

Technology Building Blocks

Outline

Chapter 1 Transforming the Data Center Network

Chapter 2 Transceivers, Packaging, and Photonic Integration

Chapter 3 Plastic Optical Fibers for Data Communications

Chapter 4 Optical Link Budgets

Case Study Deploying Systems Network Architecture (SNA) in IP-Based Environments

Chapter 5 Optical Wavelength-Division Multiplexing for Data Communication Networks

Case Study A More Reliable, Easier to Manage TS7700 Grid Network

Chapter 6 Passive Optical Networks (PONs)

Chapter 1

Transforming the Data Center Network

Casimer DeCusatis, IBM Corporation, 2455 South Road, Poughkeepsie, NY

In recent years, there have been many fundamental changes in the architecture of modern data centers. New applications have emerged, including cloud computing, big data analytics, real-time stock trading, and more. Workloads have evolved from a predominantly static environment into one that changes over time in response to user demands, often as part of a highly virtualized, multitenant data center. In response to these new requirements, data center networks have also undergone significant change. Conventional network architectures, which use Ethernet access, aggregation, and core tiers with a separate storage area network, are not well suited to modern data center traffic patterns. This chapter reviews the evolution from conventional network architectures into designs better suited to dynamic, distributed workloads. This includes flattening the network, converging Ethernet with storage and other protocols, and virtualizing and scaling the network. Effects of oversubscription, latency, higher data rates, availability, reliability, energy efficiency, and network security will be discussed.

Keywords

network; fabric; cloud; virtualize; Ethernet; SAN

In recent years, there have been many fundamental and profound changes in the architecture of modern data centers, which host the computational power, storage, networking, and applications that form the basis of any modern business [1–7]. New applications have emerged, including cloud computing, big data analytics, real-time stock trading, and more. Workloads have evolved from a predominantly static environment into one which changes over time in response to user demands, often as part of a highly virtualized, multitenant data center. In response to these new requirements, data center hardware and software have also undergone significant changes; perhaps nowhere is this more evident than in the data center network.

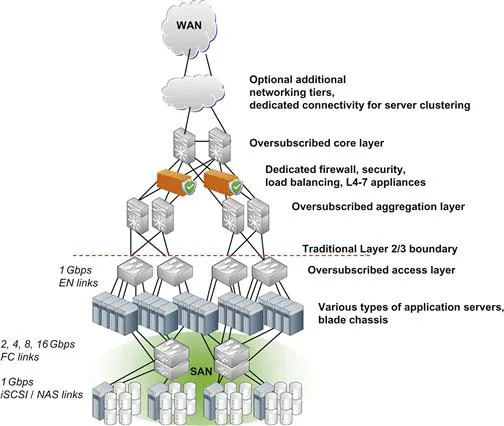

In order to better appreciate these changes, we first consider the traditional data center architecture and compute model, as shown in Figure 1.1. While it is difficult to define a “typical” data center, Figure 1.1 provides an overview illustrating some of the key features deployed in many enterprise-class networks and Fortune 1000 companies today, assuming a large number of rack or blade servers using x86-based processors. Figure 1.1 is not intended to be all-inclusive, since there are many variations on data center designs. For example, in some applications such as high-performance computing or supercomputing, system performance is the overriding design consideration. Mainframes or large enterprise compute environments have historically used more virtualization and based their designs on continuous availability, with very high levels of reliability and serviceability. Telecommunication networks are also changing, and the networks that interconnect multiple data centers are taking on very different properties from traditional approaches such as frame relay. This includes the introduction of service-aware networks, fiber to the home or small office, and passive optical networks. A new class of ultralow-latency applications has emerged with the advent of real-time financial transactions and related areas such as telemedicine. Further, the past few years have seen the rise of warehouse-scale data centers, which serve large cloud computing applications from Google, Facebook, Amazon, and similar companies; these applications may use custom-designed servers and switches. While many of these data centers have not publicly disclosed details of their designs, it is reasonable to assume that once a data center grows large enough to consume as much electrical power as a small city, energy-efficient design of servers and of data center heating/cooling becomes a major consideration.

We will consider these and other applications later in this book; for now, we will concentrate on the generic properties of the data center network shown in Figure 1.1. Although data centers employ a mixture of different protocols, including InfiniBand, Fibre Channel, FICON, and more, a large portion of the network infrastructure is based on some variation of Ethernet.

Figure 1.1 Design of a conventional multitier data center network.

Historically, as described in the early work of Metcalfe [8], Ethernet was first used to interconnect “stations” (dumb terminals) through repeaters and hubs on a shared data bus. All stations listened to a common data link at the same time and made a copy of every frame they heard; frames addressed to a given station were kept by it, while others were discarded. Before transmitting, a station first had to check that no other station was transmitting; once the link was idle, a new transmission could begin. Since frames were broadcast to all stations on the network, the transmitting station simultaneously listened to the network for the same data it was sending. If a frame was transmitted onto an idle link and then heard by the transmitting station, it was assumed to have reached its destination; no further checking was done to ensure that the message actually arrived correctly. If two stations began transmitting at the same time, their frames would collide on the network; each station would then cease transmission, wait for a random time interval (the backoff interval), and then attempt to retransmit the frame.
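The random backoff interval is what lets colliding stations eventually desynchronize. Classic shared-media Ethernet (IEEE 802.3 CSMA/CD) uses truncated binary exponential backoff: after the n-th collision on a frame, a station waits a random whole number of slot times drawn from 0 to 2^k - 1, where k = min(n, 10), and gives up after 16 attempts. The sketch below illustrates that rule under simplifying assumptions: the function names are hypothetical, the 512-bit slot time applies to 10 and 100 Mbit/s operation, and carrier sensing and jam signaling are not modeled.

```python
import random

SLOT_TIME_BITS = 512   # 802.3 slot time at 10 and 100 Mbit/s, in bit times
BACKOFF_LIMIT = 10     # the random range stops doubling after 10 collisions
ATTEMPT_LIMIT = 16     # after 16 collisions the frame is abandoned


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Slot times to wait after the given number of collisions on one frame."""
    if collisions < 1:
        raise ValueError("backoff applies only after a collision")
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame discarded")
    k = min(collisions, BACKOFF_LIMIT)
    # Uniform integer in [0, 2**k - 1]; randint includes both endpoints.
    return rng.randint(0, 2**k - 1)


def slot_time_us(bit_rate_bps: int) -> float:
    """Duration of one slot time in microseconds at the given bit rate."""
    return SLOT_TIME_BITS * 1e6 / bit_rate_bps


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are repeatable
    for n in range(1, 6):
        slots = backoff_slots(n, rng)
        print(f"collision {n}: wait {slots} slots = "
              f"{slots * slot_time_us(10_000_000):.1f} us at 10 Mbit/s")
```

Because the range doubles with each collision, a busy segment spreads retries over a growing window, trading extra delay for fewer repeated collisions.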

Over time, Ethernet evolved to support switches, routers, and multiple link segments, with higher-level protocols such as TCP/IP to aid in the recovery of dropped or lost packets. The Spanning Tree Protocol (STP) was developed to prevent network loops by blocking some traffic paths, at the expense of network bandwidth. However, Ethernet remained inherently a “best effort” network, in which a certain amount of lost or out-of-order data packets is expected by design. Retransmission of dropped or misordered packets was a fundamental assumption in the Ethernet protocol, which lacked the guaranteed delivery mechanisms and credit-based flow control designed into the Fibre Channel and InfiniBand protocols.
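The difference between best-effort delivery and credit-based flow control can be seen in a toy simulation. In the credit-based case (loosely modeled on Fibre Channel buffer-to-buffer credits, where the receiver returns a credit as each buffer frees up), the sender may only transmit when it holds a credit, so overload turns into backpressure at the sender instead of dropped frames. This is a minimal sketch under stated assumptions: `simulate` and its parameters are hypothetical, time advances in discrete ticks, credits return with zero link delay, and no retransmission is modeled.

```python
from typing import Tuple


def simulate(ticks: int, offered: int, drain: int, buffer_frames: int,
             credit_based: bool) -> Tuple[int, int, int]:
    """Return (delivered, dropped, still_pending) after `ticks` steps."""
    queue = 0                  # frames sitting in the receiver buffer
    credits = buffer_frames    # one credit per free receive buffer
    backlog = 0                # frames the sender is holding back
    delivered = dropped = 0
    for _ in range(ticks):
        backlog += offered
        if credit_based:
            send = min(backlog, credits)   # never exceed advertised buffers
            credits -= send
        else:
            send = backlog                 # best effort: transmit everything
        backlog -= send
        accepted = min(send, buffer_frames - queue)
        dropped += send - accepted         # overflow is silently discarded
        queue += accepted
        served = min(queue, drain)         # receiver drains its buffer
        queue -= served
        delivered += served
        if credit_based:
            credits += served              # freed buffers return as credits
    return delivered, dropped, backlog + queue


print(simulate(10, 3, 2, 4, credit_based=False))  # (20, 8, 2)
print(simulate(10, 3, 2, 4, credit_based=True))   # (20, 0, 10)
```

With the sender offering 3 frames per tick to a receiver that drains 2, both modes deliver the same 20 frames; the best-effort link drops 8 that a higher layer such as TCP would have to retransmit, while the credit-based link drops none and instead holds the excess at the sender.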

Conventional Ethernet data center networks [9] are characterized by access, aggregation, services, and core layers, which can have three, four, or more tiers of switching. Data traffic flows from the bottom tier up through successive tiers as required, and then back down to the bottom tier, providing connectivity between servers. Since the basic switch technology at each tier is the same, the TCP/IP stack is usually processed again at each successive tier of the network. To reduce cost and promote scaling, oversubscription is typically used at all tiers of the network. Layer 2 and Layer 3 functions are separated within the access layer of the network. Services dedicated to each application (firewalls, load balancers, etc.) are placed in vertical silos dedicated to a group of application servers. Finally, network management is centered in the switch operating system; over time, this has come to include a wide range of complex and often vendor-proprietary features and functions. This approach was very successful for campus Local Area Networks (LANs), which led to its adoption in most data centers, even though it was never intended to meet the traffic patterns or performance requirements of a modern data center environment.
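Oversubscription at a switch is usually expressed as the ratio of its server-facing (downlink) bandwidth to its uplink bandwidth. When traffic must climb through several oversubscribed tiers, a common worst-case simplification is to multiply the per-tier ratios. The sketch below works through that arithmetic; the port counts and link speeds are hypothetical values chosen only for illustration, and the multiplication assumes all traffic crosses every tier.

```python
from fractions import Fraction
from math import prod


def oversubscription(down_ports: int, down_gbps: int,
                     up_ports: int, up_gbps: int) -> Fraction:
    """Server-facing bandwidth divided by uplink bandwidth for one switch."""
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


def end_to_end(ratios) -> Fraction:
    """Worst case when traffic crosses every tier: per-tier ratios multiply."""
    return prod(ratios, start=Fraction(1))


# Hypothetical port counts, chosen only for illustration.
access = oversubscription(48, 10, 4, 40)       # 480 G down / 160 G up
aggregation = oversubscription(32, 40, 8, 40)  # 1280 G down / 320 G up
total = end_to_end([access, aggregation])
per_server = Fraction(10) / total              # Gbit/s left per 10G server

print(f"access {access}:1, aggregation {aggregation}:1, end to end {total}:1")
print(f"worst-case share per 10G server: {float(per_server):.2f} Gbit/s")
```

With these assumed numbers, a 3:1 access tier feeding a 4:1 aggregation tier leaves each 10 Gbit/s server roughly 0.83 Gbit/s when every server transmits across the core at once, which illustrates why oversubscription sits poorly with loss-intolerant storage traffic.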

There are many problems with applying conventional campus network designs to modern data centers. While some enterprise data centers have used mainframe-class computers, and thus taken advantage of server virtualization, reliability, and scalability, many more use x86-based servers, which have supported these features only recently. Conventional data centers have consisted of lightly utilized servers running a bare metal operating system or a hypervisor with a small number of virtual machines (VMs). The servers may run a mix of different operating systems, including Windows, Linux, and UNIX. The network consists of many tiers, each of which duplicates many of the IP/Ethernet packet analysis and forwarding functions. This adds cumulative end-to-end latency (each network tier can contribute anywhere from 2 to 25 μs) and requires significant amounts of processing and memory. Oversubscription, used in an effort to reduce latency and promote cost-effective scaling, can lead to lost data and is not suitable for storage traffic, which cannot tolerate missing or out-of-order data frames. Thus, separate networks are provided for Ethernet and Fibre Channel (and, to a lesser degree, for specialized applications such as server clustering or other protocols such as InfiniBand). Each of these networks may require its own dedicated management tools, in addition to server, storage, and appliance management. Servers typically attach to the data center network using lower-bandwidth links, such as 1 Gbit/s Ethernet and either 2, 4, 8, or 16 Gbit/s Fibre Channel storage area n...
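The per-tier latency figure quoted above compounds with the number of tiers a packet crosses. Because traffic between servers in different branches climbs to the top tier and comes back down, a path through a k-tier tree crosses 2k - 1 switches. The sketch below turns the quoted 2 to 25 μs range into an end-to-end estimate. It assumes, as a simplification, that every switch traversal contributes the full per-tier figure, and it ignores fiber propagation and serialization delay; the function names are hypothetical.

```python
PER_TIER_US = (2, 25)  # per-tier latency range quoted in the text


def switch_hops(tiers: int) -> int:
    """Switches crossed going up `tiers` tiers and back down (top tier once)."""
    if tiers < 1:
        raise ValueError("a path crosses at least one switch tier")
    return 2 * tiers - 1


def latency_range_us(tiers: int) -> tuple:
    """(best, worst) switching latency in microseconds for the path."""
    hops = switch_hops(tiers)
    low, high = PER_TIER_US
    return hops * low, hops * high


for tiers in (1, 2, 3):
    low, high = latency_range_us(tiers)
    print(f"{tiers}-tier path: {switch_hops(tiers)} switches, {low}-{high} us")
```

Under these assumptions a three-tier path crosses five switches and accumulates 10 to 125 μs of switching latency, while flattening to two tiers cuts the path to three switches (6 to 75 μs).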

Table of contents

- Cover image

- Title page

- Table of Contents

- Copyright

- Preface to the Fourth Edition

- A Historical View of Fiber Data Communications

- Part I: Technology Building Blocks

- Part II: Protocols and Industry Standards

- Part III: Network Architectures and Applications

- Appendix A. Measurement Conversion Tables

- Appendix B. Physical Constants

- Appendix C. The 7-Layer OSI Model

- Appendix D. Network Standards and Data Rates

- Appendix E. Fiber Optic Fundamentals and Laser Safety

- Index