Handbook of Multisensor Data Fusion

Theory and Practice, Second Edition

- 870 pages

- English

About this book

In the years since the bestselling first edition, fusion research and applications have adapted to service-oriented architectures and pushed the boundaries of situational modeling in human behavior, expanding into fields such as chemical and biological sensing, crisis management, and intelligent buildings.

Handbook of Multisensor Data Fusion: Theory and Practice, Second Edition represents the most current concepts and theory as information fusion expands into the realm of network-centric architectures. It reflects new developments in distributed and detection fusion, situation and impact awareness in complex applications, and human cognitive concepts. With contributions from the world's leading fusion experts, this second edition expands to 31 chapters covering the fundamental theory and cutting-edge developments that are driving this field.

New to the Second Edition:

· Applications in electromagnetic systems and chemical and biological sensors

· Army command and combat identification techniques

· Techniques for automated reasoning

· Advances in Kalman filtering

· Fusion in a network-centric environment

· Service-oriented architecture concepts

· Intelligent agents for improved decision making

· Commercial off-the-shelf (COTS) software tools

From basic information to state-of-the-art theories, this second edition continues to be a unique, comprehensive, and up-to-date resource for data fusion systems designers.

1 Multisensor Data Fusion

1.1 Introduction

1.2 Multisensor Advantages

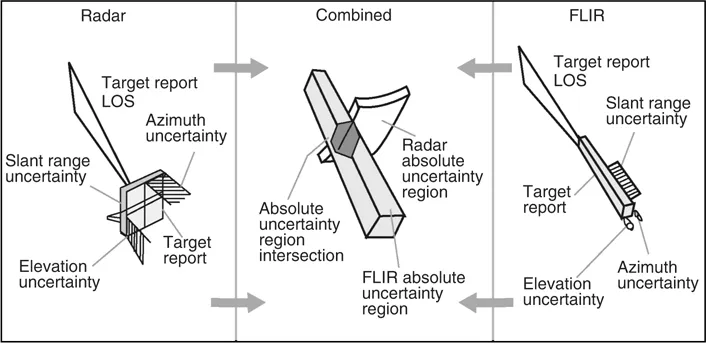

A moving object observed by both a pulsed radar and an infrared imaging sensor.

1.3 Military Applications

Table of contents

- Cover

- Half Title

- Title Page

- Copyright Page

- Dedication

- Contents

- Preface

- Acknowledgment

- Editors

- Contributors

- 1 Multisensor Data Fusion

- 2 Data Fusion Perspectives and Its Role in Information Processing

- 3 Revisions to the JDL Data Fusion Model

- 4 Introduction to the Algorithmics of Data Association in Multiple-Target Tracking

- 5 Principles and Practice of Image and Spatial Data Fusion

- 6 Data Registration

- 7 Data Fusion Automation: A Top-Down Perspective

- 8 Overview of Distributed Decision Fusion

- 9 Introduction to Particle Filtering: The Next Stage in Tracking

- 10 Target Tracking Using Probabilistic Data Association-Based Techniques with Applications to Sonar, Radar, and EO Sensors

- 11 Introduction to the Combinatorics of Optimal and Approximate Data Association

- 12 Bayesian Approach to Multiple-Target Tracking

- 13 Data Association Using Multiple-Frame Assignments

- 14 General Decentralized Data Fusion with Covariance Intersection

- 15 Data Fusion in Nonlinear Systems

- 16 Random Set Theory for Multisource-Multitarget Information Fusion

- 17 Distributed Fusion Architectures, Algorithms, and Performance within a Network-Centric Architecture

- 18 Foundations of Situation and Threat Assessment

- 19 Introduction to Level 5 Fusion: The Role of the User

- 20 Perspectives on the Human Side of Data Fusion: Prospects for Improved Effectiveness Using Advanced Human–Computer Interfaces

- 21 Requirements Derivation for Data Fusion Systems

- 22 Systems Engineering Approach for Implementing Data Fusion Systems

- 23 Studies and Analyses within Project Correlation: An In-Depth Assessment of Correlation Problems and Solution Techniques

- 24 Data Management Support to Tactical Data Fusion

- 25 Assessing the Performance of Multisensor Fusion Processes

- 26 Survey of COTS Software for Multisensor Data Fusion

- 27 Survey of Multisensor Data Fusion Systems

- 28 Data Fusion for Developing Predictive Diagnostics for Electromechanical Systems

- 29 Adapting Data Fusion to Chemical and Biological Sensors

- 30 Fusion of Ground and Satellite Data via Army Battle Command System

- 31 Developing Information Fusion Methods for Combat Identification

- Index
