eBook - ePub

Doing Digital Humanities

Practice, Training, Research

- 408 pages

- English

- ePUB (mobile friendly)

- Available on iOS & Android

About this book

Digital Humanities is rapidly evolving as a significant approach to/method of teaching, learning and research across the humanities. This is a first-stop book for people interested in getting to grips with digital humanities whether as a student or a professor. The book offers a practical guide to the area as well as offering reflection on the main objectives and processes, including:

- Accessible introductions of the basics of Digital Humanities through to more complex ideas

- A wide range of topics from feminist Digital Humanities, digital journal publishing, gaming, text encoding, project management and pedagogy

- Contextualised case studies

- Resources for starting Digital Humanities such as links, training materials and exercises

Doing Digital Humanities looks at the practicalities of how digital research and creation can enhance both learning and research and offers an approachable way into this complex, yet essential topic.

Foundations

Chapter 1

Thinking-through the history of computer-assisted text analysis

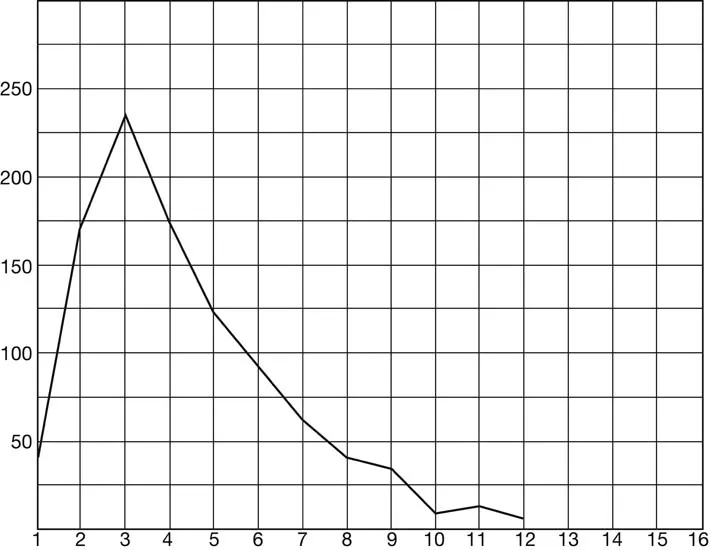

In 1887 the polymath T. C. Mendenhall published an article in Science titled “The Characteristic Curves of Composition,” which is one of the earliest examples of quantitative stylistics but also presents one of the first text visualizations. Mendenhall thought that different authors would have distinctive curves of word length frequencies that could help with authorship attribution, much like a spectroscope could be used to identify elements. In Figure 1.1 you see an example of the characteristic curve of Oliver Twist. Mendenhall counted the length in characters of each of the first 1,000 words and then graphed the number of words of each length. Thus one can see that there are just under fifty words of one letter length in the first one thousand words.
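The counting procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not the notebook referred to in this chapter: the function name `characteristic_curve`, the sample sentence, and the regular expression used to decide what counts as a word are all assumptions made for the example.

```python
import re
from collections import Counter


def characteristic_curve(text, sample_size=1000):
    """Count how many of the first `sample_size` words have each length."""
    # Assumption: a word is a run of ASCII letters, optionally with
    # internal straight apostrophes (as in "don't").
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)*", text)[:sample_size]
    # Assumption: apostrophes are not counted as letters.
    lengths = Counter(len(w.replace("'", "")) for w in words)
    # Sort by word length so the result reads like the x-axis of the curve.
    return dict(sorted(lengths.items()))


# Invented sample sentence, standing in for the opening of a novel.
sample = "The old clock in the hall struck nine as we arrived"
curve = characteristic_curve(sample)

# A crude text rendering of the curve: one '#' per word of that length.
for length, count in curve.items():
    print(f"{length:2d} {'#' * count}")
```

With a full text loaded from a file, the same function would return the counts for its first 1,000 words, which could then be plotted as number of words against number of letters.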

Mendenhall’s paper led to a commission to use his technique to show that Bacon was really the author of Shakespeare’s plays (Mendenhall 1901). A wealthy Bostonian, Augustus Hemenway, paid for the tedious work of two women who counted word lengths only to show that no, Bacon’s prose didn’t have the curve characteristic of Shakespeare’s drama. As Joanne Wagner shows in “Characteristic Curves and Counting Machines: Assessing Style at the Turn of the Century” (1990), mechanistic research processes were as attractive then as they are now. At the time, rhetoric and stylistics were trying to establish themselves as scientific disciplines.

It is not surprising that others have been inspired by or experimented with Mendenhall’s quantitative approach using computers. Anthony Kenny starts his The Computation of Style (1982) not with Father Busa, who is traditionally considered the founding pioneer of humanities computing, but by discussing how Mendenhall and pre-computing contemporaries proposed and tried different stylistic tests, developing a field that then benefited from the availability of electronic computers after WWII. With the computer it became possible not only to do the statistics faster, but also to gather the statistics automatically from electronic texts. With the dramatic increase of available e-texts, quantitative studies have exploded, and some have even returned to Mendenhall. As recently as 2009, Marlowe fan Daryl Pinksen in a blog essay replicated Mendenhall’s technique using a computer to show that while Bacon may not look like Shakespeare, Marlowe does.

Figure 1.1 First 1,000 words in Oliver Twist. The vertical (y) axis indicates the number of words and the horizontal (x) axis indicates the number of letters in each word. Source: Mendenhall, 1887.

And that is what this chapter is about – the replication of text analysis techniques as a way of thinking-through the history and possibilities for computer-assisted text analysis. This chapter proposes replication as one way of doing the history of computing that thinks through computing rather than only about computing. We will illustrate our proposal by discussing the replication of two studies, that of Mendenhall and another early analytical method by John B. Smith on Joyce. These two experiments are in turn replicated as interactive IPython notebooks that you can use to try replication (we will explain IPython notebooks later in this chapter; see the appendix for links to the notebooks).

Historical Replication

One way to understand the history of analytical methods in the digital humanities is to try to replicate them. Historical replication is not the same as scientific reproducibility. Reproducibility is about guaranteeing results by testing them in order to advance science. Historical replication is a way of understanding outcomes, practices, and context of historical works by deliberately following through the activities of research. As such it is a “re-ply” or folding back to engage practices of the past as relevant today and not simply of historical note. It is one of many ways to conjure up the context of historical moments to aid understanding, a fundamental humanistic impulse.

To replicate, or fold back, is more than just an adjustment of direction or a turning. It is to enter into a dialogical relationship with the work of the past. It is to reply to the past by recreating the original study or practice, an impossible act, given that what is past is past. It doesn’t do this to pass judgment so much as to treat the past as interlocutor. Obviously the past can’t speak, which is why we replicate it or reanimate the practices, taking them seriously as we do. What we get are monsters, like Frankenstein’s monster, stitched together from what is at hand.

Replication is important to method, because the way method brings truth to bear on new work is through the replication of a process to new matters. The process is known through re-application, or it might be more accurate to say it is negotiated through replication. In the scientific use of established methods, there is a knowing that comes from reusing methods that have community engagement. Replication, as we imagine it, does not apply established methods to new matters, but seeks to recover, understand, and reflect on past methods by trying them. The analytical methods of Mendenhall and other historical figures are no longer used in the digital humanities, which is why this is also a way of practicing media archaeology, though it might be more accurate to describe this as method archaeology (Zielinski 2008).

We propose replication as an interpretative practice that can serve heuristic and epistemological purposes that extend our horizons of understanding. Heuristic, because replication is a way of experimenting with old methods made new in a way that can lead to unexpected insights into the everyday practices of computing, both as they were practiced then, and as they could be practiced today. It is as we may think. Heuristic also because such replications, if documented properly, are a way of sharing research tactics so that they can become a subject of further discussion, experimentation, and recombination; a dialog with the future.

Replication serves epistemological purposes because it allows us to better imagine the mix of concepts and methods that constituted knowing at the time rather than impose our paradigms of knowing. We view this as an experimental extension to Ian Hacking’s historical ontology (2002), which builds on Foucault’s archaeology of ideas. The idea is to understand the concepts, practices, and things of a time as they were understood then, as a way of expanding our understanding. Replication thus can contribute to the genealogy of things such as methods, games, and software where their use (application) is what is important. It is thus in a hermeneutical tradition of the humanities where we treat past ideas and practices as if capable of being understood as vital today rather than as merely historical.

What makes replication particularly relevant is that we can consider it a research practice of the digital humanities. In the spirit of interpreting things by following hints for interpretation from the phenomenon itself, we need to be sensitive to the discussions of method in computing tools for humanists, or at least in the documentation surrounding these tools. These discussions of method hint at replication as they describe methods one could use. They are rarely complete or unambiguous, as we will see, but they do describe implementation as a potential activity of digital humanists. To go further, they describe digital practices as the activity of the computing humanist.

Replicating Mendenhall

What does this activity of replication practice? The next two sections of this chapter will walk through what was learned from two replications. Let’s first return to Mendenhall’s “Characteristic Curve.” Our first observation is not about the method itself so much as the agenda of the method. Mendenhall’s very project strikes us today as an unusual way of knowing about texts through quantification. Why this fascination with authorship and style? And it isn’t just Mendenhall. Up until the 1980s, stylistics was what many textual scholars did with computers to texts. One reason for the interest in authorship is that it was an important task in the humanities, going back to the likes of Valla and the attempts by Italian Renaissance humanists to recover a classical textual heritage. If concern with authorship now seems the purview of forensics, it is because of the work of humanists stabilizing the archive. Now we use computers and quantification not to continue the traditional work of humanists, but to extend it and ask new questions.

A second observation has to do with the technology of the day. Today we take interactive visualizations for granted, which makes iterative interpretative practices easier. Before the personal workstation (be it a terminal or computer) the humanist used computers in a batch mode, which privileged different types of practices. Batch work calls for a formalization of the entire (and expensive) process imagined – a practice very different from the throw-the-text-at-a-data-wall-and-see-what-sticks approach possible with powerful modern interactive tools.

It shouldn’t therefore surprise us that stylistics was also presented by Mendenhall and successors as a way to bring the scientific reliability of mechanistic processes to the study of rhetoric. Mendenhall compared his visualizations to the curves produced by the spectrographic analysis of materials. It wasn’t just scientific method that was brought to stylistics; Mendenhall also brought the rhetoric of visualization as evidence.

That said, one thing we did not do in our efforts to replicate was to actually count words by hand. Instead we treated Mendenhall as being at the beginning of a tradition of quantitative stylistics that saw fulfillment in automation. Replicating Mendenhall on computer can seem like a way of appropriating his work to computing, and that is also one of the points we want to make about the history of computing – it stretches back beyond the development of electronic computers. If you want to understand the computing humanities you need to understand the importance of stylistics, especially in the early decades of humanities computing. If you want to understand stylistics you need to understand the methods that were developed before the advent of the computer and then how the computer automated these mechanistic methods. The same is true of the other major use of computers in the humanities in the decades after WWII: concording. These liminal practices connect computing to the humanities.

If we consider Mendenhall as being a founder of quantitative stylistics, it changes our founding story for humanities computing. The cosmology story of humanities computing usually starts with Father Busa’s mid-twentieth-century efforts to produce a computerized concordance of the works of Thomas Aquinas, but Mendenhall and others predate Busa by half a century.

Mendenhall’s version of “the style is the man,” however, was more severe. Rather than style being the result of the choices of a human agent, choices that were made repeatedly and thus constituted personality, personality was “inescapably” revealed in style. Style became the tell-tale signifier of character, and since style could not be changed, the implication was that character could not either. (Wagner 1990, 40–41)

A third point to be made about Mendenhall is one Joanne Wagner points out: that one of the assumptions behind stylistics as practiced by Mendenhall is that style is character in the strong sense that it is “the man,” and further, that an author typically can’t alter their fundamental characteristics consciously. There are all sorts of problems with the assumptions about the human character of stylistics, starting with the problem of the stability and unconscious nature of style when it can be measured. In fact we get a different curve when we plot all of Oliver Twist as opposed to just the first 1,000 words as Mendenhall did. Even more problematic is drawing inferences from stylistic differences. Stylistics, and for that matter any type of computer-assisted analysis, produces measures that still need to be interpreted. What does it mean if an author has a different curve, or uses two-letter words more often? It would probably be more accurate to think of stylistics as being about discourse, not people; or as Sedelow put it in 1969, “characterizing the language usage of any writer or speaker” (1969, 1).
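One way to see how a curve can shift with the size of the sample is to scale the counts to words per thousand, so that an opening sample and a whole text can be set side by side. The sketch below is only an illustration under stated assumptions: `length_profile` and `profile_gap` are hypothetical helpers, the toy word list stands in for a real novel, and the sum of absolute differences is just one simple way to compare two curves, not a measure taken from Mendenhall or from the notebooks.

```python
from collections import Counter


def length_profile(words):
    """Return word-length frequencies scaled to words per thousand."""
    counts = Counter(len(w) for w in words)
    total = sum(counts.values())
    return {n: 1000 * c / total for n, c in sorted(counts.items())}


def profile_gap(a, b):
    """Sum of absolute differences between two profiles.

    Hypothetical comparison measure, chosen only for simplicity.
    """
    return sum(abs(a.get(n, 0.0) - b.get(n, 0.0)) for n in set(a) | set(b))


# Toy "text": the opening uses only short words, the rest adds longer ones.
words = "a bb a bb ccc ccc ccc dddd".split()

opening = length_profile(words[:4])  # stands in for the first 1,000 words
whole = length_profile(words)        # stands in for the complete novel
gap = profile_gap(opening, whole)

print(opening)
print(whole)
print(gap)
```

A gap of zero would mean the opening sample has exactly the same profile as the whole text. How large a gap has to be before it says anything about an author remains a matter of interpretation.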

The final point, and one that anyone who has done programming in text analysis knows, is that it is remarkably hard to formally describe a word. In our notebook we used the Natural Language Toolkit (NLTK) for Python and its method for recognizing words in a text (tokenization). As with so many such methods, the decisions as to what the boundaries of a word are, what to do with hyphens and apostrophes, and what to do with abbreviations are hidden. Tokenizing into words is, nonetheless, a fundamental step in almost all text analysis processes. It is a step that is language-specific. In English, text analysis benefits from the correlation between words as concepts and the orthographic words or strings that we can use computers to separate. Mendenhall doesn’t say if they had to think about what a word really is, but writing analytical code for historical replication gives one pause when such an apparently simple thing gets slippery.
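A small sketch can make the slipperiness concrete. It does not use NLTK. Instead it applies two deliberately simple, hand-written rules, both of them assumptions made for this example, to an invented sentence containing an abbreviation, a possessive, hyphenated compounds, and a contraction.

```python
import re

# Invented sentence with an abbreviation, a possessive, two hyphenated
# compounds, and a contraction.
text = "Mr. Bumble's well-known parish-boy didn't wait."

# Rule A (assumption): a word is whatever sits between spaces, with
# punctuation stripped from its edges only.
rule_a = [t.strip(".,;:!?\"'") for t in text.split()]

# Rule B (assumption): a word is any unbroken run of letters, so hyphens
# and apostrophes act as word boundaries.
rule_b = re.findall(r"[A-Za-z]+", text)

print(rule_a)
print(rule_b)
print(len(rule_a), len(rule_b))
```

The two rules disagree both on how many words the sentence contains and on how long they are, so a word-length curve built on one rule will not match a curve built on the other.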

Smith and Imagery

The second study we replicated is from John B. Smith’s “Image and Imagery in Joyce’s Portrait” (1973). Smith’s article is a gem of a paper that uses text analysis to test an aesthetic theory drawn from the novel back on the novel itself. Smith takes a theory about what makes a work of art (like a novel) from A Portrait of the Artist as a Young Man and then formalizes it so it can be used to interpret the Portrait with a computer. Smith’s paper, unlike Mendenhall’s paper, is not a work of quantitative stylistics, but a hermeneutical use of computing. Smith draws his theory of interpretation from that being interpreted and applies it back. Smith was a pioneer in developing and theorizing interactive analytical tools meant for interpretative use; in the 1970s he developed the RATS utilities (1972), in 1978 he published a theory of “Computer Criticism,” and in the 1980s he developed ARRAS, an influential interactive tool f...

Table of contents

- Cover

- Title

- Copyright

- Contents

- List of figures

- List of tables

- Notes on contributors

- Preface: communities of practice, the methodological commons, and digital self-determination in the humanities

- Acknowledgements

- Introduction

- PART 1 Foundations

- PART 2 Core concepts and skills

- PART 3 Creation, remediation and curation

- PART 4 Administration, dissemination and teaching

- Index

Doing Digital Humanities by Constance Crompton, Richard J Lane, and Ray Siemens is available in PDF and/or ePUB format, in the category Literature & Literary Criticism.