- 276 pages

- English

- ePUB (mobile friendly)

About this book

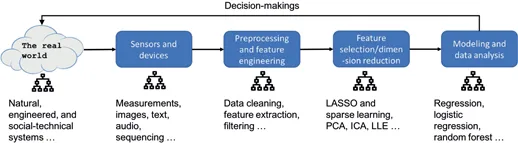

Data Analytics: A Small Data Approach is suitable for an introductory data analytics course and helps students understand some of the main statistical learning models. It uses many small datasets to guide students through pencil-and-paper solutions of the models, which they can then compare with results obtained from established R packages. Because data science practice is a process that should be told as a story, the book also includes extensive course material on exploratory data analysis, residual analysis, and flowcharts for developing and validating models and data pipelines.

The main models covered in this book include linear regression, logistic regression, tree models and random forests, ensemble learning, sparse learning, principal component analysis, kernel methods including the support vector machine and kernel regression, and deep learning. Each chapter introduces two or three techniques. For each technique, the book first highlights the intuition and rationale, then shows how mathematics is used to articulate the intuition and formulate the learning problem. R is used to implement the techniques on both simulated and real-world datasets. Python code is also available at the book's website: http://dataanalyticsbook.info.

Chapter 1: Introduction

Who will benefit from this book?

Overview of a data analytics pipeline

Topics in a nutshell

Data models (i.e., regression-based techniques)

- Chapter 2: Linear regression, least squares estimation, hypothesis testing, R-squared, First Derivative Test, connection with experimental design, data-generating mechanism, history of adventures in understanding errors, exploratory data analysis (EDA)

- Chapter 3: Logistic regression, generalized least squares estimation, iteratively reweighted least squares (IRLS) algorithm, ranking (formulated as a regression problem)

- Chapter 4: Bootstrap, data resampling, nonparametric hypothesis testing, nonparametric confidence interval estimation

- Chapter 5: Overfitting and underfitting, limitations of R-squared, training dataset and testing dataset, random sampling, K-fold cross-validation, the confusion matrix, false positives and false negatives, the Receiver Operating Characteristic (ROC) curve, the law of errors, how data scientists work with clients

- Chapter 6: Residual analysis, normal Q-Q plot, Cook’s distance, leverage, multicollinearity, heterogeneity, clustering, Gaussian mixture model (GMM), the Expectation-Maximization (EM) algorithm, Jensen’s inequality

- Chapter 7: Support Vector Machine (SVM), generalizing from data versus memorizing data, maximum margin, support vectors, model complexity and regularization, primal-dual formulation, quadratic programming, KKT conditions, kernel trick, kernel machines, SVM as a neural network model

- Chapter 8: LASSO, sparse learning, L1-norm and L2-norm regularization, Ridge regression, feature selection, shooting algorithm, Principal Component Analysis (PCA), eigenvalue decomposition, scree plot

- Chapter 9: Kernel regression as a generalization of the linear regression model, local smoother regression model, k-nearest neighbor (KNN) regression model, conditional variance regression model, heteroscedasticity, weighted least squares estimation, model extension and stacking

- Chapter 10: Deep learning, neural network, activation function, model primitives, convolution, max pooling, convolutional neural network (CNN)

Algorithmic models (i.e., tree-based techniques)

- Chapter 2: Decision tree, entropy, information gain (IG), node splitting, pre- and post-pruning, empirical error, generalization error, pessimistic error by binomial approximation, greedy recursive splitting

- Chapter 3: System monitoring reformulated as classification problem, real-time contrasts method (RTC), design of monitoring statistics, sliding window, anomaly detection, false alarm

- Chapter 4: Random forest, Gini index, weak classifiers, the probabilistic mechanism by which random forests create a strong classifier out of many weak classifiers, importance score, partial dependence plot

- Chapter 5: Out-of-bag (OOB) error in random forest

- Chapter 6: Residual analysis, clustering by random forests

- Chapter 7: Ensemble learning, AdaBoost, analysis of ensemble learning from statistical, computational, and representational perspectives

- Chapter 10: Automation of pipelines, integration of tree models, feature selection, and regression models in 'inTrees', random forest as a rule generator, rule extraction, pruning, selection, and summarization, confidence and support of rules, variable interactions, rule-based prediction

Table of contents

- Cover

- Half Title

- Series Page

- Title Page

- Copyright Page

- Dedication

- Contents

- Preface

- Acknowledgments

- Chapter 1: Introduction

- Chapter 2: Abstraction: Regression & Tree Models

- Chapter 3: Recognition: Logistic Regression & Ranking

- Chapter 4: Resonance: Bootstrap & Random Forests

- Chapter 5: Learning (I): Cross-validation & OOB

- Chapter 6: Diagnosis: Residuals & Heterogeneity

- Chapter 7: Learning (II): SVM & Ensemble Learning

- Chapter 8: Scalability: LASSO & PCA

- Chapter 9: Pragmatism: Experience & Experimental

- Chapter 10: Synthesis: Architecture & Pipeline

- Conclusion

- Appendix: A Brief Review of Background Knowledge

- Index