Engineering MLOps

Rapidly build, test, and manage production-ready machine learning life cycles at scale

- 370 pages

- English

- ePUB (mobile friendly)

About this book

Get up and running with machine learning life cycle management and implement MLOps in your organization

Key Features

- Become well versed in MLOps techniques for monitoring the quality of machine learning models in production

- Explore a monitoring framework for ML models in production and learn about end-to-end traceability for deployed models

- Perform CI/CD to automate new implementations in ML pipelines

Book Description

Engineering MLOps presents comprehensive insights into MLOps, coupled with real-world examples in Azure, to help you write programs, train robust and scalable ML models, and build ML pipelines to train and deploy models securely in production.

The book begins by familiarizing you with the MLOps workflow so you can start writing programs to train ML models. You'll then move on to explore options for serializing and packaging ML models post-training so that you can deploy them to facilitate machine learning inference, model interoperability, and end-to-end model traceability. You'll learn how to build ML pipelines, continuous integration and continuous delivery (CI/CD) pipelines, and monitoring pipelines to systematically build, deploy, monitor, and govern ML solutions for businesses and industries. Finally, you'll apply the knowledge you've gained to build real-world projects.

By the end of this ML book, you'll have a 360-degree view of MLOps and be ready to implement MLOps in your organization.

What you will learn

- Formulate data governance strategies and pipelines for ML training and deployment

- Get to grips with implementing ML pipelines, CI/CD pipelines, and ML monitoring pipelines

- Design a robust and scalable microservice and API for test and production environments

- Tailor custom CD processes to your use cases and organization

- Monitor ML models, including monitoring data drift, model drift, and application performance

- Build and maintain automated ML systems

Who this book is for

This MLOps book is for data scientists, software engineers, DevOps engineers, machine learning engineers, and business and technology leaders who want to build, deploy, and maintain ML systems in production using MLOps principles and techniques. Basic knowledge of machine learning is necessary to get started with this book.

Section 1: Framework for Building Machine Learning Models

- Chapter 1, Fundamentals of an MLOps Workflow

- Chapter 2, Characterizing Your Machine Learning Problem

- Chapter 3, Code Meets Data

- Chapter 4, Machine Learning Pipelines

- Chapter 5, Model Evaluation and Packaging

Chapter 1: Fundamentals of an MLOps Workflow

- The evolution of infrastructure and software development

- Traditional software development challenges

- Trends of ML adoption in software development

- Understanding MLOps

- Concepts and workflow of MLOps

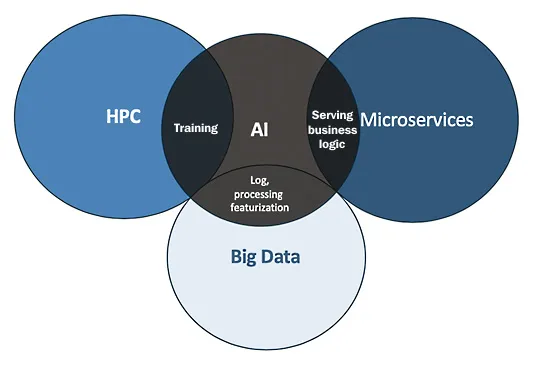

The evolution of infrastructure and software development

The rise of machine learning and deep learning

The end of Moore's law

AI-centric applications

Table of contents

- Engineering MLOps

- Contributors

- Preface

- Section 1: Framework for Building Machine Learning Models

- Chapter 1: Fundamentals of an MLOps Workflow

- Chapter 2: Characterizing Your Machine Learning Problem

- Chapter 3: Code Meets Data

- Chapter 4: Machine Learning Pipelines

- Chapter 5: Model Evaluation and Packaging

- Section 2: Deploying Machine Learning Models at Scale

- Chapter 6: Key Principles for Deploying Your ML System

- Chapter 7: Building Robust CI/CD Pipelines

- Chapter 8: APIs and Microservice Management

- Chapter 9: Testing and Securing Your ML Solution

- Chapter 10: Essentials of Production Release

- Section 3: Monitoring Machine Learning Models in Production

- Chapter 11: Key Principles for Monitoring Your ML System

- Chapter 12: Model Serving and Monitoring

- Chapter 13: Governing the ML System for Continual Learning

- Other Books You May Enjoy