Practical Program Evaluation

Theory-Driven Evaluation and the Integrated Evaluation Perspective

- 464 pages

- English

About this book

The Second Edition of Practical Program Evaluation shows readers how to systematically identify stakeholders' needs in order to select the evaluation options best suited to meet those needs. Within his discussion of the various evaluation types, Huey T. Chen details a range of evaluation approaches suitable for use across a program's life cycle. At the core of program evaluation is its body of concepts, theories, and methods. This revised edition provides an overview of these, and includes expanded coverage of both introductory and more cutting-edge techniques within six new chapters. Illustrated throughout with real-world examples that bring the material to life, the Second Edition provides many new tools to enrich the evaluator's toolbox.

Part I Introduction

Chapter 1 Fundamentals of Program Evaluation

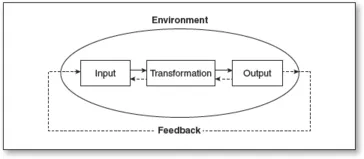

The Nature of Intervention Programs and Evaluation: A Systems View

Inputs.

Transformation.

Outputs.

Environment.

Feedback.

Classic Evaluation Concepts, Theories, and Methodologies: Contributions and Beyond

Evaluation Typologies

The Distinction Between Formative and Summative Evaluation

Table of contents

- Cover

- Half Title

- Acknowledgements

- Title Page

- Copyright Page

- Contents

- Preface

- Acknowledgements

- About the Author

- Part I Introduction

- Chapter 1 Fundamentals of Program Evaluation

- Chapter 2 Understand Approaches to Evaluation and Select Ones That Work: The Comprehensive Evaluation Typology

- Chapter 3 Logic Models and the Action Model/Change Model Schema (Program Theory)

- Part II Program Evaluation to Help Stakeholders Develop a Program Plan

- Chapter 4 Helping Stakeholders Clarify a Program Plan: Program Scope

- Chapter 5 Helping Stakeholders Clarify a Program Plan: Action Plan

- Part III Evaluating Implementation

- Chapter 6 Constructive Process Evaluation Tailored for the Initial Implementation

- Chapter 7 Assessing Implementation in the Mature Implementation Stage

- Part IV Program Monitoring and Outcome Evaluation

- Chapter 8 Program Monitoring and the Development of a Monitoring System

- Chapter 9 Constructive Outcome Evaluations

- Chapter 10 The Experimentation Evaluation Approach to Outcome Evaluation

- Chapter 11 The Holistic Effectuality Evaluation Approach to Outcome Evaluation

- Chapter 12 The Theory-Driven Approach to Outcome Evaluation

- Part V Advanced Issues in Program Evaluation

- Chapter 13 What to Do if Your Logic Model Does Not Work as Well as Expected

- Chapter 14 Formal Theories Versus Stakeholder Theories in Interventions: Relative Strengths and Limitations

- Chapter 15 Evaluation and Dissemination: Top-Down Approach Versus Bottom-Up Approach

- References

- Index

- Publisher Note
