- 504 pages

- English

- ePUB (mobile friendly)

Deep Learning with Python, Second Edition

About this book

Unlock the groundbreaking advances of deep learning with this extensively revised edition of the bestselling original. Learn directly from the creator of Keras and master practical Python deep learning techniques that are easy to apply in the real world. In Deep Learning with Python, Second Edition you will learn:

- Deep learning from first principles

- Image classification and image segmentation

- Timeseries forecasting

- Text classification and machine translation

- Text generation, neural style transfer, and image generation

Deep Learning with Python has taught thousands of readers how to put the full capabilities of deep learning into action. This extensively revised second edition introduces deep learning using Python and Keras, and is loaded with insights for both novice and experienced ML practitioners. You'll learn practical techniques that are easy to apply in the real world, and important theory for perfecting neural networks.

Purchase of the print book includes a free eBook in PDF, Kindle, and ePub formats from Manning Publications.

About the technology

Recent innovations in deep learning unlock exciting new software capabilities like automated language translation, image recognition, and more. Deep learning is becoming essential knowledge for every software developer, and modern tools like Keras and TensorFlow put it within your reach, even if you have no background in mathematics or data science.

About the book

Deep Learning with Python, Second Edition introduces the field of deep learning using Python and the powerful Keras library. In this new edition, Keras creator François Chollet offers insights for both novice and experienced machine learning practitioners. As you move through this book, you'll build your understanding through intuitive explanations, crisp illustrations, and clear examples. You'll pick up the skills to start developing deep-learning applications.

What's inside

- Deep learning from first principles

- Image classification and image segmentation

- Time series forecasting

- Text classification and machine translation

- Text generation, neural style transfer, and image generation

About the reader

For readers with intermediate Python skills. No previous experience with Keras, TensorFlow, or machine learning is required.

About the author

François Chollet is a software engineer at Google and creator of the Keras deep-learning library.

Table of Contents

1 What is deep learning?

2 The mathematical building blocks of neural networks

3 Introduction to Keras and TensorFlow

4 Getting started with neural networks: Classification and regression

5 Fundamentals of machine learning

6 The universal workflow of machine learning

7 Working with Keras: A deep dive

8 Introduction to deep learning for computer vision

9 Advanced deep learning for computer vision

10 Deep learning for timeseries

11 Deep learning for text

12 Generative deep learning

13 Best practices for the real world

14 Conclusions

1 What is deep learning?

- High-level definitions of fundamental concepts

- Timeline of the development of machine learning

- Key factors behind deep learning’s rising popularity and future potential

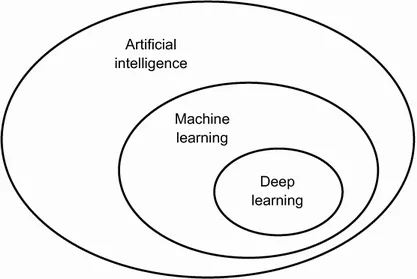

1.1 Artificial intelligence, machine learning, and deep learning

1.1.1 Artificial intelligence

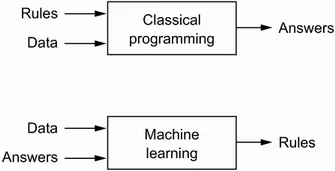

1.1.2 Machine learning

1.1.3 Learning rules and representations from data

- Input data points—For instance, if the task is speech recognition, these data points could be sound files of people speaking. If the task is image tagging, they could be pictures.

- Examples of the expected output—In a speech-recognition task, these could be human-generated transcripts of sound files. In an image task, expected outputs could be tags...