- 344 pages

- English

MLOps Engineering at Scale

About this book

Dodge costly and time-consuming infrastructure tasks, and rapidly bring your machine learning models to production with MLOps and pre-built serverless tools! In MLOps Engineering at Scale you will learn:

- Extracting, transforming, and loading datasets

- Querying datasets with SQL

- Understanding automatic differentiation in PyTorch

- Deploying model training pipelines as a service endpoint

- Monitoring and managing your pipeline's life cycle

- Measuring performance improvements

MLOps Engineering at Scale shows you how to put machine learning into production efficiently by using pre-built services from AWS and other cloud vendors. You'll learn how to rapidly create flexible and scalable machine learning systems without laboring over time-consuming operational tasks or taking on the costly overhead of physical hardware. Following a real-world use case for calculating taxi fares, you will engineer an MLOps pipeline for a PyTorch model using AWS serverless capabilities.

About the technology

A production-ready machine learning system includes efficient data pipelines, integrated monitoring, and means to scale up and down based on demand. Using cloud-based services to implement ML infrastructure reduces development time and lowers hosting costs. Serverless MLOps eliminates the need to build and maintain custom infrastructure, so you can concentrate on your data, models, and algorithms.

About the book

MLOps Engineering at Scale teaches you how to implement efficient machine learning systems using pre-built services from AWS and other cloud vendors. This easy-to-follow book guides you step by step as you set up your serverless ML infrastructure, even if you've never used a cloud platform before. You'll also explore tools like PyTorch Lightning, Optuna, and MLflow that make it easy to build pipelines and scale your deep learning models in production.

What's inside

- Reduce or eliminate ML infrastructure management

- Learn state-of-the-art MLOps tools like PyTorch Lightning and MLflow

- Deploy training pipelines as a service endpoint

- Monitor and manage your pipeline's life cycle

- Measure performance improvements

About the reader

Readers need to know Python, SQL, and the basics of machine learning. No cloud experience required.

About the author

Carl Osipov implemented his first neural net in 2000 and has worked on deep learning and machine learning at Google and IBM.

Table of Contents

PART 1 - MASTERING THE DATA SET

1 Introduction to serverless machine learning

2 Getting started with the data set

3 Exploring and preparing the data set

4 More exploratory data analysis and data preparation

PART 2 - PYTORCH FOR SERVERLESS MACHINE LEARNING

5 Introducing PyTorch: Tensor basics

6 Core PyTorch: Autograd, optimizers, and utilities

7 Serverless machine learning at scale

8 Scaling out with distributed training

PART 3 - SERVERLESS MACHINE LEARNING PIPELINE

9 Feature selection

10 Adopting PyTorch Lightning

11 Hyperparameter optimization

12 Machine learning pipeline

Part 1 Mastering the data set

1 Introduction to serverless machine learning

- What serverless machine learning is and why you should care

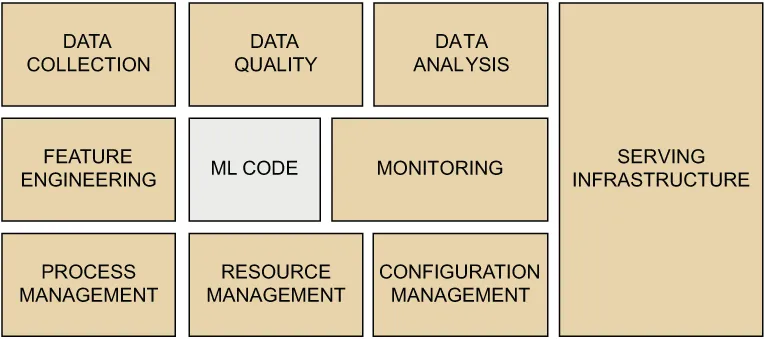

- The difference between machine learning code and a machine learning platform

- How this book teaches about serverless machine learning

- The target audience for this book

- What you can learn from this book

- How to use and integrate public cloud services, such as those from Amazon Web Services (AWS), for machine learning tasks including data ingest, storage, and processing

- How to assess and achieve data quality standards for machine learning from structured data

- How to engineer synthetic features to improve machine learning effectiveness

- How to reproducibly sample structured data into experimental subsets for exploration and analysis

- How to implement machine learning models using PyTorch and Python in a Jupyter notebook environment

- How to implement data processing and machine learning pipelines to achieve both high throughput and low latency

- How to train and deploy machine learning models that depend on data processing pipelines

- How to monitor and manage the life cycle of your machine learning system once it is put in production

1.1 What is a machine learning platform?

1.2 Challenges when designing a machine learning platform

Table of contents

- MLOps Engineering at Scale

- Copyright

- contents

- front matter

- Part 1 Mastering the data set

- 1 Introduction to serverless machine learning

- 2 Getting started with the data set

- 3 Exploring and preparing the data set

- 4 More exploratory data analysis and data preparation

- Part 2 PyTorch for serverless machine learning

- 5 Introducing PyTorch: Tensor basics

- 6 Core PyTorch: Autograd, optimizers, and utilities

- 7 Serverless machine learning at scale

- 8 Scaling out with distributed training

- Part 3 Serverless machine learning pipeline

- 9 Feature selection

- 10 Adopting PyTorch Lightning

- 11 Hyperparameter optimization

- 12 Machine learning pipeline

- Appendix A. Introduction to machine learning

- Appendix B. Getting started with Docker

- index
