- 440 pages

- English

- ePUB (mobile friendly)

Data Engineering with Google Cloud Platform

About this book

Build and deploy your own data pipelines on GCP, make key architectural decisions, and gain the confidence to boost your career as a data engineer

Key Features

- Understand data engineering concepts, the role of a data engineer, and the benefits of using GCP for building your solution

- Learn how to use the various GCP products to ingest, consume, and transform data and orchestrate pipelines

- Discover tips to prepare for and pass the Professional Data Engineer exam

Book Description

With this book, you'll understand how the highly scalable Google Cloud Platform (GCP) enables data engineers to create end-to-end data pipelines, from storing and processing data and orchestrating workflows to presenting data through visualization dashboards.

Starting with a quick overview of the fundamental concepts of data engineering, you'll learn the various responsibilities of a data engineer and how GCP plays a vital role in fulfilling them. As you progress through the chapters, you'll leverage GCP products to build a sample data warehouse using Cloud Storage and BigQuery and a data lake using Dataproc. The book gradually takes you through operations such as data ingestion, data cleansing, transformation, and integrating data with other sources. You'll learn how to design IAM for data governance, deploy ML pipelines with Vertex AI, leverage pre-built GCP models as a service, and visualize data with Google Data Studio to build compelling reports. Finally, you'll find tips on how to boost your career as a data engineer, take the Professional Data Engineer certification exam, and get ready to become an expert in data engineering with GCP.

By the end of this data engineering book, you'll have developed the skills to perform core data engineering tasks and build efficient ETL data pipelines with GCP.

What you will learn

- Load data into BigQuery and materialize its output for downstream consumption

- Build data pipeline orchestration using Cloud Composer

- Develop Airflow jobs to orchestrate and automate a data warehouse

- Build a Hadoop data lake, create ephemeral clusters, and run jobs on the Dataproc cluster

- Leverage Pub/Sub for messaging and ingestion for event-driven systems

- Use Dataflow to perform ETL on streaming data

- Unlock the power of your data with Data Studio

- Calculate the GCP cost estimation for your end-to-end data solutions

Who this book is for

This book is for data engineers, data analysts, and anyone looking to design and manage data processing pipelines using GCP. You'll find this book useful if you are preparing to take Google's Professional Data Engineer exam. A beginner-level understanding of data science, the Python programming language, and Linux commands is necessary. A basic understanding of data processing and cloud computing in general will help you get the most out of this book.

Section 1: Getting Started with Data Engineering with GCP

- Chapter 1, Fundamentals of Data Engineering

- Chapter 2, Big Data Capabilities on GCP

Chapter 1: Fundamentals of Data Engineering

- Understanding the data life cycle

- Know the roles of a data engineer before starting

- Foundational concepts for data engineering

Understanding the data life cycle

- Who will consume the data?

- What data sources should I use?

- Where should I store the data?

- When should the data arrive?

- Why does the data need to be stored in this place?

- How should the data be processed?

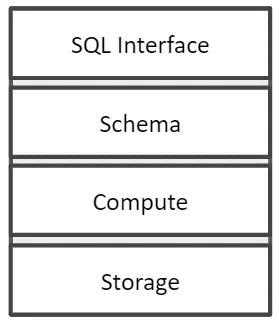

Understanding the need for a data warehouse

- Data silos have always occurred in large organizations, even back in the 1980s.

- Data comes from many operational systems.

- To process the data together, we need to store it in one place.
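The consolidation idea in the last bullet can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not code from the book: two made-up operational sources (`crm_customers` and `billing_invoices`) are copied into a single store, with Python's built-in `sqlite3` in-memory database standing in for a real warehouse such as BigQuery. Once both sources live in one place, a single query can join data that previously sat in separate silos.

```python
import sqlite3

# Hypothetical records from two separate operational systems (data silos).
crm_customers = [
    (1, "Alice", "ID"),
    (2, "Bob", "SG"),
]
billing_invoices = [
    (101, 1, 250.0),
    (102, 1, 75.5),
    (103, 2, 40.0),
]


def build_warehouse(customers, invoices):
    """Copy both sources into one store (an in-memory SQLite stand-in for a warehouse)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    conn.execute(
        "CREATE TABLE invoices (invoice_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", customers)
    conn.executemany("INSERT INTO invoices VALUES (?, ?, ?)", invoices)
    conn.commit()
    return conn


def revenue_per_customer(conn):
    """Answer a question that needs both sources at once, which is easy only after consolidation."""
    return conn.execute(
        """
        SELECT c.name, SUM(i.amount) AS total
        FROM customers AS c
        JOIN invoices AS i ON i.customer_id = c.customer_id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


if __name__ == "__main__":
    warehouse = build_warehouse(crm_customers, billing_invoices)
    for name, total in revenue_per_customer(warehouse):
        print(name, total)
```

In a GCP setting, the same pattern would typically load each source into BigQuery tables rather than SQLite; the in-memory database is used here only so the sketch runs without any cloud setup.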

Getting familiar with the differences between a data warehouse and a data lake

Table of contents

- Data Engineering with Google Cloud Platform

- Contributors

- Preface

- Section 1: Getting Started with Data Engineering with GCP

- Chapter 1: Fundamentals of Data Engineering

- Chapter 2: Big Data Capabilities on GCP

- Section 2: Building Solutions with GCP Components

- Chapter 3: Building a Data Warehouse in BigQuery

- Chapter 4: Building Orchestration for Batch Data Loading Using Cloud Composer

- Chapter 5: Building a Data Lake Using Dataproc

- Chapter 6: Processing Streaming Data with Pub/Sub and Dataflow

- Chapter 7: Visualizing Data for Making Data-Driven Decisions with Data Studio

- Chapter 8: Building Machine Learning Solutions on Google Cloud Platform

- Section 3: Key Strategies for Architecting Top-Notch Data Pipelines

- Chapter 9: User and Project Management in GCP

- Chapter 10: Cost Strategy in GCP

- Chapter 11: CI/CD on Google Cloud Platform for Data Engineers

- Chapter 12: Boosting Your Confidence as a Data Engineer

- Other Books You May Enjoy