- 270 pages

- English

Python High Performance - Second Edition

About this book

Learn how to use Python to create efficient applications

About This Book

- Identify the bottlenecks in your applications and solve them using the best profiling techniques

- Write efficient numerical code in NumPy, Cython, and Pandas

- Adapt your programs to run on multiple processors and machines with parallel programming

Who This Book Is For

The book is aimed at Python developers who want to improve the performance of their application. Basic knowledge of Python is expected.

What You Will Learn

- Write efficient numerical code with the NumPy and Pandas libraries

- Use Cython and Numba to achieve native performance

- Find bottlenecks in your Python code using profilers

- Write asynchronous code using Asyncio and RxPy

- Use Tensorflow and Theano for automatic parallelism in Python

- Set up and run distributed algorithms on a cluster using Dask and PySpark

In Detail

Python is a versatile language that has found applications in many industries. The clean syntax, rich standard library, and vast selection of third-party libraries make Python a wildly popular language.

Python High Performance is a practical guide that shows how to leverage the power of both native and third-party Python libraries to build robust applications.

The book explains how to use various profilers to find performance bottlenecks and apply the correct algorithm to fix them. The reader will learn how to effectively use NumPy and Cython to speed up numerical code. The book explains concepts of concurrent programming and how to implement robust and responsive applications using Reactive programming. Readers will learn how to write code for parallel architectures using Tensorflow and Theano, and use a cluster of computers for large-scale computations using technologies such as Dask and PySpark.

By the end of the book, readers will have learned to achieve performance and scale from their Python applications.

Style and approach

A step-by-step practical guide filled with real-world use cases and examples.

Implementing Concurrency

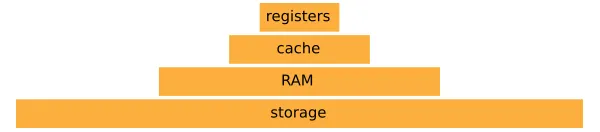

- The memory hierarchy

- Callbacks

- Futures

- Event loops

- Writing coroutines with asyncio

- Converting synchronous code to asynchronous code

- Reactive programming with RxPy

- Working with observables

- Building a memory monitor with RxPy

Asynchronous programming

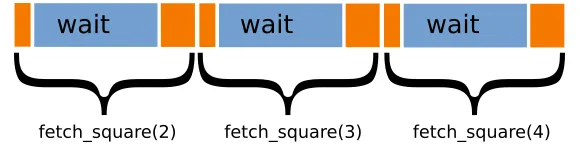

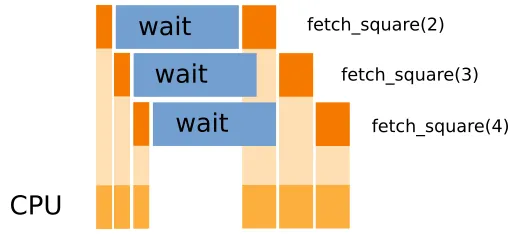

Waiting for I/O

Concurrency

```python
import time

def network_request(number):
    # Simulate a slow network call: block for one second, then reply.
    time.sleep(1.0)
    return {"success": True, "result": number ** 2}

def fetch_square(number):
    # Blocks until the simulated request returns, then prints the result.
    response = network_request(number)
    if response["success"]:
        print("Result is: {}".format(response["result"]))
```

```python
fetch_square(2)
# Output:
# Result is: 4
```

```python
fetch_square(2)
fetch_square(3)
fetch_square(4)
# Output:
# Result is: 4
# Result is: 9
# Result is: 16
```
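Because each call to `fetch_square` blocks until its simulated request returns, the three calls run one after another and their waiting times add up. The following is a minimal sketch that measures this. The `delay` parameter is an assumption added here so the example runs quickly; the listing above uses a fixed one-second sleep instead.

```python
import time

def network_request(number, delay=0.1):
    # `delay` is a hypothetical parameter added for this sketch.
    time.sleep(delay)
    return {"success": True, "result": number ** 2}

def fetch_square(number, delay=0.1):
    response = network_request(number, delay)
    if response["success"]:
        print("Result is: {}".format(response["result"]))

# Time three blocking calls made back to back.
start = time.perf_counter()
for n in (2, 3, 4):
    fetch_square(n)
elapsed = time.perf_counter() - start

# The total is roughly three times the per-request delay.
print("Elapsed: {:.2f} s".format(elapsed))
```

With the original one-second delay, the same loop would take about three seconds, even though the program spends nearly all of that time idle.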

Callbacks
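One way to avoid blocking is to hand the request a function to call when the result is ready. The sketch below is one possible callback-based variant of the earlier listing, not necessarily the chapter's own code. It assumes `threading.Timer` as a stand-in for a real network library, and the names `network_request_async`, `on_done`, and `delay` are illustrative.

```python
import threading

def network_request_async(number, on_done, delay=0.1):
    # Return immediately; `on_done` is called later from a timer thread.
    def timer_done():
        on_done({"success": True, "result": number ** 2})

    timer = threading.Timer(delay, timer_done)
    timer.start()
    return timer

results = []

def on_done(response):
    # Callback invoked once the simulated request completes.
    if response["success"]:
        results.append(response["result"])
        print("Result is: {}".format(response["result"]))

# Submit three requests without waiting for any of them.
timers = [network_request_async(n, on_done) for n in (2, 3, 4)]
print("All requests submitted")

# Wait for the timer threads so the script does not exit early.
for timer in timers:
    timer.join()
```

All three requests wait at the same time, so the total time is close to a single delay instead of three. The order in which the results are printed is not guaranteed, because each callback runs on its own timer thread.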

Table of contents

- Title Page

- Copyright

- Credits

- About the Author

- About the Reviewer

- www.PacktPub.com

- Customer Feedback

- Preface

- Benchmarking and Profiling

- Pure Python Optimizations

- Fast Array Operations with NumPy and Pandas

- C Performance with Cython

- Exploring Compilers

- Implementing Concurrency

- Parallel Processing

- Distributed Processing

- Designing for High Performance
