eBook - ePub

Researching Language Teacher Cognition and Practice

International Case Studies

- 208 pages

- English

- ePUB (mobile friendly)

- Available on iOS & Android

About this book

This book presents a novel approach to discussing how to research language teacher cognition and practice. An introductory chapter by the editors and an overview of the research field by Simon Borg precede eight case studies written by new researchers, each of which focuses on one approach to collecting data. These approaches range from questionnaires and focus groups to think aloud, stimulated recall, and oral reflective journals. Each case study is commented on by a leading expert in the field: J.D. Brown, Martin Bygate, Donald Freeman, Alan Maley, Jerry Gebhard, Thomas Farrell, Susan Gass, and Jill Burton. Readers are encouraged to enter the conversation by reflecting on a set of questions and tasks in each chapter.

Editors: Roger Barnard and Anne Burns. Subjects: Languages & Linguistics; Education Teaching Methods.

1 Questionnaires

Case Study: Judy Ng

Commentary: J.D. Brown

CASE STUDY

Introduction

This chapter reports on aspects of an in-depth study I conducted at a private university in Malaysia which sought to investigate lecturers’ beliefs about the value of feedback on their students’ written work and the extent to which the lecturers’ practices converged with their self-reported beliefs. The study adopted various procedures for data collection – including interviews (see Chapter 4), think aloud (see Chapter 6), stimulated recall (see Chapter 7) and focus groups (see Chapter 3) – but the specific focus of the chapter is the design and administration of the questionnaires issued to the lecturers.

The value of teachers’ feedback on students’ writing remains controversial, with some researchers advocating various forms of feedback (e.g. Ferris, 1995, 2009) while others (e.g. Truscott, 1996, 2007) deny its usefulness. It has long been recognised that what teachers do in practice is largely influenced by their beliefs and values (Clark & Peterson, 1986), and one of the most important aspects of this issue is the study of the contextual factors that shape teachers’ beliefs (Borg, 2006; Goldstein, 2005). While there have been wide-ranging surveys of aspects of teacher beliefs in some Asian educational contexts (Littlewood, 2007; Nunan, 2003), in-depth studies are lacking, particularly in tertiary education in Malaysia. In his comprehensive overview of studies of language teachers’ beliefs, Borg (2006) pointed to the need for more studies in areas such as second-language writing. He also emphasised the need to examine the extent to which teachers’ reported beliefs are actually put into practice.

Methodological Focus

Brown (2001: 6) defines questionnaires as ‘any written instruments that present respondents with a series of questions or statements to which they are to react either by writing out their answers or selecting from among existing answers’ and he points out that questionnaires can elicit individuals’ reactions to, perceptions of and opinions of an issue or issues. Other functions of questionnaires include: obtaining demographic information about respondents (Creswell, 2005); scoping the respondents (Cohen et al., 2007); and eliciting and measuring abstract, cognitive processes, individual preferences and values (Brown, 2001; Dörnyei & Taguchi, 2010; Wagner, 2010).

Sampling is a central issue in a questionnaire study (Brown, 2001; Creswell, 2005; Dörnyei & Taguchi, 2010), and the major difficulty lies in accurately representing the target population (Dörnyei & Taguchi, 2010; Gillham, 2000). It is usually very difficult and expensive to obtain a probability sample that reflects the actual population (Dörnyei & Taguchi, 2010: 60). However, individual researchers can still obtain reliable results using non-probability sampling strategies. Sampling procedures include probability methods, such as random sampling and stratified random sampling, and non-probability methods, such as convenience sampling, quota sampling and snowball sampling (in which the researcher identifies key respondents who meet the criteria for the research and, with their help, contacts and recruits other participants).
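The distinction between probability and non-probability sampling can be made concrete with a short sketch. The Python below is illustrative only and is not a procedure from the study: the function names and the toy population of lecturer codes are hypothetical. Simple and stratified random sampling give every member of the population (or of each stratum) a known chance of selection; snowball sampling reaches only people connected to the initial key respondents.

```python
import random
from collections import defaultdict, deque


def simple_random_sample(population, n, seed=None):
    """Probability sampling: every member has the same chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def stratified_random_sample(population, stratum_of, fraction, seed=None):
    """Probability sampling: draw the same fraction at random from each stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for member in population:
        strata[stratum_of(member)].append(member)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one per stratum
        sample.extend(rng.sample(members, k))
    return sample


def snowball_sample(seeds, referrals, meets_criteria, max_size):
    """Non-probability sampling: recruit eligible key respondents, then follow their referrals."""
    sample, queue, seen = [], deque(seeds), set(seeds)
    while queue and len(sample) < max_size:
        person = queue.popleft()
        if not meets_criteria(person):
            continue  # ineligible contacts are not recruited and refer no one
        sample.append(person)
        for contact in referrals.get(person, []):
            if contact not in seen:
                seen.add(contact)
                queue.append(contact)
    return sample


# Hypothetical population: 10 English lecturers (E) and 10 science lecturers (S).
population = [f"E{i}" for i in range(1, 11)] + [f"S{i}" for i in range(1, 11)]
stratified = stratified_random_sample(population, stratum_of=lambda code: code[0], fraction=0.5, seed=1)
snowball = snowball_sample(
    seeds=["E1"],
    referrals={"E1": ["E2", "S1"], "E2": ["S2"], "S1": ["E1"]},
    meets_criteria=lambda code: code != "S1",
    max_size=10,
)
print(len(stratified), snowball)
```

In this toy run the snowball sample never reaches anyone outside the referral network, which is one reason such samples cannot simply be assumed to represent the wider population.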

A questionnaire can be administered in a number of ways, such as by post, by email or online (Creswell, 2005; Dörnyei & Taguchi, 2010). However, these methods carry a risk of a low return rate (Brown, 2001). There are, though, two approaches that give high return rates: one is one-to-one administration, whereby the questionnaires are personally delivered to the respondents and collected either there and then or later; the other is group administration, in which all the respondents are gathered together in the same place.

Most questionnaires contain both closed- and open-ended items. The former require respondents to choose from predetermined responses on various (Likert-type) scales, to select from multiple-choice options or to rank order various alternatives (Brown, 2009; Dörnyei & Taguchi, 2010; Gillham, 2000). Although the range of possible responses is restricted, such closed-ended items enable a straightforward analysis of the data. Several authors (Brown, 2001, 2009; Cohen et al., 2007; Dörnyei & Taguchi, 2010; Gillham, 2000) have suggested using more open-ended questions in studies with small numbers of respondents and in qualitative research. Open-ended items typically include short-answer questions to elicit information which might otherwise have been overlooked; they can also serve to cross-check the validity of responses (Brown, 2009; Dörnyei & Taguchi, 2010).

It is necessary to pilot the questionnaire to ensure that the questions are not ambiguous and to check the feasibility of the procedures (Gillham, 2000; Wilson & McLean, 1994).

Once the questionnaires are completed and collected, the data need to be analysed. The type of analysis depends on the nature of the research and the number of respondents. If the number of respondents and/or items is large, Dörnyei and Taguchi (2010) recommend using software such as a spreadsheet program (e.g. Microsoft Excel) or a statistical package such as the Statistical Package for the Social Sciences (SPSS). Open-ended questions are more difficult to analyse because of the potentially wide range of response types. To address this problem, Brown (2001) suggests grouping similar responses together with the aid of a software program; for instance, recent versions of NVivo (Bazeley, 2007) can assist with the collation, management, coding and triangulation of data from multiple sources, including questionnaires.
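As a rough illustration of the two kinds of analysis described above, the Python sketch below tallies one four-point agreement item into counts and percentages (the kind of summary a spreadsheet or SPSS frequency table provides) and groups open-ended answers under keyword-based codes. The keyword matching is a deliberately crude, hypothetical stand-in for the interpretive coding a researcher would do in a tool such as NVivo (it would, for example, match "time" inside "sometimes"); it does not use NVivo or any of its features, and the responses and codebook are invented for illustration.

```python
from collections import Counter, defaultdict

AGREEMENT_4 = ["strongly disagree", "disagree", "agree", "strongly agree"]


def frequency_table(responses, scale=AGREEMENT_4):
    """Count and percentage of respondents choosing each point on the scale."""
    counts = Counter(responses)
    off_scale = set(counts) - set(scale)
    if off_scale:
        raise ValueError(f"responses not on the scale: {sorted(off_scale)}")
    total = len(responses)
    return {point: (counts[point], round(100 * counts[point] / total, 1)) for point in scale}


def group_open_responses(answers, codebook):
    """Group respondents under every code whose keywords appear in their answer."""
    groups = defaultdict(list)
    for respondent, text in answers.items():
        lowered = text.lower()
        codes = [code for code, keywords in codebook.items()
                 if any(keyword in lowered for keyword in keywords)]
        for code in codes or ["uncoded"]:
            groups[code].append(respondent)
    return dict(groups)


# Hypothetical data for illustration only.
item_responses = ["agree"] * 6 + ["strongly agree"] * 3 + ["disagree"]
print(frequency_table(item_responses))

answers = {
    "R1": "Feedback on grammar helps them improve.",
    "R2": "Marking drafts takes too much time.",
    "R3": "No comment.",
}
codebook = {"language": ["grammar", "spelling"], "workload": ["time", "workload"]}
print(group_open_responses(answers, codebook))
```

Rejecting off-scale values in `frequency_table` is a small design choice that catches data-entry errors before they distort the percentages.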

Two recently published studies were particularly relevant to my research: Lee (2003, 2009) used questionnaires to examine whether teachers’ beliefs converged with their reported practices. The relevance of these two studies lies less in their findings (which will, however, be briefly discussed below) than in their use of questionnaires to provide baseline data for subsequent phases of data collection; Lee’s (2009) study followed up an initial survey of 206 teachers by conducting telephone interviews with a convenience sample of 19 participants. Lee (2009) went on to identify 10 mismatches between teachers’ beliefs and their written feedback practice. In my view, such findings, although plausible, cannot be accurately inferred from the participants’ responses. The following might be considered a typical example from the report:

In the questionnaire survey, about 70 per cent of the teachers said they usually mark errors comprehensively. Such practice, however, does not seem to be in line with their beliefs, since the majority of the teachers practising comprehensive marking (12 out of 19) said in the interview that they prefer selective marking. (Lee, 2009: 15)

The point is that aggregate figures do not show how any individual answered both instruments: it is at least possible that the 12 interviewees were among the 30 per cent who disagreed with the questionnaire item about comprehensive marking, and if this were the case there would be no mismatch regarding this particular belief. The lesson I drew from this is the need to be very careful when combining data from two sources and generalising from them. Wherever possible, it is more accurate to analyse the findings for individual respondents, as will be shown below.
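The argument above can be checked with a toy example. In the hypothetical data below (invented for illustration, not Lee’s), 70 per cent of questionnaire respondents report comprehensive marking and most interviewees say they prefer selective marking, yet no single respondent holds both positions. The function names are illustrative; the point is that a mismatch can only be established by comparing the same person’s answers across the two sources.

```python
def share(flags):
    """Proportion of True values in an iterable of booleans."""
    flags = list(flags)
    return sum(flags) / len(flags)


def individual_mismatches(reports_comprehensive, prefers_selective):
    """Respondents whose own questionnaire and interview answers conflict.

    Both arguments map respondent -> bool; only respondents who appear in
    both sources can be compared.
    """
    both = reports_comprehensive.keys() & prefers_selective.keys()
    return sorted(r for r in both if reports_comprehensive[r] and prefers_selective[r])


# Hypothetical survey of 10 teachers: T1-T7 report comprehensive marking (70 per cent).
questionnaire = {f"T{i}": i <= 7 for i in range(1, 11)}
# Hypothetical interviews with 4 of them: 3 of the 4 prefer selective marking.
interview = {"T1": False, "T8": True, "T9": True, "T10": True}

print(share(questionnaire.values()))  # aggregate figure from the survey
print(share(interview.values()))      # aggregate figure from the interviews
print(individual_mismatches(questionnaire, interview))  # empty: no individual mismatch
```

Read side by side, the two aggregate figures suggest a contradiction, but the respondent-level comparison shows that the teachers who prefer selective marking are exactly those who did not report comprehensive marking.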

The Study

The overall aim of this research was to elicit lecturers’ beliefs about the value of feedback and to compare them with their own observed practices at a private university in Malaysia. As a first step in the multi-method data-gathering strategy, I based many of my questionnaire items on Lee’s (2003) study because her research was also conducted in Asia and, like Lee, I wanted to study the extent of mismatch between the English-language lecturers’ beliefs and their actual practices of providing feedback. However, my questionnaire covered more ground than Lee’s. In addition to background data, my questionnaire included:

(1) the types of assignments assessed;

(2) a five-point Likert-scale frequency check about the respondents’ use of criteria;

(3) a similar check on features focused on when providing feedback;

(4) a four-point agreement scale relating to their purposes in giving feedback;

(5) a checklist to be completed regarding who makes the decisions about the types of feedback to be used;

(6) three open-ended questions.
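One way to keep the six parts of such a questionnaire consistent at the data-entry stage is to describe each section and its response format explicitly. The Python sketch below is hypothetical: the section titles paraphrase the list above, but the item prompts, the labels of the five-point frequency scale and the checklist options are assumptions for illustration, not the wording of the actual instrument. The text does not state the format of part (1), so it is modelled here as a checklist.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed scale labels; the text gives only the number of points.
FREQUENCY_5 = ["never", "rarely", "sometimes", "often", "always"]
AGREEMENT_4 = ["strongly disagree", "disagree", "agree", "strongly agree"]


@dataclass
class Item:
    prompt: str
    kind: str                             # "scale", "checklist" or "open"
    options: Optional[List[str]] = None   # required for scale and checklist items

    def accepts(self, answer) -> bool:
        """Check that an answer has the format this item expects."""
        if self.kind == "scale":
            return answer in self.options
        if self.kind == "checklist":
            return isinstance(answer, (set, frozenset)) and answer <= set(self.options)
        if self.kind == "open":
            return isinstance(answer, str)
        raise ValueError(f"unknown item kind: {self.kind}")


@dataclass
class Section:
    title: str
    items: List[Item] = field(default_factory=list)


# Hypothetical prompts and options, one illustrative item per section.
questionnaire = [
    Section("Types of assignments assessed",
            [Item("Which assignments do you assess?", "checklist", ["essay", "report", "lab report"])]),
    Section("Use of criteria",
            [Item("I use marking criteria.", "scale", FREQUENCY_5)]),
    Section("Features focused on",
            [Item("I comment on grammar.", "scale", FREQUENCY_5)]),
    Section("Purposes of feedback",
            [Item("Feedback should address overall structure.", "scale", AGREEMENT_4)]),
    Section("Who decides on feedback types",
            [Item("Who decides?", "checklist", ["lecturer", "department", "faculty"])]),
    Section("Open-ended questions",
            [Item(f"Open question {n}", "open") for n in range(1, 4)]),
]
```

Because the agreement scale in this sketch has no midpoint, an answer such as "neutral" is rejected at entry rather than silently recorded.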

The questionnaire was intended to serve three purposes: to obtain some background information on the lecturers; to elicit the lecturers’ general attitudes towards feedback and their self-reported practices of providing feedback (to inform my subsequent data collection); and to invite volunteers to participate in the subsequent phases of the research. As will be noted below, although I had to adjust my original plans for this questionnaire, the three aims were broadly achieved, especially in terms of obtaining participants and eliciting general attitudes towards feedback. Some of the self-reported beliefs were, however, contradicted by the findings from the other data-gathering methods; this shows that relying on a single data-gathering tool raises issues of validity, which will be discussed in detail below.

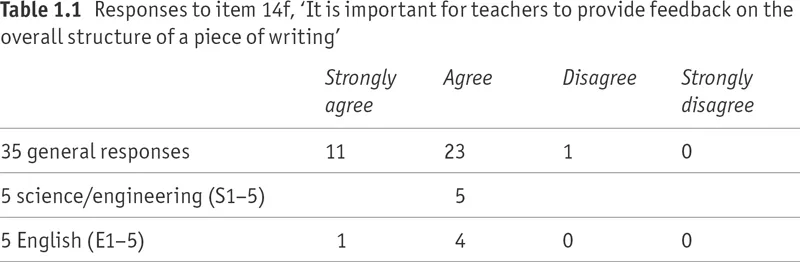

Questionnaire data samples

After a preliminary analysis of the data from a total of 35 participants, I decided to restrict the number of volunteers in the subsequent phases of data collection to 10: five English-language lecturers (coded E1–5), three (S1–3) from the engineering faculty and two (S4 and S5) from the applied science faculty. I excluded volunteers from the other departments either because their numbers were too small or because the types of courses they taught were too varied to enable a coherent pattern of beliefs and practices to emerge. To enable a brief discussion of some of the findings, Tables 1.1 and 1.2 show the range of responses to two of the four-point agreement items relating to the lecturers’ purposes in giving feedback.

Table 1.1 shows convergence of views among all 35 respondents: all but one agreed or strongly agreed that feedback ought to consider the overall structure of an assignment. More specifically, in their subsequent interviews, all five science lecturers (S1–5) considered content an important aspect of their feedback, and in their think aloud sessions (see Chapter 6) S4 and S5 checked the content by looking for correct use of scientific terminology. Although accurate referencing was not formally assessed, S3 and S4 noted students’ use of citations when they were actually marking the assignments. When asked about this in the stimulated recall sessions, both said that citations and referencing are important because their students need to do research when completing their studies abroad. In their interviews, the three science lecturers who marked lab reports (S1, S3 and S5) said they placed considerable importance on the discussion section because they needed to evaluate the students’ ability to discuss their findings critically. Also, S3 and S5 were extremely concerned that students followed the correct format in their lab reports. S5 mentioned that she allocated marks for each section because the format is standard practice among scientists. When S3 was asked in the stimulated recall session (see Chapter 7) why format was so important, he said that he liked everything to be standardised as it made the reports easier for him to read.

Some discrepancies emerged. For example, S4 mentioned in the interview that he did not really check or correct students’ language errors. However, during the think aloud session, he corrected some spelling and grammatical errors and some wrong word choices. When this issue was raised during the stimulated recall session, he said he needed to make the corrections because wrong grammar or misuse of words might change the intended meaning.

All five English lecturers focused in their think aloud and stimulated recall sessions on lower-order concerns of writing – such as sentence structure and choice of words – with a lesser emphasis on format, problem solving or critical and creative thinking. Two interesting observations were made when the English specialists were marking their students’ assignments: four of them tended to use linguistic terms which students might not understand (e.g. ‘fragment’, ‘run-on sentence’, ‘topic sentence’); and the same four used marking codes to indicate sentence-level errors (G for grammar errors and SP for spelling, etc.). These practices seem inconsistent with their concern, as reported in the questionnaire, for the overall structure of the assignment.

There was similarly overall convergence among the 35 responses to the second issue, regarding multiple drafts (Table 1.2). Within the subset of 10, S1 was the only lecturer who said in the interview that multiple drafts did not help his students. By contrast, S4 not only strongly believed that multiple drafts helped students to apply the correct referencing system but actually marked students’ drafts in the think aloud sessions. However, although S5 responded positively to the questionnaire item, in the interview she said that she felt that multiple drafts took up too much of both the lecturers’ and the students’ time; she allowed students to submit drafts only for the research project paper. In the interviews, S2 was non-committal about the benefit of multiple drafts and S3 departed from his questionnaire response, saying that he did not in practice accept multiple drafts.

In their interviews, three of the five English lecturers (E1, E3, E4) diverged from their questionnaire responses by saying that they did not believe that multiple drafts helped students to improve their writing skills. E3 made the following comment:

you make the corrections for them, of course the end product it will be very beautiful in the sense that all the grammar mistakes will disappear and you will have an easy time to mark it but I feel that that’s not very good because the students are not learning from their mistakes.

Even though E5 responded positively in the questionnaire, she chose to be neutral in the interview. She did not make it clear in what way she thought multiple drafts were beneficial and did not mention her views in her think aloud session. E2 was more consistent than her colleagues; in her interview, she repeated that multiple drafts were useful, saying that ‘practice makes perfect’ and although she did not actually mark them in her think aloud session, she showed me samples of her students’ drafts.

The most important thing I learned from this experience was not to take data elicited in questionnaires and interviews at face value. There are many reasons why people might not answer questions reliably, and this uncertainty points to the pressing need to triangulate self-reported data with data from other sources. This view not only justifies my own multi-method approach to exploring teachers’ beliefs but is also in line with the perspectives offered in all the other chapters in this volume.
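The cross-checking described here can be expressed as a simple comparison of each participant’s coded stance across data sources. The Python sketch below is illustrative: the participant codes P1 to P3, the stance labels and the data are hypothetical, and in practice reducing an interview or think-aloud protocol to a single stance is itself an interpretive step that the code does not capture.

```python
def triangulate(sources):
    """Flag participants whose coded stance differs between data sources.

    sources: source name -> {participant: stance}
    Returns participant -> {source: stance} for every participant whose
    stances, across the sources that include them, are not all the same.
    """
    participants = set().union(*(data.keys() for data in sources.values()))
    flagged = {}
    for p in sorted(participants):
        stances = {name: data[p] for name, data in sources.items() if p in data}
        if len(set(stances.values())) > 1:
            flagged[p] = stances
    return flagged


# Hypothetical coded stances on "multiple drafts help students".
sources = {
    "questionnaire": {"P1": "agree", "P2": "agree", "P3": "agree"},
    "interview": {"P1": "agree", "P2": "disagree", "P3": "neutral"},
    "think_aloud": {"P1": "agree", "P2": "disagree"},
}
for participant, stances in triangulate(sources).items():
    print(participant, stances)
```

Participants missing from a source (P3 has no think-aloud data here) are compared only across the sources in which they appear, so a gap in the data is not mistaken for a discrepancy.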

Methodological Implications

Six main issues about questionnaires are discussed in this section: item writing; piloting; gaining and maintaining access; administering the questionnaire; gaining further participation; and the role of the researcher as insider.

Using a variety of item types is essential in designing a questionnaire. According to Brown (2009), broad open-ended questions enable respondents to provide answers which are in line with their own perceptions, and such questions are especially appropriate for small numbers of respondents (Cohen et al., 2007; Dörnyei & Taguchi, 2010). However, I decided to include more closed-ended than open-ended questions because I would be able to obtain further information, and triangulate it, in the subsequent phases of data collection. Lee (2003, 2009) used a three-point Likert frequency scale, collapsing ‘rarely’ and ‘never’ into one category, but I felt that five points would produce finer-grained results. For the agree–disagree items, I decided that a four-point scale would make analysis of the data easier, by eliminating the possibility that some respondents might merely opt for a ‘safe’ middle gro...
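The consequences of these scale choices can be illustrated briefly. In the Python sketch below, the five frequency labels are assumptions (the text names only ‘rarely’ and ‘never’), and the three-point mapping merges ‘often’ and ‘always’ purely for illustration; only the merging of ‘rarely’ and ‘never’ comes from the description of Lee’s scale. The sketch shows that collapsing makes different five-point answers indistinguishable, and that a four-point agreement scale, having no midpoint, places every response on one side or the other.

```python
# Assumed five-point frequency labels.
FIVE_POINT = ["never", "rarely", "sometimes", "often", "always"]

# Hypothetical three-point collapse: only the 'rarely'/'never' merge is from the text.
THREE_POINT = {
    "never": "rarely/never",
    "rarely": "rarely/never",
    "sometimes": "sometimes",
    "often": "often/always",
    "always": "often/always",
}

FOUR_POINT = ["strongly disagree", "disagree", "agree", "strongly agree"]


def collapse(responses, mapping=THREE_POINT):
    """Recode five-point responses onto a coarser scale."""
    return [mapping[r] for r in responses]


def lean(response):
    """With no midpoint, every four-point response leans one way."""
    return "agree" if FOUR_POINT.index(response) >= len(FOUR_POINT) // 2 else "disagree"


# Two hypothetical respondents who differ on the five-point scale...
a, b = ["never", "often"], ["rarely", "always"]
print(a != b, collapse(a) == collapse(b))  # ...but look identical after collapsing
print([lean(r) for r in FOUR_POINT])
```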

Table of contents

- Cover

- Title Page

- Copyright

- Contents

- Contributors

- Introduction

- Current Approaches to Language Teacher Cognition Research: A Methodological Analysis

- 1 Questionnaires

- 2 Narrative Frames

- 3 Focus Groups

- 4 Interviews

- 5 Observation

- 6 Think Aloud

- 7 Stimulated Recall

- 8 Oral Reflective Journals

- Final Thoughts

- Index