- 470 pages

- English

- ePUB (mobile friendly)

- Available on iOS & Android

Scala Machine Learning Projects

About this book

Powerful smart applications using deep learning algorithms to dominate numerical computing, deep learning, and functional programming.

Key Features

- Explore machine learning techniques with prominent open source Scala libraries such as Spark ML, H2O, MXNet, Zeppelin, and DeepLearning4j

- Solve real-world machine learning problems by delving complex numerical computing with Scala functional programming in a scalable and faster way

- Cover all key aspects such as collection, storing, processing, analyzing, and evaluation required to build and deploy machine models on computing clusters using Scala Play framework.

Book Description

Machine learning has had a huge impact on academia and industry by turning data into actionable information. Scala has seen a steady rise in adoption over the past few years, especially in the fields of data science and analytics. This book is for data scientists, data engineers, and deep learning enthusiasts who have a background in complex numerical computing and want to know more hands-on machine learning application development.

If you're well versed in machine learning concepts and want to expand your knowledge by delving into the practical implementation of these concepts using the power of Scala, then this book is what you need! Through 11 end-to-end projects, you will be acquainted with popular machine learning libraries such as Spark ML, H2O, DeepLearning4j, and MXNet.

At the end, you will be able to use numerical computing and functional programming to carry out complex numerical tasks to develop, build, and deploy research or commercial projects in a production-ready environment.

What you will learn

- Apply advanced regression techniques to boost the performance of predictive models

- Use different classification algorithms for business analytics

- Generate trading strategies for Bitcoin and stock trading using ensemble techniques

- Train Deep Neural Networks (DNN) using H2O and Spark ML

- Utilize NLP to build scalable machine learning models

- Learn how to apply reinforcement learning algorithms such as Q-learning for developing ML application

- Learn how to use autoencoders to develop a fraud detection application

- Implement LSTM and CNN models using DeepLearning4j and MXNet

Who this book is for

If you want to leverage the power of both Scala and Spark to make sense of Big Data, then this book is for you. If you are well versed with machine learning concepts and wants to expand your knowledge by delving into the practical implementation using the power of Scala, then this book is what you need! Strong understanding of Scala Programming language is recommended. Basic familiarity with machine Learning techniques will be more helpful.

Tools to learn more effectively

Saving Books

Keyword Search

Annotating Text

Listen to it instead

Information

Options Trading Using Q-learning and Scala Play Framework

- Using Q-learning—an RL algorithm

- Options trading—what is it all about?

- Overview of technologies

- Implementing Q-learning for options trading

- Wrapping up the application as a web app using Scala Play Framework

- Model deployment

Reinforcement versus supervised and unsupervised learning

Using RL

Notation, policy, and utility in RL

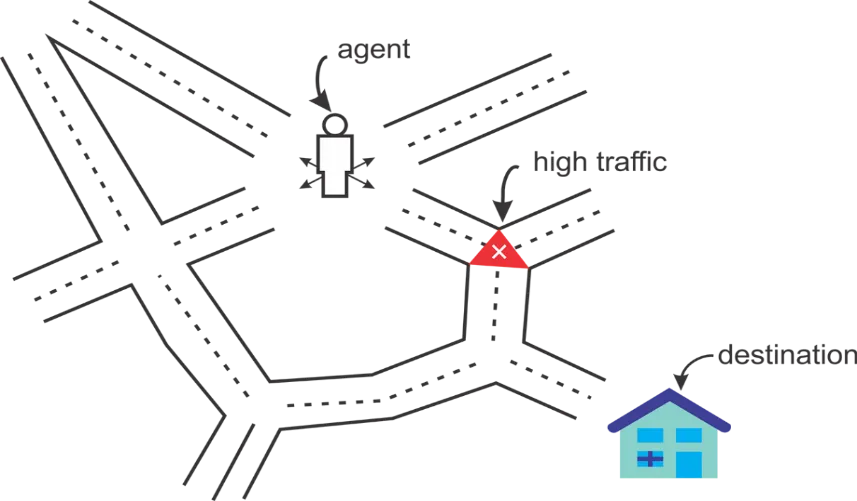

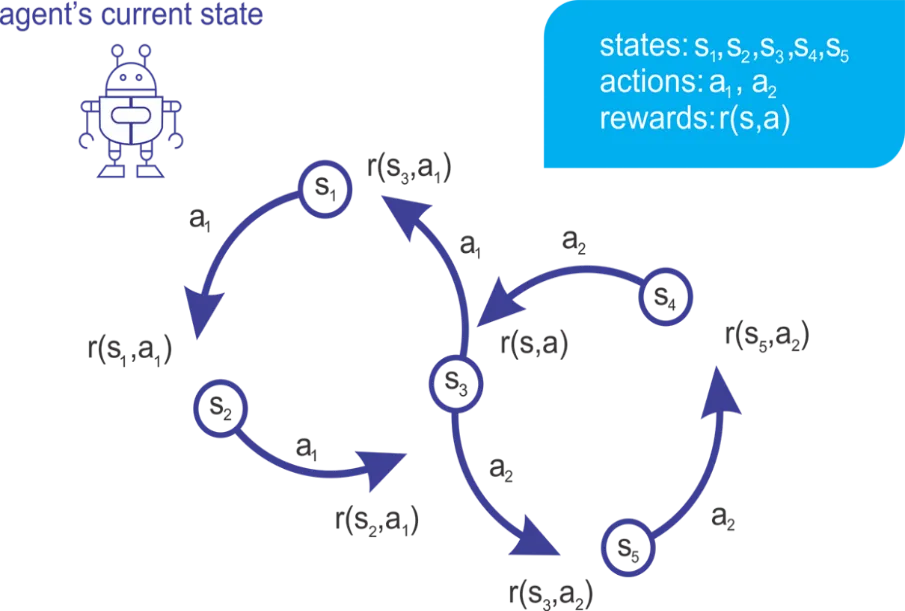

- Environment: An environment is any system having states and mechanisms to transition between different states. For example, the environment for a robot is the landscape or facility it operates in.

- Agent: An agent is an automated system that interacts with the environment.

- State: The state of the environment or system is the set of variables or features that fully describe the environment.

- Goal: A goal is a state that provides a higher discounted cumulative reward than any other state. A high cumulative reward prevents the best policy from being dependent on the initial state during training.

- Action: An action defines the transition between states, where an agent is responsible for performing, or at least recommending, an action. Upon execution of an action, the agent collects a reward (or punishment) from the environment.

- Policy: The policy defines the action to be performed and executed for any state of the environment.

- Reward: A reward quantifies the positive or negative interaction of the agent with the environment. Rewards are essentially the training set for the learning engine.

- Episode (also known as trials): This defines the number of steps necessary to reach the goal state from an initial state.

Policy

Table of contents

- Title Page

- Copyright and Credits

- Packt Upsell

- Contributors

- Preface

- Analyzing Insurance Severity Claims

- Analyzing and Predicting Telecommunication Churn

- High Frequency Bitcoin Price Prediction from Historical and Live Data

- Population-Scale Clustering and Ethnicity Prediction

- Topic Modeling - A Better Insight into Large-Scale Texts

- Developing Model-based Movie Recommendation Engines

- Options Trading Using Q-learning and Scala Play Framework

- Clients Subscription Assessment for Bank Telemarketing using Deep Neural Networks

- Fraud Analytics Using Autoencoders and Anomaly Detection

- Human Activity Recognition using Recurrent Neural Networks

- Image Classification using Convolutional Neural Networks

- Other Books You May Enjoy

Frequently asked questions

- Essential is ideal for learners and professionals who enjoy exploring a wide range of subjects. Access the Essential Library with 800,000+ trusted titles and best-sellers across business, personal growth, and the humanities. Includes unlimited reading time and Standard Read Aloud voice.

- Complete: Perfect for advanced learners and researchers needing full, unrestricted access. Unlock 1.4M+ books across hundreds of subjects, including academic and specialized titles. The Complete Plan also includes advanced features like Premium Read Aloud and Research Assistant.

Please note we cannot support devices running on iOS 13 and Android 7 or earlier. Learn more about using the app