Apache Spark Deep Learning Cookbook

Over 80 recipes that streamline deep learning in a distributed environment with Apache Spark

- 474 pages

- English

- ePUB (mobile friendly)

About this book

A solution-based guide to put your deep learning models into production with the power of Apache Spark

Key Features

- Discover practical recipes for distributed deep learning with Apache Spark

- Learn to use libraries such as Keras and TensorFlow

- Solve problems in order to train your deep learning models on Apache Spark

Book Description

With deep learning gaining rapid mainstream adoption across industries, organizations are looking for ways to combine popular big data tools with highly efficient deep learning libraries, so that deep learning models train faster and more efficiently.

With the help of the Apache Spark Deep Learning Cookbook, you'll work through specific recipes to generate outcomes for deep learning algorithms, without getting bogged down in theory. From setting up Apache Spark for deep learning to implementing several types of neural network, this book tackles both common and less common problems in performing deep learning in a distributed environment. You'll also get access to deep learning code within Spark that can be reused for similar problems or adapted to slightly different ones. You will also learn how to stream and cluster your data with Spark. Once you have got to grips with the basics, you'll explore how to implement and deploy deep learning models, such as Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN), in Spark, using popular libraries such as TensorFlow and Keras.

By the end of the book, you'll have the expertise to train and deploy efficient deep learning models on Apache Spark.

What you will learn

- Set up a fully functional Spark environment

- Understand practical machine learning and deep learning concepts

- Apply built-in machine learning libraries within Spark

- Explore libraries that are compatible with TensorFlow and Keras

- Explore NLP models such as Word2vec and TF-IDF on Spark

- Organize dataframes for deep learning evaluation

- Apply testing and training modeling to ensure accuracy

- Access readily available code that may be reusable

Who this book is for

If you're looking for a practical resource for implementing efficient distributed deep learning models with Apache Spark, then the Apache Spark Deep Learning Cookbook is for you. Knowledge of core machine learning concepts and a basic understanding of the Apache Spark framework are required to get the best out of this book. Some programming knowledge in Python is also a plus.

Creating a Neural Network in Spark

- Creating a dataframe in PySpark

- Manipulating columns in a PySpark dataframe

- Converting a PySpark dataframe into an array

- Visualizing the array in a scatterplot

- Setting up weights and biases for input into the neural network

- Normalizing the input data for the neural network

- Validating array for optimal neural network performance

- Setting up the activation function with sigmoid

- Creating the sigmoid derivative function

- Calculating the cost function in a neural network

- Predicting gender based on height and weight

- Visualizing prediction scores

Introduction

Creating a dataframe in PySpark

Getting ready

sparknotebook

How to do it...

- Import a SparkSession using the following script:

from pyspark.sql import SparkSession

- Configure a SparkSession:

spark = SparkSession.builder \
    .master("local") \
    .appName("Neural Network Model") \
    .config("spark.executor.memory", "6gb") \
    .getOrCreate()
sc = spark.sparkContext

- Here, the SparkSession application name is set to Neural Network Model, and 6gb is assigned to the executor memory through spark.executor.memory.

How it works...

- In Spark, we use .master() to specify whether our jobs run on a distributed cluster or locally. For this chapter and the remaining chapters, we execute Spark locally with one worker thread, as specified by .master('local'). This is fine for testing and development, as we are doing in this chapter; however, a single worker thread may cause performance issues in production. For better performance, it is recommended to use .master('local[*]'), which runs Spark locally with as many worker threads as the machine has logical cores. If we had 3 cores on our machine and wanted the thread count to match, we would specify .master('local[3]').
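The local master settings described above can be sketched as a small helper. This is an illustration rather than code from the book: `local_master` and `build_session` are hypothetical names introduced here, and `build_session` assumes PySpark is installed.

```python
def local_master(threads=None):
    """Return a Spark master URL for local mode (hypothetical helper).

    threads=None -> "local"     (a single worker thread)
    threads="*"  -> "local[*]"  (one worker thread per logical core)
    threads=3    -> "local[3]"  (exactly three worker threads)
    """
    if threads is None:
        return "local"
    if threads == "*":
        return "local[*]"
    if isinstance(threads, bool) or not isinstance(threads, int) or threads < 1:
        raise ValueError("threads must be None, '*', or a positive integer")
    return f"local[{threads}]"


def build_session(master, app_name="Neural Network Model"):
    """Create (or reuse) a SparkSession for the given master URL.

    PySpark is imported lazily so that local_master() works without it.
    """
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .master(master)
        .appName(app_name)
        .getOrCreate()
    )


print(local_master())      # local
print(local_master("*"))   # local[*]
print(local_master(3))     # local[3]
```

Calling `build_session(local_master("*"))` would then start a local session that uses all logical cores. Note that `getOrCreate()` returns an already running session if one exists, in which case the new master setting is not applied.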

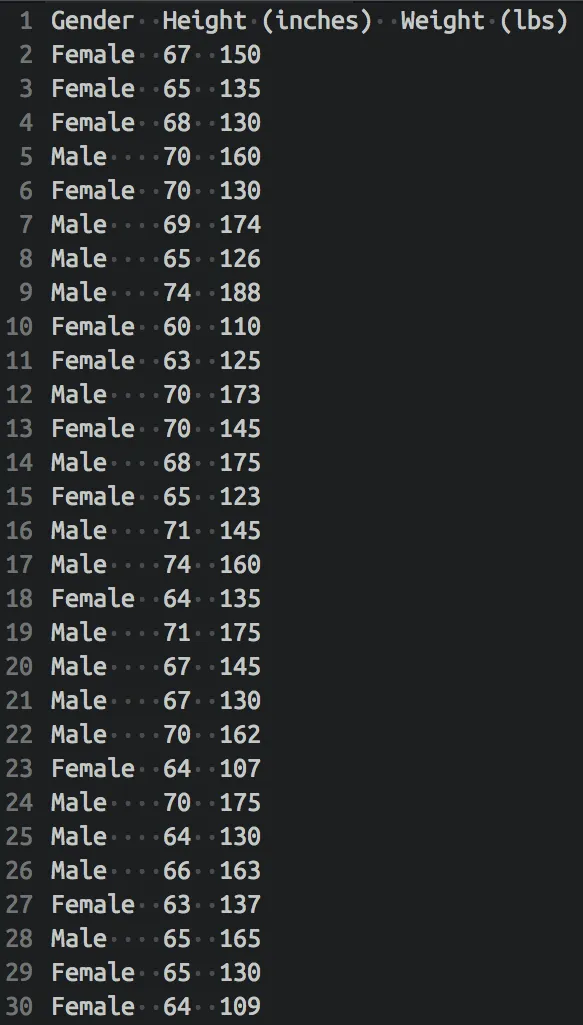

- The dataframe variable, df, is first created by inserting the row values for each column and then by inserting the column header names using the following script:

df = spark.createDataFrame([('Male', 67, 150), # insert column values
                            ('Female', 65, 135),
                            ('Female', 68, 130),
                            ('Male', 70, 160),
                            ('Female', 70, 130),
                            ('Male', 69, 174),
                            ('Female', 65, 126),
                            ('Male', 74, 188),
                            ('Female', 60, 110),
                            ('Female', 63, 125),
                            ('Male', 70, 173),
                            ('Male', 70, 145),
                            ('Male', 68, 175),
                            ('Female', 65, 123),
                            ('Male', 71, 145),
                            ('Male', 74, 160),
                            ('Female', 64, 135),
                            ('Male', 71, 175),
                            ('Male', 67, 145),
                            ('Female', 67, 130),
                            ('Male', 70, 162),
                            ('Female', 64, 107),
                            ('Male', 70, 175),
                            ('Female', 64, 130),
                            ('Male', 66, 16...

Table of contents

- Title Page

- Copyright and Credits

- Packt Upsell

- Foreword

- Contributors

- Preface

- Setting Up Spark for Deep Learning Development

- Creating a Neural Network in Spark

- Pain Points of Convolutional Neural Networks

- Pain Points of Recurrent Neural Networks

- Predicting Fire Department Calls with Spark ML

- Using LSTMs in Generative Networks

- Natural Language Processing with TF-IDF

- Real Estate Value Prediction Using XGBoost

- Predicting Apple Stock Market Cost with LSTM

- Face Recognition Using Deep Convolutional Networks

- Creating and Visualizing Word Vectors Using Word2Vec

- Creating a Movie Recommendation Engine with Keras

- Image Classification with TensorFlow on Spark

- Other Books You May Enjoy