Machine Learning Algorithms

Popular algorithms for data science and machine learning, 2nd Edition

- 522 pages

- English

- ePUB (mobile friendly)

About this book

An easy-to-follow, step-by-step guide for getting to grips with the real-world application of machine learning algorithms

Key Features

- Explore statistics and complex mathematics for data-intensive applications

- Discover new developments in the EM algorithm, PCA, and Bayesian regression

- Study patterns and make predictions across various datasets

Book Description

Machine learning has gained tremendous popularity for its fast, powerful predictions on large datasets. However, the true forces behind its output are complex algorithms, backed by substantial statistical analysis, that churn through large datasets and generate meaningful insight.

This second edition of Machine Learning Algorithms walks you through prominent developments in machine learning algorithms that have made major contributions to the machine learning process, and helps you strengthen and master statistical interpretation across supervised, semi-supervised, and reinforcement learning. Once the core concepts of an algorithm have been covered, you'll explore real-world examples based on the most widely used libraries, such as scikit-learn, NLTK, TensorFlow, and Keras. You will discover new topics such as principal component analysis (PCA), independent component analysis (ICA), Bayesian regression, discriminant analysis, advanced clustering, and Gaussian mixtures.

By the end of this book, you will have studied machine learning algorithms and be able to put them into production to make your machine learning applications more innovative.

What you will learn

- Study feature selection and the feature engineering process

- Assess performance and error trade-offs for linear regression

- Build a data model and understand how it works by using different types of algorithm

- Learn to tune the parameters of Support Vector Machines (SVM)

- Explore the concept of natural language processing (NLP) and recommendation systems

- Create a machine learning architecture from scratch

Who this book is for

Machine Learning Algorithms is for you if you are a machine learning engineer, data engineer, or junior data scientist who wants to advance in the field of predictive analytics and machine learning. Familiarity with R and Python will be an added advantage for getting the best from this book.

Linear Classification Algorithms

- The general structure of a linear classification problem

- Logistic regression (with and without regularization)

- SGD algorithms and perceptron

- Passive-aggressive algorithms

- Grid search of optimal hyperparameters

- The most important classification metrics

- The Receiver Operating Characteristic (ROC) curve
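A few of the topics in this chapter outline (regularized logistic regression, SGD training, and the accuracy and ROC AUC metrics) can be illustrated with a minimal pure-Python sketch. It is not taken from the book, which works with libraries such as scikit-learn; the toy dataset, the function names, and the hyperparameter values (`lr`, `l2`, `epochs`) are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_blobs(n_per_class, rng):
    # Hypothetical toy data: two 2-D Gaussian blobs centred at (-2, -2) and (2, 2).
    X, y = [], []
    for label, centre in ((0, -2.0), (1, 2.0)):
        for _ in range(n_per_class):
            X.append([rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)])
            y.append(label)
    return X, y


def train_logistic_sgd(X, y, lr=0.1, l2=0.01, epochs=50, seed=0):
    # Stochastic gradient descent on the L2-regularized log-loss,
    # updating the weights after every single sample.
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            err = p - y[i]  # gradient of the log-loss w.r.t. the linear output
            w = [wj - lr * (err * xj + l2 * wj) for wj, xj in zip(w, X[i])]
            b -= lr * err   # the bias term is left unregularized
    return w, b


def predict_proba(X, w, b):
    # Estimated probability of class 1 for each sample.
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]


def accuracy(y_true, scores, threshold=0.5):
    # Fraction of samples whose thresholded score matches the label.
    hits = sum((s >= threshold) == bool(t) for t, s in zip(y_true, scores))
    return hits / len(y_true)


def roc_auc(y_true, scores):
    # AUC computed as the probability that a random positive sample
    # scores higher than a random negative one (ties count as 0.5).
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


rng = random.Random(42)
X_train, y_train = make_blobs(100, rng)
X_test, y_test = make_blobs(50, rng)

w, b = train_logistic_sgd(X_train, y_train)
scores = predict_proba(X_test, w, b)
print("accuracy:", accuracy(y_test, scores))
print("ROC AUC:", roc_auc(y_test, scores))
```

The pairwise AUC computation is quadratic in the number of samples, which is fine for a toy example; a library routine would normally be preferred on real data.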

Linear classification

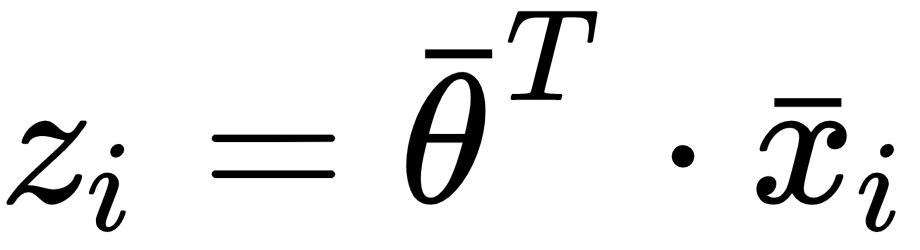

Logistic regression

Table of contents

- Title Page

- Copyright and Credits

- Dedication

- Packt Upsell

- Contributors

- Preface

- A Gentle Introduction to Machine Learning

- Important Elements in Machine Learning

- Feature Selection and Feature Engineering

- Regression Algorithms

- Linear Classification Algorithms

- Naive Bayes and Discriminant Analysis

- Support Vector Machines

- Decision Trees and Ensemble Learning

- Clustering Fundamentals

- Advanced Clustering

- Hierarchical Clustering

- Introducing Recommendation Systems

- Introducing Natural Language Processing

- Topic Modeling and Sentiment Analysis in NLP

- Introducing Neural Networks

- Advanced Deep Learning Models

- Creating a Machine Learning Architecture

- Other Books You May Enjoy
