Differential Evolution in Chemical Engineering

Developments and Applications

- 452 pages

- English



About this book


Optimization has played a key role in the design, planning and operation of chemical and related processes for several decades. Techniques for solving optimization problems are either deterministic or stochastic. Stochastic techniques can be applied to a very wide range of optimization problems, including nonconvex and non-differentiable ones, and can be adapted to handle multiple objectives. Differential evolution (DE), proposed about two decades ago, is one such stochastic technique. Its algorithm is simple to understand and use, and DE has found many applications in chemical engineering.
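To make the claim that DE is simple to understand and use concrete, here is a minimal sketch of the classic DE/rand/1/bin scheme of Storn and Price in Python. It is not taken from the book: the function name `differential_evolution` is a hypothetical helper (not SciPy's function of the same name), and the default settings (population size 20, mutation factor F = 0.5, crossover rate CR = 0.9) and the clipping of trial points to the bounds are illustrative assumptions. The chapters may well use other DE variants.

```python
import random
from typing import Callable, List, Sequence, Tuple


def differential_evolution(
    f: Callable[[Sequence[float]], float],
    bounds: Sequence[Tuple[float, float]],
    pop_size: int = 20,
    F: float = 0.5,
    CR: float = 0.9,
    generations: int = 200,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize f over a box with DE/rand/1/bin (hypothetical helper, not a library API)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Initial population: uniform random points inside the bounds.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [f(x) for x in pop]
    for _ in range(generations):
        new_pop, new_cost = pop[:], cost[:]
        for i in range(pop_size):
            # Mutation: three distinct members, all different from the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][k] + F * (pop[b][k] - pop[c][k]) for k in range(dim)]
            # Binomial crossover; j_rand guarantees at least one mutant component.
            j_rand = rng.randrange(dim)
            trial = []
            for k in range(dim):
                v = mutant[k] if (rng.random() < CR or k == j_rand) else pop[i][k]
                lo, hi = bounds[k]
                trial.append(min(max(v, lo), hi))  # assumption: clip to the bounds
            # Greedy one-to-one selection: keep the trial if it is no worse.
            t_cost = f(trial)
            if t_cost <= cost[i]:
                new_pop[i], new_cost[i] = trial, t_cost
        pop, cost = new_pop, new_cost
    best = min(range(pop_size), key=cost.__getitem__)
    return pop[best], cost[best]


# Usage: minimize the 2-D sphere function, whose global minimum is 0 at the origin.
best_x, best_f = differential_evolution(lambda x: sum(v * v for v in x), [(-5, 5), (-5, 5)])
print(best_x, best_f)
```

Each generation consists only of mutation (a scaled difference of two random members added to a third), binomial crossover and greedy selection. This is why DE has so few control parameters (essentially F, CR and the population size).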

This unique compendium focuses on DE, its recent developments and its applications in chemical engineering. It covers both single-objective and multi-objective optimization. The book contains several chapters by the experienced editors as well as chapters contributed by active researchers in this area.

Contents:

- Part I:

- Introduction (Shivom Sharma and Gade Pandu Rangaiah)

- Part II:

- Differential Evolution: Method, Developments and Chemical Engineering Applications (Shaoqiang Chen, Gade Pandu Rangaiah and Mekapati Srinivas)

- Application of Differential Evolution in Chemical Reaction Engineering (Mohammad Reza Rahimpour and Nazanin Hamedi)

- Differential Evolution with Tabu List for Global Optimization: Evaluation of Two Versions on Benchmark and Phase Stability Problems (Mekapati Srinivas and Gade Pandu Rangaiah)

- Integrated Multi-Objective Differential Evolution and Its Application to Amine Absorption Process for Natural Gas Sweetening (Shivom Sharma, Gade Pandu Rangaiah and François Maréchal)

- Part III:

- Heat Exchanger Network Retrofitting Using Multi-Objective Differential Evolution (Bhargava Krishna Sreepathi, Shivom Sharma and Gade Pandu Rangaiah)

- Phase Stability and Equilibrium Calculations in Reactive Systems Using Differential Evolution and Tabu Search (Adrián Bonilla-Petriciolet, Gade Pandu Rangaiah, Juan Gabriel Segovia-Hernández and José Enrique Jaime-Leal)

- Integrated Synthesis and Differential Evolution Methodology for Design and Optimization of Distillation Processes (Massimiliano Errico, Carlo Edgar Torres-Ortega and Ben-Guang Rong)

- Optimization of Intensified Separation Processes Using Differential Evolution with Tabu List (Eduardo Sánchez-Ramírez, Juan José Quiroz-Ramírez, César Ramírez-Márquez, Gabriel Contreras-Zarazúa, Juan Gabriel Segovia-Hernández and Adrián Bonilla-Petriciolet)

- Process Development and Optimization of Bioethanol Recovery and Dehydration by Distillation and Vapor Permeation for Multiple Objectives (Ashish Singh and Gade Pandu Rangaiah)

- Optimal Control of a Fermentation Process for Xylitol Production Using Differential Evolution (Laís Koop, Marcos Lúcio Corazza, Fernando Augusto Pedersen Voll and Adrián Bonilla-Petriciolet)

- Nested Differential Evolution for Mixed-Integer Bi-Level Optimization for Genome-Scale Metabolic Networks (Feng-Sheng Wang)

- Applications of Differential Evolution in Polymerization Reaction Engineering (Elena-Niculina Dragoi and Silvia Curteanu)


Readership: Researchers, academics, professionals and graduate students in chemical engineering and optimization.


Part I

Chapter 1

Introduction

École Polytechnique Fédérale de Lausanne, CH-1951 Sion, Switzerland

²Department of Chemical and Biomolecular Engineering, National University of Singapore, 117585 Singapore

1.1 Process Optimization

1.2 Classification of Optimization Methods

| Characteristic | Classification |

|---|---|

| Number of variables: one or more | Single variable or multivariable optimization |

| Type of variables: real, integer or mixed | Nonlinear, integer or mixed (nonlinear) integer programming |

| Nature of equations: liner or nonlinear | Linear or nonlinear programming |

| Constraints: no constraints (besides bounds) or with constraints | Unconstrained or constrained optimization |

| Number of objectives: one or more | Single-objective or multi-objective optimization |

| Derivatives: without or using derivatives | Direct or gradient search optimization |

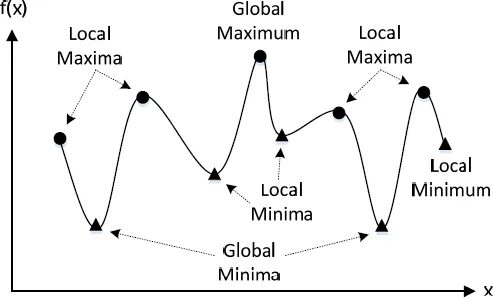

| Optimum: local or global in the search space | Local or global optimization |

| Random numbers: without or using random numbers | Deterministic or stochastic optimization methods |

| Trial points/solutions: one or more in each iteration | Single point (also known as trajectory) or population based methods |

1.2.1 Use of derivatives

1.2.2 Local and global methods

1.2.3 Deterministic or stochastic methods

