Hands-On Neural Networks with Keras

Design and create neural networks using deep learning and artificial intelligence principles

- 462 pages

- English

- ePUB (mobile friendly)

- Available on iOS & Android

About this book

Your one-stop guide to learning and implementing artificial neural networks with Keras effectively

Key Features

- Design and create neural network architectures on different domains using Keras

- Integrate neural network models in your applications using this highly practical guide

- Get ready for the future of neural networks through transfer learning and multi-network predictive models

Book Description

Neural networks are used to solve a wide range of problems in different areas of AI and deep learning.

Hands-On Neural Networks with Keras starts by teaching you the core concepts of neural networks. You will delve into combining different neural network models and work with real-world use cases, including computer vision, natural language understanding, and synthetic data generation. Moving on, you will become well versed in convolutional neural networks (CNNs), recurrent neural networks (RNNs), long short-term memory (LSTM) networks, autoencoders, and generative adversarial networks (GANs) using real-world training datasets. You will examine how to use CNNs for image recognition and how to use reinforcement learning agents, among other topics. You will then dive into the specific architectures of various networks and implement each of them in a hands-on manner using industry-grade frameworks.

By the end of this book, you will be highly familiar with all prominent deep learning models and frameworks, and the options you have when applying deep learning to real-world scenarios and embedding artificial intelligence as the core fabric of your organization.

What you will learn

- Understand the fundamental nature and workflow of predictive data modeling

- Explore how different types of visual and linguistic signals are processed by neural networks

- Dive into the mathematical and statistical ideas behind how networks learn from data

- Design and implement various neural networks such as CNNs, LSTMs, and GANs

- Use different architectures to tackle cognitive tasks and embed intelligence in systems

- Learn how to generate synthetic data and use augmentation strategies to improve your models

- Stay on top of the latest academic and commercial developments in the field of AI

Who this book is for

This book is for machine learning practitioners, deep learning researchers, and AI enthusiasts who want to become well versed in different neural network architectures using Keras. A working knowledge of the Python programming language is required.

Section 1: Fundamentals of Neural Networks

- Chapter 1, Overview of Neural Networks

- Chapter 2, A Deeper Dive into Neural Networks

- Chapter 3, Signal Processing – Data Analysis with Neural Networks

Overview of Neural Networks

- Defining our goal

- Knowing our tools

- Understanding neural networks

- Observing the brain

- Information modeling and functional representations

- Some fundamental refreshers in data science

Defining our goal

Knowing our tools

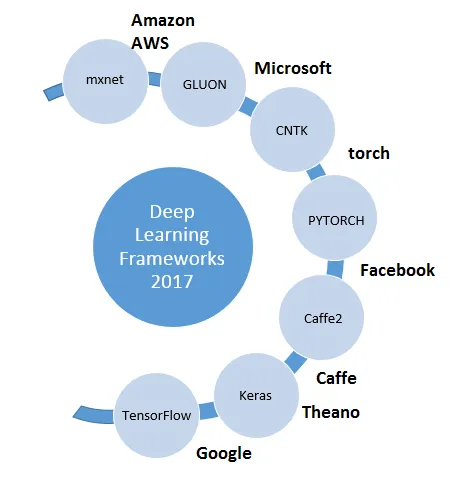

Keras

- Easy and fast prototyping

- Supports implementation of several of the latest neural network architectures, as well as pretrained models and exercise datasets

- Runs seamlessly on both CPUs and GPUs

TensorFlow

The fundamentals of neural learning

What is a neural network?

Observing the brain

Table of contents

- Title Page

- Copyright and Credits

- About Packt

- Contributors

- Preface

- Section 1: Fundamentals of Neural Networks

- Overview of Neural Networks

- A Deeper Dive into Neural Networks

- Signal Processing - Data Analysis with Neural Networks

- Section 2: Advanced Neural Network Architectures

- Convolutional Neural Networks

- Recurrent Neural Networks

- Long Short-Term Memory Networks

- Reinforcement Learning with Deep Q-Networks

- Section 3: Hybrid Model Architecture

- Autoencoders

- Generative Networks

- Section 4: Road Ahead

- Contemplating Present and Future Developments

- Other Books You May Enjoy
