Mastering Machine Learning on AWS

Advanced machine learning in Python using SageMaker, Apache Spark, and TensorFlow

- 306 pages

- English

- ePUB (mobile friendly)


About this book

Gain expertise in ML techniques with AWS to create interactive apps using SageMaker, Apache Spark, and TensorFlow.

Key Features

- Build machine learning apps on Amazon Web Services (AWS) using SageMaker, Apache Spark and TensorFlow

- Learn model optimization and understand how to scale your models using simple and secure APIs

- Develop, train, tune and deploy neural network models to accelerate model performance in the cloud

Book Description

AWS is constantly driving innovation that empowers data scientists to explore a variety of machine learning (ML) cloud services. This book is your comprehensive reference for learning and implementing advanced ML algorithms in the AWS cloud.

As you go through the chapters, you'll gain insights into how these algorithms can be trained, tuned, and deployed in AWS using Apache Spark on Elastic MapReduce (EMR), SageMaker, and TensorFlow. While you focus on algorithms such as XGBoost, linear models, factorization machines, and deep nets, the book also provides an overview of AWS as well as detailed practical applications that will help you solve real-world problems. Every practical application includes a series of companion notebooks with all the code needed to run it on AWS. Along the way, you will learn to use SageMaker and EMR Notebooks to perform a range of tasks, from smart analytics and predictive modeling to sentiment analysis.
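Factorization machines are one of the algorithms named above. As a hedged illustration, here is a plain-Python sketch of the standard degree-2 factorization machine score. It is not the book's code and not SageMaker's implementation. The sketch adds a global bias and per-feature linear weights to pairwise feature interactions, and each interaction is weighted by the dot product of two learned latent vectors. The function names and toy parameters are illustrative assumptions. The naive double loop is included only to cross-check the faster O(k·n) identity.

```python
from typing import Sequence


def fm_predict(x: Sequence[float], w0: float, w: Sequence[float],
               v: Sequence[Sequence[float]]) -> float:
    """Degree-2 factorization machine score for one feature vector.

    y = w0 + sum_i w[i]*x[i] + sum_{i<j} <v[i], v[j]> * x[i] * x[j]

    The pairwise sum is computed in O(k*n) with the identity
    0.5 * sum_f [(sum_i v[i][f]*x[i])**2 - sum_i (v[i][f]*x[i])**2].
    """
    n = len(x)
    k = len(v[0]) if n else 0  # number of latent factors per feature
    linear = w0 + sum(w[i] * x[i] for i in range(n))
    pairwise = 0.0
    for f in range(k):
        s = sum(v[i][f] * x[i] for i in range(n))
        s_sq = sum((v[i][f] * x[i]) ** 2 for i in range(n))
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise


def fm_predict_naive(x, w0, w, v):
    """Same score via the explicit O(k*n^2) double loop, for cross-checking."""
    n = len(x)
    y = w0 + sum(w[i] * x[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            dot = sum(a * b for a, b in zip(v[i], v[j]))
            y += dot * x[i] * x[j]
    return y


# Hypothetical toy parameters: 3 features, 2 latent factors each.
x = [1.0, 0.0, 2.0]
w0 = 0.5
w = [0.1, 0.2, 0.3]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

print(fm_predict(x, w0, w, v))        # approximately 2.2
print(fm_predict_naive(x, w0, w, v))  # same value
```

If every latent vector is zero, the interaction term vanishes and the model reduces to a plain linear model. This is one reason factorization machines are often described as a generalization of linear models.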

By the end of this book, you will be equipped with the skills you need to effectively handle machine learning projects and implement and evaluate algorithms on AWS.

What you will learn

- Manage AI workflows by using the AWS cloud to deploy services that feed smart data products

- Use SageMaker services to create recommendation models

- Scale model training and deployment using Apache Spark on EMR

- Understand how to cluster big data through EMR and seamlessly integrate it with SageMaker

- Build deep learning models on AWS using TensorFlow and deploy them as services

- Enhance your apps by combining Apache Spark and Amazon SageMaker

Who this book is for

This book is for data scientists, machine learning developers, deep learning enthusiasts and AWS users who want to build advanced models and smart applications on the cloud using AWS and its integration services. Some understanding of machine learning concepts, Python programming and AWS will be beneficial.


Section 1: Machine Learning on AWS

- Chapter 1, Getting Started with Machine Learning for AWS

Getting Started with Machine Learning for AWS

- How AWS empowers data scientists

- Identifying candidate problems that can be solved using ML

- The ML project life cycle

- Deploying models

How AWS empowers data scientists

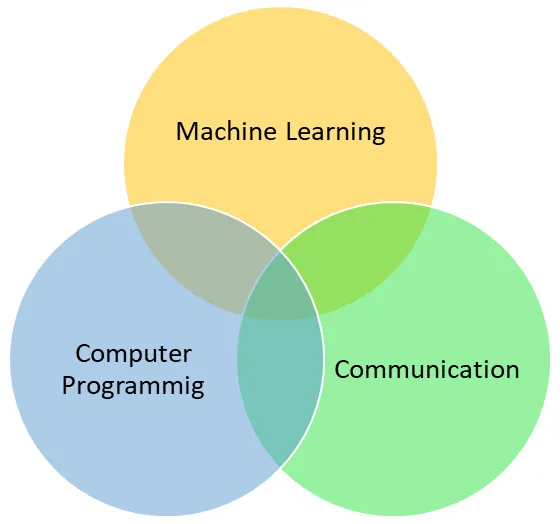

- ML: ML algorithms provide tools to analyze and learn from large amounts of data and to generate predictions or recommendations from that data. ML is an important tool for analyzing structured data (such as databases) and unstructured data (such as text documents) and for inferring actionable insights from them. A data scientist should be an expert in a wide range of ML algorithms. Because many different algorithms can solve a given problem, they should know which one to apply in each situation.

- Computer programming: A data scientist should be an adept programmer, able to write code that uses various ML and statistical libraries. Programming languages such as Scala, Python, and R provide libraries that let us apply ML algorithms to a dataset. Knowledge of such tools helps a data scientist perform complex tasks within a feasible time frame, which is crucial in a business environment.

- Communication: Along with discovering trends in the data and building complex ML models, a data scientist is also tasked with explaining these findings to business teams. Hence, a data scientist must possess not only good communication skills but also good analytical and visualization skills. These skills help them present complex data models in a way that people unfamiliar with ML can easily understand, convey their findings to business teams, and guide those teams on expected outcomes.
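The ML skill described above involves knowing which algorithm fits a given situation. One common, library-agnostic way to make that choice is to fit several candidate models on training data and compare their error on held-out validation data. Below is a minimal sketch in plain Python using two candidates: a constant mean baseline and a least-squares line. The toy data and function names are illustrative assumptions, not taken from the book. In practice you would rely on a library such as scikit-learn or on SageMaker's built-in algorithms rather than hand-rolled models.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline: always predict the training mean of y."""
    c = mean(ys)
    return lambda x: c


def fit_line(xs, ys):
    """Ordinary least-squares line y = a + b*x for a single feature."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return lambda x: a + b * x


def mse(model, xs, ys):
    """Mean squared error of a fitted model on (xs, ys)."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def select_model(candidates, train, valid):
    """Fit every candidate on train, score on valid, return the lowest-MSE name."""
    scores = {name: mse(fit(*train), *valid) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores


# Hypothetical toy data (e.g. a size feature vs. a price target).
train = ([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 8.0, 9.9])
valid = ([6, 7], [12.1, 13.8])

best, scores = select_model({"mean": fit_mean, "linear": fit_line}, train, valid)
print(best)  # linear
```

Scoring on data the models did not see during fitting is what makes the comparison meaningful. Comparing training error alone would tend to favor whichever model is more flexible.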

Using AWS tools for ML

Identifying candidate problems that can be solved using ML

The ML project life cycle

Data gathering

Evaluation metrics

Table of contents

- Title Page

- Copyright and Credits

- Dedication

- About Packt

- Contributors

- Preface

- Section 1: Machine Learning on AWS

- Getting Started with Machine Learning for AWS

- Section 2: Implementing Machine Learning Algorithms at Scale on AWS

- Classifying Twitter Feeds with Naive Bayes

- Predicting House Value with Regression Algorithms

- Predicting User Behavior with Tree-Based Methods

- Customer Segmentation Using Clustering Algorithms

- Analyzing Visitor Patterns to Make Recommendations

- Section 3: Deep Learning

- Implementing Deep Learning Algorithms

- Implementing Deep Learning with TensorFlow on AWS

- Image Classification and Detection with SageMaker

- Section 4: Integrating Ready-Made AWS Machine Learning Services

- Working with AWS Comprehend

- Using AWS Rekognition

- Building Conversational Interfaces Using AWS Lex

- Section 5: Optimizing and Deploying Models through AWS

- Creating Clusters on AWS

- Optimizing Models in Spark and SageMaker

- Tuning Clusters for Machine Learning

- Deploying Models Built in AWS

- Appendix: Getting Started with AWS

- Other Books You May Enjoy
