About this book

A fascinating and instructive guide to Markov chains for experienced users and newcomers alike

This unique guide to Markov chains approaches the subject along the four convergent lines of mathematics, implementation, simulation, and experimentation. It introduces readers to the art of stochastic modeling, shows how to design computer implementations, and provides extensive worked examples with case studies.

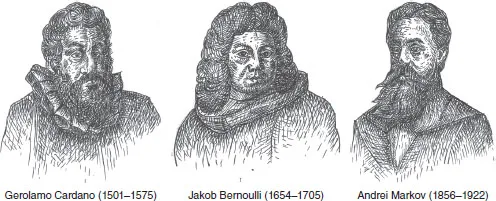

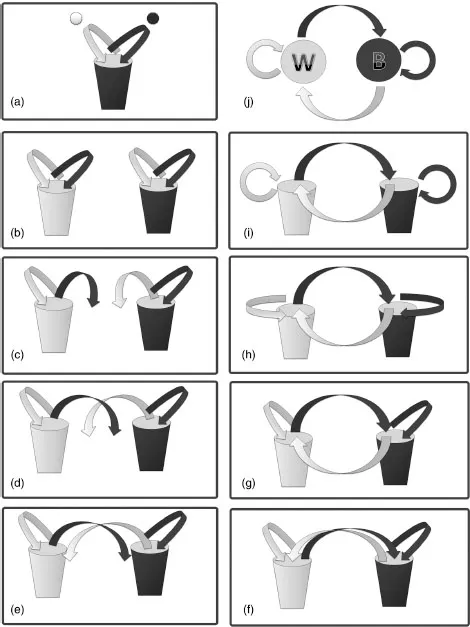

Markov Chains: From Theory to Implementation and Experimentation begins with a general introduction to the history of probability theory, in which the author uses quantifiable examples to show how probability theory moved from experiments involving independent variables to the concepts of discrete time and the Markov model. An introduction to simple stochastic matrices and transition probabilities is followed by a simulation of a two-state Markov chain. The notion of steady state is explored in connection with the long-run distribution of the Markov chain. Predictions based on Markov chains with more than two states are then examined, followed by a discussion of absorbing Markov chains. Also covered in detail are the average time spent in each state, various chain configurations, and n-state Markov chain simulations used to verify experiments involving different diagram configurations.

• Fascinating historical notes shed light on the key ideas that led to the development of the Markov model and its variants

• Various configurations of Markov chains and their limitations are explored at length

• Numerous examples—from basic to complex—are presented in a comparative manner using a variety of color graphics

• All algorithms presented can be analyzed in Visual Basic, JavaScript, or PHP

• Designed to be useful to professional statisticians as well as readers without extensive knowledge of probability theory

Covering both the theory underlying the Markov model and an array of Markov chain implementations within a common conceptual framework, Markov Chains: From Theory to Implementation and Experimentation is both a stimulating introduction and a valuable reference for readers who wish to deepen their understanding of this highly useful statistical tool.

Paul A. Gagniuc, PhD, is Associate Professor at the Polytechnic University of Bucharest, Romania. He obtained his MS and PhD in genetics at the University of Bucharest. Dr. Gagniuc's work has been published in numerous high-profile scientific journals, ranging from the Public Library of Science to BioMed Central and Nature journals. He has received several awards for exceptional scientific results and is highly active as a peer reviewer across various scientific fields.

Table of contents

- Cover

- Title page

- Copyright

- Dedication

- Abstract

- Preface

- Acknowledgments

- About the Companion Website

- 1 Historical Notes

- 2 From Observation to Simulation

- 3 Building the Stochastic Matrix

- 4 Predictions Using Two-State Markov Chains

- 5 Predictions Using n-State Markov Chains

- 6 Absorbing Markov Chains

- 7 The Average Time Spent in Each State

- 8 Discussions on Different Configurations of Chains

- 9 The Simulation of an n-State Markov Chain

- A Supporting Algorithms in PHP

- B Supporting Algorithms in JavaScript

- C Syntax Equivalence between Languages

- Glossary

- References

- Index

- EULA
