
Deep Learning For Dummies

About this book

Take a deep dive into deep learning

Deep learning provides the means for discerning patterns in the data that drive online business and social media outlets. Deep Learning For Dummies gives you the information you need to take the mystery out of the topic and all of its underlying technologies.

In no time, you'll make sense of those increasingly confusing algorithms and find a simple and safe environment in which to experiment with deep learning. The book helps you develop a precise, high-level sense of what deep learning can do and then provides examples of the major types of deep learning applications.

- Includes sample code

- Provides real-world examples within the approachable text

- Offers hands-on activities to make learning easier

- Shows you how to use deep learning more effectively with the right tools

This book is perfect for those who want to better understand the basis of the underlying technologies that we use each and every day.


Discovering Deep Learning

Introducing Deep Learning

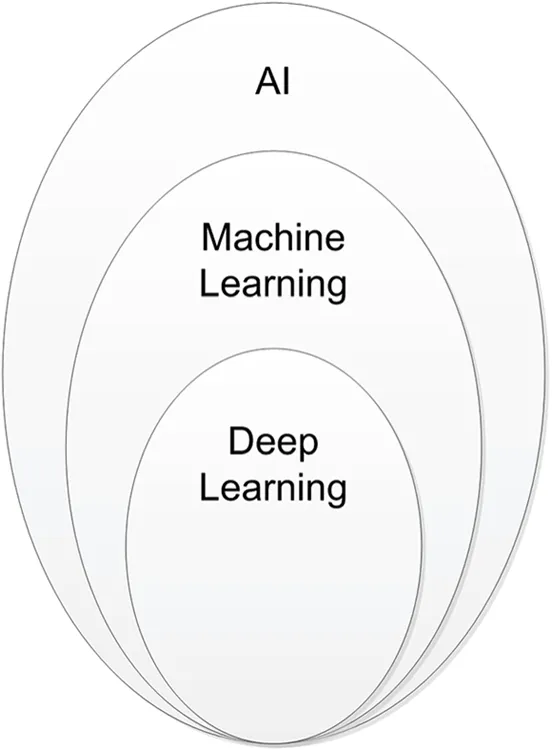

Defining What Deep Learning Means

Starting from Artificial Intelligence

- Learning: Having the ability to obtain and process new information.

- Reasoning: Being able to manipulate information in various ways.

- Understanding: Considering the result of information manipulation.

- Grasping truths: Determining the validity of the manipulated information.

- Seeing relationships: Divining how validated data interacts with other data.

- Considering meanings: Applying truths to particular situations in a manner consistent with their relationship.

- Separating fact from belief: Determining whether the data is adequately supported by provable sources that can be demonstrated to be consistently valid.

1. Set a goal based on needs or wants.

2. Assess the value of any currently known information in support of the goal.

3. Gather additional information that could support the goal.

4. Manipulate the data such that it achieves a form consistent with existing information.

5. Define the relationships and truth values between existing and new information.

6. Determine whether the goal is achieved.

7. Modify the goal in light of the new data and its effect on the probability of success.

8. Repeat Steps 2 through 7 as needed until the goal is achieved (found true) or the possibilities for achieving it are exhausted (found false).
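The eight steps above describe an iterative loop. As a rough illustration only (this is not code from the book), the Python sketch below casts a toy problem, finding a hidden integer in a range, as that loop; the strategy that falls out is an ordinary binary search. The function `pursue_goal` and the `ask` oracle are hypothetical names chosen for this example, and the mapping of code lines to steps is approximate.

```python
from typing import Callable, Optional


def pursue_goal(lo: int, hi: int, ask: Callable[[int], int]) -> Optional[int]:
    """Hypothetical toy version of the goal-seeking loop (not from the book).

    ask(guess) is an assumed information source that returns 0 when the guess
    is correct, -1 when the hidden value is lower, and 1 when it is higher.
    """
    # Step 1: the goal is to identify a hidden integer somewhere in [lo, hi].
    # Step 8: repeat while candidates remain; an empty range means the
    # possibilities are exhausted and the goal is found false.
    while lo <= hi:
        # Step 2: current knowledge is simply the remaining candidate range.
        # Step 3: gather new information by probing the middle of that range.
        guess = (lo + hi) // 2
        answer = ask(guess)
        # Step 6: check whether the goal is achieved (found true).
        if answer == 0:
            return guess
        # Steps 4, 5, and 7: turn the answer into a new bound that is consistent
        # with what is already known, which narrows the goal for the next round.
        if answer < 0:
            hi = guess - 1
        else:
            lo = guess + 1
    return None


# Usage: a hidden value of 37 and an oracle that compares it with each guess.
hidden = 37


def oracle(guess: int) -> int:
    # -1 if the hidden value is lower than the guess, 1 if higher, 0 if equal
    return (hidden > guess) - (hidden < guess)


print(pursue_goal(0, 100, oracle))   # → 37
print(pursue_goal(50, 100, oracle))  # → None (37 lies outside the range)
```

Halving the candidate range each round is just one simple way to carry out Step 7; the loop ends either with the value (goal found true) or with an empty range (possibilities exhausted, goal found false).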

Considering the role of AI

- Acting humanly: When a computer acts like a human, it best reflects the Turing Test, in which the computer succeeds when it isn't possible to differentiate between the computer and a human (see http://www.turing.org.uk/scrapbook/test.html for details). This category also reflects what the media would have you believe AI is all about. You see it employed for technologies such as natural language processing, knowledge representation, automated reasoning, and machine learning (all four of which must be present to pass the test). The original Turing Test didn’t include any physical contact. The newer Total Turing Test does include physical contact in the form of perceptual ability interrogation, which means that the computer must also employ both computer vision and robotics to succeed. Modern techniques include the idea of achieving the goal rather than mimicking humans completely. For example, the Wright brothers didn’t succeed in creating an airplane by precisely copying the flight of birds; rather, the birds provided ideas that led to aerodynamics, which in turn eventually led to human flight. The goal is to fly. Both birds and humans achieve this goal, but they use different approaches.

- Thinking humanly: When a computer thinks as a human, it performs tasks that require intelligence (as contrasted ...

Table of contents

- Cover

- Table of Contents

- Introduction

- Part 1: Discovering Deep Learning

- Part 2: Considering Deep Learning Basics

- Part 3: Interacting with Deep Learning

- Part 4: The Part of Tens

- Index

- About the Authors

- Advertisement Page

- Connect with Dummies

- End User License Agreement
