- English

- ePUB (mobile friendly)

- Available on iOS & Android

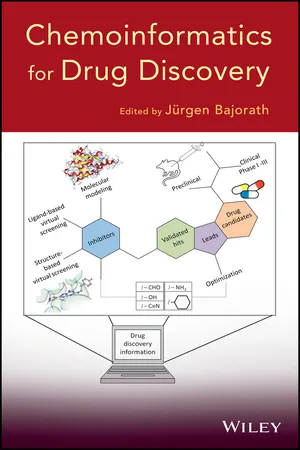

Chemoinformatics for Drug Discovery

About this book

Chemoinformatics strategies to improve drug discovery results

With contributions from leading researchers in academia and the pharmaceutical industry as well as experts from the software industry, this book explains how chemoinformatics enhances drug discovery and pharmaceutical research efforts, describing what works and what doesn't. Strong emphasis is put on tested and proven practical applications, with plenty of case studies detailing the development and implementation of chemoinformatics methods to support successful drug discovery efforts. Many of these case studies depict groundbreaking collaborations between academia and the pharmaceutical industry.

Chemoinformatics for Drug Discovery is logically organized, offering readers a solid base in methods and models and advancing to drug discovery applications and the design of chemoinformatics infrastructures. The book features 15 chapters, including:

- What are our models really telling us? A practical tutorial on avoiding common mistakes when building predictive models

- Exploration of structure-activity relationships and transfer of key elements in lead optimization

- Collaborations between academia and pharma

- Applications of chemoinformatics in pharmaceutical research—experiences at large international pharmaceutical companies

- Lessons learned from 30 years of developing successful integrated chemoinformatic systems

Throughout the book, the authors present chemoinformatics strategies and methods that have been proven to work in pharmaceutical research, offering insights culled from their own investigations. Each chapter is extensively referenced with citations to original research reports and reviews.

Integrating chemistry, computer science, and drug discovery, Chemoinformatics for Drug Discovery encapsulates the field as it stands today and opens the door to further advances.

CHAPTER 1

WHAT ARE OUR MODELS REALLY TELLING US? A PRACTICAL TUTORIAL ON AVOIDING COMMON MISTAKES WHEN BUILDING PREDICTIVE MODELS

1.1 INTRODUCTION

- Physical properties such as aqueous solubility or octanol/water partition coefficients [2–4]

- Off-target activities such as CYP or hERG inhibition [5–7]

- Binding geometry and affinity of small molecules in protein targets [8]

- How does the dynamic range of the data being modeled impact the apparent performance of the model?

- How does experimental error impact the apparent predictivity of a model?

- How can we determine whether a model is applicable to a new dataset?

- How should we compare the performance of regression models?
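The first two questions can be illustrated with a small simulation. The sketch below is not taken from the book's scripts; the function names, the mean of -4.0, and the standard deviations are all hypothetical values chosen for illustration. It draws "true" values, adds independent Gaussian measurement error to two simulated replicate runs, and reports the Pearson correlation between the runs. Under these assumptions the correlation should fall as the spread of the true values shrinks relative to the error, which suggests why a model trained on a narrow-range dataset can look worse than the same model on a wide-range one.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def replicate_correlation(true_sd, error_sd, n=2000, seed=0):
    """Correlation between two simulated noisy measurements of the same values.

    true_sd  -- spread (dynamic range) of the underlying values (assumed)
    error_sd -- standard deviation of the experimental error (assumed)
    """
    rng = random.Random(seed)
    true_values = [rng.gauss(-4.0, true_sd) for _ in range(n)]
    run_a = [t + rng.gauss(0.0, error_sd) for t in true_values]
    run_b = [t + rng.gauss(0.0, error_sd) for t in true_values]
    return pearson_r(run_a, run_b)


# Same experimental error, different dynamic range.
wide = replicate_correlation(true_sd=2.0, error_sd=0.5)
narrow = replicate_correlation(true_sd=0.5, error_sd=0.5)
print(f"wide range:   r = {wide:.2f}")
print(f"narrow range: r = {narrow:.2f}")
```

Because even a repeat of the experiment does not correlate perfectly with itself, the replicate correlation gives a rough ceiling on what any model of the same data could be expected to achieve.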

python script_name.py (Unix and OS X)

python.exe script_name.py (Windows)

setwd("directory_path")

source("script.R")

source("install_libraries.R")

1.2 PRELIMINARIES

- Temperature at which the solubility measurement is performed

- Purity of the compound

- Crystal form—different polymorphs of the same compound can have vastly different solubilities.

1.3 DATASETS

LogS = log10((solubility in µg/ml)/(1000.0 * MW))
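The conversion above can be sketched in Python as follows. The function name `log_s` and its argument names are hypothetical, not taken from the book's scripts. The factor of 1000.0 converts µg/ml (equivalently mg/L) to g/L, and dividing by the molecular weight in g/mol gives mol/L.

```python
import math


def log_s(solubility_ug_per_ml: float, mol_weight: float) -> float:
    """Return log10 of the molar solubility (mol/L).

    solubility_ug_per_ml -- measured solubility in µg/ml
    mol_weight           -- molecular weight in g/mol
    """
    if solubility_ug_per_ml <= 0 or mol_weight <= 0:
        raise ValueError("solubility and molecular weight must be positive")
    return math.log10(solubility_ug_per_ml / (1000.0 * mol_weight))


# Example: a compound with MW 250 g/mol and a solubility of 100 µg/ml
# has a molar solubility of 4e-4 mol/L.
print(round(log_s(100.0, 250.0), 2))  # -3.4
```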

1.3.1 Exploring Datasets

Table of contents

- COVER

- TITLE PAGE

- COPYRIGHT PAGE

- PREFACE

- CONTRIBUTORS

- CHAPTER 1: WHAT ARE OUR MODELS REALLY TELLING US? A PRACTICAL TUTORIAL ON AVOIDING COMMON MISTAKES WHEN BUILDING PREDICTIVE MODELS

- CHAPTER 2: THE CHALLENGE OF CREATIVITY IN DRUG DESIGN

- CHAPTER 3: A ROUGH SET THEORY APPROACH TO THE ANALYSIS OF GENE EXPRESSION PROFILES

- CHAPTER 4: BIMODAL PARTIAL LEAST-SQUARES APPROACH AND ITS APPLICATION TO CHEMOGENOMICS STUDIES FOR MOLECULAR DESIGN

- CHAPTER 5: STABILITY IN MOLECULAR FINGERPRINT COMPARISON

- CHAPTER 6: CRITICAL ASSESSMENT OF VIRTUAL SCREENING FOR HIT IDENTIFICATION

- CHAPTER 7: CHEMOMETRIC APPLICATIONS OF NAÏVE BAYESIAN MODELS IN DRUG DISCOVERY: BEYOND COMPOUND RANKING

- CHAPTER 8: CHEMOINFORMATICS IN LEAD OPTIMIZATION

- CHAPTER 9: USING CHEMOINFORMATICS TOOLS TO ANALYZE CHEMICAL ARRAYS IN LEAD OPTIMIZATION

- CHAPTER 10: EXPLORATION OF STRUCTURE–ACTIVITY RELATIONSHIPS (SARs) AND TRANSFER OF KEY ELEMENTS IN LEAD OPTIMIZATION

- CHAPTER 11: DEVELOPMENT AND APPLICATIONS OF GLOBAL ADMET MODELS: IN SILICO PREDICTION OF HUMAN MICROSOMAL LABILITY

- CHAPTER 12: CHEMOINFORMATICS AND BEYOND: MOVING FROM SIMPLE MODELS TO COMPLEX RELATIONSHIPS IN PHARMACEUTICAL COMPUTATIONAL TOXICOLOGY

- CHAPTER 13: APPLICATIONS OF CHEMINFORMATICS IN PHARMACEUTICAL RESEARCH: EXPERIENCES AT BOEHRINGER INGELHEIM IN GERMANY

- CHAPTER 14: LESSONS LEARNED FROM 30 YEARS OF DEVELOPING SUCCESSFUL INTEGRATED CHEMINFORMATIC SYSTEMS

- CHAPTER 15: MOLECULAR SIMILARITY ANALYSIS

- SUPPLEMENTAL IMAGES

- INDEX
