Deep Learning with TensorFlow 2 and Keras

Regression, ConvNets, GANs, RNNs, NLP, and more with TensorFlow 2 and the Keras API, 2nd Edition

Antonio Gulli, Amita Kapoor, Sujit Pal

- 646 pages

- English

- ePUB (mobile-friendly)

- Available on iOS and Android


About this book

Build machine and deep learning systems with the newly released TensorFlow 2 and Keras for the lab, production, and mobile devices

Key Features

- Introduces and then uses TensorFlow 2 and Keras right from the start

- Teaches key machine and deep learning techniques

- Helps you understand the fundamentals of deep learning and machine learning through clear explanations and extensive code samples

Book Description

Deep Learning with TensorFlow 2 and Keras, Second Edition teaches neural networks and deep learning techniques alongside TensorFlow (TF) and Keras. You'll learn how to write deep learning applications in the most powerful, popular, and scalable machine learning stack available.

TensorFlow is the machine learning library of choice for professional applications, while Keras offers a simple and powerful Python API for accessing TensorFlow. TensorFlow 2 provides full Keras integration, making advanced machine learning easier and more convenient than ever before.

This book also introduces neural networks with TensorFlow, runs through the main applications (regression, ConvNets (CNNs), GANs, RNNs, NLP), covers two working example apps, and then dives into TF in production, TF mobile, and using TensorFlow with AutoML.

What you will learn

- Build machine learning and deep learning systems with TensorFlow 2 and the Keras API

- Use regression analysis, one of the most popular approaches to machine learning

- Understand ConvNets (convolutional neural networks) and how they are essential for deep learning systems such as image classifiers

- Use GANs (generative adversarial networks) to create new data that fits with existing patterns

- Discover RNNs (recurrent neural networks) that can process sequences of input intelligently, using one part of a sequence to correctly interpret another

- Apply deep learning to natural human language and interpret natural language texts to produce an appropriate response

- Train your models in the cloud and put TF to work in real environments

- Explore how Google tools can automate simple ML workflows without the need for complex modeling

Who this book is for

This book is for Python developers and data scientists who want to build machine learning and deep learning systems with TensorFlow. This book gives you the theory and practice required to use Keras, TensorFlow 2, and AutoML to build machine learning systems. Some knowledge of machine learning is expected.


Chapter 2: TensorFlow 1.x and 2.x

Understanding TensorFlow 1.x

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.Session is not in the TF 2.x top-level API)

message = tf.constant('Welcome to the exciting world of Deep Neural Networks!')
with tf.Session() as sess:
    print(sess.run(message).decode())
```

The first line imports tensorflow. The second line defines the message using tf.constant. The third line creates a Session() using with, and the fourth runs the session using run(). Note that run() returns the result as a "byte string." To remove the string quotes and the b (for byte) prefix, we use the decode() method.

TensorFlow 1.x computational graph program structure
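The byte-string detail in the hello-world program above can be seen in plain Python, without TensorFlow. This minimal sketch assumes, as the text says, that sess.run returns a bytes object for a string tensor. It uses a bytes literal in its place and shows what decode() (UTF-8 by default) does to it.

```python
# Stand-in (assumption) for what sess.run(message) returns for a string
# tensor in TF 1.x: a bytes object, which prints with a leading b and quotes.
raw = b'Welcome to the exciting world of Deep Neural Networks!'
print(raw)   # b'Welcome to the exciting world of Deep Neural Networks!'

# bytes.decode() converts it to an ordinary str (UTF-8 by default),
# so print shows the bare text without the b prefix or quotes.
text = raw.decode()
print(text)  # Welcome to the exciting world of Deep Neural Networks!
```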

Computational graphs

Execution of the graph

Why do we use graphs at all?

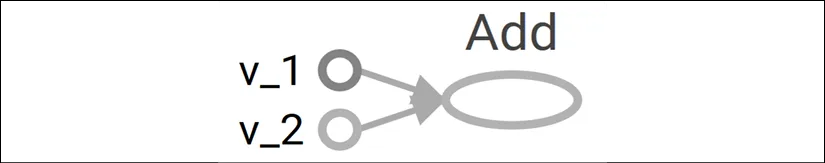

An example to start with

```python
import tensorflow as tf  # TensorFlow 1.x API

# Build the graph: two constant vectors and one addition op
v_1 = tf.constant([1, 2, 3, 4])
v_2 = tf.constant([2, 1, 5, 3])
v_add = tf.add(v_1, v_2)  # You can also write v_1 + v_2 instead

# Execute the graph; the with-block closes the session automatically
with tf.Session() as sess:
    print(sess.run(v_add))
```

```python
# Equivalent form without with: the session must be closed explicitly
sess = tf.Session()
print(sess.run(v_add))
sess.close()
```

Output:

```
[3 3 8 7]
```

… close(). … tf.device().

In our example, the computational graph consists of three nodes. v_1 and v_2 represent the two vectors, and v_add is the operation to be performed on them. To bring this graph to life, we first need to define a session object using tf.Session(). We named our session object sess. Next, we run it using the run method defined in the Session class:

```
run(fetches, feed_dict=None, options=None, run_metadata=None)
```

This evaluates the tensors in the fetches parameter. Our example has tensor v_add in fetches. The run me...
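The build-then-run split described above can be made concrete without installing TensorFlow 1.x. The following is a minimal pure-Python sketch. The classes Constant, Add, and MiniSession are hypothetical illustrations and are not TensorFlow APIs.

In the sketch, creating the nodes only records the graph, and no addition happens until run() is called on a fetch. MiniSession also implements the context-manager protocol, so a with-block closes the session automatically, mirroring the with tf.Session() form.

```python
class Constant:
    """Hypothetical graph node holding a fixed vector (stand-in for tf.constant)."""

    def __init__(self, values):
        self.inputs = []             # a constant has no upstream nodes
        self.values = list(values)

    def compute(self):
        return list(self.values)


class Add:
    """Hypothetical element-wise addition node (stand-in for tf.add).

    Creating it only records its two inputs; no arithmetic happens yet.
    """

    def __init__(self, a, b):
        self.inputs = [a, b]

    def compute(self, xs, ys):
        return [x + y for x, y in zip(xs, ys)]


class MiniSession:
    """Hypothetical stand-in for tf.Session: evaluates a graph on demand."""

    def __init__(self):
        self.closed = False

    def run(self, fetch):
        if self.closed:
            raise RuntimeError("Attempted to use a closed session.")
        return self._evaluate(fetch, {})

    def _evaluate(self, node, cache):
        # Evaluate each input first (depth-first), reusing results already
        # computed during this run, then apply the node's own operation.
        if node not in cache:
            args = [self._evaluate(n, cache) for n in node.inputs]
            cache[node] = node.compute(*args)
        return cache[node]

    def close(self):
        self.closed = True

    # Context-manager protocol: leaving a with-block closes the session.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False


# Build the graph: three nodes, nothing is computed here.
v_1 = Constant([1, 2, 3, 4])
v_2 = Constant([2, 1, 5, 3])
v_add = Add(v_1, v_2)

# Execute it inside a with-block; the session is closed automatically.
with MiniSession() as sess:
    print(sess.run(v_add))  # prints [3, 3, 8, 7]

# Equivalent explicit form: the session has to be closed by hand.
sess = MiniSession()
result = sess.run(v_add)
sess.close()
```

The sketch prints a Python list ([3, 3, 8, 7]) rather than the NumPy-style [3 3 8 7] shown above. Unlike a real TensorFlow session, it recomputes the whole graph on every run() call and has no notion of devices. It only illustrates that evaluation is deferred until a fetch is run.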