
SECTION 1

AUDIO-VISUAL SPEECH PROCESSING: IMPLICATIONS FOR THEORIES OF SPEECH PERCEPTION


CHAPTER ONE

The use of auditory and visual information during phonetic processing: implications for theories of speech perception

Kerry P. Green
University of Arizona, Tucson, USA

INTRODUCTION

It is well documented that visual information from a talker’s mouth and face plays a role in the perception and understanding of spoken language (for reviews, see Summerfield, 1987; Massaro, 1987). Much of this research has examined the benefit that visual information provides for the recognition of speech presented in noise to normal-hearing adults (e.g. Sumby & Pollack, 1954). A study by McGurk and MacDonald (1976) was the first to demonstrate that visual information also plays a role in the perception of clear, unambiguous speech tokens. They dubbed auditory syllables containing labial consonants, such as /ba/, onto a videotape of a talker saying different syllables, such as /ga/. When observers watched and listened to the videotape, they heard the talker saying a syllable like /da/ or /ða/. However, when asked to face away from the video monitor and simply listen, they heard the auditory tokens correctly. This type of stimulus has been termed a “fusion token”, because the response suggests a blending or fusing of the place information from the two modalities. A second type of token, consisting of an auditory /ga/ dubbed onto a visual articulation of /ba/, was often perceived as /bga/. Such stimuli are referred to as “combination tokens”, because the response reflects a combining of the information from the two modalities.

Since the findings of McGurk and MacDonald (1976; MacDonald & McGurk, 1978), the McGurk effect has been used extensively as a behavioural tool to examine the processes involved in integrating information from the auditory and visual modalities during speech perception. Studies using it have attempted to shed light on four different issues concerning the integration of auditory and visual phonetic information. The first issue concerns the various stimulus factors that influence the McGurk effect. One approach to understanding the integration processes is to examine the boundary conditions under which integration does and does not occur. For example, a comparison of this research with other auditory-visual phenomena (e.g. the ventriloquism effect) has led some researchers to suggest that the integration of auditory and visual speech information may be accomplished by a module specific to the processing of speech (Radeau, 1994).

A second issue concerns the level of phonetic processing at which the auditory and visual information are integrated to produce the effect, and whether the information from the two modalities is processed independently or interactively. Since the phonetic information is presented to the perceptual system in separate modalities, the auditory and visual information will be processed separately, at least in the early stages of processing (e.g. Roberts & Summerfield, 1981; Saldaña & Rosenblum, 1994). However, at some point during phonetic perception, the two sources of information are combined to influence the phonetic outcome. The main question addressed by many of these studies is whether the information is combined at an early or a late stage of phonetic processing. A related question is whether the two sources of information interact prior to their integration.

A third important issue concerns the effect of language or developmental experience on the McGurk effect. Currently, there is debate as to whether the ability to discriminate different phonetic categories reflects the initial capacity of the perceptual system at birth, or results from experience with a particular language combined with the operation of perceptual learning mechanisms (e.g. Pisoni, Lively & Logan, 1994). In a similar manner, researchers have questioned whether the ability to integrate phonetic information from the auditory and visual modalities requires experience watching the movement of talkers’ mouths while simultaneously hearing a speech utterance (Diehl & Kluender, 1989). Recent studies examining the McGurk effect in young infants and children (e.g. Rosenblum, Schmuckler & Johnson, 1997; Massaro et al., 1986), as well as cross-linguistically (Massaro et al., 1993; Sekiyama & Tohkura, 1993), are attempts to address this issue (for a review see Burnham, this volume).

The fourth issue that researchers have investigated is the neurological systems underlying the integration of auditory and visual speech information. Some studies have examined the integration of auditory and visual speech in patients with damage to either the right or left cerebral hemisphere (Campbell et al., 1990), as well as the hemispheric lateralization of the McGurk effect in normal adults (Baynes, Funnell & Fowler, 1994). Others have used sophisticated imaging techniques to observe brain activation under different unimodal and bimodal speech conditions (Sams et al., 1991; Campbell, 1996). The intent of such studies is to determine the cognitive processes that might be involved in processing and integrating phonetic information from the auditory and visual modalities. The results of the studies addressing these four issues have important implications for theories of speech perception, which must account for how and when the information from the two modalities is integrated during speech processing. Moreover, since the auditory signal by itself provides sufficient information for accurate speech perception under most conditions, a good theory should also explain why the two signals are combined at all.

In this chapter, I will describe studies that examine the level of processing at which the auditory and visual information are integrated, as well as the effect of developmental experience. In the second section, I review some of the research examining the effect of visual influences on phonetic processing of the auditory signal, while in the third section I examine the effect of developmental experience on the McGurk effect. The fourth section discusses the implications of the various studies with respect to theories of speech perception.

VISUAL INFLUENCES ON PHONETIC PERCEPTION

Although the McGurk effect demonstrates an influence of visual information on phonetic perception, it does not isolate the stage of phonetic processing at which the visual and auditory information are combined. The visual information could be combined after the auditory signal has been mapped onto a phonetic prototype, acting as a kind of visual bias on a phonetic decision already made on the basis of the auditory information. This would be a post-phonetic access approach to the integration of the auditory and visual information. A second possibility is that the visual and auditory information are mapped onto the prototypes at the same level of phonetic processing, referred to by some as a “late integration” approach (Robert-Ribes, Schwartz & Escudier, 1995). In one version of this approach, Massaro’s Fuzzy Logical Model of Perception (FLMP), the different dimensions in each modality are evaluated independently and then mapped onto the phonetic prototypes (Massaro, 1987). In this model, the auditory and visual dimensions do not interact until the information is mapped onto the prototypes. In an alternative version of late integration, the evaluation along certain dimensions is made contingent upon the evaluation along other dimensions (e.g. Crowther & Batchelder, 1995). This type of model, termed “conditionally independent” (CI), would allow early interaction between the auditory and visual information, even though the integration itself occurs late. Finally, a third possibility is that the visual and auditory information are combined before the information from either modality is mapped onto the phonetic prototypes, usually referred to as “early integration” (Robert-Ribes, Schwartz & Escudier, 1995; see Schwartz, Robert-Ribes & Escudier, this volume).¹
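The independence assumption in the FLMP can be made concrete. In Massaro’s formulation, each modality assigns every response alternative a degree of support between 0 and 1; the two support values for an alternative are multiplied, and the products are normalized across alternatives (the relative goodness rule), so neither modality’s evaluation depends on the other’s before this step. The Python sketch below is only an illustration under that assumption: the function name `flmp` and the support values for an auditory /ba/ paired with a visual /ga/ are hypothetical, not Massaro’s code or data.

```python
# Minimal sketch of the FLMP integration and decision stages.
# Assumption: the support values in [0, 1] below are hypothetical, for illustration only.


def flmp(auditory: dict[str, float], visual: dict[str, float]) -> dict[str, float]:
    """Return response probabilities from independent auditory and visual support.

    Each modality is evaluated separately; the two supports for an alternative
    are multiplied (integration) and the products are normalized so that they
    sum to 1 (relative goodness decision rule).
    """
    if auditory.keys() != visual.keys():
        raise ValueError("both modalities must rate the same response alternatives")
    products = {alt: auditory[alt] * visual[alt] for alt in auditory}
    total = sum(products.values())
    if total == 0:
        raise ValueError("no response alternative receives any support")
    return {alt: p / total for alt, p in products.items()}


# Hypothetical McGurk fusion token: auditory /ba/ dubbed onto visual /ga/.
auditory_ba = {"ba": 0.9, "da": 0.4, "ga": 0.1}  # the sound clearly favours /ba/
visual_ga = {"ba": 0.05, "da": 0.7, "ga": 0.8}   # no visible lip closure, so /ba/ is unlikely
probs = flmp(auditory_ba, visual_ga)
print(max(probs, key=probs.get))  # → da
```

With these invented values the fused alternative /da/ receives the highest probability, because it is the only alternative that neither modality strongly rules out. A conditionally independent model, as described above, would instead allow the evaluation along one dimension to depend on the evaluation along another before this multiplication.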

In an attempt to examine these different possibilities, my colleagues and I have been investigating how conflicting visual information influences the phonetic processing of the auditory speech signal. One of our earliest studies examined whether a change in the perceived place of articulation resulting from the McGurk effect influenced the processing of other dimensions of the auditory signal, such as voice onset time (VOT) (Green & Kuhl, 1989). Previous studies had shown that the voicing boundary along a VOT continuum ranging from a voiced to a voiceless stop consonant varies as a function of the consonant’s place of articulation. A bilabial continuum typically produces a boundary at a relatively short VOT value, while alveolar and velar continua produce boundaries at moderate and relatively long VOT values, respectively (Lisker & Abramson, 1970; Miller, 1977). Green and Kuhl (1989) investigated whether voicing was perceived with respect to the place of articulation resulting from the combined auditory and visual information in the McGurk effect, or solely with respect to the place value of the auditory token. An auditory /ibi–ipi/ continuum was dubbed onto a sequence of visual /igi/ tokens and presented to subjects in an auditory-visual (AV) and an auditory-only (AO) condition. Because of the McGurk effect, the AV tokens were perceived as ranging from /idi/ to /iti/.

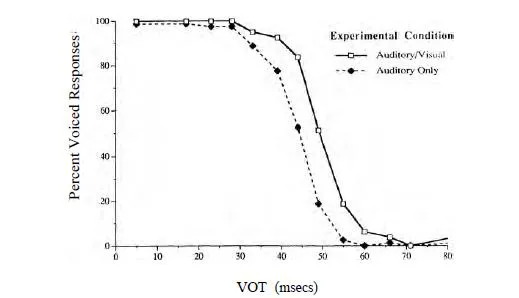

The results from this experiment (shown in Figure 1) revealed a significant shift in the percentage of voiced responses for the AV condition (e.g. /d/) relative to the AO (e.g. /b/) even though the auditory tokens were identical in the two conditions. This shift indicates that the McGurk effect can impact on the phonetic processing of other dimensions of the auditory signal. The fact that the voicing boundary is modified as a function of the combined auditory and visual place information demonstrates that the visual information does not serve to simply “bias” the phonetic decision made on the basis of the auditory information. One can imagine such bias occurring to change the perceived place decision of an auditory token, especially if that token were somewhat ambiguous with respect to place of articulation. But, to change the voicing decision as well would require a reanalysis of the voicing information. From this it can be concluded that the auditory and visual information are combined by the time a phonetic decision is made, ruling out the possibility of a post-phonetic integration of the auditory and visual information.

FIG. 1. Per cent of voiced responses for the /ibi-ipi/ continuum under the auditory-visual and auditory-only conditions
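Shifts like the one in Figure 1 are often summarized as a change in the category boundary, the VOT value at which listeners give 50% voiced responses. A simple way to estimate that point is linear interpolation between the two continuum steps that straddle 50%; many studies instead fit a logistic or probit function, so the sketch below is a simplification and not the analysis used by Green and Kuhl (1989). The function name `boundary_50` and all response percentages are hypothetical.

```python
# Minimal sketch: estimate a voicing boundary as the 50% crossover point of an
# identification function, using linear interpolation between adjacent steps.
# Assumption: responses fall from mostly voiced to mostly voiceless as VOT rises.


def boundary_50(vot_ms: list[float], pct_voiced: list[float]) -> float:
    """Return the VOT (ms) at which the percentage of voiced responses crosses 50."""
    points = list(zip(vot_ms, pct_voiced))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        # First pair of adjacent continuum steps that straddles 50% voiced.
        if y0 >= 50 >= y1 and y0 != y1:
            return x0 + (y0 - 50) * (x1 - x0) / (y0 - y1)
    raise ValueError("identification function never crosses 50% voiced")


# Hypothetical identification data (not the data plotted in Figure 1).
vot = [0, 10, 20, 30, 40, 50, 60]
auditory_only = [100, 95, 60, 20, 5, 0, 0]
auditory_visual = [100, 100, 90, 70, 30, 5, 0]

ao_boundary = boundary_50(vot, auditory_only)    # → 22.5
av_boundary = boundary_50(vot, auditory_visual)  # → 35.0
print(av_boundary - ao_boundary)                 # → 12.5
```

With these invented numbers the auditory-visual boundary lies 12.5 ms later on the continuum, the direction expected if voicing is judged relative to an alveolar rather than a bilabial place of articulation.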

Other studies have investigated whether the perception of speech is influenced by the surrounding speech context. Such influences, usually referred to as “context effects”, have often been interpreted as revealing an early interaction of complex cues during speech perception (Repp, 1982). Two kinds of context effects have been investigated with respect to the auditory-visual perception of speech. One kind consists of nonphonetic contextual information, such as changes in speaking rate or talker characteristics. These contexts influence the phonetic interpretation of speech without providing direct information about segmental identity (Miller, 1981; for a review, see Johnson, 1990). A second kind of context effect involves the influence of neighbouring segments on the phonetic interpretation of a target phoneme. For example, the vowel that follows a fricative influences how that fricative is interpreted (Mann & Repp, 1980). Such phonetic context effects are usually thought to reflect tacit knowledge of coarticulatory effects from speech production during phonetic perception (Repp, 1982), although other interpretations are possible (Diehl, Kluender & Walsh, 1990).

Two studies have examined whether nonphonetic contextual information in the visual modality, such as information about speaking rate or talker characteristics, influences phonetic processing. Green and Miller (1985) examined whether visual rate information influences the voicing boundary along a /bi–pi/ continuum. Previous research had shown the perception of voicing to be influenced by the speaking rate of the auditory token: fast tokens produced a shorter VOT boundary than slow tokens (Green & Miller, 1985; Summerfield, 1981). Green and Miller (1985) dubbed tokens from an auditory /bi–pi/ continuum spoken at a medium rate onto visual articulations of /bi/ and /pi/ spoken at fast and slow rates. They found that the VOT boundary shifted depending on whether the same auditory tokens were paired with the fast or the slow visual tokens. This finding demonstrates that visual rate information, like auditory rate information, plays a role in the perception of voicing.²

In another study, Strand and Johnson (1996) examined whether the auditory distinction between /s/ and /ʃ/ would be influenced by visual information about talker gender. Previous research had shown that the spectra of the frication noise corresponding to /s/ and /ʃ/ vary with gender characteristics: the overall spectrum is shifted towards higher frequencies for female relative to male talkers (Schwartz, 1968). More important, Mann and Repp (1980) found that the category boundary separating /s/ and /ʃ/ is influenced by the gender characteristics of the talker saying the following...