Containers in OpenStack

Pradeep Kumar Singh, Madhuri Kumari, Vinoth Kumar Selvaraj, Felipe Monteiro, Venkatesh Loganathan

- 176 pages

- English

- ePUB (mobile friendly)

- Available on iOS and Android

About this book

A practical book that helps readers understand how the container ecosystem and OpenStack work together.

About This Book

- Get acquainted with containerization in a private cloud

- Learn to effectively manage and secure your containers in OpenStack

- Practical use cases on container deployment and management using OpenStack components

Who This Book Is For

This book is aimed at cloud engineers, system administrators, or anyone on a production team who works on an OpenStack cloud. It acts as an end-to-end guide for anyone who wants to start using containerization on a private cloud. Some basic knowledge of Docker and Kubernetes will help.

What You Will Learn

- Understand the role of containers in the OpenStack ecosystem

- Learn about containers and the different types of container runtime tools

- Understand containerization in OpenStack with respect to the deployment framework, platform services, application deployment, and security

- Get skilled in using OpenStack to run your applications inside containers

- Explore the best practices of using containers in OpenStack

In Detail

Containers are one of the most talked-about technologies of recent times. They have become increasingly popular because they are changing the way we develop, deploy, and run software applications. OpenStack has gained tremendous traction and is used by many organizations across the globe. As containers gain in popularity and become more complex, OpenStack needs to provide the infrastructure resources they depend on, such as compute, network, and storage.

Containers in OpenStack answers the question: how can OpenStack keep ahead of the increasing challenges of container technology? You will start by getting familiar with container and OpenStack basics, so that you understand how the container ecosystem and OpenStack work together.

To cover networking, application service management, and deployment tools, the book has dedicated chapters for different OpenStack projects: Magnum, Zun, Kuryr, Murano, and Kolla.

Towards the end, you will be introduced to some best practices for securing your containers and COE on OpenStack, with an overview of using each OpenStack project for different use cases.

Style and approach

An end-to-end guide for anyone who wants to start using containerization on a private cloud.

Working with Container Orchestration Engines

- Introduction to COE

- Docker Swarm

- Apache Mesos

- Kubernetes

- Kubernetes installation

- Kubernetes hands-on

Introduction to COE

A container orchestration engine (COE) typically takes care of the following:

- Provisioning and managing hosts on which containers will run

- Pulling the images from the repository and instantiating the containers

- Managing the life cycle of containers

- Scheduling containers on hosts based on the host's resource availability

- Starting a new container when one dies

- Scaling the containers to match the application's demand

- Providing networking between containers so that they can access each other on different hosts

- Exposing these containers as services so that they can be accessed from outside

- Health monitoring of the containers

- Upgrading the containers
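One of the responsibilities listed above, scheduling containers on hosts based on resource availability, can be illustrated with a toy placement function. This is only a sketch under stated assumptions: the host names, the memory figures, and the `pick_host` helper are hypothetical, and real engines weigh many more factors than free memory.

```shell
#!/usr/bin/env bash
# Toy illustration of resource-based placement; not how any real COE is implemented.
# Each inventory line is "<host> <free_memory_mb>". Names and numbers are made up.

# pick_host REQUIRED_MB
# Reads the inventory on stdin and prints the host with the most free memory
# that can still fit the request. Prints nothing if no host is large enough.
pick_host() {
  awk -v need="$1" '
    $2 + 0 >= need + 0 && $2 + 0 > best + 0 { best = $2; host = $1 }
    END { if (host != "") print host }
  '
}

inventory='node-a 512
node-b 2048
node-c 1024'

# A container asking for 768 MB cannot fit on node-a; node-b has the most headroom.
printf '%s\n' "$inventory" | pick_host 768    # selects node-b
```

Picking the host with the most free memory spreads load across the cluster; a scheduler that packs containers tightly would instead choose the smallest host that still fits.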

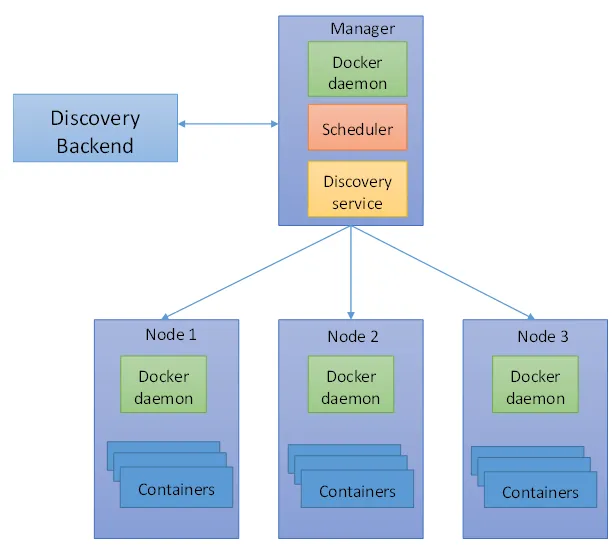

Docker Swarm

Docker Swarm components

Node

Manager node

Worker node

Tasks

Services

Discovery service

Scheduler

Swarm mode

$ docker swarm init

$ docker swarm join
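The two commands above are usually combined with a few more when bringing up a small swarm. The following is a minimal sketch, assuming one manager and one worker: the address in `MANAGER_IP`, the `<worker-token>` placeholder, the service name `web`, and the `run` dry-run wrapper are illustrative choices rather than values from the book. By default the script only prints the commands so the sequence can be read safely.

```shell
#!/usr/bin/env bash
# Sketch only: MANAGER_IP, "<worker-token>" and the service name "web" are
# illustrative assumptions. With DRY_RUN=1 (the default) commands are printed
# instead of executed.

MANAGER_IP="${MANAGER_IP:-192.0.2.10}"   # documentation-range address; replace it
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on the machine that should become the first manager node.
init_manager() {
  run docker swarm init --advertise-addr "$MANAGER_IP"
  run docker swarm join-token worker      # shows the join command for workers
}

# Run on each worker; $1 is the token printed by the manager.
join_worker() {
  run docker swarm join --token "$1" "$MANAGER_IP:2377"
}

# Run on a manager once the workers have joined.
deploy_service() {
  run docker node ls
  run docker service create --name web --replicas 3 nginx
  run docker service ls
}

# Dry-run walk-through of the whole sequence. On real hosts, call each
# function on the node named in its comment instead.
init_manager
join_worker "<worker-token>"
deploy_service
```

Setting `DRY_RUN=0` makes the functions execute the Docker commands for real, in which case each function should be called on the appropriate node rather than all on one machine.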