Architecture is the reaching out for the truth.

Introduction

This book is about building a next-generation IT infrastructure. To understand what that means, one needs to start by looking at what constitutes current-generation IT infrastructure. But how did we arrive at the current infrastructure? To get a sensible perspective on that, it helps to back up and look at where computing started.

In the early days of computing, there was a tight connection among users, computers, and applications. A user would typically have to be in the same building as the computer, if not in the very same room. There would be little or no ambiguity about which computer the application was running on. This description holds up when one thinks about the “early days” as referring to an ENIAC, an IBM 360, or an Apple II.

Since those days, enterprise IT organizations have increasingly used networking technologies to allow various kinds of separation. One set of technologies that goes by the name of storage networking allows computers and the storage underpinning applications to be separated from each other to improve operational efficiency and flexibility. Another set of technologies called local-area networking allows users to be separated from computing resources over small (campus-size) distances. Finally, another set of technologies called wide-area networking allows users to be separated from computing resources by many miles, perhaps even on the other side of the world. Sometimes practitioners refer to these kinds of networks or technologies by the shorthand SAN, LAN, and WAN (storage-area network, local-area network, wide-area network, respectively). The most familiar example of a WAN is the Internet, although it has enough unique characteristics that many people prefer to consider it a special case, distinct from a corporate WAN or a service provider backbone that might constitute one of its elements.

It is worth considering in a little more detail why these forms of separation are valuable. Networking delivers obvious value when it enables communication that is otherwise impossible, for example, when a person in location A must use a computer in location B, and it is not practical to move either the person or the computer to the other location. However, that kind of communication over distance is clearly not the motivation for storage networking, where typically all of the communicating entities are within a single data center. Instead, storage networking involves the decomposition of server/storage systems into aggregates of servers talking to aggregates of storage.

New efficiencies are possible with separation and consolidation. Here’s an example: suppose that an organization has five servers, each using only 20% of its disk. It is typically not economical to buy smaller disks, but it is economical to buy only two or three disks instead of five and share them among the five servers. In fact, the shared disks can be arranged into a redundant array of independent disks (RAID)¹ configuration that tolerates a single disk failure without affecting data availability. All five servers can stay up despite a disk failure, which was not possible with the disk-per-server configuration. Although the details vary, these kinds of cost savings and improvements in availability and performance are typical of what happens when resources can be aggregated and consolidated, which in turn typically requires some kind of separation from other kinds of resources.
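The arithmetic behind the five-server example can be checked with a short Python sketch. It assumes a hypothetical 1,000 GB disk and picks two concrete RAID levels that the text does not name: a two-disk mirror (RAID 1) and a three-disk single-parity array (RAID 5). Both survive the failure of any one disk.

```python
from dataclasses import dataclass

DISK_GB = 1000  # hypothetical size of one disk, in GB


@dataclass
class Server:
    name: str
    disk_gb: int
    used_percent: int

    @property
    def used_gb(self) -> int:
        return self.disk_gb * self.used_percent // 100


def raid1_usable_gb(disk_gb: int) -> int:
    """Two-disk mirror: every block is stored twice, so usable space is one disk."""
    return disk_gb


def raid5_usable_gb(num_disks: int, disk_gb: int) -> int:
    """Single-parity RAID 5: one disk's worth of space holds parity."""
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return (num_disks - 1) * disk_gb


servers = [Server(f"srv{i}", DISK_GB, 20) for i in range(1, 6)]
total_used = sum(s.used_gb for s in servers)  # 5 x 200 GB = 1000 GB of actual data

# Usable capacity of each layout, in GB.
layouts = {
    "disk per server (5 disks, no redundancy)": len(servers) * DISK_GB,
    "shared RAID 1 (2 disks, survives one failure)": raid1_usable_gb(DISK_GB),
    "shared RAID 5 (3 disks, survives one failure)": raid5_usable_gb(3, DISK_GB),
}

for layout, usable in layouts.items():
    print(f"{layout}: {total_used / usable:.0%} of usable space in use")
```

Under these assumptions the five servers hold 1,000 GB in total. The dedicated disks end up 20% full, the mirror 100% full with no room to grow, and the three-disk RAID 5 array 50% full. That spread is one way to read the text's "two or three disks": two covers today's data, and three leaves headroom.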

Although these forms of networking (SAN, LAN, WAN) grew up more or less independently and evolved separately, all forms of networking are broadly equivalent in offering the ability to transport bit patterns from some origin point to some destination point. It is perhaps not surprising that over time they have borrowed ideas from each other and started to overlap or converge. Vendors offering “converged” or “unified” data center networking are effectively blurring the line between LAN and SAN, while vendors offering “WAN optimization” or “virtual private LAN services” are encouraging reconsideration of the separation between LAN and WAN.

Independently of the evolution of networking technologies, IT organizations have increasingly used virtualization technologies to create a different kind of separation. Virtualization creates the illusion that the entire computer is available to execute a program, while the physical hardware may actually be shared by multiple such programs. Virtualization allows the logical server (executing program) to be separated cleanly from the physical server (computer hardware). Virtualization dramatically increases the flexibility of an IT organization by allowing multiple logical servers (possibly with radically incompatible operating systems) to share a single physical server or to migrate among different servers as their loads change.

Partly as a consequence of the rise of the Internet, and partly as a consequence of the rise of virtualization, there is yet a third kind of technology that is relevant for our analysis. Cloud services offer computing and storage accessed over Internet protocols in a style that is separated not only from the end-users but also from the enterprise data center.

A cloud service must be both elastic and automatic in its provisioning: an additional instance of the service can be arranged online without manual intervention. Naturally, this also requires automated billing for and termination of service; otherwise those areas would become operational bottlenecks for the service. The need for elastic, automatic provisioning, billing, and termination in turn demands the greatest possible agility and flexibility from the infrastructure.
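The three automated functions named above (provisioning, billing, and termination) can be illustrated with a minimal Python sketch. The `ElasticService` class, its integer hour clock, and the per-hour rate in cents are hypothetical simplifications; a real service would also need authentication, quotas, and persistent metering. The point is only that no step waits on an operator.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Optional


@dataclass
class Instance:
    instance_id: int
    started_at: int                   # hour on a hypothetical service clock
    stopped_at: Optional[int] = None  # None while the instance is running


@dataclass
class ElasticService:
    """Hypothetical self-service pool: no step below waits on an operator."""
    cents_per_hour: int
    instances: Dict[int, Instance] = field(default_factory=dict)
    _next_id: count = field(default_factory=lambda: count(1), init=False, repr=False)

    def provision(self, now: int) -> int:
        inst = Instance(next(self._next_id), started_at=now)
        self.instances[inst.instance_id] = inst
        return inst.instance_id

    def terminate(self, instance_id: int, now: int) -> None:
        inst = self.instances[instance_id]
        if inst.stopped_at is None:
            inst.stopped_at = now  # billing for this instance stops here

    def running(self) -> List[int]:
        return [i.instance_id for i in self.instances.values() if i.stopped_at is None]

    def scale_to(self, target: int, now: int) -> None:
        """Elasticity: provision or terminate until exactly `target` instances run."""
        target = max(target, 0)
        live = self.running()
        for _ in range(target - len(live)):
            self.provision(now)
        for instance_id in live[target:]:
            self.terminate(instance_id, now)

    def bill(self, now: int) -> int:
        """Metered charge in cents: hours each instance ran, up to termination or `now`."""
        hours = sum(
            (i.stopped_at if i.stopped_at is not None else now) - i.started_at
            for i in self.instances.values()
        )
        return hours * self.cents_per_hour


svc = ElasticService(cents_per_hour=10)
svc.scale_to(3, now=0)  # demand spike: three instances come online
svc.scale_to(1, now=2)  # demand drops: two are terminated automatically
print(svc.running(), svc.bill(now=5))  # [1] 90
```

Because termination stamps `stopped_at`, billing follows automatically from the same records used for provisioning. This is one simple way to keep the three functions from drifting apart.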

If we want to build a cloud service—whether public or private, application focused or infrastructure focused—we have to combine the best available ideas about scaling, separation of concerns, and consolidating shared functions. Presenting those ideas and how they come together to support a working cloud is the subject of this book.

There are two styles of organization for the information presented in the rest of the book. The first is a layered organization and the other is a modular organization. The next two sections explain these two perspectives.

Layers of function: the service-oriented infrastructure framework

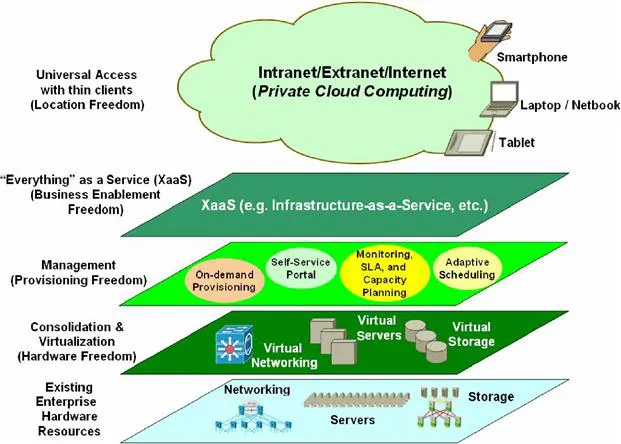

There are so many different problems to be solved in building a next-generation infrastructure that it’s useful to organize the approach into layers. The top layer supplies various kinds of fantastically powerful, incredibly flexible services to end-users. The bottom layer is a collection of off-the-shelf hardware of various kinds—servers, storage, networking routers and switches, and long-distance telecom services. The intervening layers use the relatively crude facilities of the lower layers to build a new set of more sophisticated facilities.

This particular layered model is called a service-oriented infrastructure (SOI) framework and is illustrated in Figure 1.1. The layer immediately above the physical hardware is concerned with virtualization: reducing or eliminating the limitations associated with using particular models of computers, particular sizes of disks, and so on. The layer above that is concerned with management and provisioning: matching the idealized resources to the demands placed on them. A layer above management and provisioning exports these automated capabilities in useful combinations through a variety of network interfaces, allowing the resources to be used equally well for high-level cloud software as a service (SaaS) and lower-level cloud infrastructure as a service (IaaS).

Figure 1.1 The SOI framework
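To make the layering concrete, the following Python sketch models each SOI layer as a class that uses only the layer directly below it. All names, the single "vCPU pool" resource, and the two-vCPU SaaS footprint are hypothetical. A real virtualization layer abstracts far more than CPU counts.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PhysicalServer:
    """Bottom layer: a specific piece of off-the-shelf hardware."""
    model: str
    cpus: int


class VirtualizationLayer:
    """Hides which model each CPU came from; exposes one pool of interchangeable vCPUs."""

    def __init__(self, hardware: List[PhysicalServer]) -> None:
        self.free_vcpus = sum(h.cpus for h in hardware)

    def take(self, vcpus: int) -> bool:
        if vcpus > self.free_vcpus:
            return False
        self.free_vcpus -= vcpus
        return True


class ProvisioningLayer:
    """Associates the idealized pool with incoming demands, automatically."""

    def __init__(self, virt: VirtualizationLayer) -> None:
        self.virt = virt
        self.allocations: Dict[str, int] = {}

    def provision(self, tenant: str, vcpus: int) -> bool:
        if not self.virt.take(vcpus):
            return False
        self.allocations[tenant] = self.allocations.get(tenant, 0) + vcpus
        return True


class ServiceLayer:
    """Exports the same capability as raw capacity (IaaS) or a packaged app (SaaS)."""

    APP_VCPUS = 2  # hypothetical footprint of one hosted application tenant

    def __init__(self, prov: ProvisioningLayer) -> None:
        self.prov = prov

    def iaas_request(self, tenant: str, vcpus: int) -> bool:
        return self.prov.provision(tenant, vcpus)

    def saas_signup(self, tenant: str) -> bool:
        return self.prov.provision(tenant, self.APP_VCPUS)


hardware = [PhysicalServer("vendor-a", 8), PhysicalServer("vendor-b", 16)]
service = ServiceLayer(ProvisioningLayer(VirtualizationLayer(hardware)))
print(service.iaas_request("acme", 10))  # True
print(service.saas_signup("globex"))     # True
print(service.iaas_request("acme", 20))  # False: only 12 vCPUs remain
```

The SaaS and IaaS entry points differ only at the top layer. Everything beneath them is shared, which mirrors how the framework lets the same resources serve both kinds of cloud service.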

The discussion of choices and trade-offs throughout the book refers back to these layers.

Blocks of function: the cloud modules

Throughout the book, the SOI structure stays in view, but the chapters are organized around a different paradigm: a cloud computer is treated as being made of modules roughly analogous to the central processing unit (CPU), RAM, bus, disk, and other familiar elements of a conventional computer. As illustrated in Figure 1.2, several modules make up this functional layout:

• Server module

• Storage module

• Fabric module

• WAN module

• End-user type I—branch office

• End-user type II—mobile

Figure 1.2 Cloud computing building blocks

Server module

The server module is analogous to the CPU of the cloud computer. The physical servers or server farm within this module form the core processors. It is “sandwiched” between a data center network and a storage area network.

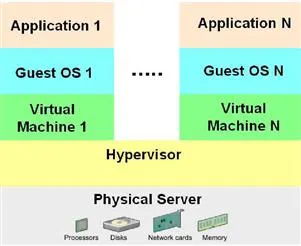

As previously noted, server virtualization supports multiple logical servers, or virtual machines (VMs), on a single physical server. A VM behaves like a standalone server, but it shares the hardware resources (e.g., processors, disks, network interface cards, and memory) of the physical server with the other VMs. A virtual machine monitor (VMM), often referred to as a hypervisor, makes this possible. The hypervisor presents each guest operating system (OS) with a VM and monitors the guest's execution. In this manner, different OSs, as well as multiple instances of the same OS, can share the same hardware resources on the physical server. Figure 1.3 illustrates the simplified architecture of VMs.

Figure 1.3 Simplified architecture of virtual machines
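The sharing described above can be sketched as a toy hypervisor in Python that carves one physical server into VMs running different guest OSs. The `Hypervisor` and `VM` classes are hypothetical. For simplicity the sketch uses strict CPU and memory reservations, whereas real hypervisors commonly time-slice CPUs and may overcommit memory.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class VM:
    name: str
    guest_os: str
    vcpus: int
    memory_gb: int


@dataclass
class Hypervisor:
    """Toy VMM that carves one physical server into VMs; it never overcommits."""
    physical_cpus: int
    physical_memory_gb: int
    guests: List[VM] = field(default_factory=list)

    def free(self) -> Tuple[int, int]:
        used_cpus = sum(vm.vcpus for vm in self.guests)
        used_mem = sum(vm.memory_gb for vm in self.guests)
        return self.physical_cpus - used_cpus, self.physical_memory_gb - used_mem

    def start(self, vm: VM) -> bool:
        """Admit the guest only if its VM fits in what the hardware has left."""
        cpus, mem = self.free()
        if vm.vcpus > cpus or vm.memory_gb > mem:
            return False
        self.guests.append(vm)
        return True


host = Hypervisor(physical_cpus=16, physical_memory_gb=64)
host.start(VM("web", "Linux", 4, 16))
host.start(VM("erp", "Windows Server", 8, 32))  # a different OS on the same hardware
host.start(VM("batch", "Linux", 4, 16))         # a second instance of the same OS
print(host.free())                              # (0, 0)
print(host.start(VM("extra", "Linux", 1, 1)))   # False: the host is full
```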

Server virtualization consolidates workloads onto fewer physical servers, reducing the number of units required in the data center while increasing the average utilization of each remaining server. For more details on server consolidation and virtualization, see Chapter 2, Next-Generation Data Center Architectures and Technologies.
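Consolidation is essentially a packing problem: fit many lightly loaded workloads onto as few hosts as possible. The chapter does not prescribe a placement algorithm. The Python sketch below uses first-fit decreasing, a common bin-packing heuristic, purely as an illustration. It considers CPU utilization only, and the server loads and the 80% headroom cap are hypothetical. Real placement also weighs memory, I/O, and affinity constraints.

```python
from typing import List


def consolidate(loads: List[int], host_capacity: int) -> List[List[int]]:
    """First-fit decreasing: largest workloads first, each onto the first host with room."""
    hosts: List[List[int]] = []
    for load in sorted(loads, reverse=True):
        for host in hosts:
            if sum(host) + load <= host_capacity:
                host.append(load)
                break
        else:  # no existing host had room, so bring another one into service
            hosts.append([load])
    return hosts


# CPU utilization (%) of eight hypothetical lightly loaded physical servers.
loads = [10, 15, 20, 25, 30, 35, 10, 15]
HEADROOM_CAP = 80  # hypothetical policy: keep each consolidated host at or below 80%

hosts = consolidate(loads, HEADROOM_CAP)
print(f"before: {len(loads)} servers, average {sum(loads) / len(loads):.0f}% busy")
print(f"after:  {len(hosts)} servers, average {sum(loads) / len(hosts):.0f}% busy")
```

With these made-up numbers, eight servers averaging 20% busy pack onto two hosts averaging 80%. Such a result would show both effects named above: fewer physical units and higher average utilization.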

Storage module

The storage module provides data storage for the cloud computer. It comprises the SAN and the storage subsystem that connects storage devices such as just a bunch of disks (JBOD), disk arrays, and RAID to the SAN. For more details on SAN-based virtualization, see Chapter 2.

SAN extension

SAN extension is required when one or more storage modules (see Figure 1.2) are located across the “cloud” (WAN module) for remote data replication, backup, and migration. SAN extension solutions include Wavelength-Division Multiplexing (WDM) n...