Data Engineering with Python

Work with massive datasets to design data models and automate data pipelines using Python

- 356 pages

- English



About this book

Build, monitor, and manage real-time data pipelines to create data engineering infrastructure efficiently using open-source Apache projects.

Key Features

- Become well-versed in data architectures, data preparation, and data optimization skills with the help of practical examples

- Design data models and learn how to extract, transform, and load (ETL) data using Python

- Schedule, automate, and monitor complex data pipelines in production

Book Description

Data engineering provides the foundation for data science and analytics, and forms an important part of all businesses. This book will help you to explore various tools and methods that are used for understanding the data engineering process using Python.

The book will show you how to tackle challenges commonly faced in different aspects of data engineering. You'll start with an introduction to the basics of data engineering, along with the technologies and frameworks required to build data pipelines to work with large datasets. You'll learn how to transform and clean data and perform analytics to get the most out of your data. As you advance, you'll discover how to work with big data of varying complexity and production databases, and build data pipelines. Using real-world examples, you'll build architectures on which you'll learn how to deploy data pipelines.

By the end of this Python book, you'll have gained a clear understanding of data modeling techniques, and will be able to confidently build data engineering pipelines for tracking data, running quality checks, and making necessary changes in production.

What you will learn

- Understand how data engineering supports data science workflows

- Discover how to extract data from files and databases and then clean, transform, and enrich it

- Configure processors for handling different file formats as well as both relational and NoSQL databases

- Find out how to implement a data pipeline and dashboard to visualize results

- Use staging and validation to check data before landing in the warehouse

- Build real-time pipelines with staging areas that perform validation and handle failures

- Get to grips with deploying pipelines in the production environment

Who this book is for

This book is for data analysts, ETL developers, and anyone looking to get started in data engineering, transition into the field, or refresh their knowledge of data engineering using Python. It will also be useful for students planning a career in data engineering and for IT professionals preparing for a transition. No previous knowledge of data engineering is required.



Section 1: Building Data Pipelines – Extract, Transform, and Load

- Chapter 1, What is Data Engineering?

- Chapter 2, Building Our Data Engineering Infrastructure

- Chapter 3, Reading and Writing Files

- Chapter 4, Working with Databases

- Chapter 5, Cleaning and Transforming Data

- Chapter 6, Building a 311 Data Pipeline

Chapter 1: What is Data Engineering?

- What data engineers do

- Data engineering versus data science

- Data engineering tools

What data engineers do

- How do we find out which locations sell the most widgets?

- How do we find out the peak times for selling widgets?

- How many users put widgets in their carts and remove them later?

- How do we find out the combinations of widgets that are sold together?

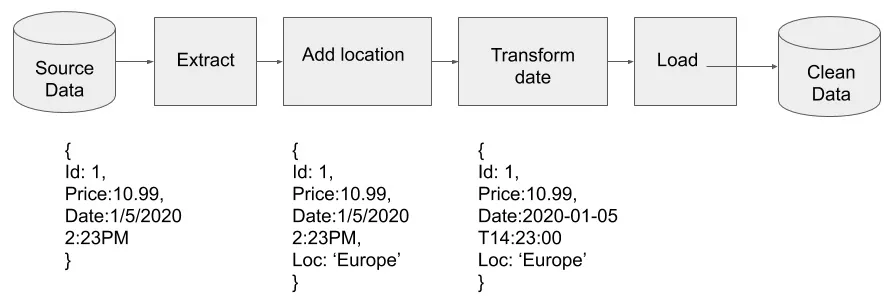

- Extract the data from each database.

- Add a field to tag the location for each transaction in the data.

- Transform the date from local time to ISO 8601.

- Load the data into the data warehouse.
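The four steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the book's own code: the `SOURCES` dictionary, the location names, the fixed UTC offsets, the `sales` table, and the in-memory SQLite database standing in for the warehouse are all assumptions made for the example.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

# Hypothetical regional sources: location -> (UTC offset in hours, rows).
# Each row is (transaction_id, local timestamp, widget, quantity).
SOURCES = {
    "denver": (-7, [(1, "2020-03-01 09:15:00", "blue widget", 2)]),
    "berlin": (1, [(1, "2020-03-01 17:40:00", "red widget", 1)]),
}


def extract(location):
    """Step 1: pull the raw rows from one location's database (stubbed here)."""
    _, rows = SOURCES[location]
    return [dict(zip(("id", "sold_at", "widget", "qty"), row)) for row in rows]


def transform(records, location):
    """Steps 2 and 3: tag the location and rewrite local time as ISO 8601."""
    offset_hours, _ = SOURCES[location]
    tz = timezone(timedelta(hours=offset_hours))
    cleaned = []
    for rec in records:
        local = datetime.strptime(rec["sold_at"], "%Y-%m-%d %H:%M:%S")
        cleaned.append({
            **rec,
            "location": location,
            "sold_at": local.replace(tzinfo=tz).isoformat(),
        })
    return cleaned


def load(conn, records):
    """Step 4: write the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(id INTEGER, location TEXT, sold_at TEXT, widget TEXT, qty INTEGER)"
    )
    conn.executemany(
        "INSERT INTO sales VALUES (:id, :location, :sold_at, :widget, :qty)",
        records,
    )
    conn.commit()


def run_pipeline(conn):
    """Run extract, transform, and load once for every known location."""
    for location in SOURCES:
        load(conn, transform(extract(location), location))


warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
run_pipeline(warehouse)
rows = warehouse.execute(
    "SELECT location, sold_at FROM sales ORDER BY location"
).fetchall()
```

Once every row carries a location tag and a timestamp with an explicit offset, a question such as which locations sell the most widgets reduces to a `GROUP BY location` query against the single `sales` table.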

Required skills and knowledge to be a data engineer

Table of contents

- Data Engineering with Python

- Why subscribe?

- Preface

- Section 1: Building Data Pipelines – Extract, Transform, and Load

- Chapter 1: What is Data Engineering?

- Chapter 2: Building Our Data Engineering Infrastructure

- Chapter 3: Reading and Writing Files

- Chapter 4: Working with Databases

- Chapter 5: Cleaning, Transforming, and Enriching Data

- Chapter 6: Building a 311 Data Pipeline

- Section 2: Deploying Data Pipelines in Production

- Chapter 7: Features of a Production Pipeline

- Chapter 8: Version Control with the NiFi Registry

- Chapter 9: Monitoring Data Pipelines

- Chapter 10: Deploying Data Pipelines

- Chapter 11: Building a Production Data Pipeline

- Section 3: Beyond Batch – Building Real-Time Data Pipelines

- Chapter 12: Building a Kafka Cluster

- Chapter 13: Streaming Data with Apache Kafka

- Chapter 14: Data Processing with Apache Spark

- Chapter 15: Real-Time Edge Data with MiNiFi, Kafka, and Spark

- Appendix

- Other Books You May Enjoy
