Applied Multivariate Statistics for the Social Sciences

Analyses with SAS and IBM’s SPSS, Sixth Edition

- 794 pages

- English

- ePUB (mobile friendly)



About this book

Now in its 6th edition, the authoritative textbook Applied Multivariate Statistics for the Social Sciences continues to provide advanced students with a practical and conceptual understanding of statistical procedures through examples and data sets from actual research studies. With the added expertise of co-author Keenan Pituch (University of Texas-Austin), this 6th edition retains many key features of the previous editions, including its breadth and depth of coverage, a review chapter on matrix algebra, applied coverage of MANOVA, and an emphasis on statistical power. In this new edition, the authors continue to provide practical guidelines for checking the data, assessing assumptions, and interpreting and reporting the results, so that students can analyze data from their own research confidently and professionally.

Features new to this edition include:

- NEW chapter on Logistic Regression (Ch. 11) that helps readers understand and use this very flexible and widely used procedure

- NEW chapter on Multivariate Multilevel Modeling (Ch. 14) that helps readers understand the benefits of this "newer" procedure and how it can be used in conventional and multilevel settings

- NEW Example Results Section write-ups that illustrate how results should be presented in research papers and journal articles

- NEW coverage of missing data (Ch. 1) to help students understand and address problems associated with incomplete data

- Completely re-written chapters on Exploratory Factor Analysis (Ch. 9), Hierarchical Linear Modeling (Ch. 13), and Structural Equation Modeling (Ch. 16) with increased focus on understanding models and interpreting results

- NEW analysis summaries, more syntax explanations, and fewer SPSS/SAS dialogue boxes, guiding students through data analysis in a more streamlined and direct way

- Updated syntax reflecting the newest versions of IBM SPSS (21) and SAS (9.3)

- A free online resources site at www.routledge.com/9780415836661 with data sets and syntax from the text, additional data sets, and instructor's resources (including PowerPoint lecture slides for select chapters, a conversion guide for 5th edition adopters, and answers to exercises)

Ideal for advanced graduate-level courses in education, psychology, and other social sciences in which multivariate statistics, advanced statistics, or quantitative techniques courses are taught, this book also appeals to practicing researchers as a valuable reference. Prerequisites include a course on factorial ANOVA and covariance; however, a working knowledge of matrix algebra is not assumed.


Chapter 1

Introduction

1.1 Introduction

- A researcher is comparing two methods of teaching second-grade reading. On a posttest the researcher measures the participants on the following basic elements related to reading: syllabication, blending, sound discrimination, reading rate, and comprehension.

- A social psychologist is testing the relative efficacy of three treatments on self-concept, and measures participants on academic, emotional, and social aspects of self-concept.

- Two different approaches to stress management are being compared. The investigator employs a couple of paper-and-pencil measures of anxiety (say, the State-Trait Scale and the Subjective Stress Scale) and some physiological measures.

- A researcher is comparing two types of counseling (Rogerian and Adlerian) on client satisfaction and client self-acceptance.

- Any worthwhile treatment will affect the participants in more than one way. Hence, the problem for the investigator is to determine in which specific ways the participants will be affected, and then find sensitive measurement techniques for those variables.

- Through the use of multiple criterion measures we can obtain a more complete and detailed description of the phenomenon under investigation, whether it is teaching method effectiveness, counselor effectiveness, diet effectiveness, stress management technique effectiveness, and so on.

- Treatments can be expensive to implement, while the cost of obtaining data on several dependent variables is relatively small and maximizes information gain.

The objectives of this chapter are as follows:

- To review some basic concepts (e.g., Type I error and power) and some issues associated with univariate analysis that are equally important in multivariate analysis.

- To discuss the importance of identifying outliers, that is, points that split off from the rest of the data, and deciding what to do about them. We give some examples to show the considerable impact outliers can have on the results in univariate analysis.

- To discuss the issue of missing data and describe some recommended missing data treatments.

- To give research examples of some of the multivariate analyses to be covered later in the text and to indicate how these analyses involve generalizations of what the student has previously learned.

- To briefly introduce the Statistical Analysis System (SAS) and the IBM Statistical Package for the Social Sciences (SPSS), whose outputs are discussed throughout the text.

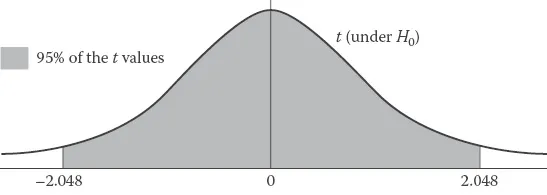

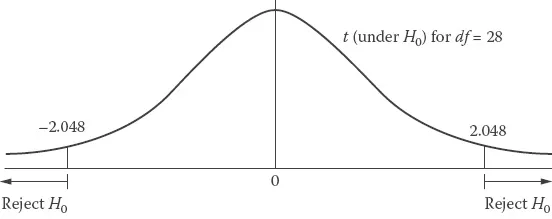

1.2 Type I Error, Type II Error, and Power

| α | β | 1 − β (power) |
|---|---|---|
| .10 | .37 | .63 |
| .05 | .52 | .48 |
| .01 | .78 | .22 |

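Lowering α from .10 to .01 reduces the risk of a Type I error, but β rises from .37 to .78 and power falls from .63 to .22. Power, the probability of rejecting the null hypothesis when it is false, depends on three factors:

- The α level set by the experimenter

- Sample size

- Effect size: how much of a difference the treatments make, or the extent to which the groups differ in the population on the dependent variable(s)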
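To make the three factors concrete, here is a minimal Python sketch that is not taken from the book. It assumes a two-sided comparison of two group means with equal group sizes and uses the normal (z) approximation rather than the exact noncentral t distribution, so it slightly overstates power for small samples. The function name `approx_power`, the 20 participants per group, and the effect size d = 0.5 are hypothetical choices for illustration; the numbers it prints will not reproduce the table above, whose underlying design is not given here.

```python
from statistics import NormalDist


def approx_power(alpha: float, n_per_group: int, d: float) -> float:
    """Approximate power of a two-sided, two-sample test of means.

    Assumes equal group sizes and a standardized effect size d.
    Uses the normal approximation, not the exact noncentral t.
    """
    z = NormalDist()                        # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)       # two-tailed critical value
    delta = d * (n_per_group / 2) ** 0.5    # noncentrality for equal n
    # Probability the test statistic falls in either rejection region
    return z.cdf(delta - z_crit) + z.cdf(-delta - z_crit)


# Hypothetical design: 20 participants per group, medium effect (d = 0.5)
for alpha in (0.10, 0.05, 0.01):
    power = approx_power(alpha, n_per_group=20, d=0.5)
    beta = 1 - power
    print(f"alpha={alpha:.2f}  beta={beta:.2f}  power={power:.2f}")
```

Holding α at .05 and calling `approx_power` with a larger `n_per_group` or a larger `d` shows the other two factors at work: power rises with either one. When `d` is 0 the function returns α itself, since the only way to reject a true null hypothesis is by a Type I error.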

Table of contents

- Cover

- Title

- Copyright

- Dedication

- Contents

- Preface

- 1. Introduction

- 2. Matrix Algebra

- 3. Multiple Regression for Prediction

- 4. Two-Group Multivariate Analysis of Variance

- 5. K-Group MANOVA: A Priori and Post Hoc Procedures

- 6. Assumptions in MANOVA

- 7. Factorial ANOVA and MANOVA

- 8. Analysis of Covariance

- 9. Exploratory Factor Analysis

- 10. Discriminant Analysis

- 11. Binary Logistic Regression

- 12. Repeated-Measures Analysis

- 13. Hierarchical Linear Modeling

- 14. Multivariate Multilevel Modeling

- 15. Canonical Correlation

- 16. Structural Equation Modeling

- Appendix A: Statistical Tables

- Appendix B: Obtaining Nonorthogonal Contrasts in Repeated Measures Designs

- Detailed Answers

- Index