Design of Intelligent Applications using Machine Learning and Deep Learning Techniques

- 448 pages

- English

About this book

Machine learning (ML) and deep learning (DL) algorithms are invaluable resources for Industry 4.0 and allied areas and are widely regarded as the future of computing. Neural networks, a subfield that recognizes and interprets patterns in data, help a machine carry out tasks in a manner similar to humans. The intelligent models developed using ML and DL are carefully designed and thoroughly investigated, yielding practical applications in fields such as health care, agriculture and security. These algorithms can be applied successfully only in the context of data computing and analysis. Today, ML and DL have created conditions for further developments in detection and prediction.

Beyond these domains, ML and DL are useful for analysing the social behaviour of humans. As the amount and variety of available data grew, it became necessary to build a means of processing that data, and this is where deep neural networks prove their importance. These networks can handle large amounts of data in fields such as finance and image analysis. This book also explores key applications in Industry 4.0, including:

· Fundamental models, issues and challenges in ML and DL.

· Comprehensive analyses and probabilistic approaches for ML and DL.

· Various applications in healthcare predictions such as mental health, cancer, thyroid disease, lifestyle disease and cardiac arrhythmia.

· Industry 4.0 applications such as facial recognition, feather classification, water stress prediction, deforestation control, tourism and social networking.

· Security aspects of Industry 4.0 applications, including remedial actions against possible attacks and prediction of associated risks.

· Information is presented in an accessible way for students, researchers and scientists, business innovators and entrepreneurs, and sustainable assessment and management professionals.

This book equips readers with knowledge of data analytics, ML and DL techniques for applications defined under the umbrella of Industry 4.0. It offers comprehensive coverage, promising ideas and outstanding research contributions, supporting further development of ML and DL approaches by applying intelligence in various applications.

1

Contents

1.1 Introduction

- Data acquisition

- Data ingestion

- Data quality and cleansing

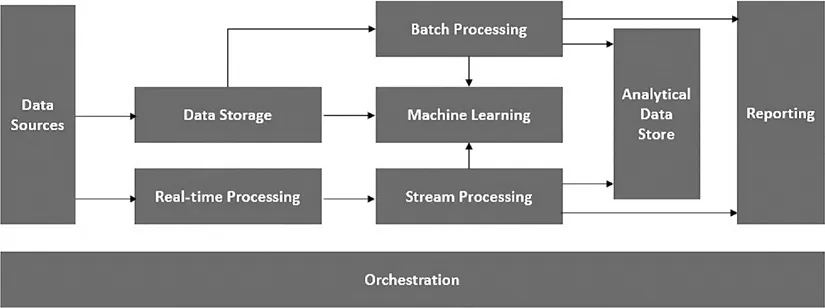

1.2 Reference Architecture

- Data sources

- Data storage

- Batch processing

- Real-time message ingestion

- Stream processing

- ML

- Analytical data store

- Analytics and reports

- Orchestration

1.2.1 Data Sources

- Application data stores, either relational databases (SQL Server, Oracle, MySQL, PostgreSQL and so on) or NoSQL databases (Cassandra, MongoDB and so on)

- Log files and flat files generated by various types of business applications, monitoring and logging software (Splunk, ELK and so on)

- IoT devices that produce data
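
The three kinds of source listed above could be pulled into one common record format along the following lines. This is only a minimal sketch, not the chapter's own method. It assumes an in-memory SQLite database standing in for a relational application store, a hypothetical `id=... temperature=...` log line layout, and JSON messages standing in for IoT device output. The table name `readings`, the field names, and every function name are illustrative assumptions.

```python
import json
import sqlite3


def read_relational(conn):
    """Yield rows from a relational store (hypothetical `readings` table)."""
    cursor = conn.execute("SELECT id, temperature FROM readings")
    for row_id, temperature in cursor:
        yield {"source": "relational", "id": row_id, "temperature": temperature}


def read_log_lines(lines):
    """Parse assumed 'id=<int> temperature=<float>' lines; skip malformed ones."""
    for line in lines:
        fields = dict(part.split("=", 1) for part in line.split() if "=" in part)
        try:
            yield {
                "source": "log",
                "id": int(fields["id"]),
                "temperature": float(fields["temperature"]),
            }
        except (KeyError, ValueError):
            continue  # drop lines that lack a field or hold a non-numeric value


def read_iot_messages(messages):
    """Decode assumed JSON payloads from devices; skip undecodable ones."""
    for raw in messages:
        try:
            payload = json.loads(raw)
            yield {
                "source": "iot",
                "id": int(payload["id"]),
                "temperature": float(payload["temperature"]),
            }
        except (KeyError, ValueError, TypeError):
            continue  # json.JSONDecodeError is a subclass of ValueError


def acquire(conn, log_lines, iot_messages):
    """Collect records from all three source types into one list."""
    records = []
    records.extend(read_relational(conn))
    records.extend(read_log_lines(log_lines))
    records.extend(read_iot_messages(iot_messages))
    return records


def demo():
    """Build tiny made-up inputs for each source type and acquire them."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (id INTEGER, temperature REAL)")
    conn.execute("INSERT INTO readings VALUES (1, 21.5)")
    logs = ["id=2 temperature=19.0", "corrupted line"]
    iot = ['{"id": 3, "temperature": 22.25}', "not json"]
    return acquire(conn, logs, iot)


if __name__ == "__main__":
    for record in demo():
        print(record)
```

Each reader is a generator, so a slow or large source is not loaded into memory until `acquire` consumes it. Malformed input is skipped rather than raised, which is one possible policy among several.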

1.2.2 Data Storage

1.2.3 Batch Processing

1.2.4 Real-Time Message Ingestion

1.2.5 Stream Processing

1.2.6 Machine Learning

1.2.7 Analytical Data Store

1.2.8 Analytics and ...

Table of contents

- Cover

- Half Title

- Title Page

- Copyright Page

- Table of Contents

- Preface

- Editors

- Contributors

- 1. Data Acquisition and Preparation for Artificial Intelligence and Machine Learning Applications

- 2. Fundamental Models in Machine Learning and Deep Learning

- 3. Research Aspects of Machine Learning: Issues, Challenges, and Future Scope

- 4. Comprehensive Analysis of Dimensionality Reduction Techniques for Machine Learning Applications

- 5. Application of Deep Learning in Counting WBCs, RBCs, and Blood Platelets Using Faster Region-Based Convolutional Neural Network

- 6. Application of Neural Network and Machine Learning in Mental Health Diagnosis

- 7. Application of Machine Learning in Cardiac Arrhythmia

- 8. Advances in Machine Learning and Deep Learning Approaches for Mammographic Breast Density Measurement for Breast Cancer Risk Prediction: An Overview

- 9. Applications of Machine Learning in Psychology and the Lifestyle Disease Diabetes Mellitus

- 10. Application of Machine Learning and Deep Learning in Thyroid Disease Prediction

- 11. Application of Machine Learning in Fake News Detection

- 12. Authentication of Broadcast News on Social Media Using Machine Learning

- 13. Application of Deep Learning in Facial Recognition

- 14. Application of Deep Learning in Deforestation Control and Prediction of Forest Fire Calamities

- 15. Application of Convolutional Neural Network in Feather Classifications

- 16. Application of Deep Learning Coupled with Thermal Imaging in Detecting Water Stress in Plants

- 17. Machine Learning Techniques to Classify Breast Cancer

- 18. Application of Deep Learning in Cartography Using UNet and Generative Adversarial Network

- 19. Evaluation of Intrusion Detection System with Rule-Based Technique to Detect Malicious Web Spiders Using Machine Learning

- 20. Application of Machine Learning to Improve Tourism Industry

- 21. Training Agents to Play 2D Games Using Reinforcement Learning

- 22. Analysis of the Effectiveness of the Non-Vaccine Countermeasures Taken by the Indian Government against COVID-19 and Forecasting Using Machine Learning and Deep Learning

- 23. Application of Deep Learning in Video Question Answering System

- 24. Implementation and Analysis of Machine Learning and Deep Learning Algorithms

- 25. Comprehensive Study of Failed Machine Learning Applications Using a Novel 3C Approach

- Index
